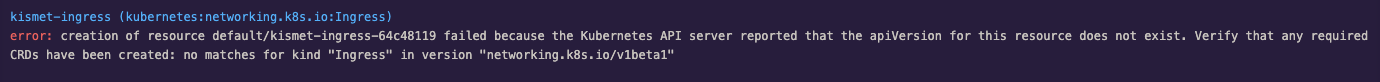

error: no matches for kind "CustomResourceDefinition" in version "apiextensions.k8s.io/v1beta1"

What happened?

I am using GitHub Actions to deploy the app. I removed all resources and tried to set them up again, but I get the error above.

Steps to reproduce

Below are my package.json and cluster files. Deploying the app with Pulumi using these files produces the same error.

package.json

    {
      "name": "aws-typescript",
      "devDependencies": {
        "@types/node": "latest",
        "typescript": "^3.7.5"
      },
      "dependencies": {
        "@pulumi/aws": "latest",
        "@pulumi/awsx": "latest",
        "@pulumi/docker": "latest",
        "@pulumi/eks": "latest",
        "@pulumi/kubernetes": "latest",
        "@pulumi/pulumi": "latest",
        "@pulumi/random": "latest",
        "@pulumi/tls": "latest"
      }
    }

    import * as awsx from '@pulumi/awsx';
    import * as eks from '@pulumi/eks';
    import * as k8s from '@pulumi/kubernetes';
    import * as aws from '@pulumi/aws';

    const clusterAdminRoleName = 'clusterAdminRole';

    const allowedUsersForK8SCluster: aws.iam.PolicyStatement[] = [];

    const clusterAdminRoleEKSFullAccessPolicyStatements: aws.iam.PolicyStatement[] = [
      {
        Effect: 'Allow',
        Action: ['eks:*'],
        Resource: '*',
      },
      {
        Effect: 'Allow',
        Action: 'iam:PassRole',
        Resource: '*',
        Condition: {
          StringEquals: {
            'iam:PassedToService': 'eks.amazonaws.com',
          },
        },
      },
      {
        Effect: 'Allow',
        Action: ['iam:List*'],
        Resource: '*',
      },
    ];

    const clusterAdminRole = new aws.iam.Role(clusterAdminRoleName, {
      inlinePolicies: [
        {
          name: 'EKS_Full_Access',
          policy: JSON.stringify({
            Version: '2012-10-17',
            Statement: [...clusterAdminRoleEKSFullAccessPolicyStatements],
          }),
        },
      ],
      assumeRolePolicy: {
        Version: '2012-10-17',
        Statement: [...allowedUsersForK8SCluster],
      },
      tags: {
        clusterAccess: `${clusterAdminRoleName}-user`,
      },
    });

    export const vpc = new awsx.ec2.Vpc(`app-vpc`, {
      numberOfAvailabilityZones: 2,
    });

    const eksClusterAdminRoleMapping: eks.RoleMapping = {
      groups: ['system:masters'],
      roleArn: clusterAdminRole.arn,
      username: 'pulumi:admin-user',
    };

    export const cluster = new eks.Cluster('k8-cluster', {
      name: 'k8-cluster',
      vpcId: vpc.id,
      publicSubnetIds: vpc.publicSubnetIds,
      privateSubnetIds: vpc.privateSubnetIds,
      instanceType: 't2.small',
      desiredCapacity: 2,
      minSize: 1,
      maxSize: 3,
      storageClasses: 'gp2',
      deployDashboard: false,
      roleMappings: [eksClusterAdminRoleMapping],
    });

    export const namespaceName = 'default';

    // Grant cluster admin access to all admins with k8s ClusterRole and ClusterRoleBinding
    const role = new k8s.rbac.v1.ClusterRole(
      'clusterAdminRole',
      {
        metadata: {
          name: 'clusterAdminRole',
          namespace: namespaceName,
        },
        rules: [
          {
            apiGroups: ['*'],
            resources: ['*'],
            verbs: ['*'],
          },
        ],
      },
      { provider: cluster.provider }
    );

    new k8s.rbac.v1.ClusterRoleBinding(
      'cluster-admin-binding',
      {
        metadata: {
          name: 'cluster-admin-binding',
        },
        subjects: [
          {
            kind: 'User',
            name: eksClusterAdminRoleMapping.username,
          },
        ],
        roleRef: {
          kind: 'ClusterRole',
          name: role.metadata.name,
          apiGroup: 'rbac.authorization.k8s.io',
        },
      },
      { provider: cluster.provider }
    );

    // Export the Cluster's Kubeconfig
    export const kubeconfig = cluster.kubeconfig;
    export const urn = cluster.urn;

Expected Behavior

I should be able to deploy my app successfully.

Actual Behavior

I get the error described in the What happened? section.

Versions used

    CLI
    Version      3.34.1
    Go Version   go1.18.3
    Go Compiler  gc

    Plugins
    NAME        VERSION
    aws         5.8.0
    docker      3.2.0
    eks         0.40.0
    kubernetes  3.19.3
    nodejs      unknown
    random      4.8.0
    tls         4.6.0

    Host
    OS       darwin
    Version  12.3.1
    Arch     arm64

    This project is written in nodejs: executable='/Users/myuser/.nvm/versions/node/v12.22.0/bin/node' version='v12.22.0'

    Current Stack: staging

    TYPE  URN
    (I removed resource information.)

    Found no pending operations associated with staging

    Backend
    Name           pulumi.com
    URL            https://app.pulumi.com/yasinntza
    User           yasinntza
    Organizations  yasinntza

    Dependencies:
    NAME                VERSION
    @pulumi/aws         5.8.0
    @pulumi/awsx        0.40.0
    @pulumi/docker      3.2.0
    @pulumi/eks         0.40.0
    @pulumi/kubernetes  3.19.3
    @pulumi/pulumi      3.34.1
    @pulumi/random      4.8.0
    @pulumi/tls         4.6.0
    @types/node         17.0.42
    typescript          3.9.10

    Pulumi locates its logs in /var/folders/yl/n1dpjgm50csfyc_p79q_jz040000gn/T/ by default

Additional context

No response

Contributing

Vote on this issue by adding a 👍 reaction. To contribute a fix for this issue, leave a comment (and link to your pull request, if you’ve opened one already).

Issue Analytics

- State:
- Created a year ago
- Reactions: 4
- Comments: 9 (2 by maintainers)

Top Results From Across the Web


- Issues - GitHub: no matches for kind "CustomResourceDefinition" in version ... version "apiextensions.k8s.io/v1beta1" unable to recognize "STDIN": no matches ...
- Unable to install CRDs in kubernetes kind - Stack Overflow: Firstly you could get the manifest and just change the customresourcedefinitions to use the new API version apiextensions.k8s.io/v1 and ...
- Getting Started - Entando Forum: No matches for kind "CustomResourceDefinition" in version "apiextensions.k8s.io/v1beta1" ... i'm trying to follow the instruction here dev.entando ...
- Versions in CustomResourceDefinitions - Kubernetes: Note: In apiextensions.k8s.io/v1beta1, there was a version field instead of versions. The version field is deprecated and optional, but if it...
- Installation fails during common-web-ui chart installation - IBM: If the CRD is not installed, continue with Resolving the problem. ... apiVersion: apiextensions.k8s.io/v1beta1 kind: CustomResourceDefinition metadata: ...


I have the same issue when upgrading an existing EKS cluster’s Pulumi dependencies after a Kubernetes upgrade to 1.22.

However, when running pulumi up in a CI/CD context, I still get the error reported by the original poster (no matches for kind “CustomResourceDefinition” in version “apiextensions.k8s.io/v1beta1”).

Starting from a fresh build agent, using Pulumi CLI 0.34.1 with TypeScript, both @pulumi/eks and its corresponding resource plugin are installed at 0.40.0, so the CNI template uses apiextensions.k8s.io/v1 instead of v1beta1. Yet the same error still occurs.
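The expectation above rests on one rule: a cluster running Kubernetes 1.22 or later no longer serves apiextensions.k8s.io/v1beta1, so any manifest that still declares that apiVersion fails with the "no matches for kind" error. The check depends only on a manifest's apiVersion and kind, as in this minimal sketch. It assumes a hypothetical unservedOn helper and a deliberately partial table of APIs removed in 1.22; the Kubernetes deprecated API migration guide is the authoritative list.

```typescript
// Partial, illustrative table of "apiVersion kind" pairs removed in
// Kubernetes 1.22. Not exhaustive; APIs removed in later releases are not listed.
const REMOVED_IN_1_22: ReadonlySet<string> = new Set([
  'apiextensions.k8s.io/v1beta1 CustomResourceDefinition',
  'networking.k8s.io/v1beta1 Ingress',
  'extensions/v1beta1 Ingress',
  'admissionregistration.k8s.io/v1beta1 ValidatingWebhookConfiguration',
  'admissionregistration.k8s.io/v1beta1 MutatingWebhookConfiguration',
]);

// Only the header fields needed for the lookup.
interface ManifestHeader {
  apiVersion: string;
  kind: string;
  metadata?: { name?: string };
}

// Hypothetical helper: returns the manifests that a 1.<minor> cluster would
// no longer serve, based only on the 1.22 removals in the table above.
function unservedOn(minor: number, manifests: ManifestHeader[]): ManifestHeader[] {
  if (minor < 22) return [];
  return manifests.filter((m) => REMOVED_IN_1_22.has(`${m.apiVersion} ${m.kind}`));
}
```

Because the lookup ignores everything but the header, it can run over parsed manifests before anything is applied to the cluster.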

Investigating this, I noticed that pulumi up installs the old version of pulumi-eks, 0.33.0, as well as other older resource plugins. I found that my state file contains a reference matching each of these installed versions, such as the following for eks: … I am looking for a cleaner solution than editing the state to change it to version 0.40.0.
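To see which plugin versions a stack's state still references, a minimal read-only sketch can list the provider resources in the JSON written by pulumi stack export (for example, pulumi stack export > state.json). It assumes that the export nests resources under deployment.resources, that provider resources have a type beginning with pulumi:providers:, and that the pinned plugin version is stored in inputs.version; the interface and function names are hypothetical. It does not modify the state.

```typescript
// Assumed, simplified shape of `pulumi stack export` output; only the
// fields this sketch reads are declared.
interface StackExport {
  deployment: {
    resources?: Array<{ urn: string; type: string; inputs?: Record<string, unknown> }>;
  };
}

interface ProviderPin {
  pkg: string; // package name taken from the type suffix, e.g. "eks"
  version: string; // inputs.version, or "unknown" if it is not a string
  urn: string;
}

const PROVIDER_PREFIX = 'pulumi:providers:';

// Hypothetical helper: collects every provider resource and the plugin
// version recorded for it, in the order they appear in the export.
function providerVersions(exported: StackExport): ProviderPin[] {
  const pins: ProviderPin[] = [];
  for (const r of exported.deployment.resources ?? []) {
    if (!r.type.startsWith(PROVIDER_PREFIX)) continue;
    const v = r.inputs?.['version'];
    pins.push({
      pkg: r.type.slice(PROVIDER_PREFIX.length),
      version: typeof v === 'string' ? v : 'unknown',
      urn: r.urn,
    });
  }
  return pins;
}
```

An eks entry older than the version in package.json would be consistent with the commenter's observation that pulumi up downloads the older plugin.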

@Dysproz you need to use at least v0.39.0 of pulumi-eks. EKS has switched to v1.22 as the default Kubernetes version, which no longer serves CRDs from the v1beta1 endpoint; see https://github.com/pulumi/pulumi-eks/issues/720#issuecomment-1155351469.
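The "at least v0.39.0" requirement is a numeric version comparison. A minimal sketch, assuming plain major.minor.patch strings with an optional leading v; the helper names are hypothetical, and pre-release or build suffixes are ignored rather than ordered.

```typescript
// Parses "0.40.0" or "v0.40.0" into [major, minor, patch].
// Anything after the patch number (e.g. "-alpha") is ignored by this sketch.
function parseVersion(v: string): [number, number, number] {
  const m = /^v?(\d+)\.(\d+)\.(\d+)/.exec(v.trim());
  if (!m) throw new Error(`not a semantic version: ${v}`);
  return [Number(m[1]), Number(m[2]), Number(m[3])];
}

// True if `installed` is equal to or newer than `minimum`.
function atLeast(installed: string, minimum: string): boolean {
  const a = parseVersion(installed);
  const b = parseVersion(minimum);
  for (let i = 0; i < 3; i++) {
    // The first differing component decides the ordering.
    if (a[i] !== b[i]) return a[i] > b[i];
  }
  return true;
}

// Minimum pulumi-eks version named in the maintainer's reply.
const MIN_PULUMI_EKS = '0.39.0';
```

With this check, the 0.33.0 plugin still referenced by the state fails while the 0.40.0 plugin passes. Comparing components as numbers matters: a plain string comparison would rank "0.9.0" above "0.39.0".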

We moved to using v1 in https://github.com/pulumi/pulumi-eks/pull/693 which fixes this problem.
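The maintainer's fix moved pulumi-eks to apiextensions.k8s.io/v1. For CRD manifests you apply yourself, the equivalent change is mostly mechanical: as the Kubernetes documentation in the search results notes, v1beta1 used a single version field where v1 uses a versions list, and v1 also expects the OpenAPI schema under each version. A minimal sketch of that rewrite on a parsed JSON manifest, assuming a hypothetical toV1 helper and simplified types that declare only the fields it touches:

```typescript
// Simplified types: only the CRD fields this sketch reads or writes are declared.
interface CrdVersionV1 {
  name: string;
  served: boolean;
  storage: boolean;
  schema?: { openAPIV3Schema: unknown };
}

interface CrdManifest {
  apiVersion: string;
  kind: string;
  metadata: { name: string };
  spec: {
    group: string;
    version?: string; // v1beta1 only: single top-level version
    versions?: Array<Partial<CrdVersionV1> & { name: string }>;
    validation?: { openAPIV3Schema: unknown }; // v1beta1 only: shared schema
    [key: string]: unknown;
  };
}

// Hypothetical helper: rewrites a v1beta1 CRD to apiextensions.k8s.io/v1 by
// moving `version` and `validation` into the per-version `versions` list.
// Non-v1beta1 manifests are returned unchanged. It does not check that the
// schema is structural, and it leaves other top-level v1beta1 fields
// (such as subresources) where they are.
function toV1(crd: CrdManifest): CrdManifest {
  if (crd.apiVersion !== 'apiextensions.k8s.io/v1beta1') return crd;
  const { version, validation, versions, ...rest } = crd.spec;
  const source: Array<Partial<CrdVersionV1> & { name: string }> =
    versions ?? (version ? [{ name: version }] : []);
  const converted: CrdVersionV1[] = source.map((v, i) => ({
    name: v.name,
    served: v.served ?? true,
    // Fall back to marking the first version as the storage version.
    storage: v.storage ?? (i === 0),
    // A per-version schema wins; otherwise copy the shared v1beta1 one.
    schema: v.schema ?? (validation ? { openAPIV3Schema: validation.openAPIV3Schema } : undefined),
  }));
  return {
    ...crd,
    apiVersion: 'apiextensions.k8s.io/v1',
    spec: { ...rest, versions: converted },
  };
}
```

The output still needs review before applying it, since v1 requires a structural schema for every version and this sketch does not validate one.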