Task instance is scheduled repeatedly when the DAG file is very large

Apache Airflow version: 1.10.10

Kubernetes version (if you are using kubernetes) (use kubectl version):

Environment: VM

- Cloud provider or hardware configuration: 8core, 16GB

- OS (e.g. from /etc/os-release): CentOS Linux release 7.2.1511 (Core)

- Kernel (e.g. `uname -a`): 3.10.0-514.26.2.el7.x86_64

- Install tools:

- Others:

What happened:

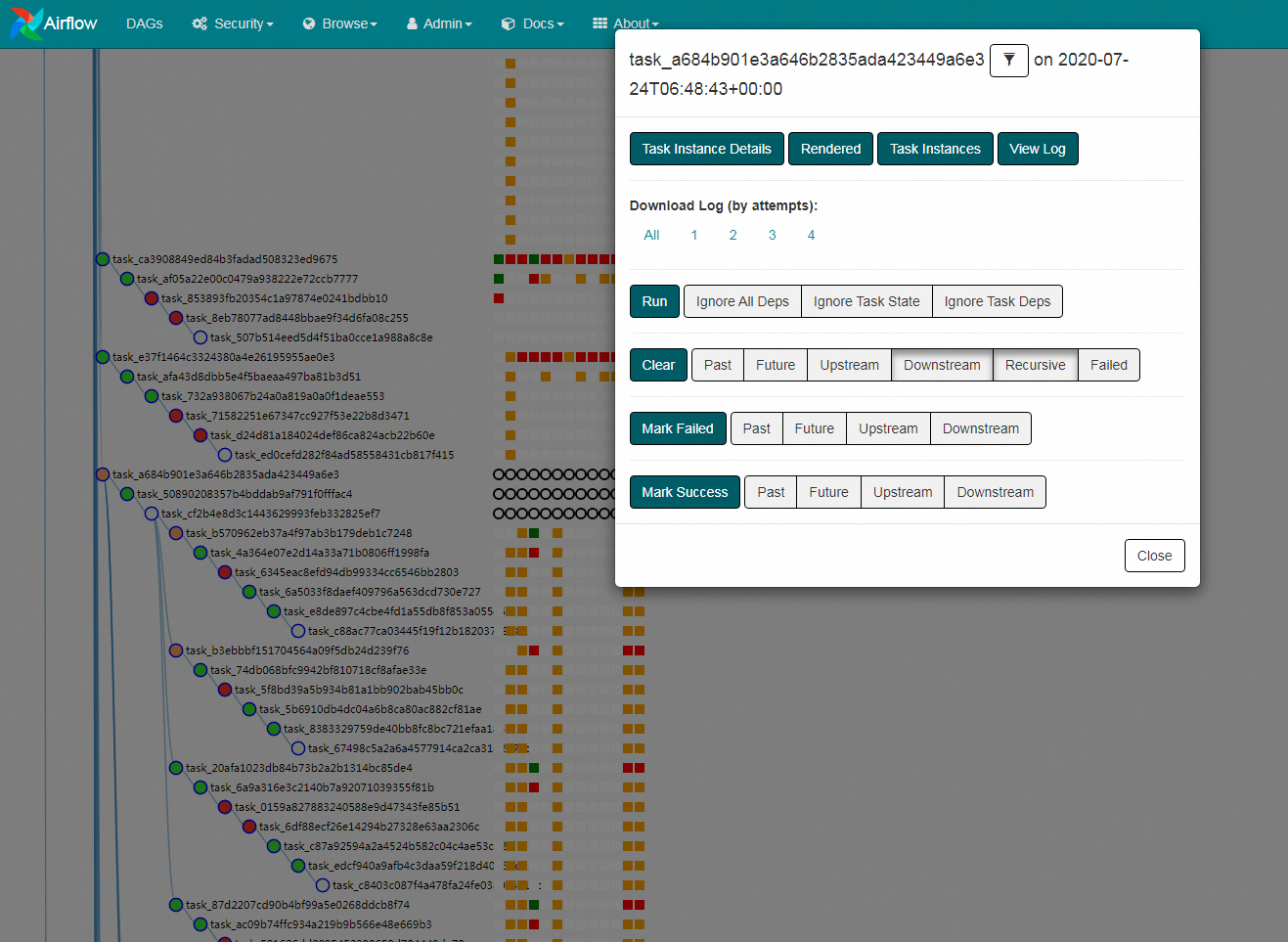

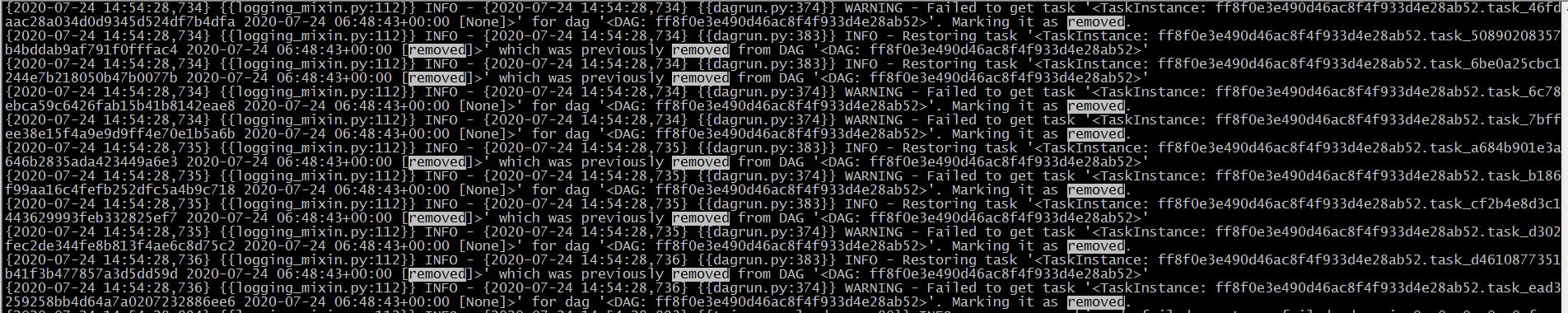

The DAG has 477 tasks. Some of the tasks were rescheduled shortly after reaching SUCCESS, over and over again, until I manually marked them as success. I found log lines like this one in `logs/scheduler/latest/ff8f0e3e490d46ac8f4f933d4e28ab52.log`:

```
[2020-07-24 14:54:28,736] {logging_mixin.py:112} INFO - [2020-07-24 14:54:28,736] {dagrun.py:374} WARNING - Failed to get task '<TaskInstance: ff8f0e3e490d46ac8f4f933d4e28ab52.task_ead3259258bb4d64a7a0207232886ee6 2020-07-24 06:48:43+00:00 [None]>' for dag '<DAG: ff8f0e3e490d46ac8f4f933d4e28ab52>'. Marking it as removed.
```
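A simplified way to see how a SUCCESS task can end up running again: the warning comes from the scheduler's integrity check (in `dagrun.py`), which compares each task instance of a DAG run with the tasks of the loaded DAG. The sketch below is a stdlib-only model written for this discussion, not Airflow's code; `ToyDag`, `ToyTaskInstance`, `TaskNotFound` and `verify_integrity_sketch` are hypothetical names. It assumes that a task the lookup cannot find is marked `removed`, and that a removed task which is found again on a later pass is reset to no state, which makes it eligible to be scheduled again.

```python
from dataclasses import dataclass
from typing import List, Optional, Set

# Hypothetical stand-ins for Airflow's task states (simplified).
SUCCESS = "success"
REMOVED = "removed"


class TaskNotFound(Exception):
    """Stand-in for the AirflowException raised when a task id is unknown."""


@dataclass
class ToyDag:
    task_ids: Set[str]

    def get_task(self, task_id: str) -> str:
        # Like a DAG lookup by task id: fail when the id is not in this DAG.
        if task_id not in self.task_ids:
            raise TaskNotFound(task_id)
        return task_id


@dataclass
class ToyTaskInstance:
    task_id: str
    state: Optional[str]


def verify_integrity_sketch(dag: ToyDag, tis: List[ToyTaskInstance]) -> None:
    """Simplified removed/restored check; the real one has extra conditions."""
    for ti in tis:
        try:
            dag.get_task(ti.task_id)
        except TaskNotFound:
            if ti.state != REMOVED:
                # "Failed to get task ... Marking it as removed."
                ti.state = REMOVED
            continue
        if ti.state == REMOVED:
            # The task is visible again: clear its state so it gets scheduled.
            ti.state = None


# A finished task seen first by an incomplete DAG, then by the full DAG.
ti = ToyTaskInstance("task_a", SUCCESS)
verify_integrity_sketch(ToyDag({"task_b"}), [ti])            # ti.state == "removed"
verify_integrity_sketch(ToyDag({"task_a", "task_b"}), [ti])  # ti.state is None
```

If the DAG the scheduler sees intermittently lacks some tasks, this pattern alternates between removing and restoring them, which would match tasks being rerun after they had already succeeded.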

What you expected to happen:

The tasks should be found in the DAG and should not be rescheduled once they have succeeded.

How to reproduce it:

A DAG with a large number of tasks may reproduce it.

Anything else we need to know:

Comments: 8 (3 by maintainers)


So we figured out what the problem was:

It had nothing to do with a big DAG, but with a faulty Airflow deployment. When we took the deployment down, it kept a lock on the `serialized_dag` table in our Postgres database. When the correct deployment went up, it was unable to make changes to the values in that table. Hence, every time the scheduler tried to validate the tasks, it could not find them in the database and marked them as removed.

Simply restarting the database fixed our problem.
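The effect of a lock that is never released can be reproduced in miniature. The sketch below uses SQLite from Python's standard library as a stand-in for Postgres (an assumption: Postgres locking differs in detail), and a toy `serialized_dag` table with a hypothetical two-column schema. One connection plays the faulty deployment and holds an open write transaction; a second connection, playing the new deployment, tries to update the table and gives up after a short timeout. Once the first connection goes away, which is roughly what restarting the database achieved, the same update succeeds.

```python
import os
import sqlite3
import tempfile
from typing import Tuple

UPDATE_SQL = "UPDATE serialized_dag SET data = ? WHERE dag_id = 'demo_dag'"


def try_update(path: str, value: str) -> str:
    """Update from a fresh connection, waiting at most 0.2 s for locks."""
    conn = sqlite3.connect(path, timeout=0.2)
    try:
        conn.execute(UPDATE_SQL, (value,))
        conn.commit()
        return "updated"
    except sqlite3.OperationalError as exc:
        return f"blocked: {exc}"
    finally:
        conn.close()


def demo_stale_lock() -> Tuple[str, str]:
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "demo.db")

        # Toy table standing in for serialized_dag (hypothetical schema).
        setup = sqlite3.connect(path)
        setup.execute("CREATE TABLE serialized_dag (dag_id TEXT PRIMARY KEY, data TEXT)")
        setup.execute("INSERT INTO serialized_dag VALUES ('demo_dag', 'v1')")
        setup.commit()
        setup.close()

        # "Faulty deployment": opens a write transaction and never finishes it.
        stale = sqlite3.connect(path, isolation_level=None)
        stale.execute("BEGIN EXCLUSIVE")

        before = try_update(path, "v2")  # the new deployment cannot write

        # Release the lock, as the database restart did in practice.
        stale.execute("ROLLBACK")
        stale.close()

        after = try_update(path, "v2")  # now the write goes through
        return before, after


if __name__ == "__main__":
    print(demo_stale_lock())
```

In this model the writer does not fail permanently on its own: it keeps timing out for as long as the lock holder's transaction stays open, so the fix is to end that transaction rather than to retry harder.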

I am using version 2.2.5 and I am facing the exact same problem.

I believe it must have something to do with communication with the DB. We also have a pretty big DAG, and are only seeing that behavior on the 3 to 5 newest tasks.

@doowhtron's fix probably works, but it doesn't address the root cause (whatever is causing `get_task` to throw `AirflowException`). I will try to investigate further.