Tasks can be stuck in running state indefinitely

See original GitHub issueApache Airflow version: 1.10.10

Kubernetes version (if you are using kubernetes) (use kubectl version): -

Environment: Cloud Composer

- Composer addon version: 1.12.4

- Python version: 3 (3.6.10)

- Node count: 3

- Machine type: n1-standard-2

What happened:

Sometimes Airflow tasks can be stuck in running state indefinitely.

I was testing Cloud Composer with Airflow 1.10.10 using elastic Dag. My setup of its environment variables was as follows:

- “PERF_DAG_PREFIX”: “workflow”,

- “PERF_DAGS_COUNT”: “10”,

- “PERF_TASKS_COUNT”: “100”,

- “PERF_START_AGO”: “1d”,

- “PERF_SCHEDULE_INTERVAL”: “@once”,

- “PERF_SHAPE”: “no_structure”,

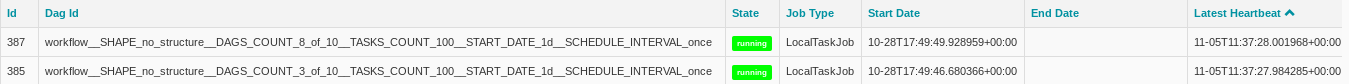

So the Composer instance was supposed to execute 1000 tasks total. The test started on October 28th at around 5:30 PM UTC, and while 998 tasks finished within 70 minutes (at around 6:40 PM UTC), the last two are still in running state. Scheduler and workers are generally running fine, as airflow_monitoring Dag, present on every Composer instance, is regularly executed. Moreover, local task jobs responsible for these two tasks, are also running and updating their heartbeat.

I have done some digging. Both tasks are running on the same hostname: airflow-worker-86455b549d-zkjsc. I have checked the contents of logs table and it appears that these two tasks were among the last 6 this worker has started, which happened at around 5:50 PM UTC (so long before the test was finished). After that point, no more tasks (even for airflow_monitoring) were executed by this worker.

- according to

logstable, for the remaining 4 tasks this worker has only managed to start the--localprocess, but has not changed their status torunning(norunningevent present). For each of these tasks, after around 10 minutes has passed, a new--localprocess was executed by different worker and the task was executed as normal. Within those 10 minutes the scheduler job has restarted, so I assume the scheduler simply rescheduled the tasks inqueuedstate after its restart. - for the two “hanging” tasks, however, their state was changed to

runningand--rawprocess has started as well.

For all of these 6 tasks there is also yet another event in logs table with executing the --local process (on worker other than airflow-worker-86455b549d-zkjsc), occurring at around 11:50 PM UTC, so after 6 hours of the first attempt to start these tasks. This time corresponds to the default value of 21600 seconds for celery broker’s visibility_timeout option. In airflow default config template we can read:

The visibility timeout defines the number of seconds to wait for the worker to acknowledge the task before the message is redelivered to another worker. Make sure to increase the visibility timeout to match the time of the longest ETA you’re planning to use. visibility_timeout is only supported for Redis and SQS celery brokers.

So it appears the worker airflow-worker-86455b549d-zkjsc has not acknowledged getting the message from Redis queue and after 6 hours, the tasks were redelivered to other workers. But at this point, the 4 tasks were already finished and 2 still running, and neither of these two situations is a valid state for task execution. You can even see the attempt to run the hanging task again in its log file:

*** Reading remote log from gs://us-central1-cmp-latest-af1--6dadbf30-bucket/logs/workflow__SHAPE_no_structure__DAGS_COUNT_3_of_10__TASKS_COUNT_100__START_DATE_1d__SCHEDULE_INTERVAL_once/tasks__47_of_100/2020-10-27T17:31:07.372135+00:00/1.log.

[2020-10-28 17:49:46,865] {taskinstance.py:670} INFO - Dependencies all met for <TaskInstance: workflow__SHAPE_no_structure__DAGS_COUNT_3_of_10__TASKS_COUNT_100__START_DATE_1d__SCHEDULE_INTERVAL_once.tasks__47_of_100 2020-10-27T17:31:07.372135+00:00 [queued]>

[2020-10-28 17:49:46,932] {taskinstance.py:670} INFO - Dependencies all met for <TaskInstance: workflow__SHAPE_no_structure__DAGS_COUNT_3_of_10__TASKS_COUNT_100__START_DATE_1d__SCHEDULE_INTERVAL_once.tasks__47_of_100 2020-10-27T17:31:07.372135+00:00 [queued]>

[2020-10-28 17:49:46,932] {taskinstance.py:880} INFO -

--------------------------------------------------------------------------------

[2020-10-28 17:49:46,933] {taskinstance.py:881} INFO - Starting attempt 1 of 1

[2020-10-28 17:49:46,933] {taskinstance.py:882} INFO -

--------------------------------------------------------------------------------

[2020-10-28 17:49:47,028] {taskinstance.py:901} INFO - Executing <Task(PythonOperator): tasks__47_of_100> on 2020-10-27T17:31:07.372135+00:00

[2020-10-28 17:49:47,029] {base_task_runner.py:131} INFO - Running on host: airflow-worker-86455b549d-zkjsc

[2020-10-28 17:49:47,029] {base_task_runner.py:132} INFO - Running: ['airflow', 'run', 'workflow__SHAPE_no_structure__DAGS_COUNT_3_of_10__TASKS_COUNT_100__START_DATE_1d__SCHEDULE_INTERVAL_once', 'tasks__47_of_100', '2020-10-27T17:31:07.372135+00:00', '--job_id', '385', '--pool', 'default_pool', '--raw', '-sd', 'DAGS_FOLDER/elastic_dag.py', '--cfg_path', '/tmp/tmpkgoycqh4']

[2020-10-28 23:49:55,650] {taskinstance.py:664} INFO - Dependencies not met for <TaskInstance: workflow__SHAPE_no_structure__DAGS_COUNT_3_of_10__TASKS_COUNT_100__START_DATE_1d__SCHEDULE_INTERVAL_once.tasks__47_of_100 2020-10-27T17:31:07.372135+00:00 [running]>, dependency 'Task Instance State' FAILED: Task is in the 'running' state which is not a valid state for execution. The task must be cleared in order to be run.

I have checked the GKE cluster running for given Composer instance. However, the pod airflow-worker-86455b549d-zkjsc appears to be running fine and I cannot see any issues with it using kubectl. Its logs do not reveal any errors (apart from the last log message being from October 28th at around 5:50 PM UTC). I have also connected to the pod and I was able to see that the aforementioned --local and --raw processes are still running here as well.

USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND

airflow 1 0.0 0.0 19796 3236 ? Ss Oct28 0:00 /bin/bash /var/local/airflow.sh worker

airflow 15 0.0 0.3 939096 28564 ? Ssl Oct28 0:58 /usr/local/go/bin/gcsfuse --foreground --file-mode 755 --implicit-dirs --limit-ops-per-sec -1 us-central1-cmp-latest-af1--6dadbf30-bucket /home/airflow/gcsfuse

airflow 72 0.1 1.4 408800 107312 ? S Oct28 18:45 [celeryd: celery@airflow-worker-86455b549d-zkjsc:MainProcess] -active- (worker)

airflow 77 0.0 1.2 395484 98192 ? Sl Oct28 1:32 /usr/bin/python /usr/local/bin/airflow serve_logs

airflow 78 0.0 1.1 407548 88796 ? S Oct28 0:00 [celeryd: celery@airflow-worker-86455b549d-zkjsc:ForkPoolWorker-1]

airflow 79 0.0 1.1 407552 88864 ? S Oct28 0:00 [celeryd: celery@airflow-worker-86455b549d-zkjsc:ForkPoolWorker-2]

airflow 80 0.0 1.1 407556 88864 ? S Oct28 0:00 [celeryd: celery@airflow-worker-86455b549d-zkjsc:ForkPoolWorker-3]

airflow 83 0.0 1.1 407560 88868 ? S Oct28 0:00 [celeryd: celery@airflow-worker-86455b549d-zkjsc:ForkPoolWorker-4]

airflow 84 0.0 1.1 407564 88868 ? S Oct28 0:00 [celeryd: celery@airflow-worker-86455b549d-zkjsc:ForkPoolWorker-5]

airflow 85 0.0 1.1 407568 88872 ? S Oct28 0:00 [celeryd: celery@airflow-worker-86455b549d-zkjsc:ForkPoolWorker-6]

airflow 2104 0.3 1.3 470824 106056 ? Sl Oct28 35:08 /usr/bin/python /usr/local/bin/airflow run workflow__SHAPE_no_structure__DAGS_COUNT_3_of_10__TASKS_COUNT_100__START_DATE_1d__SCHEDULE_INTERVAL_once tasks__47_of_100 2020-10-27T17:31:07.372135+00:00 --local --pool default_pool -sd /home/airflow/gcs/dags/elastic_dag.py

airflow 2107 0.0 1.3 404660 105060 ? Sl Oct28 0:04 /usr/bin/python /usr/local/bin/airflow run workflow__SHAPE_no_structure__DAGS_COUNT_6_of_10__TASKS_COUNT_100__START_DATE_1d__SCHEDULE_INTERVAL_once tasks__47_of_100 2020-10-27T17:31:07.372135+00:00 --local --pool default_pool -sd /home/airflow/gcs/dags/elastic_dag.py

airflow 2108 0.0 1.3 404348 105000 ? Sl Oct28 0:04 /usr/bin/python /usr/local/bin/airflow run workflow__SHAPE_no_structure__DAGS_COUNT_10_of_10__TASKS_COUNT_100__START_DATE_1d__SCHEDULE_INTERVAL_once tasks__48_of_100 2020-10-27T17:31:07.372135+00:00 --local --pool default_pool -sd /home/airflow/gcs/dags/elastic_dag.py

airflow 2109 0.3 1.3 470824 106264 ? Sl Oct28 35:12 /usr/bin/python /usr/local/bin/airflow run workflow__SHAPE_no_structure__DAGS_COUNT_8_of_10__TASKS_COUNT_100__START_DATE_1d__SCHEDULE_INTERVAL_once tasks__47_of_100 2020-10-27T17:31:07.372135+00:00 --local --pool default_pool -sd /home/airflow/gcs/dags/elastic_dag.py

airflow 2110 0.0 1.3 404356 104972 ? Sl Oct28 0:04 /usr/bin/python /usr/local/bin/airflow run workflow__SHAPE_no_structure__DAGS_COUNT_1_of_10__TASKS_COUNT_100__START_DATE_1d__SCHEDULE_INTERVAL_once tasks__48_of_100 2020-10-27T17:31:07.372135+00:00 --local --pool default_pool -sd /home/airflow/gcs/dags/elastic_dag.py

airflow 2111 0.0 1.3 404348 104992 ? Sl Oct28 0:04 /usr/bin/python /usr/local/bin/airflow run workflow__SHAPE_no_structure__DAGS_COUNT_2_of_10__TASKS_COUNT_100__START_DATE_1d__SCHEDULE_INTERVAL_once tasks__48_of_100 2020-10-27T17:31:07.372135+00:00 --local --pool default_pool -sd /home/airflow/gcs/dags/elastic_dag.py

airflow 2130 0.0 1.3 404628 105060 ? Ssl Oct28 0:03 /usr/bin/python /usr/local/bin/airflow run workflow__SHAPE_no_structure__DAGS_COUNT_3_of_10__TASKS_COUNT_100__START_DATE_1d__SCHEDULE_INTERVAL_once tasks__47_of_100 2020-10-27T17:31:07.372135+00:00 --job_id 385 --pool default_pool --raw -sd DAGS_FOLDER/elastic_dag.py --cfg_path /tmp/tmpkgoycqh4

airflow 2138 0.0 1.3 404624 105068 ? Ssl Oct28 0:03 /usr/bin/python /usr/local/bin/airflow run workflow__SHAPE_no_structure__DAGS_COUNT_8_of_10__TASKS_COUNT_100__START_DATE_1d__SCHEDULE_INTERVAL_once tasks__47_of_100 2020-10-27T17:31:07.372135+00:00 --job_id 387 --pool default_pool --raw -sd DAGS_FOLDER/elastic_dag.py --cfg_path /tmp/tmp7zjbj1qc

airflow 79624 0.0 0.0 19972 3544 pts/0 Ss 17:06 0:00 /bin/bash

airflow 79652 0.0 0.0 36128 3164 pts/0 R+ 17:08 0:00 ps -aux

This would have explained the worker airflow-worker-86455b549d-zkjsc not executing any more tasks, as the value of worker_concurrency used is 6, so all the celery workers are still occupied.

If have attempted to kill one of the --raw processes with the pid 2130. After sending the SIGTERM signal to it, the LocalTaskJob 385 (from screen above) changed state to success and the task was marked as failed. Following info was recovered from scheduler pod log (detecting the task as a zombie and marking it as failed actually repeated 7 times):

[2020-11-05 12:45:25,035] {taskinstance.py:1148} ERROR - <TaskInstance: workflow__SHAPE_no_structure__DAGS_COUNT_3_of_10__TASKS_COUNT_100__START_DATE_1d__SCHEDULE_INTERVAL_once.tasks__47_of_100 2020-10-27 17:31:07.372135+00:00 [running]> detected as zombie@-@{}NoneType: None

[2020-11-05 12:45:25,037] {taskinstance.py:1205} INFO - Marking task as FAILED.dag_id=workflow__SHAPE_no_structure__DAGS_COUNT_3_of_10__TASKS_COUNT_100__START_DATE_1d__SCHEDULE_INTERVAL_once, task_id=tasks__47_of_100, execution_date=20201027T173107, start_date=20201028T174946, end_date=20201105T124525@-@{}

[2020-11-05 12:45:25,055] {dagbag.py:397} INFO - Filling up the DagBag from /home/airflow/gcs/dags/airflow_monitoring.py

[2020-11-05 12:45:25,072] {dagbag.py:336} INFO - Marked zombie job <TaskInstance: workflow__SHAPE_no_structure__DAGS_COUNT_3_of_10__TASKS_COUNT_100__START_DATE_1d__SCHEDULE_INTERVAL_once.tasks__47_of_100 2020-10-27 17:31:07.372135+00:00 [failed]> as failed

No new messages in task’s log file. Interestingly enough, the process with pid 2104 (the --local process for the terminated --raw process), has not terminated by itself.

What you expected to happen:

The tasks are not stuck in running state until you terminate them manually.

How to reproduce it:

It is hard, as this issue appears randomly. You would have to repeat the test I have done, hoping for the issue to occur. This issue is not related to Cloud Composer specifically, as I have encountered it a few months back using different setup (with Airflow 1.10.3, python 3.7 running on Microsoft Azure virtual machines). From what I can say, it appears to be related to celery/redis, so an instance you use should have CeleryExecutor with Redis as message broker. In the aforementioned previous setup this issue started occurring after switching Airflow instances from Python 2.7 to Python 3.7, so this issue may affect only python 3 instances, though I was not doing tests for python 2.7.

Anything else we need to know:

Issue Analytics

- State:

- Created 3 years ago

- Reactions:10

- Comments:14 (4 by maintainers)

Top Related StackOverflow Question

Top Related StackOverflow Question

In Airflow 2.1.3 this problem still exists

Having this issue in Airflow 2.2.3