[DISCUSS] ClusterState feature design

According to #5564

Hi, community

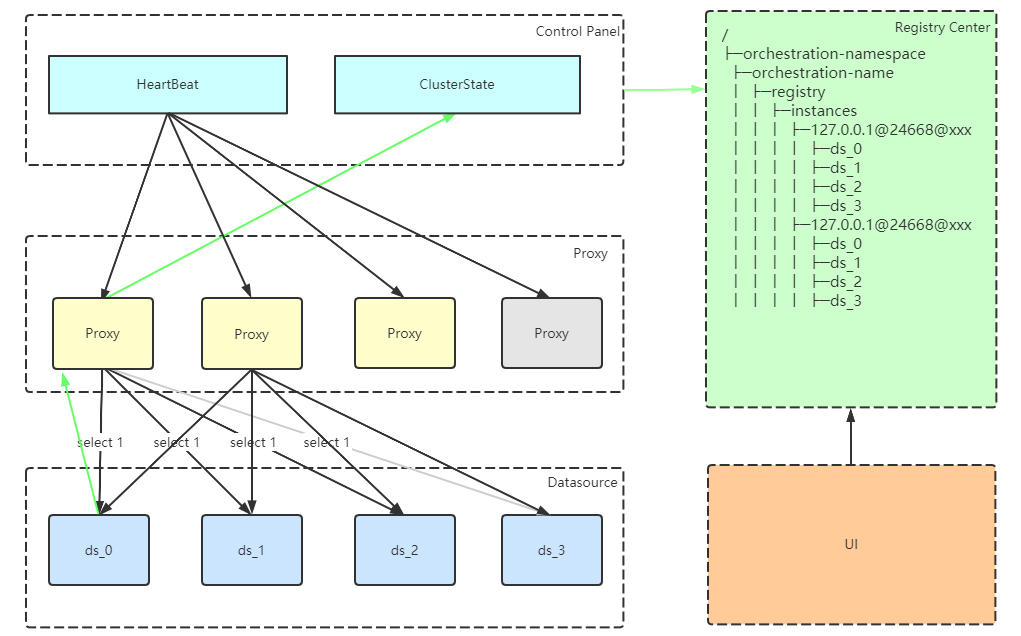

The new Control Panel module, which is planned to consist of Orchestration, Metrics, and OpenTracing, has now been created. Control Panel will provide more powerful features for ShardingSphere in the future, so we are considering a ClusterState module under Control Panel to enhance the orchestration feature by monitoring the state and heartbeat of Proxy and Datasource in real time.

The following is the design document. Any suggestions are welcome.

Current

Currently, ShardingSphere only stores and displays the Proxy state.

Goals

- Add heartbeats between Proxy and corresponding Datasources

- Real-time monitoring of the connection between Proxy and corresponding Datasources

- Generate a real-time topology of Proxy and Datasources, so that nodes going online, going offline, or becoming abnormal are displayed intuitively

Overall Design

- Control Panel designs HeartBeat and ClusterState modules, responsible for heartbeat detection and node state processing
  - Use scheduled tasks to notify the Proxy of heartbeat detection regularly
  - Configurable whether heartbeat detection is enabled
  - Configurable time interval of detection
- Proxy receives heartbeat detection notification from Control Panel and creates heartbeat detection job
  - Get a list of Datasources associated with current Proxy
  - Get a connection, execute heartbeat detection SQL (configurable)
  - Retry mechanism
    - Configurable whether to retry
    - Configurable maximum number of retries
- Proxy gets Datasource heartbeat detection response
  - Proxy notifies Control Panel, update heartbeat to the Registry Center
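To make the Proxy-side steps above concrete, here is a minimal sketch of a heartbeat detection job in Java. It is not taken from the ShardingSphere code base: the class `HeartbeatDetectionJob` and its methods are hypothetical names, and treating any `SQLException` as a failed heartbeat is an assumption. Only standard JDBC calls (`DataSource.getConnection`, `Connection.createStatement`, `Statement.execute`) are used.

```java
import java.sql.Connection;
import java.sql.SQLException;
import java.sql.Statement;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Predicate;
import javax.sql.DataSource;

// Hypothetical sketch; names are illustrative, not ShardingSphere APIs.
final class HeartbeatDetectionJob {

    private final String detectionSql;

    HeartbeatDetectionJob(String detectionSql) {
        this.detectionSql = detectionSql;
    }

    // Gets a connection and executes the configurable detection SQL once.
    // Assumption: any SQLException counts as a failed heartbeat.
    boolean probe(DataSource dataSource) {
        try (Connection connection = dataSource.getConnection();
             Statement statement = connection.createStatement()) {
            statement.execute(detectionSql);
            return true;
        } catch (SQLException ex) {
            return false;
        }
    }

    // Probes every Datasource associated with the current Proxy and returns
    // a map of Datasource name -> whether the heartbeat succeeded.
    // The probe is passed in so the loop can be exercised without a database.
    <T> Map<String, Boolean> detect(Map<String, T> dataSources, Predicate<T> probe) {
        Map<String, Boolean> result = new LinkedHashMap<>();
        for (Map.Entry<String, T> entry : dataSources.entrySet()) {
            result.put(entry.getKey(), probe.test(entry.getValue()));
        }
        return result;
    }
}
```

With real JDBC Datasources, the call would look like `job.detect(dataSourceMap, job::probe)`, and the resulting map is what the Proxy would report back to Control Panel.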

Process

Start Registration

- When the Proxy starts, initialize the Datasource. After the startup is complete, save the Proxy instance and the heartbeat with Datasources to the Registry Center

Timing Synchronization

- The Control Panel starts scheduled tasks and regularly notifies all Proxies to perform heartbeat detection

- Proxy determines whether heartbeat detection is required based on Datasource’s last connection timestamp and state

Asynchronous Update

- After each interaction between the Proxy and a Datasource, update the Datasource's last connection timestamp in the Registry Center
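The design does not spell out how the Proxy decides whether a heartbeat is required. One possible rule, sketched below in Java, is an assumption: skip detection only when the Datasource is `ONLINE` and its last connection is more recent than the detection interval, and always probe `INTERRUPT` or `OFFLINE` Datasources so that recovery can be noticed. `DetectionPolicy` and `needsDetection` are hypothetical names.

```java
import java.time.Duration;
import java.time.Instant;

// States as listed in this design document.
enum DatasourceState { ONLINE, INTERRUPT, OFFLINE }

// Hypothetical helper; the decision rule is an assumption, not part of the design.
final class DetectionPolicy {

    private DetectionPolicy() {
    }

    // Returns true when the Proxy should run heartbeat detection for a Datasource.
    static boolean needsDetection(DatasourceState state, Instant lastConnect,
                                  Instant now, Duration interval) {
        // Assumption: unhealthy Datasources are always probed again.
        if (state != DatasourceState.ONLINE) {
            return true;
        }
        // A recent successful interaction already proves the connection works.
        Duration idle = Duration.between(lastConnect, now);
        return idle.compareTo(interval) >= 0;
    }
}
```

Under this rule, the asynchronous timestamp update saves a probe for any Datasource that served traffic within the last interval.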

Storage Structure

```
/
├─orchestration-namespace
├─orchestration-name
│  ├─registry
│  │  ├─instances
│  │  │  ├─127.0.0.1@24668@xxx
│  │  │  ├─127.0.0.1@24668@xxx
```

Instance node content

```
instanceState: DISABLE
datasources:
  sharding_db.ds_1:
    state: Node state
    lastConnect: Timestamp
    retryCount: RetryCount
  sharding_db.ds_2:
    state: Node state
    lastConnect: Timestamp
    retryCount: RetryCount
```

- The runtime Datasources are stored in the Proxy instance of the Registry Center

- When the heartbeat response succeeds, update the last connection timestamp and set the Datasource state to `ONLINE`

- When the heartbeat response fails, set the Datasource state to `INTERRUPT` and wait for retry

- New Datasources are added to the Registry Center automatically after detecting heartbeat

States

- ONLINE
  - Normal heartbeat response
- INTERRUPT
  - Heartbeat response failed and does not exceed the maximum number of retries
- OFFLINE
  - Heartbeat response failed and exceeded the maximum number of retries
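The three states can be read as a small state machine driven by heartbeat results. The Java sketch below is one possible reading, not the actual implementation: `DatasourceStatus` and `onHeartbeat` are hypothetical names, and `retryCount` is assumed to count consecutive failed heartbeats, so a Datasource goes `OFFLINE` once that count exceeds the configured maximum.

```java
// States as listed in this design document.
enum DatasourceState { ONLINE, INTERRUPT, OFFLINE }

// Hypothetical immutable value mirroring the state and retryCount fields of the instance node.
final class DatasourceStatus {

    final DatasourceState state;
    final int retryCount;

    DatasourceStatus(DatasourceState state, int retryCount) {
        this.state = state;
        this.retryCount = retryCount;
    }

    // Computes the next status from one heartbeat result.
    DatasourceStatus onHeartbeat(boolean success, boolean retryEnabled, int maxRetries) {
        if (success) {
            // Normal heartbeat response: back to ONLINE, counter reset.
            return new DatasourceStatus(DatasourceState.ONLINE, 0);
        }
        if (!retryEnabled) {
            // Retry turned off: a single failure marks the Datasource OFFLINE.
            return new DatasourceStatus(DatasourceState.OFFLINE, retryCount);
        }
        // Assumption: retryCount counts consecutive failures.
        int failures = retryCount + 1;
        DatasourceState next = failures > maxRetries
                ? DatasourceState.OFFLINE
                : DatasourceState.INTERRUPT;
        return new DatasourceStatus(next, failures);
    }
}
```

With the default maximum of 3, this reading keeps a Datasource in `INTERRUPT` for three consecutive failures and moves it to `OFFLINE` on the fourth.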

Configurations

- Heartbeat detection switch
  - Enabled by default
  - If the switch is closed, keep the current state
- Heartbeat detection SQL
  - Default `select 1;`
- Heartbeat detection interval
  - Default setting `60s`
- Whether to retry
  - Enabled by default
  - If retry is turned off and the heartbeat response failed, the Datasource state is updated to `OFFLINE`
- Maximum number of retries
  - Effective when retry is enabled
  - Default `3`
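As an illustration of how these options could be consumed, the following Java sketch holds the defaults listed above and uses them to start the Control Panel's scheduled notification. `HeartbeatConfiguration`, `HeartbeatScheduler`, and the `notifyProxies` callback are hypothetical names. The scheduling itself relies only on the JDK's `ScheduledExecutorService.scheduleAtFixedRate`.

```java
import java.time.Duration;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

// Hypothetical holder for the options above, initialized to the proposed defaults.
final class HeartbeatConfiguration {
    boolean enabled = true;                      // heartbeat detection switch
    String sql = "select 1;";                    // heartbeat detection SQL
    Duration interval = Duration.ofSeconds(60);  // heartbeat detection interval
    boolean retryEnabled = true;                 // whether to retry
    int maxRetries = 3;                          // effective when retry is enabled
}

// Hypothetical Control Panel component that notifies Proxies on a fixed schedule.
final class HeartbeatScheduler {

    private ScheduledExecutorService executor;

    // Starts periodic notification; returns false and schedules nothing
    // when the switch is off or the scheduler is already running.
    synchronized boolean start(HeartbeatConfiguration config, Runnable notifyProxies) {
        if (!config.enabled || executor != null) {
            return false;
        }
        executor = Executors.newSingleThreadScheduledExecutor();
        // Note: if notifyProxies throws, the JDK suppresses later executions,
        // so a real callback should catch and log its own exceptions.
        executor.scheduleAtFixedRate(
                notifyProxies, 0, config.interval.toMillis(), TimeUnit.MILLISECONDS);
        return true;
    }

    // Stops the scheduler so it can be started again later.
    synchronized void stop() {
        if (executor != null) {
            executor.shutdownNow();
            executor = null;
        }
    }
}
```

Keeping the switch check inside `start` means a disabled configuration never creates a thread, which matches the idea that closing the switch simply leaves the stored state untouched.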

Modules

/shardingsphere

├─control-panel

| ├─control-panel-cluster

| │ ├─control-panel-cluster-heartbeat

| │ ├─control-panel-cluster-state



Hi @menghaoranss, as I understand it, for the registry center instance it would be more suitable to use the Proxy port in the data, for example `127.0.0.1@3308@xxx`. IP, port, and other such data are more suitable.

Because the ZooKeeper EPHEMERAL node does not support child nodes, we will persist the Datasource state to the instance node: