AutoGluon stacking quality

What is the reason why AutoGluon shows a significantly worse result compared to simple one-layer stacking?

5 hours of `best_quality` training gives a private score of 0.38131, compared to 0.37353 for 2 hours of logistic regression.

Issue Analytics

- State:

- Created 2 years ago

- Comments: 13 (1 by maintainers)

Top Results From Across the Web

AutoGluon stacking quality · Issue #1060 - GitHub

What is the reason why AutoGluon shows significantly worse result compare to simple one layer stacking? 5 hours best_quality training gives ...

Read more >autogluon.tabular.models

This property allows for significantly improved model quality in many situations compared to non-stacking alternatives. Stacker models can act as base ...

Read more >AutoGluon Tasks

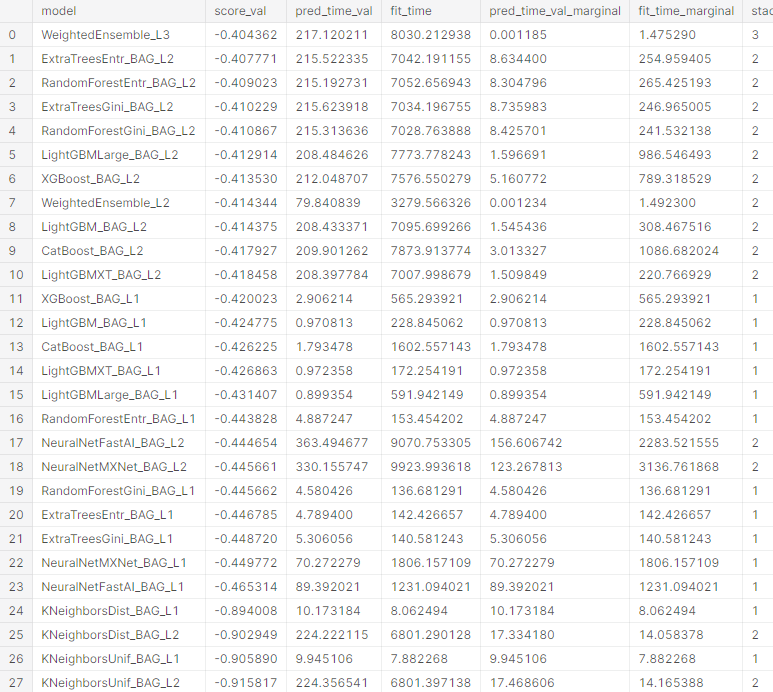

Includes information on test and validation scores for all models, model training times, inference times, and stack levels. Output DataFrame columns include: ' ......

Read more >Predicting Columns in a Table - In Depth - AutoGluon

Often stacking/bagging will produce superior accuracy than hyperparameter-tuning, but you may try combining both techniques (note: specifying presets=' ...

Read more >AutoGluon Documentation 0.2.0 documentation

If stacker models are refit by this process, they will use the refit_full versions ... quality by including test data in predictor.leaderboard(test_data) ....

Re 1: This already exists (`'LR'` is the key to use in `hyperparameters`), however it is not used by default. I plan to add it as a default model in a future release (possibly in v0.3).

Re 2: We have a more sophisticated method of handling bagging / CV than is available in sklearn. We have to handle more complex cases than are supported by `CalibratedClassifierCV`. In the future we may consider contributing the functionality back to sklearn, as this is one of the most important components of AutoGluon.

Re 3: It is impossible to pick the best model ahead of time for the `test` score. `best` is picked off of the strongest `val` score. ML would be very easy if we could know which model was best on the `test` data ahead of time, but that is not the case.

Re 4: We are happy to accept contributions! If you’d like, please open a PR which adds this model and we can test it / benchmark it to see if it improves upon our existing methods!
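To make the point in Re 3 concrete, here is a minimal sketch in plain Python. The model names and scores are hypothetical, invented only for illustration (they are not taken from the issue), and higher is assumed to be better. It shows how choosing by validation score can pick a different model than an oracle that could see the test score.

```python
# Hypothetical candidates with made-up scores (higher is better).
# None of these names or numbers come from the original issue.
candidates = [
    {"model": "stack_ensemble", "score_val": 0.912, "score_test": 0.897},
    {"model": "one_layer_lr", "score_val": 0.905, "score_test": 0.903},
    {"model": "single_gbm", "score_val": 0.899, "score_test": 0.894},
]


def pick_by(candidates, key):
    """Return the candidate with the highest value for the given score key."""
    return max(candidates, key=lambda c: c[key])


# What a model selector can do: it only sees validation scores.
best = pick_by(candidates, "score_val")

# What only an oracle could do: choose using the held-out test score.
oracle = pick_by(candidates, "score_test")

print("picked by val: ", best["model"])
print("picked by test:", oracle["model"])
```

In this made-up case the two choices differ, which is the situation described above: the selection is reasonable given the information available at training time, even if another model happens to score better on the test set.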

You can set to 1000 trees via the `hyperparameters` argument in `.fit`: https://auto.gluon.ai/stable/_modules/autogluon/tabular/predictor/predictor.html#TabularPredictor.fit something like:
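A minimal sketch of such a call, under stated assumptions: the comment does not say which model the 1000 trees apply to, so the random forest (`'RF'`) and extra trees (`'XT'`) keys with `n_estimators` are assumed here, and `'LR'` is added as mentioned in Re 1. The helper names `build_hyperparameters` and `fit_with_custom_trees` are hypothetical. Note that, as far as I understand, passing a `hyperparameters` dict limits training to the model types listed in it.

```python
def build_hyperparameters(n_trees=1000):
    """Build a hyperparameters dict for TabularPredictor.fit (illustrative).

    Assumption: the tree count applies to the 'RF' and 'XT' model types.
    'LR' enables the linear model, which is not trained by default.
    """
    return {
        "RF": {"n_estimators": n_trees},
        "XT": {"n_estimators": n_trees},
        "LR": {},
    }


def fit_with_custom_trees(train_data, label, time_limit=None):
    """Fit a TabularPredictor restricted to the models listed above."""
    # Imported lazily so the dict helper can be used without AutoGluon installed.
    from autogluon.tabular import TabularPredictor

    predictor = TabularPredictor(label=label)
    return predictor.fit(
        train_data,
        hyperparameters=build_hyperparameters(),
        time_limit=time_limit,
    )


hyperparameters = build_hyperparameters()
print(hyperparameters)
```

To keep the other default models as well, the dict would need entries for them too; check the linked `TabularPredictor.fit` documentation for the keys available in your AutoGluon version.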