[BUG] BlockBlob `OpenWriteAsync` method takes twice as much time with the new storage package

Describe the bug

Writing a block blob with the OpenWriteAsync method takes twice as long with the new Azure.Storage.Blobs package as with the deprecated WindowsAzure.Storage package.

Expected behavior

Performance with the new Azure.Storage.Blobs package should be at least as good as with the deprecated package, if not better.

Actual behavior (include Exception or Stack Trace)

Using the Azure.Storage.Blobs package (version 12.9.1), the BlockBlobClient.OpenWriteAsync() method takes twice as long as CloudBlockBlob.OpenWriteAsync() from the now-deprecated WindowsAzure.Storage package (version 9.3.3).

To Reproduce

We noticed a performance degradation in a feature that creates a zip file in a storage container from a number of images. We have a container with 100 images, and another container in the same storage account (General Purpose v1) where we store the resulting zip file.

First, we open a stream with OpenWriteAsync() to write the zip file as a block blob to a container; then we create zip entries from multiple block blobs stored in a container in the same storage account.

- With the new `Azure.Storage.Blobs` package (on both net5.0 and netcoreapp3.1):

```csharp
using System;
using System.Diagnostics;
using System.Globalization;
using System.IO.Compression;
using Azure.Storage.Blobs.Specialized; // GetBlockBlobClient extension

// Create zip (zipContainer and blobList are set up elsewhere, not shown)
var zip = zipContainer.GetBlockBlobClient("media.zip");

using (var zipArchive = new ZipArchive(
    stream: await zip.OpenWriteAsync(overwrite: true).ConfigureAwait(false),
    mode: ZipArchiveMode.Create,
    leaveOpen: false))
{
    var sw = new Stopwatch();     // whole iteration (was used but never declared)
    var swOpen = new Stopwatch(); // opening the source blob for reading
    var swCopy = new Stopwatch(); // copying the source blob into the zip entry

    for (int i = 1; i <= 100; i++)
    {
        sw.Start();

        var blob = blobList[i];
        var fileName = string.Format(CultureInfo.InvariantCulture, "{0:D8}_{1}", i, "image.jpg");
        var zipEntry = zipArchive.CreateEntry(fileName, CompressionLevel.NoCompression);
        using var zipStream = zipEntry.Open();

        swOpen.Start();
        using var blobStream = await blob.OpenReadAsync();
        swOpen.Stop();

        swCopy.Start();
        await blobStream.CopyToAsync(zipStream);
        swCopy.Stop();

        sw.Stop();

        Console.WriteLine($"\tBlob {i} transferred in {sw.ElapsedMilliseconds} ms");
        Console.WriteLine($"\t\tOpened in {swOpen.ElapsedMilliseconds} ms");
        Console.WriteLine($"\t\tCopied in {swCopy.ElapsedMilliseconds} ms");

        sw.Reset();
        swOpen.Reset();
        swCopy.Reset();
    }
}
```

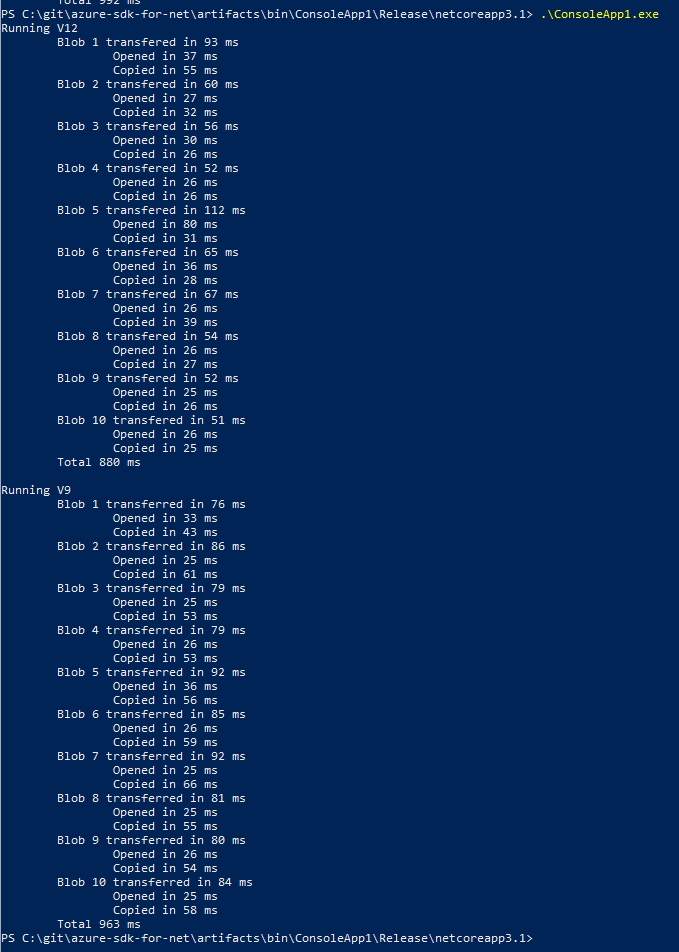

Result:

- With the deprecated `WindowsAzure.Storage` package (targeting netcoreapp3.1):

```csharp
using System;
using System.Diagnostics;
using System.Globalization;
using System.IO.Compression;
using Microsoft.WindowsAzure.Storage.Blob;

// Create zip (zipContainer and blobList are set up elsewhere, not shown)
var zip = zipContainer.GetBlockBlobReference("media.zip");

using (var zipArchive = new ZipArchive(
    stream: await zip.OpenWriteAsync(),
    mode: ZipArchiveMode.Create,
    leaveOpen: false))
{
    var sw = new Stopwatch();     // whole iteration (was used but never declared)
    var swOpen = new Stopwatch(); // opening the source blob for reading
    var swCopy = new Stopwatch(); // copying the source blob into the zip entry

    for (int i = 1; i <= 100; i++)
    {
        sw.Start();

        var blob = (CloudBlockBlob)blobList[i];
        var fileName = string.Format(CultureInfo.InvariantCulture, "{0:D8}_{1}", i, "image.jpg");
        var zipEntry = zipArchive.CreateEntry(fileName, CompressionLevel.NoCompression);
        using var zipStream = zipEntry.Open();

        swOpen.Start();
        using var blobStream = await blob.OpenReadAsync();
        swOpen.Stop();

        swCopy.Start();
        await blobStream.CopyToAsync(zipStream);
        swCopy.Stop();

        sw.Stop();

        Console.WriteLine($"\tBlob {i} transferred in {sw.ElapsedMilliseconds} ms");
        Console.WriteLine($"\t\tOpened in {swOpen.ElapsedMilliseconds} ms");
        Console.WriteLine($"\t\tCopied in {swCopy.ElapsedMilliseconds} ms");

        sw.Reset();
        swOpen.Reset();
        swCopy.Reset();
    }
}
```

Result:

Environment:

- Tested the deprecated `WindowsAzure.Storage` package with the netcoreapp3.1 target framework, and the new `Azure.Storage.Blobs` package with both the netcoreapp3.1 and net5.0 target frameworks, running on a Standard E8s v3 Azure VM (Windows 10 Enterprise)
- IDE and version: VS Code / dotnet CLI

Issue Analytics

- Created 2 years ago
- Reactions: 1
- Comments: 6 (3 by maintainers)


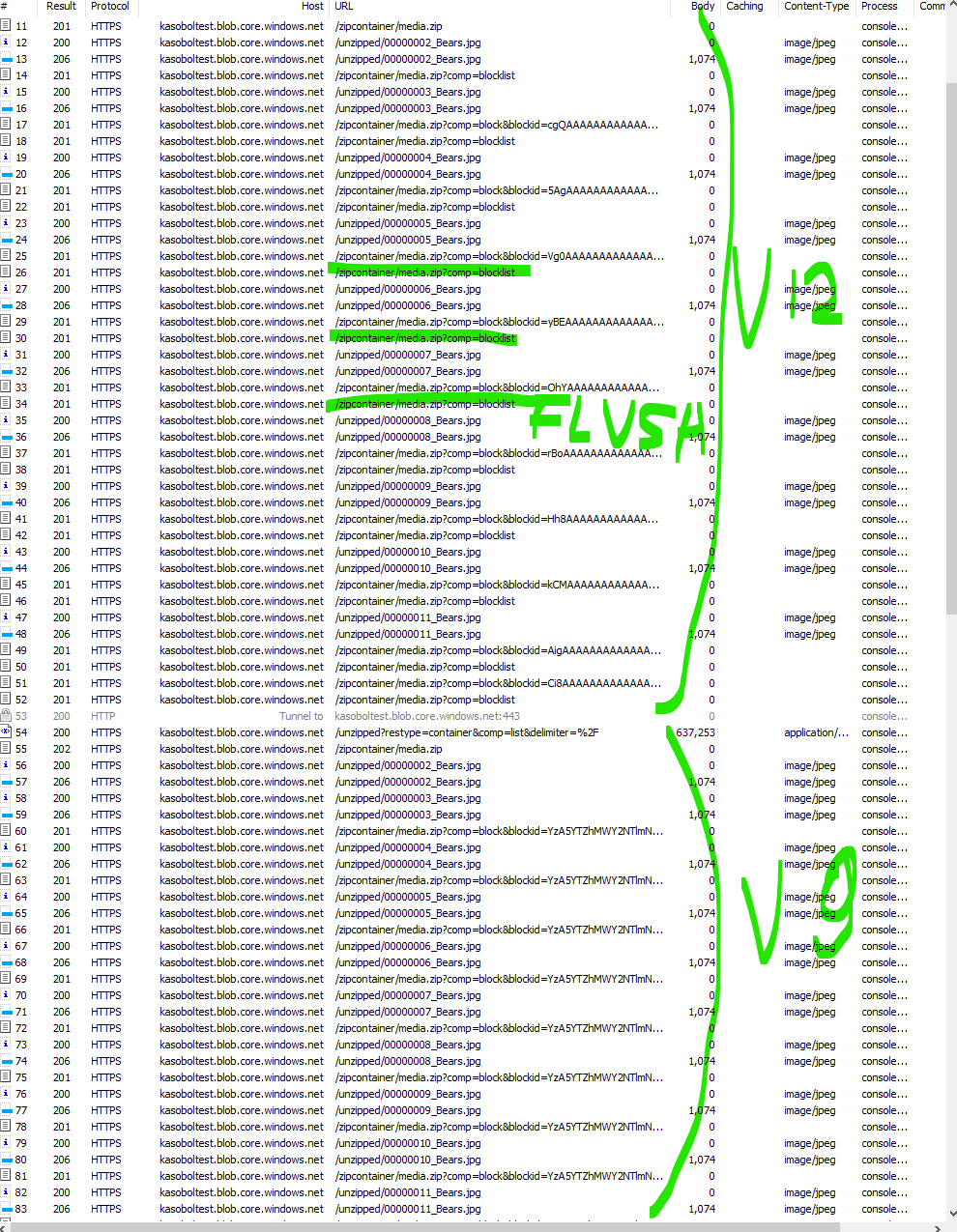

@hnuguse I was able to reproduce the issue. The new version of `OpenWrite` follows the `Stream` contract more closely than earlier versions did, i.e. `Flush`/`FlushAsync` is fully operational by default. This means that whenever the `ZipArchive` decides to flush, a snapshot of the data is actually materialized in the target blob, which results in more requests to storage and higher latency. See the trace for reference:

There's a somewhat related issue opened here https://github.com/Azure/azure-sdk-for-net/issues/20652 where we discuss whether a flag disabling intermediate flushes should be added to the `OpenWrite` API.

Meanwhile, you can consider wrapping the `Stream` returned by `OpenWrite` to disable flushes. See the sample for reference here https://gist.github.com/kasobol-msft/dd88c6a86f06dc981e0de96ef1169c56 . After applying the workaround, the time looks better:

The size is 2 KB per image, and we have 1000 of these in the container.

Transferring all of these takes a couple of minutes, while the total size is only around 1 MB. I attached the data we used for testing here: media.zip
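The workaround suggested above (wrap the `Stream` returned by `OpenWrite` so that flushes are ignored) can be sketched as follows. This is a minimal sketch under stated assumptions, not the code from the linked gist: the class name `NoFlushStream` is hypothetical, and it assumes the netcoreapp3.1/net5.0 targets used in this issue (needed for the `ReadOnlyMemory<byte>` overload). Only `Flush`/`FlushAsync` change behavior; everything else is forwarded to the wrapped stream.

```csharp
using System;
using System.IO;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical wrapper (not part of Azure.Storage.Blobs): forwards reads and
// writes to the wrapped stream but turns Flush/FlushAsync into no-ops, so a
// caller such as ZipArchive cannot force intermediate commits to the blob.
public sealed class NoFlushStream : Stream
{
    private readonly Stream _inner;

    public NoFlushStream(Stream inner) =>
        _inner = inner ?? throw new ArgumentNullException(nameof(inner));

    public override bool CanRead => _inner.CanRead;
    public override bool CanSeek => _inner.CanSeek;
    public override bool CanWrite => _inner.CanWrite;
    public override long Length => _inner.Length;

    public override long Position
    {
        get => _inner.Position;
        set => _inner.Position = value;
    }

    // Intentionally ignored: this is the purpose of the wrapper.
    public override void Flush() { }

    public override Task FlushAsync(CancellationToken cancellationToken) => Task.CompletedTask;

    public override int Read(byte[] buffer, int offset, int count) =>
        _inner.Read(buffer, offset, count);

    public override long Seek(long offset, SeekOrigin origin) => _inner.Seek(offset, origin);

    public override void SetLength(long value) => _inner.SetLength(value);

    public override void Write(byte[] buffer, int offset, int count) =>
        _inner.Write(buffer, offset, count);

    public override Task WriteAsync(byte[] buffer, int offset, int count, CancellationToken cancellationToken) =>
        _inner.WriteAsync(buffer, offset, count, cancellationToken);

    public override ValueTask WriteAsync(ReadOnlyMemory<byte> buffer, CancellationToken cancellationToken = default) =>
        _inner.WriteAsync(buffer, cancellationToken);

    protected override void Dispose(bool disposing)
    {
        // Disposing the inner OpenWrite stream is where the remaining data is
        // expected to be committed to the blob.
        if (disposing)
        {
            _inner.Dispose();
        }
        base.Dispose(disposing);
    }
}
```

In the repro, this would mean passing `new NoFlushStream(await zip.OpenWriteAsync(overwrite: true))` as the `stream:` argument of `ZipArchive`; with `leaveOpen: false`, disposing the archive disposes the wrapper, which in turn disposes the blob stream. The trade-off is that nothing written becomes visible in the target blob until that final dispose.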