Python processes occasionally hang when sending a single message to a Service Bus topic from Azure Batch Service

- Package Name: azure-servicebus

- Package Version: 7.5.0

- Operating System: Ubuntu 18.10

- Python Version: CPython 3.10

Describe the bug

We have a fairly simple Python script which iterates over a list of references (strings). We use the reference to look up some records from a database, do some business logic and then update the database records for that reference.

We run the script in Azure Batch. Our Batch Pool is configured with Ubuntu 18.04 and the standard_f4s_v2 VM size. It scales up to a maximum of 3 nodes, and each node runs up to 4 simultaneous Python processes (of the same script). We have been using this solution for several months without issue.

Recently we added a send_service_bus_message function to the script. It is called at the end of each iteration, once per reference, so that downstream systems know when a particular set of database records has been processed. This example is simplified for the sake of brevity, but the send_service_bus_message function is as we have it in our program.

    # litigation_grouping.py
    import os

    from azure.servicebus import ServiceBusClient, ServiceBusMessage

    SB_TOPIC: str = "mytopic"
    SB_CONN_STR: str = os.environ["SB_CONN_STR"]


    def send_service_bus_message(reference: str) -> None:
        with ServiceBusClient.from_connection_string(conn_str=SB_CONN_STR, logging_enable=True) as sb:
            with sb.get_topic_sender(topic_name=SB_TOPIC) as sender:
                msg = ServiceBusMessage(reference)
                sender.send_messages(msg, timeout=30)


    def do_business_logic(reference: str) -> None:
        ...


    def main():
        with open('references.txt') as f:
            references = f.read().splitlines()
        for reference in references:
            do_business_logic(reference)
            send_service_bus_message(reference)


    if __name__ == "__main__":
        main()

While this works as expected most of the time, occasionally one or more processes will hang when exiting the two context manager blocks, i.e. the ServiceBusClient/ServiceBusSender. We don’t get an exception. Not even a timeout exception, which is weird. The process just stops doing any actual work.

Our logging shows that sooner or later the call to send_service_bus_message() doesn’t return control to the caller, so we never get to the next iteration of the for loop in main().
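One way to find out where the call is stuck, without gdb, might be a watchdog around it. The sketch below is illustrative only: `hang_watchdog` is a hypothetical helper name, and it relies solely on the standard library's `faulthandler.dump_traceback_later`, which writes the Python traceback of every thread to a file if the timer is not cancelled in time. It does not unblock the hung call; it only records where each thread was waiting.

```python
import contextlib
import faulthandler
import sys


@contextlib.contextmanager
def hang_watchdog(timeout_s: float, file=sys.stderr):
    """Dump all thread tracebacks to `file` if the block runs longer than timeout_s.

    Hypothetical helper: it only reports the hang, it does not interrupt it.
    `file` must stay open until the block exits and must have a fileno().
    """
    faulthandler.dump_traceback_later(timeout_s, file=file)
    try:
        yield
    finally:
        # Cancel the pending dump once the block has finished (or raised).
        faulthandler.cancel_dump_traceback_later()
```

In the script above this could wrap the send, for example `with hang_watchdog(120): send_service_bus_message(reference)`, where the 120 seconds is an arbitrary value chosen to sit well above the 30 second send timeout.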

To be clear, each node/VM in our Batch pool is running up to 4 Python processes at the same time. When one process hangs, it does not seem to affect the operation of the other three processes, although they may also “hang” independently at the same section of code at a later point in time.

I initially noticed this behaviour under version 7.4.0 of azure-servicebus and version 1.4.0 of uamqp, and finding this issue I hoped that upgrading to 7.5.0 (and uamqp 1.5.1) would be the answer. Unfortunately the problem is ongoing even after we updated those dependencies.

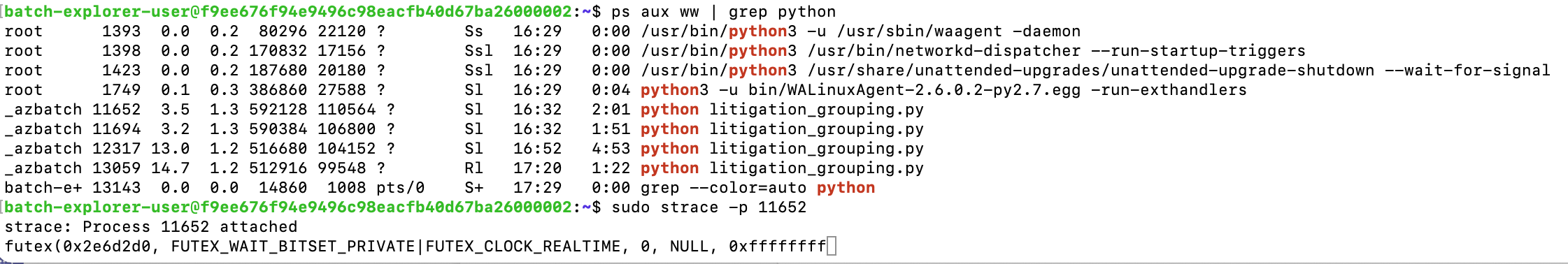

My expertise in troubleshooting issues like these is limited, but so far I have been able to ssh to the Batch nodes and determine that the processes are still alive via ps aux | grep python, but those which have hung show 0% CPU and RAM usage.

I used strace -p <PID> to check the syscalls of the hung processes/threads, all of which return:

    batch-explorer-user@f9ee676f94e9496c98eacfb40d67ba26000002:~$ sudo strace -p 11694
    strace: Process 11694 attached
    futex(0x2065950, FUTEX_WAIT_BITSET_PRIVATE|FUTEX_CLOCK_REALTIME, 0, NULL, 0xffffffff

Interestingly, when I inspect the tree view in htop, the hung processes seem to have one more child thread than the processes which are still working. I suspected this was the keep-alive thread associated with the uamqp connection and the call to Thread.join() in AMQPClient.close(), but I don’t know how I might prove that.
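The extra thread seen in htop can also be listed from a script, which makes it easier to compare a hung process with a healthy one. This is a minimal, Linux-only sketch that reads the `/proc/<pid>/task` directory; `list_threads` is a hypothetical helper name, and the script makes no assumption about which library created each thread.

```python
from pathlib import Path


def list_threads(pid: int) -> list[tuple[int, str, str]]:
    """Return (tid, name, state) for every thread of `pid`, read from /proc (Linux only)."""
    threads = []
    task_dirs = sorted(Path(f"/proc/{pid}/task").iterdir(), key=lambda p: int(p.name))
    for task in task_dirs:
        try:
            name = (task / "comm").read_text().strip()
            # In /proc/<pid>/task/<tid>/stat the state letter is the first
            # field after the parenthesised command name.
            state = (task / "stat").read_text().rsplit(")", 1)[1].split()[0]
        except (FileNotFoundError, ProcessLookupError):
            continue  # the thread exited while we were reading it
        threads.append((int(task.name), name, state))
    return threads
```

Calling `list_threads(11694)` on a node would return one tuple per thread. A state of `S` means the thread is sleeping, which would be consistent with the futex wait shown by strace.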

I tried attaching gdb to one of the hung processes, but most of the output from thread apply all py-bt is (frame information optimized out), which as far as I’m aware means that the Python version running on the node was compiled with certain optimisation flags that makes it difficult for gdb to inspect. I tried thread apply all py-list but similarly it is “unable to read information on Python frame”. I did manage to get this from info threads:

    (gdb) info threads
      Id   Target Id                                   Frame
    * 1    Thread 0x7f458abc8740 (LWP 11694) "python" 0x00007f458a79f7c6 in futex_abstimed_wait_cancelable (private=0, abstime=0x0, expected=0, futex_word=0x2065950)
        at ../sysdeps/unix/sysv/linux/futex-internal.h:205
      2    Thread 0x7f4582c6d700 (LWP 11696) "python" 0x00007f458a79f9b2 in futex_abstimed_wait_cancelable (private=0, abstime=0x7f4582c6c110, expected=0, futex_word=0x7f457c0011b0)
        at ../sysdeps/unix/sysv/linux/futex-internal.h:205
      3    Thread 0x7f458246c700 (LWP 11697) "python" 0x00007f458a79f7c6 in futex_abstimed_wait_cancelable (private=0, abstime=0x0, expected=0, futex_word=0x2065950)
        at ../sysdeps/unix/sysv/linux/futex-internal.h:205
      4    Thread 0x7f4581c6b700 (LWP 11698) "python" 0x00007f458a79f9b2 in futex_abstimed_wait_cancelable (private=0, abstime=0x7f4581c6a110, expected=0, futex_word=0x7f45780011b0)
        at ../sysdeps/unix/sysv/linux/futex-internal.h:205
      5    Thread 0x7f4580e37700 (LWP 11699) "python" 0x00007f458a79f7c6 in futex_abstimed_wait_cancelable (private=0, abstime=0x0, expected=0, futex_word=0x2065950)
        at ../sysdeps/unix/sysv/linux/futex-internal.h:205
      6    Thread 0x7f4571aea700 (LWP 11906) "python" 0x00007f458a79f7c6 in futex_abstimed_wait_cancelable (private=0, abstime=0x0, expected=0, futex_word=0x2065950)
        at ../sysdeps/unix/sysv/linux/futex-internal.h:205
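Since gdb cannot read the Python frames on this build, an alternative might be to have the interpreter print them itself on request. The sketch below assumes a Unix host and uses only the standard library's `faulthandler.register`; `install_thread_dump_handler` is a hypothetical helper name. The handler has to be installed at process start, before any hang occurs.

```python
import faulthandler
import signal
import sys


def install_thread_dump_handler(signum: int = signal.SIGUSR1, file=sys.stderr) -> None:
    """On `signum`, write the Python traceback of every thread to `file`.

    Hypothetical helper. The dump is written by a C-level signal handler, so it
    can still run while the Python code is blocked on a lock or a join.
    `file` must remain open for as long as the handler is registered.
    """
    faulthandler.register(signum, file=file, all_threads=True)
```

With this called once at the top of main(), running `kill -USR1 <PID>` against a hung process should write one traceback per thread to the process's stderr. That output would show whether the main thread is waiting in Thread.join() and what the extra thread is doing.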

When I set logging_enable=True, the last log message before the process hangs is often "Keeping 'SendClient' connection alive."

To Reproduce

Steps to reproduce the behavior:

- Run an equivalent Python script to the above example on multiple processes (possibly only on Ubuntu 18.04?)

Expected behavior

The script should send one service bus message per reference, and it should not cause the process to hang.

Screenshots

We observed the behaviour on PID 11652, so I tried strace - here is the output.

Additional context

As an experiment, I removed the service bus functionality from our code and scheduled a lot of work in Batch Service. None of the processes on any of the nodes hung. This only appears to happen (occasionally, for some processes rather than all) when I add the service bus related code back into the script.

I read this issue and we are not sharing the client or the sender between threads, so I don’t see that it is the same problem.

Having read various issues where users were exceeding rate limits/quotas and were being throttled, I am confident that we are well below those thresholds. We handle a few hundred “references” at most, and the time spent doing business logic in between messages being sent can be anything from a few seconds to 30 or 40 minutes.

Each “reference” is <= 11 ANSI characters. No other data is included in each ServiceBusMessage.

Issue Analytics

- State:

- Created 2 years ago

- Comments:6 (2 by maintainers)


Hi @rakshith91. Interesting suggestion… I will try to find time to test that.

Hi @chrisimcevoy, since you haven’t asked that we “/unresolve” the issue, we’ll close this out. If you believe further discussion is needed, please add a comment “/unresolve” to reopen the issue.