Setting data path using PipelineParameter within OutputFileDatasetConfig yields unusable path

See original GitHub issue- Package Name: azureml-core

- Package Version: 1.39.0

- Operating System: windows 11

- Python Version: 3.7.12

Describe the bug When using a PiplelineParameter to handle the output data path within OutputFileDatasetConfig, the pipeline job will complete successfully but create an unusable path on the Datastore file system.

To Reproduce

### Pipeline Parameters ###

# Create pipeline parameter for input dataset name

input_dataset_name_pipeline_param = PipelineParameter(

name="input_dataset_name",

default_value=dataset_name

)

# Create dataset output path from pipeline param so it can be changed at runtime

data_output_path_pipeline_param = PipelineParameter(

name="data_output_path",

default_value=''

)

### Create Inputs and Outputs ###

# Get datastore name, data source path, and its expected schema from mapped values using the colloquial dataset name

datastore_name = DATASET_REFERENCE_BY_NAME[dataset_name].datastore # returns a Datastore object for the input data

data_path = DATASET_REFERENCE_BY_NAME[dataset_name].data_source_path # returns path to the input data

# Create dataset object using the datastore name and data source path

input_dataset = create_tabular_dataset_from_datastore(workspace, datastore_name, data_path) # returns a Dataset object

# Create input tabular

tabular_ds_consumption = DatasetConsumptionConfig(

name="input_tabular_dataset", # name to use to access dataset within Run context

dataset=input_dataset

)

output_datastore = Datastore(workspace, name='datastore_name')

# Create output dataset

output_data = OutputFileDatasetConfig(

name="dataset_output",

destination=(output_datastore, data_output_path_pipeline_param)

).as_upload(overwrite=True)

### Create pipeline steps ###

# Pass input dataset into step1

step1 = PythonScriptStep(

script_name="script.py", # doesn't matter what this does,

source_directory="src/"

name="Step 1",

arguments=["--dataset-name", input_dataset_name_pipeline_param

],

inputs=[tabular_ds_consumption],

outputs=[output_data]

)

pipeline_definition = Pipeline(workspace, steps=[step1])

# The actual submission to run the pipeline job (using PublishPipeline)

pipeline_definition.publish(name="my-pipeline")

experiment = Experiment(name='my-pipeline-experiment')

experiment.submit(

published_pipeline,

continue_on_step_failure=True,

pipeline_parameters={"input_dataset_name": 'dataset', "data_output_path": f"base_data_pull/{dataset_name}/{today}/{dataset_name}.parquet"}

)

Expected behavior

Expected OutputFileDatasetConfig( name="dataset_output", destination=(output_datastore, data_output_path_pipeline_param) ).as_upload(overwrite=True) to upload data to the path input during pipeline run submission using the PipelineParameter input "data_output_path": f"base_data_pull/{dataset_name}/{today}/{dataset_name}.parquet"

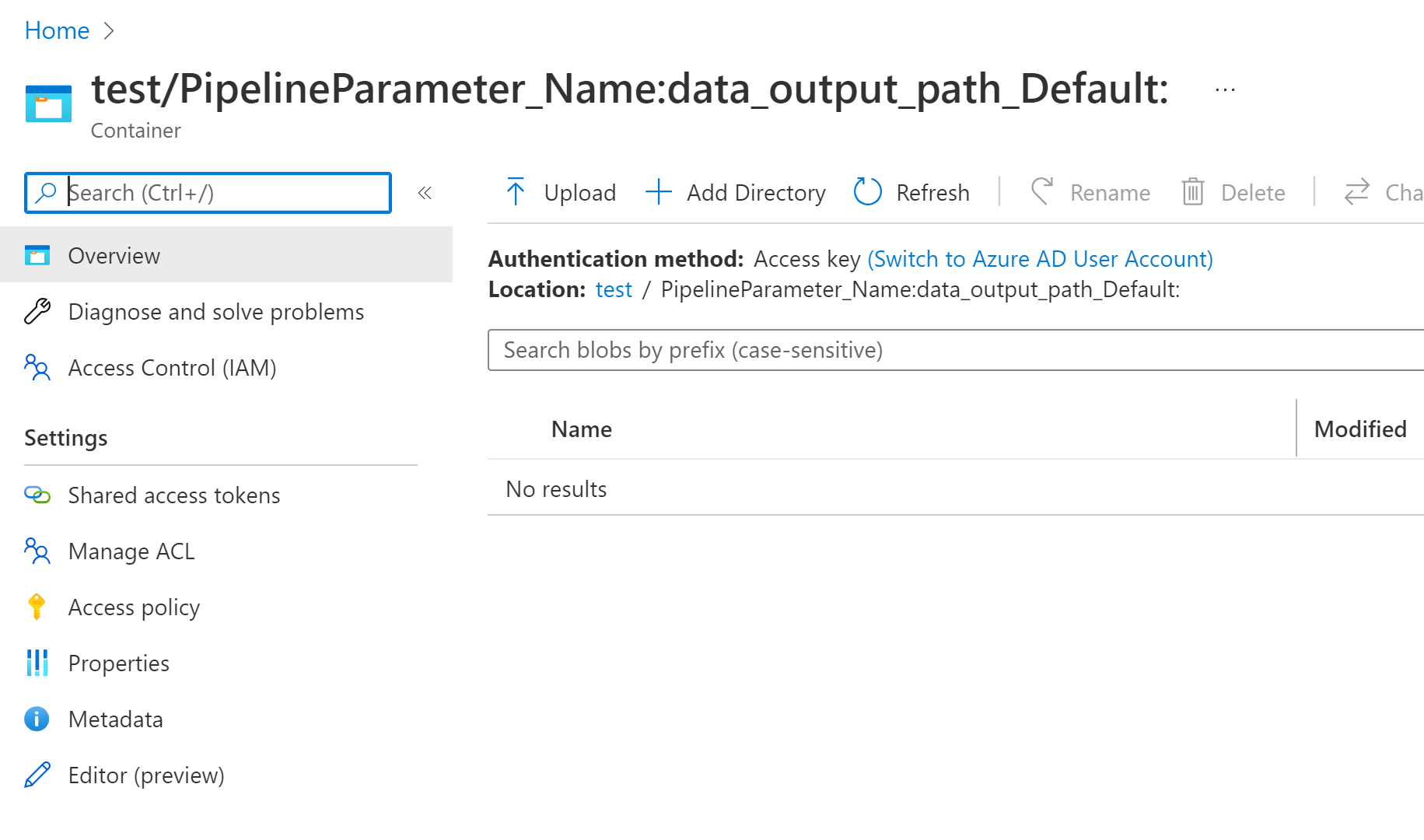

Instead, it created an unusable folder under the datastore, and the pipeline still completes successfully.

Issue Analytics

- State:

- Created a year ago

- Reactions:2

- Comments:16 (5 by maintainers)

Top Results From Across the Web

Top Results From Across the Web

Schedule Class - azureml-pipeline-core - Microsoft Learn

The name of the data path pipeline parameter to set with the changed blob path. ... In the following example, when the Schedule...

Read more >Posts | Vlad Iliescu

WAY. I'll show you a couple of improvements in a moment, ... Passing Data Between Pipeline Steps with OutputFileDatasetConfig; Conclusion ...

Read more >How to pass a DataPath PipelineParameter from ...

This notebook demonstrates the use of DataPath and PipelineParameters in AML Pipeline. You will learn how strings and DataPath can be ...

Read more >Data | Azure Machine Learning

Guide to working with data in Azure ML. ... files, # List[str] of absolute paths of files to upload ... from azureml.data import...

Read more >The missing guide to AzureML, Part 3: Connecting to data and ...

Data. The simplest way to work with data in AzureML is to read and write blobs from the blob datastore attached to your...

Read more > Top Related Medium Post

Top Related Medium Post

No results found

Top Related StackOverflow Question

Top Related StackOverflow Question

No results found

Troubleshoot Live Code

Troubleshoot Live Code

Lightrun enables developers to add logs, metrics and snapshots to live code - no restarts or redeploys required.

Start Free Top Related Reddit Thread

Top Related Reddit Thread

No results found

Top Related Hackernoon Post

Top Related Hackernoon Post

No results found

Top Related Tweet

Top Related Tweet

No results found

Top Related Dev.to Post

Top Related Dev.to Post

No results found

Top Related Hashnode Post

Top Related Hashnode Post

No results found

@chritter, I had opened a separate ticket with Microsoft. Their reply was that this behavior is intentional as the OutputFileDatasetConfig and PipelineParameter are not intended to be used this way.

I was recommended to introduce a separate PythonScriptStep for the purpose of writing output to a dynamic file path.

Hopefully v2 of AML will smooth out some of these issues going forward.

I ended up creating an upload step and using a pipeline parameter to pass in a FileDataset instead of relying on using OutputFileDatasetConfig. So it looks like:

build_pipelines.py

data_uploader.py takes a FileDataset and an output path and uploads it to our storage to that output path, in our case an ADLSgen2, using azure.storage.filedatalake.DataLakeServiceClient and creating an azure.identity.ClientSecretCredential using our Datastore’s saved SPN creds

So that after we publish the pipeline using some defaults, we can submit a run against the default PublishedPipeline PipelineEndpoint. We use a dataset name tied to a dataset reference that contains the input Datastore and data path info, plus some more info like the pipeline name to use