Very slow terraform scan on medium sized repository

Describe the bug

I’ve just tried checkov on a medium-sized repository that contains ~70 modules and around 20 Terraform workspaces. Running checkov against the entire repository takes about 1 hour and 50 minutes on a current-generation desktop CPU. In contrast, something like tfsec takes on the order of 20s for the same code.

My understanding is that both tools are primarily busy evaluating the logic of the Terraform code (substituting variables, instantiating modules, …) and applying each rule set is the cheaper part of the execution. Correct me if I am wrong.

cloc says the following about my Terraform folder:

    $ cloc terraform/
    github.com/AlDanial/cloc v 1.88  T=2.25 s (416.3 files/s, 35823.6 lines/s)
    -------------------------------------------------------------------------------
    Language                     files          blank        comment           code
    -------------------------------------------------------------------------------
    HCL                            709          12897           5047          50407
    …
    -------------------------------------------------------------------------------
    SUM:                           935          14249           5268          60935
    -------------------------------------------------------------------------------

Running checkov against individual modules takes somewhere between ~5 and 30s, which is totally fine given their complexity.

After some light profiling of the application, it appears that the (costly?) parsing of each HCL block in the Terraform files is repeated for each instance of a module.
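For anyone who wants to reproduce this kind of light profiling, here is a minimal sketch using the standard library's cProfile and pstats. The functions `parse_block` and `instantiate_module` are hypothetical stand-ins for the suspected pattern (re-parsing every block once per module instance); they are not checkov code.

```python
import cProfile
import io
import pstats


def parse_block(text: str) -> list:
    """Hypothetical stand-in for an expensive per-block parse step."""
    return [token for token in text.split() if token.startswith("var.")]


def instantiate_module(blocks: list, instances: int) -> int:
    """Re-parse every block once per module instance (the suspected pattern)."""
    parsed = 0
    for _ in range(instances):
        for block in blocks:
            parse_block(block)
            parsed += 1
    return parsed


# 50 made-up blocks, instantiated 20 times.
blocks = [f"name = var.name_{i} size = var.size_{i}" for i in range(50)]

profiler = cProfile.Profile()
profiler.enable()
total_calls = instantiate_module(blocks, instances=20)
profiler.disable()

# Collect the report in a string so it can be printed or inspected.
report = io.StringIO()
stats = pstats.Stats(profiler, stream=report)
stats.sort_stats("cumulative").print_stats(5)
print(report.getvalue())
```

In the report, a call count for the parse step that scales with the number of module instances rather than the number of distinct blocks is the signal that the same input is being parsed repeatedly.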

I slapped a @lru_cache(maxsize=1024) annotation onto the find_var_blocks function, which resulted in a 13% reduction of execution time. Making the cache unbounded had more influence on the performance, but not to the point where the runtime is anywhere near what I would feel comfortable with in CI.

Going any further from here seems to require more knowledge of the code base.
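To illustrate the caching experiment described above, here is a small sketch of memoizing a pure string-parsing function with `functools.lru_cache` and checking the hit rate via `cache_info()`. The function `find_refs` is a hypothetical, much simplified stand-in and does not reflect how checkov's parser actually works.

```python
from functools import lru_cache
from typing import Tuple


@lru_cache(maxsize=None)  # unbounded; use maxsize=1024 for a bounded cache
def find_refs(value: str) -> Tuple[str, ...]:
    """Hypothetical stand-in: extract ${...} references from a string."""
    refs = []
    start = value.find("${")
    while start != -1:
        end = value.find("}", start)
        if end == -1:
            break
        refs.append(value[start + 2:end])
        start = value.find("${", end)
    # Return a tuple: lru_cache hands the same object to every caller,
    # so an immutable result cannot be corrupted by one of them.
    return tuple(refs)


# The same expressions show up once per module instance.
expressions = ["${var.region}-${var.env}", "${local.name}", "plain text"]
for _ in range(100):
    for expr in expressions:
        find_refs(expr)

info = find_refs.cache_info()
print(info)
```

One design point to keep in mind: `lru_cache` returns the cached object itself, not a copy. If a cached function returns a list and any caller mutates it, every later caller sees the mutation. Whether that matters for a given code base is something only its maintainers can judge.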

    commit a4606b31d29a1f5d4f392032ebc6e75f0d7ae0a2
    Author: Andreas Rammhold <andreas@rammhold.de>
    Date:   Fri Mar 19 17:09:20 2021 +0100

        Cache calls to find_var_blocks

        While using checkov on a larger terraform repository the runtime on all
        the files was about 1 hour and 50 minutes. As that large repository is
        not a good test case I trimmed it down to a smaller part of the repo
        that only took about 12.736s ± 0.153s seconds before this change and with this
        change is at 11.196s ± 0.195s which is a nice ~13% speedup in runtime.

    diff --git a/checkov/terraform/parser_utils.py b/checkov/terraform/parser_utils.py
    index b672c24e..50be5e27 100644
    --- a/checkov/terraform/parser_utils.py
    +++ b/checkov/terraform/parser_utils.py
    @@ -4,6 +4,8 @@ from dataclasses import dataclass
     from enum import Enum, IntEnum
     from typing import Any, Dict, List, Optional
    +from functools import lru_cache
    +
     import hcl2
    @@ -48,6 +50,7 @@ class ParserMode(Enum):
             return str(self.value)
    +@lru_cache(maxsize=1024)
     def find_var_blocks(value: str) -> List[VarBlockMatch]:
         """
         Find and return all the var blocks within a given string. Order is important and may contain portions of
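As a rough sketch of how figures like the ones in the commit message can be gathered and compared, the following measures repeated runs (mean and sample standard deviation) and computes the speedup two ways. The helper names `measure`, `runtime_reduction`, and `throughput_gain` are made up for this example; the two timings are the ones quoted in the commit message.

```python
import statistics
import time
from typing import Callable, List, Tuple


def measure(run_once: Callable[[], object], repeats: int = 5) -> Tuple[float, float]:
    """Return (mean, sample standard deviation) of wall-clock seconds."""
    samples: List[float] = []
    for _ in range(repeats):
        start = time.perf_counter()
        run_once()
        samples.append(time.perf_counter() - start)
    spread = statistics.stdev(samples) if len(samples) > 1 else 0.0
    return statistics.mean(samples), spread


def runtime_reduction(before: float, after: float) -> float:
    """Fraction of the original runtime that was saved."""
    return (before - after) / before


def throughput_gain(before: float, after: float) -> float:
    """How much more work per unit of time the faster run gets done."""
    return before / after - 1.0


# Mean timings from the commit message, in seconds.
reduction = runtime_reduction(12.736, 11.196)
gain = throughput_gain(12.736, 11.196)
print(f"runtime reduction: {reduction:.1%}, throughput gain: {gain:.1%}")
```

With these inputs the runtime reduction comes out at about 12.1% and the throughput gain at about 13.8%, so "~13%" sits between the two readings. To time a real command, `run_once` could wrap a `subprocess.run([...])` call for the scan being measured.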

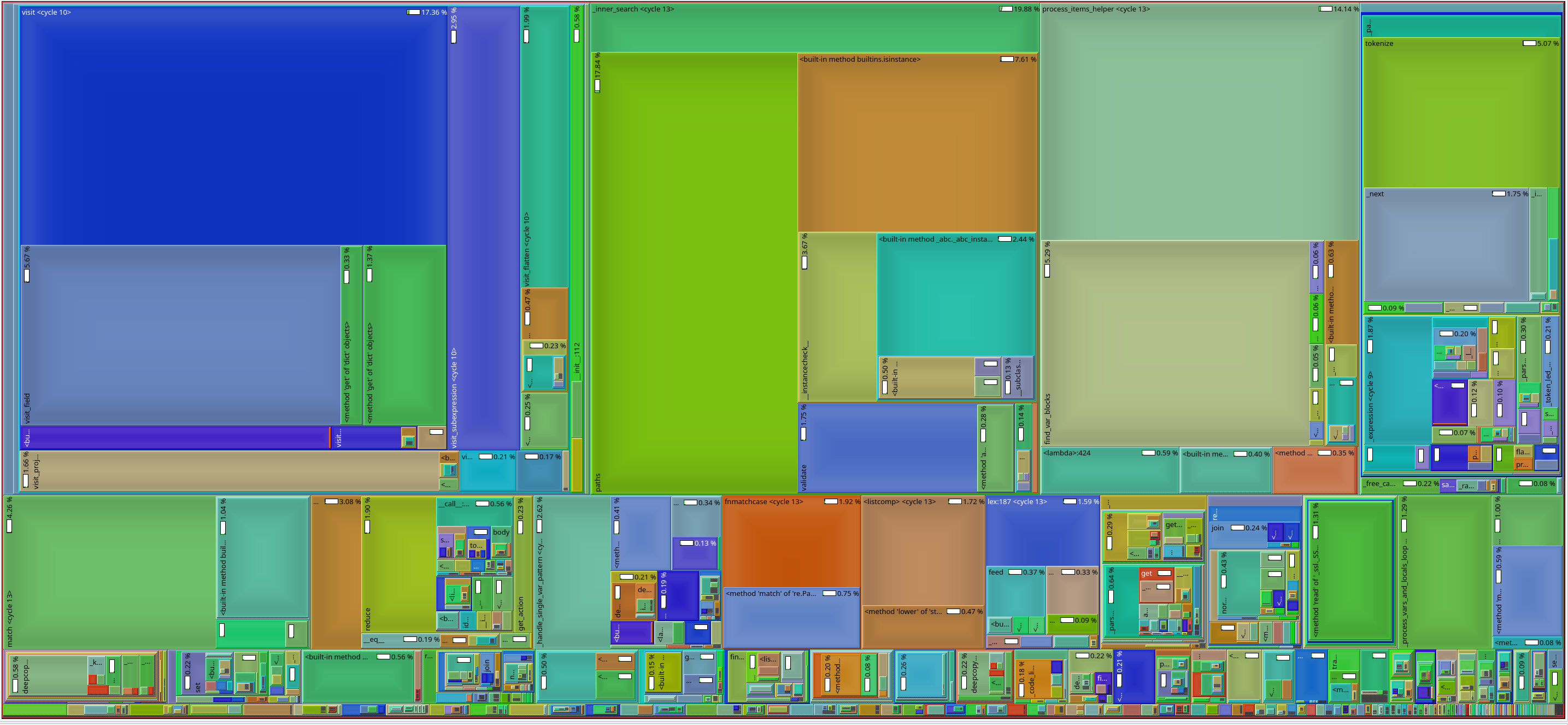

Profile results after applying the above caching patch:

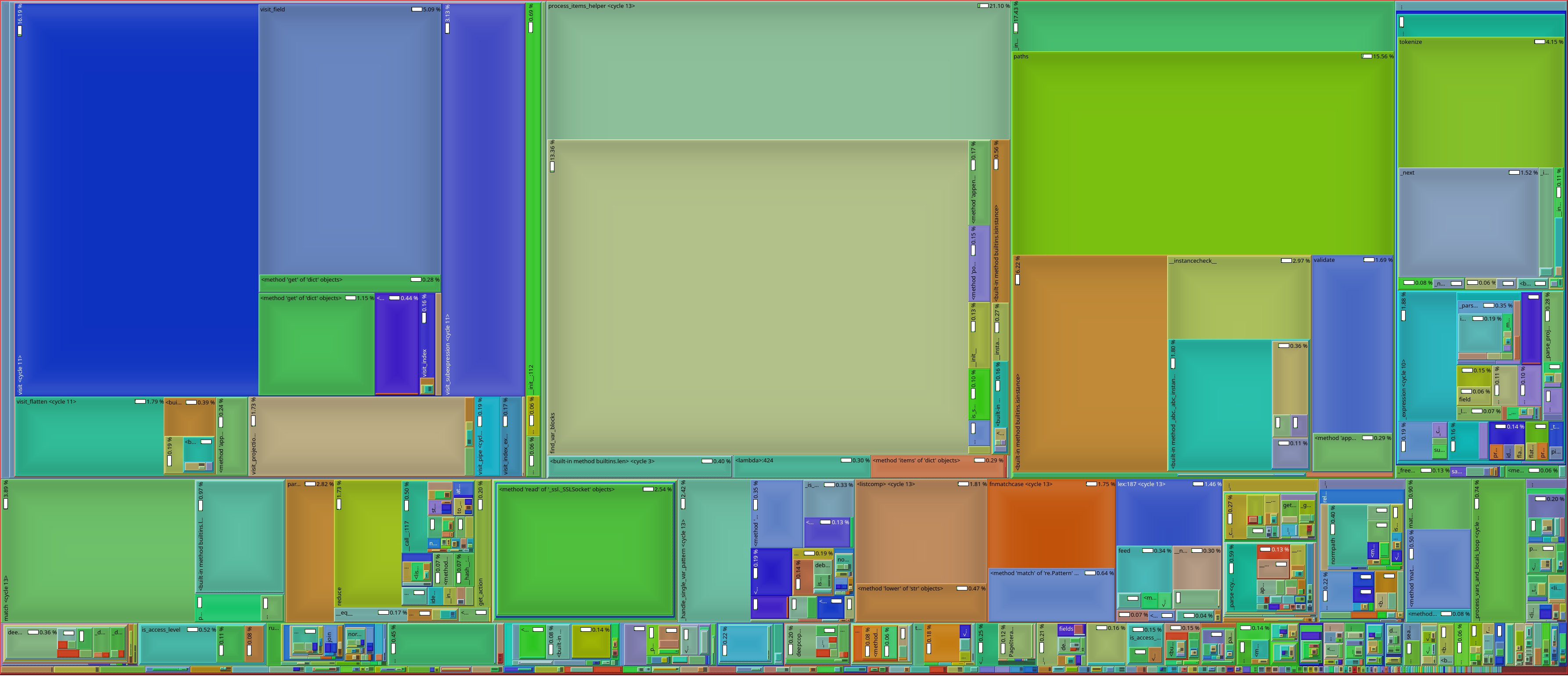

Profile results before applying the above caching patch:

Looking at the output of strace -e openat bin/checkov ...., I can see that checkov reads some files as many as four times within one run.
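One way such repeated reads could be avoided, sketched here under the assumption that file contents do not change during a run, is to cache file contents keyed by path plus modification time and size. The names `read_tf_file` and `_read_cached` are hypothetical and not part of checkov.

```python
import os
import tempfile
from functools import lru_cache
from pathlib import Path

read_count = 0  # counts real disk reads, for illustration only


@lru_cache(maxsize=None)
def _read_cached(path: str, mtime_ns: int, size: int) -> str:
    """Read the file once per (path, mtime, size) combination."""
    global read_count
    read_count += 1
    return Path(path).read_text(encoding="utf-8")


def read_tf_file(path: str) -> str:
    """Return file contents, opening the file only when it has changed."""
    stat = os.stat(path)
    return _read_cached(os.path.abspath(path), stat.st_mtime_ns, stat.st_size)


with tempfile.TemporaryDirectory() as tmp:
    tf_path = os.path.join(tmp, "main.tf")
    Path(tf_path).write_text('variable "region" {}\n', encoding="utf-8")
    # Four requests for the same file, as seen in the strace output.
    contents = [read_tf_file(tf_path) for _ in range(4)]

print(read_count)
```

Each call still issues a stat, but the file is opened and read only once. Caching the parsed result rather than the raw text would save more, at the cost of the mutability concerns that come with sharing parsed structures.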

To Reproduce

Steps to reproduce the behavior:

- Check out a larger Terraform repository

- Run checkov against the directory

- Wait

Expected behavior

I would expect a runtime on the order of seconds or minutes, not tens of minutes or almost two hours.

Desktop (please complete the following information):

- OS: Linux, using the checkov Docker container and a local Python interpreter on NixOS during profiling/testing.

- Checkov Version: 36d065755da53b59ab8371bbe349c9cb3ce526ff

Issue Analytics

- State:

- Created 2 years ago

- Comments:19 (10 by maintainers)


@andir @robeden please hold off on new commits for this. @nimrodkor’s team is leading a big performance change, and the PR should be opened soon. I hope we will see different results after those changes. We did see a very big change on our own repos.

Great, @andir. I’m closing the issue for now. If you’d like to make further improvements, we would accept a PR.