[question] Support for atomic bulk promotion using lockfiles CI

Summary

How can we use the lockfiles CI technique documented in https://docs.conan.io/en/latest/versioning/lockfiles/ci.html to atomically build 2 or more packages for promotion?

Background

With much-appreciated help from the Conan team, we have implemented a lockfiles-based CI following the example in https://docs.conan.io/en/latest/versioning/lockfiles/ci.html .

We are in a large organization and do not have permission to create Artifactory repositories on the fly, and Artifactory move operations across multiple packages are not guaranteed to be atomic. For the moment, we have therefore settled on using a single stable Artifactory repo and trusting lockfile-based builds to ensure consistency.

Roughly, we do the following:

Our Conan configuration, installed by all users including our Jenkins build job user, contains:

[general]

default_package_id_mode = package_revision_mode

This is because we can’t trust that our organization’s packages respect semver, and we need to ensure correctness. So any change in a binary package revision should cause Conan to calculate that all other dependent packages must be rebuilt.

First, we build the package to be promoted with conan create, once for each required build profile, e.g. debug and release:

def buildProfiles = conan_build_profiles.tokenize(',')

conanClient.run(buildInfo: buildInfo, command: "lock create ./conanfile.py --version ${conan_package_version} --user ${conan_package_user} --channel ${conan_package_channel} --build=${conan_build_policy} ${conan_lock_create_base_recipe_information_only_additional_parameters} ".toString())

conanClient.run(buildInfo: buildInfo, command: "export . ${packageNameFromInspect}/${conan_package_version}@${conan_package_user}/${conan_package_channel} ${conan_lock_export_recipe_with_full_reference_additional_parameters}".toString())

def builtPackages = [].toSet()

buildProfiles.each {

conanClient.run(buildInfo: buildInfo, command: "lock create ./conanfile.py --version ${conan_package_version} --user=${conan_package_user} --channel=${conan_package_channel} --lockfile-out=package_per_profile_deps_${it}.lock --profile ${it} --build=${conan_build_policy} ${conan_lock_create_package_per_profile_additional_parameters}".toString())

conanClient.run(buildInfo: buildInfo, command: "info ${packageNameFromInspect}/${conan_package_version}@${conan_package_user}/${conan_package_channel} --graph=dependency_graph_${it}.html -l package_per_profile_deps_${it}.lock --build=${conan_build_policy} ${conan_info_graph_per_profile_additional_parameters}".toString())

conanClient.run(buildInfo: buildInfo, command: "create . ${conan_package_version}@${conan_package_user}/${conan_package_channel} --json conanCreateOutput_${it}.json --lockfile=package_per_profile_deps_${it}.lock ${conan_additional_create_parameters}".toString())

// We created different conanCreateOutput_<someprofile>.json for each buildProfiles element.

def conanCreateOutputJson = readJSON file: "conanCreateOutput_${it}.json"

builtPackages = created_refs(builtPackages, conanCreateOutputJson)

echo "builtPackages[${builtPackages}] after conan create for profile[${it}]"

}

As we go, our created_refs helper parses the JSON output of each conan create command and accumulates the set of built packages that should be uploaded to Artifactory if the entire build succeeds.
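The created_refs helper itself is not shown in this issue. As a rough illustration only, here is a minimal Python sketch of what such a helper might do. It assumes the Conan 1.x `--json` output has an `installed` list whose entries carry a `recipe.id` reference and a `packages` list with a `built` flag; the sample data is hand-written and the package names are hypothetical.

```python
import json


def created_refs(built, create_output):
    """Return `built` plus every recipe reference that had a binary built in this run.

    Assumption: `create_output` is the parsed `--json` output of `conan create`
    or `conan install`, shaped as {"installed": [{"recipe": {"id": ...},
    "packages": [{"built": bool, ...}]}]}.
    """
    result = set(built)
    for node in create_output.get("installed", []):
        ref = node.get("recipe", {}).get("id")
        packages = node.get("packages", [])
        # Only keep references for which at least one binary was actually built.
        if ref and any(pkg.get("built") for pkg in packages):
            result.add(ref)
    return result


# Hand-written sample in the assumed layout (not real Conan output).
sample = json.loads("""
{"error": false,
 "installed": [
   {"recipe": {"id": "E/1.1@team/stable"},
    "packages": [{"id": "abc123", "built": true}]},
   {"recipe": {"id": "zlib/1.2.11"},
    "packages": [{"id": "def456", "built": false}]}
 ]}
""")

built_packages = created_refs(set(), sample)
print(sorted(built_packages))
```

Using a set means that a package built under both the debug and release profiles is only queued for upload once.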

Then we proceed with what we call our “buildall verification” using conan install:

// Create base lock file for distribution

conanClient.run(buildInfo: buildInfo, command: "lock create --reference=${conan_distribution_name}/${conan_distribution_version}@${conan_distribution_user}/${conan_distribution_channel} --build=${conan_build_policy} ${conan_lock_create_base_distribution_additional_parameters}".toString())

// Create distribution-based lock files and build-order for each profile

buildProfiles.each {

echo "Distribution lockfile for buildProfile[${it}]"

env.MY_FAILURE_STAGE = STAGE_NAME + "__conan_buildall_verification_build_order_distribution_loop_profile_${it}"

conanClient.run(buildInfo: buildInfo, command: "lock create --reference=${conan_distribution_name}/${conan_distribution_version}@${conan_distribution_user}/${conan_distribution_channel} --build=${conan_build_policy} ${conan_lock_create_distribution_per_profile_additional_parameters} --lockfile-out=distribution_per_profile_deps_${it}.lock --profile ${it}".toString())

conanClient.run(buildInfo: buildInfo, command: "lock build-order distribution_per_profile_deps_${it}.lock --json build_order_${it}.json".toString())

}

// Looping through the build order files to try building downstream packages

buildProfiles.each {

echo "Building downstream packages for buildProfile[${it}] using build-order file build_order_${it}.json"

env.MY_FAILURE_STAGE = STAGE_NAME + "__conan_buildall_verification_downstream_build_loop_profile_${it}"

def buildOrderFile_json = readJSON file: "build_order_${it}.json"

buildOrderFile_json.each { level ->

echo "Building build-order level"

level.each { array ->

def package_ref = array[0]

def package_id = array[1]

def context = array[2]

def id = array[3]

echo "Building build-order item: package_ref[${package_ref}] package_id[${package_id}] context[${context}] id[${id}]"

def (String verify_name, String verify_ver, String verify_user, String verify_channel, String verify_packageRevision) = parseReference(package_ref.toString())

echo "verify package_ref[" + package_ref.toString() + "] verify_name[" + verify_name + "] verify_ver[" + verify_ver + "] verify_user[" + verify_user + "] verify_channel[" + verify_channel + "] verify_packageRevision[" + verify_packageRevision + "]"

conanClient.run(buildInfo: buildInfo, command: "install ${package_ref} --build=${package_ref} --json conanBuildallInstallOutput_${verify_name}_${it}.json --lockfile=distribution_per_profile_deps_${it}.lock --lockfile-out=distribution_per_profile_deps_${it}_updated.lock".toString())

// We created different conanBuildallInstallOutput_<package>_<someprofile>.json for each package that needed to be rebuilt to verify the build.

def conanBuildallInstallOutputJson = readJSON file: "conanBuildallInstallOutput_${verify_name}_${it}.json"

builtPackages = created_refs(builtPackages, conanBuildallInstallOutputJson)

echo "builtPackages[${builtPackages}] after conan install build for profile[${it}]"

conanClient.run(buildInfo: buildInfo, command: "lock update distribution_per_profile_deps_${it}.lock distribution_per_profile_deps_${it}_updated.lock".toString())

}

}

}

}

Notice that while iterating through buildOrderFile_json, we augment the lockfile using lock update, and we continue to append the created_refs from each conan install operation to builtPackages.
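The parseReference helper is also not shown. The sketch below, in Python rather than the pipeline's Groovy, illustrates one way to split a reference of the form name/version@user/channel#revision and to walk the build-order levels in the [reference, package_id, context, id] layout used in the loop above. The names `Ref`, `parse_reference` and `iter_build_order` are hypothetical, and the sample build order is invented.

```python
import re
from typing import NamedTuple, Optional


class Ref(NamedTuple):
    name: str
    version: str
    user: Optional[str]
    channel: Optional[str]
    revision: Optional[str]


# name/version, then an optional "@user/channel" (or a bare "@"),
# then an optional "#revision".
_REF = re.compile(
    r"^(?P<name>[^/@#]+)/(?P<version>[^/@#]+)"
    r"(?:@(?:(?P<user>[^/@#]+)/(?P<channel>[^/@#]+))?)?"
    r"(?:#(?P<revision>.+))?$"
)


def parse_reference(text):
    """Split a Conan-style reference string into its parts."""
    match = _REF.match(text)
    if match is None:
        raise ValueError(f"not a recognisable reference: {text!r}")
    return Ref(**match.groupdict())


def iter_build_order(levels):
    """Yield (Ref, package_id, context, node_id) in build order, level by level."""
    for level in levels:
        for ref, package_id, context, node_id in level:
            yield parse_reference(ref), package_id, context, node_id


# Invented build order: E first, then F and G in the next level.
build_order = [
    [["E/1.1@team/stable#aaa", "pid1", "host", "3"]],
    [["F/2.0@team/stable#bbb", "pid2", "host", "4"],
     ["G/3.0@team/stable#ccc", "pid3", "host", "5"]],
]

names = [ref.name for ref, *_ in iter_build_order(build_order)]
print(names)
```

Items within one level do not depend on each other, so they could in principle be built in parallel; the sketch simply visits them sequentially, as the pipeline above does.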

Finally we upload all builtPackages to Artifactory:

builtPackages.each {

def builtPackage = "${it}"

echo "builtPackage[${builtPackage}]"

// Ensure we have a valid package reference for the upload.

// Work around https://github.com/conan-io/conan/issues/6862

if ( builtPackage.indexOf('@') == -1 ) {

echo 'builtPackage does not contain @'

if ( builtPackage.indexOf('#') == -1 ) {

echo 'builtPackage does not contain #, appending @'

builtPackage = builtPackage + '@'

} else {

echo 'builtPackage does contain #, inserting @ immediately before it'

builtPackage = builtPackage.replaceFirst('#', '@#')

}

}

def command = "upload ${builtPackage} --all -r ${artifactoryUploadRemote} ${conan_additional_upload_parameters}"

conanClient.run buildInfo: buildInfo, command: command.toString()

}
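The reference fix-up in the upload loop can be expressed as a small pure function, which makes the three cases easier to check in isolation. This is a Python restatement of the same logic under a hypothetical name, `normalize_upload_ref`; the package names are made up.

```python
def normalize_upload_ref(ref):
    """Ensure a reference contains '@' so it is treated as a full reference on upload.

    Mirrors the pipeline logic: leave references that already contain '@' alone,
    insert '@' before a '#revision' suffix, otherwise append '@'.
    """
    if "@" in ref:
        return ref
    if "#" in ref:
        # Only the first '#' separates the reference from its revision.
        return ref.replace("#", "@#", 1)
    return ref + "@"


for candidate in ("E/1.1@team/stable", "zlib/1.2.11", "zlib/1.2.11#abc"):
    print(candidate, "->", normalize_upload_ref(candidate))
```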

At this point, we believe we’ve guaranteed that our stable Artifactory repo contains a set of packages in which the newest revision of each builds consistently with all the others.

The Question

This mechanism allows us to use conan create to build or update a single package and then test that the updated version won’t break the build of any package in the dependency DAG. But we have sometimes found that making a breaking change to a package requires preparing and submitting N packages for ‘promotion’ at once, testing the build of all N packages together, and then running the buildall verification of all dependent packages.

How can we fit this concept into the current lockfiles CI based workflow described above?

Pretty Pictures

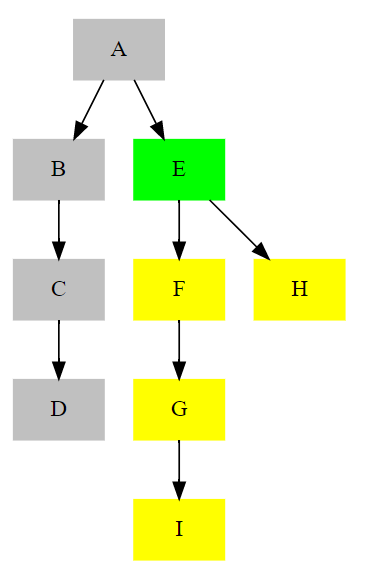

We have the following build DAG. We wish to promote a new version of package E, which we do with our initial conan create command. We then use lockfiles, and Conan calculates that F, G, I and H will be affected by this change and will need to be rebuilt, which we do using conan install over the build order calculated from the lockfile:

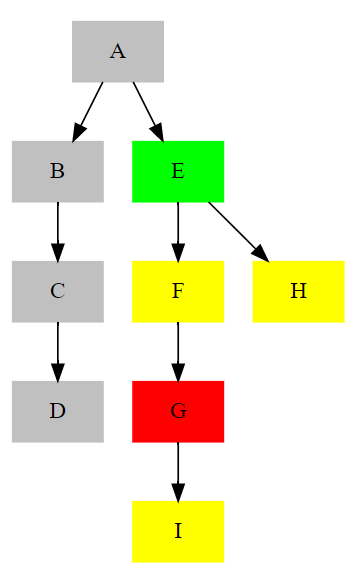
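To make the "affected packages" idea concrete, here is a toy Python sketch that computes the transitive consumers of a set of changed packages. It is not how Conan computes the build order internally, and the edges in `requires` are invented for illustration, since the real graph is only available in the images.

```python
from collections import deque


def downstream(requires, changed):
    """Return every package that transitively consumes one of `changed`.

    `requires` maps each package to the packages it depends on.
    The changed packages themselves are excluded from the result.
    """
    # Invert the dependency map: package -> direct consumers.
    consumers = {}
    for pkg, deps in requires.items():
        for dep in deps:
            consumers.setdefault(dep, set()).add(pkg)

    seen = set()
    queue = deque(changed)
    while queue:
        current = queue.popleft()
        for consumer in consumers.get(current, ()):
            if consumer not in seen:
                seen.add(consumer)
                queue.append(consumer)
    return seen - set(changed)


# Hypothetical edges, chosen so that a change in E affects F, G, H and I.
requires = {
    "A": [], "B": ["A"], "C": ["A"], "D": ["B"],
    "E": ["B"], "F": ["E"], "G": ["E"], "H": ["F", "G"], "I": ["G"],
}

print(sorted(downstream(requires, {"E"})))
print(sorted(downstream(requires, {"E", "G"})))
```

With these made-up edges, changing E alone marks F, G, H and I for rebuild, while changing E and G together leaves F, H and I as the packages to verify afterwards.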

However, we’ve made a change in E which we know will be a breaking change for G.

How can we change our build workflow so that we can conan create both E and G and then submit them to the build DAG verification using conan install?

- I’ve read the CONTRIBUTING guide.

Issue Analytics

- Created 2 years ago

- Comments: 8 (4 by maintainers)

We have all our packages set to use [>= ] version ranges, so that our promotion candidate build pulls the latest available version of each package from our stable Artifactory repo when constructing the first lockfile.

We’re using git exclusively, with different repos for different packages, which may come from different teams.