Issue warning when map_blocks() function with axis arguments conflicts with known dask array chunk structure

Hello, I recently ran into this issue and would like to suggest issuing a warning when a function is mapped onto a dask array with map_blocks() and its arguments (such as axis) could produce unexpected results given the array's known chunk structure. An example is below.

Minimal example: I want to horizontally stack several 1-d dask arrays (as column vectors) and argsort each row across the columns (axis=1).

import numpy as np
import dask
import dask.array as da

# column vectors
array1 = da.from_array(np.array([5, 9, 1, 0]).reshape((-1, 1)))
array2 = da.from_array(np.array([12, -9, 15, 0]).reshape((-1, 1)))
array3 = da.from_array(np.array([90, -3, 3, 16]).reshape((-1, 1)))

# horizontally stack
combined_array = da.hstack([array1, array2, array3])

# argsort
combined_array.map_blocks(np.argsort, axis=1).compute()

Unexpected/undesired output:

array([[0, 0, 0],
       [0, 0, 0],
       [0, 0, 0],
       [0, 0, 0]])

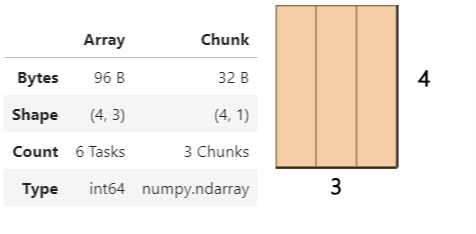

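This code produces unexpected and undesirable output. The hstacked array keeps one chunk per input column (its chunks are ((4,), (1, 1, 1))), so map_blocks applies np.argsort to each 4x1 block separately, and argsorting a single-element row along axis=1 can only return 0.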
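To see why every entry is 0, one can inspect the chunk layout directly. The sketch below rebuilds the arrays from the example, prints `chunks`, and then applies `np.argsort` to a single (4, 1) block, which is all that `map_blocks` hands to the function at a time; the name `block` is only for illustration.

```python
import numpy as np
import dask.array as da

# Rebuild the stacked array from the example above.
array1 = da.from_array(np.array([5, 9, 1, 0]).reshape((-1, 1)))
array2 = da.from_array(np.array([12, -9, 15, 0]).reshape((-1, 1)))
array3 = da.from_array(np.array([90, -3, 3, 16]).reshape((-1, 1)))
combined_array = da.hstack([array1, array2, array3])

# One chunk per stacked column: axis 1 is split into three width-1 chunks.
print(combined_array.chunks)  # ((4,), (1, 1, 1))

# map_blocks calls np.argsort on each (4, 1) block independently, and
# argsorting a length-1 row can only ever return index 0.
block = np.array([5, 9, 1, 0]).reshape((-1, 1))
print(np.argsort(block, axis=1).ravel())  # [0 0 0 0]
```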

I resolved the issue by rechunking the stacked array so that each row lies entirely within a single chunk:

combined_array = combined_array.rechunk({1: combined_array.shape[1]})  # ensure row contents are part of the same chunk
combined_array.map_blocks(np.argsort, axis=1).compute()

Desired output:

array([[0, 1, 2],
       [1, 2, 0],
       [0, 2, 1],
       [0, 1, 2]])
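The same fix can also be spelled with -1 as the block size, which dask's `rechunk` interprets as "the whole axis", so the code does not need to read `shape[1]`. A minimal sketch using the arrays from the example:

```python
import numpy as np
import dask.array as da

array1 = da.from_array(np.array([5, 9, 1, 0]).reshape((-1, 1)))
array2 = da.from_array(np.array([12, -9, 15, 0]).reshape((-1, 1)))
array3 = da.from_array(np.array([90, -3, 3, 16]).reshape((-1, 1)))
combined_array = da.hstack([array1, array2, array3])

# -1 puts all of axis 1 into a single chunk; this is equivalent to
# rechunk({1: combined_array.shape[1]}).
rechunked = combined_array.rechunk({1: -1})
print(rechunked.chunks)  # ((4,), (3,))

# Each block now contains complete rows, so argsort along axis=1 is correct.
result = rechunked.map_blocks(np.argsort, axis=1).compute()
print(result)
```

The last row contains a tie (two zeros). NumPy's default sort is not guaranteed to be stable, so if tie order matters, `kind="stable"` can be passed through `map_blocks` to `np.argsort`.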

Suggestion: To help prevent unexpected and undesirable results, it may be worth alerting the user if the arguments to their mapping function (axis=1 in this case) conflict with the known chunk structure of their array. What do you think?
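One way the proposed check could look is sketched below. `map_blocks_with_axis_check` is a hypothetical helper, not part of dask. It reads an `axis` keyword destined for the mapped function and warns when that axis of the input is split across more than one chunk. It only inspects a keyword named `axis`, so functions that take their axis positionally or under another name would not be caught.

```python
import warnings

import numpy as np
import dask.array as da


def map_blocks_with_axis_check(func, arr, *args, **kwargs):
    """Hypothetical wrapper around Array.map_blocks (not a dask API).

    Warns when the ``axis`` keyword passed through to ``func`` refers to an
    axis of ``arr`` that is split into more than one chunk, because ``func``
    would then only ever see part of that axis at a time.
    """
    axis = kwargs.get("axis")
    if axis is not None:
        axes = axis if isinstance(axis, (tuple, list)) else (axis,)
        name = getattr(func, "__name__", repr(func))
        for ax in axes:
            ax = ax + arr.ndim if ax < 0 else ax  # normalise e.g. axis=-1
            n_chunks = len(arr.chunks[ax])
            if n_chunks > 1:
                warnings.warn(
                    f"{name} is mapped along axis {ax}, but that axis is split "
                    f"into {n_chunks} chunks, so each block is processed "
                    f"independently. Consider arr.rechunk({{{ax}: -1}}) first.",
                    UserWarning,
                    stacklevel=2,
                )
    # Delegate to the real dask method unchanged.
    return arr.map_blocks(func, *args, **kwargs)


# Usage with the arrays from the issue.
array1 = da.from_array(np.array([5, 9, 1, 0]).reshape((-1, 1)))
array2 = da.from_array(np.array([12, -9, 15, 0]).reshape((-1, 1)))
array3 = da.from_array(np.array([90, -3, 3, 16]).reshape((-1, 1)))
combined = da.hstack([array1, array2, array3])

# Warns: axis 1 is split into 3 chunks.
lazy = map_blocks_with_axis_check(np.argsort, combined, axis=1)

# Silent: axis 1 is a single chunk after rechunking.
fixed = map_blocks_with_axis_check(np.argsort, combined.rechunk({1: -1}), axis=1)
print(fixed.compute())
```

Warning rather than rechunking automatically keeps the (possibly expensive) rechunk an explicit decision for the user.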

Issue Analytics

- Created 3 years ago

- Comments:5 (4 by maintainers)

I think this is good to close, since in the linked PR it was decided that this warning may not be needed.

Thanks for following up here @Madhu94