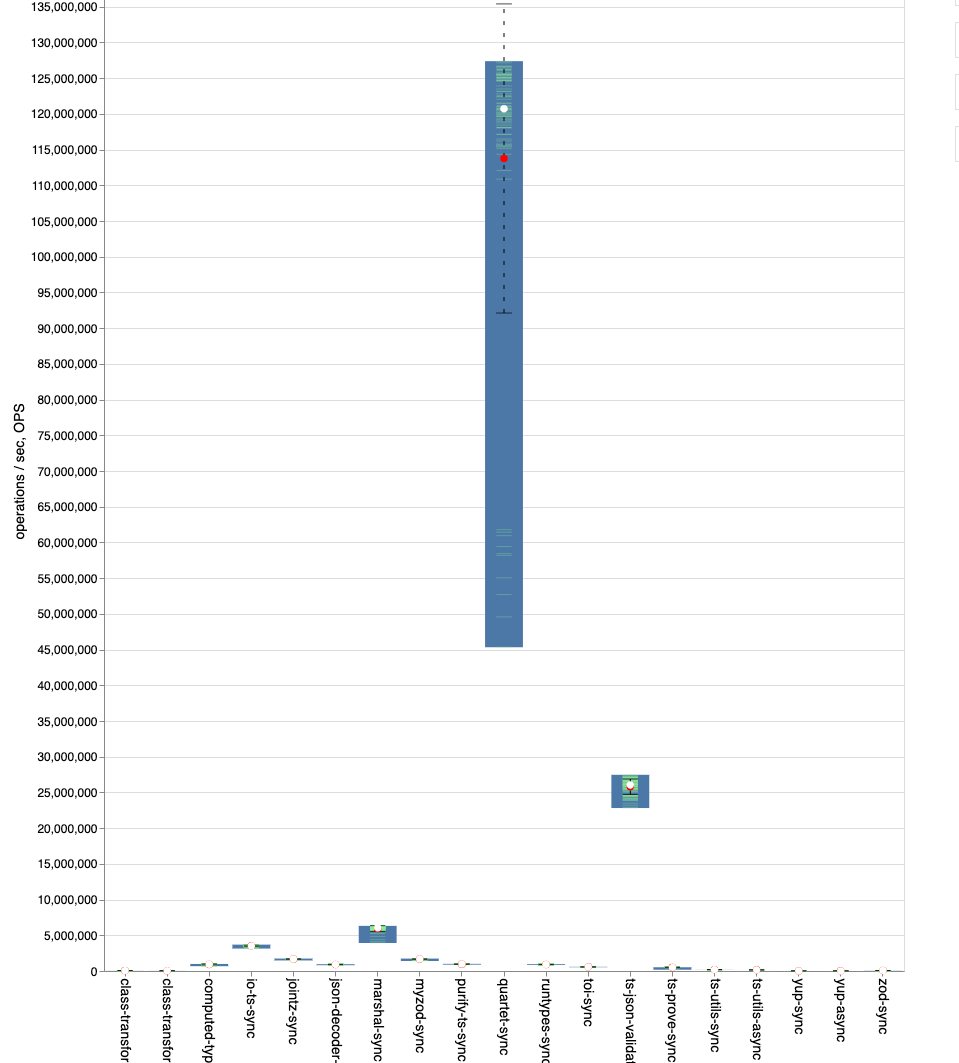

JIT compiled validators should be much much faster

In the intro you compare against class-transformer, which is probably at the lowest end of validators in terms of performance…

You should compare against other JIT libraries, such as ts-json-validator (ajv) or quartet, rather than against a composition library.

Both have a huge advantage over marshal.ts.

Since the top 3 libraries are all JIT-based, you can see the gap.

Note that JIT libraries excel at benchmarks more than in real-world scenarios, because JIT libraries LOVE it when the same validator function runs over and over and gets inlined all the way.
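To make the JIT vs. composition distinction concrete, here is a minimal sketch assuming a tiny hypothetical schema format (`Schema`, mapping property names to primitive type names). The interpreted validator walks the schema on every call; the JIT-style validator generates specialized source once with `new Function` and then reuses that single hot function, which is the pattern engines like V8 reward in repeated benchmark loops. This is an illustration of the technique only, not how ajv, quartet, or Marshal generate code internally.

```typescript
// Hypothetical minimal schema: property name -> expected `typeof` result.
type Primitive = "string" | "number" | "boolean";
type Schema = Record<string, Primitive>;

// Composition/interpreted style: re-reads the schema on every call.
function validateInterpreted(schema: Schema, value: unknown): boolean {
  if (typeof value !== "object" || value === null) return false;
  const obj = value as Record<string, unknown>;
  for (const key of Object.keys(schema)) {
    if (typeof obj[key] !== schema[key]) return false;
  }
  return true;
}

// JIT style: emit one specialized function body up front, then reuse it.
function compileValidator(schema: Schema): (value: unknown) => boolean {
  // JSON.stringify quotes keys and type names so they are valid JS string literals.
  const checks = Object.keys(schema).map(
    (key) => `typeof v[${JSON.stringify(key)}] === ${JSON.stringify(schema[key])}`
  );
  const body =
    `if (typeof v !== "object" || v === null) return false;\n` +
    `return ${checks.length > 0 ? checks.join(" && ") : "true"};`;
  // new Function compiles the generated source once; later calls run it directly.
  return new Function("v", body) as (value: unknown) => boolean;
}

const userSchema: Schema = { name: "string", age: "number" };
const isUser = compileValidator(userSchema);

console.log(isUser({ name: "Ada", age: 36 })); // true
console.log(isUser({ name: "Ada", age: "36" })); // false
console.log(validateInterpreted(userSchema, { name: "Ada", age: 36 })); // true
```

Generating code with `new Function` requires an environment that permits eval-like constructs (for example, no Content Security Policy that forbids them), which is one trade-off of the JIT approach.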

BTW, I believe that if you reduce the number of function calls between invoking validation and returning a result, you will get better benchmark results.
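A sketch of what shortening the call chain means, using hypothetical combinators (`and`, `prop`, `isString`, `isNumber`, `min`) that are not the API of any particular library. Both validators accept exactly the same inputs, but the composed one goes through several nested closure calls per validation, while the flattened one performs every check inside a single function body.

```typescript
type Check = (value: unknown) => boolean;

// Hypothetical combinators: each layer wraps another closure, adding calls per validation.
const isString: Check = (v) => typeof v === "string";
const isNumber: Check = (v) => typeof v === "number";
const min = (n: number): Check => (v) => (v as number) >= n;
const and = (...checks: Check[]): Check => (v) => checks.every((c) => c(v));
const prop = (key: string, check: Check): Check => (v) =>
  typeof v === "object" && v !== null && check((v as Record<string, unknown>)[key]);

// Composed: one validation walks and -> every -> prop -> and -> every -> isNumber/min.
const composed: Check = and(
  prop("name", isString),
  prop("age", and(isNumber, min(0)))
);

// Flattened: the same rules inlined into one function, no intermediate calls.
const flattened: Check = (v) => {
  if (typeof v !== "object" || v === null) return false;
  const o = v as Record<string, unknown>;
  const name = o.name;
  const age = o.age;
  return typeof name === "string" && typeof age === "number" && age >= 0;
};
```

Composition keeps rules reusable and easy to combine; flattening (by hand or via code generation) trades that for fewer calls on the hot path.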

Issue Analytics

- Created 3 years ago
- Comments: 7 (5 by maintainers)

Ah, I see, you're talking about the linked benchmark repo. I already told the author that he is using Marshal wrong. Marshal has two main features: validating and transforming data. The author uses both features at the same time for a simple validation check, although transforming is traditionally much slower and not necessary for validation. With other libraries like ts-json-validator (ajv) or quartet, he uses only the validation feature, so the benchmark is clearly flawed. I tried to correct it in https://github.com/moltar/typescript-runtime-type-benchmarks/pull/148, but the author refuses to merge it because of an entirely different topic: type guarding doesn't meet his criteria.
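A sketch of why transform-plus-validate and validate-only are different amounts of work, assuming hypothetical `User`, `validateUser`, and `deserializeUser` names (this is not Marshal's API). The validate-only path just reads fields and returns a boolean; the transforming path allocates a new `User` and a `Date` on every call before anything can be checked.

```typescript
class User {
  name: string;
  createdAt: Date;
  constructor(name: string, createdAt: Date) {
    this.name = name;
    this.createdAt = createdAt;
  }
}

// Validation only: read the input, return a verdict, allocate nothing.
function validateUser(input: unknown): boolean {
  if (typeof input !== "object" || input === null) return false;
  const { name, createdAt } = input as Record<string, unknown>;
  return (
    typeof name === "string" &&
    typeof createdAt === "string" &&
    !Number.isNaN(Date.parse(createdAt))
  );
}

// Transformation: build a class instance and convert fields (string -> Date) per call.
function deserializeUser(input: Record<string, unknown>): User {
  return new User(String(input.name), new Date(String(input.createdAt)));
}
```

A benchmark that times `deserializeUser` plus a check against another library's plain `validateUser`-style check is measuring allocation and conversion that the other side never performs.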

Also, here is the issue where I explain in detail why his benchmark is fundamentally flawed for validation: https://github.com/moltar/typescript-runtime-type-benchmarks/issues/149. It explains why Marshal is faster than ts-json-validator and ajv for actual validation use cases. The overall consensus was: "So, the linked repo is not actually a validation benchmark, but just a very simple runtime type checker." It's very misleading to now say that Marshal is slower at validation when you are really talking about a very specific subset, namely just type checking.
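For reference, a "very simple runtime type checker" only answers whether a value has the right shape. A minimal TypeScript type guard over a hypothetical `Post` type illustrates the kind of check such a benchmark exercises; it says nothing about content such as lengths or ranges.

```typescript
interface Post {
  title: string;
  likes: number;
  tags: string[];
}

// Pure type checking: verifies shape only, with no constraints on the values.
function isPost(value: unknown): value is Post {
  if (typeof value !== "object" || value === null) return false;
  const o = value as Record<string, unknown>;
  const tags = o.tags;
  return (
    typeof o.title === "string" &&
    typeof o.likes === "number" &&
    Array.isArray(tags) &&
    tags.every((t) => typeof t === "string")
  );
}

// Passes the type check even though an empty title and negative likes are nonsense for most apps.
console.log(isPost({ title: "", likes: -5, tags: [] })); // true
```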

I'm going to remove the link, as it's highly misleading, and instead link to the benchmark I use in Marshal's test suite.

Are you trolling? You literally added extra validation rules like isNegative to Marshal's case, which you did not add to quartet and the others, and then wonder why the latter are faster?

I linked the benchmark code so you can clearly see that in actual real-world use cases (where nobody checks only types, but also content, like array lengths, string lengths, and number ranges), Marshal is much faster. Marshal is slower only in synthetic benchmarks that check types alone, since it was not tuned for those rather exotic use cases.
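For contrast with pure type checking, here is a sketch of content validation on a hypothetical `Post` type, with illustrative rules (title length 1 to 100, non-negative likes, at most 5 tags) that are assumptions, not taken from Marshal's benchmark suite. Each rule is extra work per call, which is also why a comparison is only fair when every library's benchmark case checks the same rules.

```typescript
interface Post {
  title: string;
  likes: number;
  tags: string[];
}

// Content validation: type checks plus constraints on the values themselves.
// Returns a list of error messages; an empty list means the input is a valid Post.
function validatePost(value: unknown): string[] {
  if (typeof value !== "object" || value === null) return ["not an object"];
  const { title, likes, tags } = value as Record<string, unknown>;
  const errors: string[] = [];

  if (typeof title !== "string") errors.push("title: not a string");
  else if (title.length < 1 || title.length > 100) errors.push("title: length must be 1..100");

  if (typeof likes !== "number") errors.push("likes: not a number");
  else if (likes < 0) errors.push("likes: must not be negative");

  if (!Array.isArray(tags) || !tags.every((t) => typeof t === "string")) {
    errors.push("tags: not a string array");
  } else if (tags.length > 5) {
    errors.push("tags: at most 5 allowed");
  }
  return errors;
}

validatePost({ title: "Hello", likes: 3, tags: ["ts"] }); // returns []
validatePost({ title: "", likes: -5, tags: [] });
// returns ["title: length must be 1..100", "likes: must not be negative"]
```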