TrueNAS SCALE 21.04 -- iscsi/nfs crash loop

See original GitHub issueAcknowledging that TrueNAS SCALE is in alpha, I’m having trouble getting democratic-csi to work at all (freenas-iscsi, freenas-nfs, nfs-client). I’m using Rancher as a GUI for over a k3s cluster, if it makes a difference.

To just debug one problem at a time, here is my iscsi setup:

- I have installed the prerequisites on every node

- I have set up a portal and initiator on TrueNAS, and I know iscsi works fine because I have vSphere connecting to a different iscsi target using a different portal and different initator.

- I have created a

csiuser, provided my public key, and allowed no-password sudo on the TrueNAS CLI.

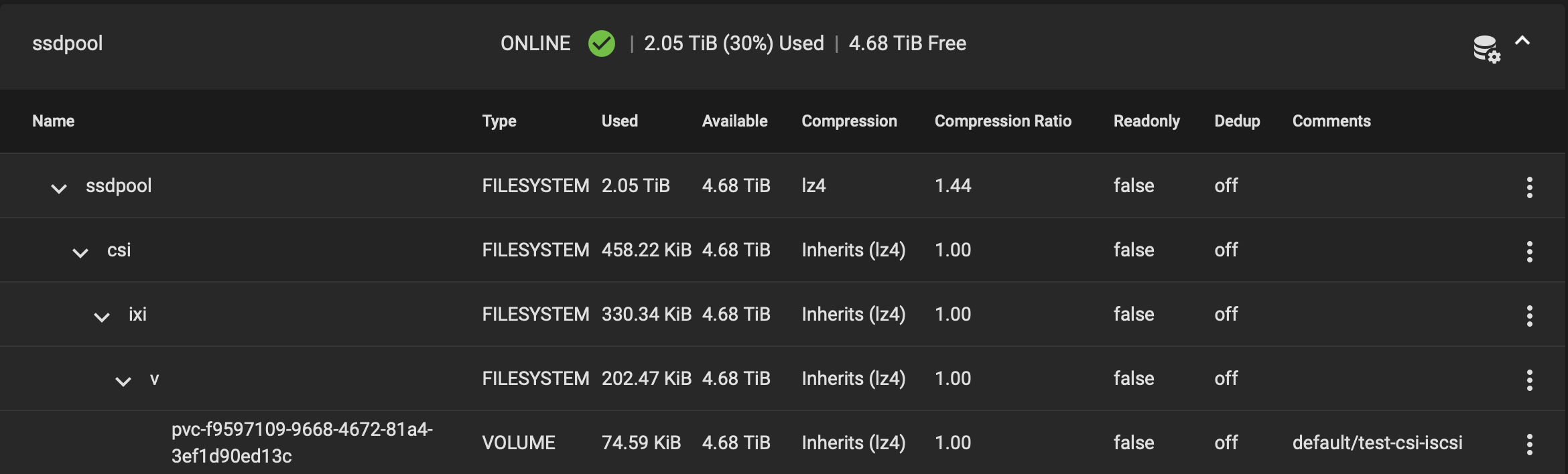

Whenever I try to create a PVC, it gets stuck in pending status. I can see that the volume gets created on TrueNas:

Once in a while it will make it to

Once in a while it will make it to bound, but then I cannot use the PVC:

Unable to attach or mount volumes: unmounted volumes=[redis-data], unattached volumes=[redis-data config redis-tmp-conf tmp redis-token-bmq9m start-scripts health]: timed out waiting for the condition

MountVolume.MountDevice failed for volume "pvc-b9269150-0a75-40e3-b1c2-6b5722904c07" : rpc error: code = Aborted desc = operation locked due to in progress operation(s): ["volume_id_pvc-b9269150-0a75-40e3-b1c2-6b5722904c07"]

However, the csi pod ends up stuck in a crashloopbackoff status.

Here’s the subset of the logs that I believe are pertinent (a huge amount of goroutine ## ... follows. I can share if required.

I0606 00:12:35.566992 1 controller.go:1335] provision "default/test-csi-iscsi" class "csi-truenas-iscsi": started

W0606 00:12:35.568611 1 controller.go:570] "fstype" is deprecated and will be removed in a future release, please use "csi.storage.k8s.io/fstype" instead

I0606 00:12:35.568703 1 event.go:282] Event(v1.ObjectReference{Kind:"PersistentVolumeClaim", Namespace:"default", Name:"test-csi-iscsi", UID:"f9597109-9668-4672-81a4-3ef1d90ed13c", APIVersion:"v1", ResourceVersion:"5146721", FieldPath:""}): type: 'Normal' reason: 'Provisioning' External provisioner is provisioning volume for claim "default/test-csi-iscsi"

I0606 00:12:35.568830 1 controller.go:731] CreateVolumeRequest name:"pvc-f9597109-9668-4672-81a4-3ef1d90ed13c" capacity_range:<required_bytes:536870912 > volume_capabilities:<mount:<fs_type:"xfs" > access_mode:<mode:SINGLE_NODE_WRITER > > parameters:<key:"csi.storage.k8s.io/pv/name" value:"pvc-f9597109-9668-4672-81a4-3ef1d90ed13c" > parameters:<key:"csi.storage.k8s.io/pvc/name" value:"test-csi-iscsi" > parameters:<key:"csi.storage.k8s.io/pvc/namespace" value:"default" > parameters:<key:"fsType" value:"xfs" >

I0606 00:12:35.573848 1 connection.go:182] GRPC call: /csi.v1.Controller/CreateVolume

I0606 00:12:35.573896 1 connection.go:183] GRPC request: {"capacity_range":{"required_bytes":536870912},"name":"pvc-f9597109-9668-4672-81a4-3ef1d90ed13c","parameters":{"csi.storage.k8s.io/pv/name":"pvc-f9597109-9668-4672-81a4-3ef1d90ed13c","csi.storage.k8s.io/pvc/name":"test-csi-iscsi","csi.storage.k8s.io/pvc/namespace":"default","fsType":"xfs"},"volume_capabilities":[{"AccessType":{"Mount":{"fs_type":"xfs"}},"access_mode":{"mode":1}}]}

I0606 00:12:38.562702 1 leaderelection.go:273] successfully renewed lease democratic-csi/org-democratic-csi-truenas-iscsi

I0606 00:12:43.596482 1 leaderelection.go:273] successfully renewed lease democratic-csi/org-democratic-csi-truenas-iscsi

I0606 00:12:48.662538 1 leaderelection.go:273] successfully renewed lease democratic-csi/org-democratic-csi-truenas-iscsi

I0606 00:12:52.518999 1 connection.go:185] GRPC response: {}

E0606 00:12:52.519388 1 connection.go:131] Lost connection to unix:///csi-data/csi.sock.

I0606 00:12:52.519361 1 connection.go:186] GRPC error: rpc error: code = Unavailable desc = transport is closing

I0606 00:12:52.519580 1 controller.go:752] CreateVolume failed, supports topology = false, node selected false => may reschedule = false => state = Background: rpc error: code = Unavailable desc = transport is closing

I0606 00:12:52.519964 1 controller.go:1109] Temporary error received, adding PVC f9597109-9668-4672-81a4-3ef1d90ed13c to claims in progress

W0606 00:12:52.520127 1 controller.go:961] Retrying syncing claim "f9597109-9668-4672-81a4-3ef1d90ed13c", failure 0

E0606 00:12:52.520297 1 controller.go:984] error syncing claim "f9597109-9668-4672-81a4-3ef1d90ed13c": failed to provision volume with StorageClass "csi-truenas-iscsi": rpc error: code = Unavailable desc = transport is closing

I0606 00:12:52.520513 1 controller.go:1335] provision "default/test-csi-iscsi" class "csi-truenas-iscsi": started

F0606 00:12:52.520582 1 connection.go:87] Lost connection to CSI driver, exiting

Here are the logs from the csi-driver:

{"service":"democratic-csi","level":"info","message":"initializing csi driver: freenas-iscsi"}

6/5/2021 8:45:56 PM {"service":"democratic-csi","level":"info","message":"starting csi server - name: org.democratic-csi.truenas-iscsi, version: 1.2.0, driver: freenas-iscsi, mode: controller, csi version: 1.2.0, address: , socket: unix:///csi-data/csi.sock"}

6/5/2021 8:46:11 PM {"service":"democratic-csi","level":"info","message":"new request - driver: FreeNASDriver method: Probe call: {\"_events\":{},\"_eventsCount\":1,\"call\":{},\"cancelled\":false,\"metadata\":{\"_internal_repr\":{\"user-agent\":[\"grpc-go/1.34.0\"]},\"flags\":0},\"request\":{}}"}

6/5/2021 8:46:11 PM {"service":"democratic-csi","level":"info","message":"new request - driver: FreeNASDriver method: Probe call: {\"_events\":{},\"_eventsCount\":1,\"call\":{},\"cancelled\":false,\"metadata\":{\"_internal_repr\":{\"user-agent\":[\"grpc-go/1.34.0\"]},\"flags\":0},\"request\":{}}"}

6/5/2021 8:46:11 PM {"service":"democratic-csi","level":"info","message":"new request - driver: FreeNASDriver method: GetPluginInfo call: {\"_events\":{},\"_eventsCount\":1,\"call\":{},\"cancelled\":false,\"metadata\":{\"_internal_repr\":{\"user-agent\":[\"grpc-go/1.29.0\"]},\"flags\":0},\"request\":{}}"}

6/5/2021 8:46:11 PM {"service":"democratic-csi","level":"info","message":"new response - driver: FreeNASDriver method: GetPluginInfo response: {\"name\":\"org.democratic-csi.truenas-iscsi\",\"vendor_version\":\"1.2.0\"}"}

6/5/2021 8:46:11 PM {"service":"democratic-csi","level":"info","message":"new request - driver: FreeNASDriver method: Probe call: {\"_events\":{},\"_eventsCount\":1,\"call\":{},\"cancelled\":false,\"metadata\":{\"_internal_repr\":{\"user-agent\":[\"grpc-go/1.29.0\"]},\"flags\":0},\"request\":{}}"}

6/5/2021 8:46:17 PM {"service":"democratic-csi","level":"info","message":"new response - driver: FreeNASDriver method: Probe response: {\"ready\":{\"value\":true}}"}

6/5/2021 8:46:17 PM {"service":"democratic-csi","level":"info","message":"new request - driver: FreeNASDriver method: GetPluginInfo call: {\"_events\":{},\"_eventsCount\":1,\"call\":{},\"cancelled\":false,\"metadata\":{\"_internal_repr\":{\"user-agent\":[\"grpc-go/1.34.0\"]},\"flags\":0},\"request\":{}}"}

6/5/2021 8:46:17 PM {"service":"democratic-csi","level":"info","message":"new response - driver: FreeNASDriver method: GetPluginInfo response: {\"name\":\"org.democratic-csi.truenas-iscsi\",\"vendor_version\":\"1.2.0\"}"}

6/5/2021 8:46:17 PM {"service":"democratic-csi","level":"info","message":"new request - driver: FreeNASDriver method: GetPluginCapabilities call: {\"_events\":{},\"_eventsCount\":1,\"call\":{},\"cancelled\":false,\"metadata\":{\"_internal_repr\":{\"user-agent\":[\"grpc-go/1.34.0\"]},\"flags\":0},\"request\":{}}"}

6/5/2021 8:46:17 PM {"service":"democratic-csi","level":"info","message":"new response - driver: FreeNASDriver method: GetPluginCapabilities response: {\"capabilities\":[{\"service\":{\"type\":\"CONTROLLER_SERVICE\"}},{\"volume_expansion\":{\"type\":\"ONLINE\"}}]}"}

6/5/2021 8:46:17 PM {"service":"democratic-csi","level":"info","message":"new request - driver: FreeNASDriver method: ControllerGetCapabilities call: {\"_events\":{},\"_eventsCount\":1,\"call\":{},\"cancelled\":false,\"metadata\":{\"_internal_repr\":{\"user-agent\":[\"grpc-go/1.34.0\"]},\"flags\":0},\"request\":{}}"}

6/5/2021 8:46:17 PM {"service":"democratic-csi","level":"info","message":"new response - driver: FreeNASDriver method: ControllerGetCapabilities response: {\"capabilities\":[{\"rpc\":{\"type\":\"CREATE_DELETE_VOLUME\"}},{\"rpc\":{\"type\":\"LIST_VOLUMES\"}},{\"rpc\":{\"type\":\"GET_CAPACITY\"}},{\"rpc\":{\"type\":\"CREATE_DELETE_SNAPSHOT\"}},{\"rpc\":{\"type\":\"LIST_SNAPSHOTS\"}},{\"rpc\":{\"type\":\"CLONE_VOLUME\"}},{\"rpc\":{\"type\":\"EXPAND_VOLUME\"}}]}"}

6/5/2021 8:46:23 PM {"service":"democratic-csi","level":"info","message":"new response - driver: FreeNASDriver method: Probe response: {\"ready\":{\"value\":true}}"}

6/5/2021 8:46:23 PM {"service":"democratic-csi","level":"info","message":"new request - driver: FreeNASDriver method: GetPluginInfo call: {\"_events\":{},\"_eventsCount\":1,\"call\":{},\"cancelled\":false,\"metadata\":{\"_internal_repr\":{\"user-agent\":[\"grpc-go/1.34.0\"]},\"flags\":0},\"request\":{}}"}

6/5/2021 8:46:23 PM {"service":"democratic-csi","level":"info","message":"new response - driver: FreeNASDriver method: GetPluginInfo response: {\"name\":\"org.democratic-csi.truenas-iscsi\",\"vendor_version\":\"1.2.0\"}"}

6/5/2021 8:46:23 PM {"service":"democratic-csi","level":"info","message":"new request - driver: FreeNASDriver method: GetPluginCapabilities call: {\"_events\":{},\"_eventsCount\":1,\"call\":{},\"cancelled\":false,\"metadata\":{\"_internal_repr\":{\"user-agent\":[\"grpc-go/1.34.0\"]},\"flags\":0},\"request\":{}}"}

6/5/2021 8:46:23 PM {"service":"democratic-csi","level":"info","message":"new response - driver: FreeNASDriver method: GetPluginCapabilities response: {\"capabilities\":[{\"service\":{\"type\":\"CONTROLLER_SERVICE\"}},{\"volume_expansion\":{\"type\":\"ONLINE\"}}]}"}

6/5/2021 8:46:23 PM {"service":"democratic-csi","level":"info","message":"new request - driver: FreeNASDriver method: ControllerGetCapabilities call: {\"_events\":{},\"_eventsCount\":1,\"call\":{},\"cancelled\":false,\"metadata\":{\"_internal_repr\":{\"user-agent\":[\"grpc-go/1.34.0\"]},\"flags\":0},\"request\":{}}"}

6/5/2021 8:46:23 PM {"service":"democratic-csi","level":"info","message":"new response - driver: FreeNASDriver method: ControllerGetCapabilities response: {\"capabilities\":[{\"rpc\":{\"type\":\"CREATE_DELETE_VOLUME\"}},{\"rpc\":{\"type\":\"LIST_VOLUMES\"}},{\"rpc\":{\"type\":\"GET_CAPACITY\"}},{\"rpc\":{\"type\":\"CREATE_DELETE_SNAPSHOT\"}},{\"rpc\":{\"type\":\"LIST_SNAPSHOTS\"}},{\"rpc\":{\"type\":\"CLONE_VOLUME\"}},{\"rpc\":{\"type\":\"EXPAND_VOLUME\"}}]}"}

6/5/2021 8:46:23 PM {"service":"democratic-csi","level":"info","message":"new response - driver: FreeNASDriver method: Probe response: {\"ready\":{\"value\":true}}"}

6/5/2021 8:46:23 PM {"service":"democratic-csi","level":"info","message":"new request - driver: FreeNASDriver method: ControllerGetCapabilities call: {\"_events\":{},\"_eventsCount\":1,\"call\":{},\"cancelled\":false,\"metadata\":{\"_internal_repr\":{\"user-agent\":[\"grpc-go/1.29.0\"]},\"flags\":0},\"request\":{}}"}

6/5/2021 8:46:23 PM {"service":"democratic-csi","level":"info","message":"new response - driver: FreeNASDriver method: ControllerGetCapabilities response: {\"capabilities\":[{\"rpc\":{\"type\":\"CREATE_DELETE_VOLUME\"}},{\"rpc\":{\"type\":\"LIST_VOLUMES\"}},{\"rpc\":{\"type\":\"GET_CAPACITY\"}},{\"rpc\":{\"type\":\"CREATE_DELETE_SNAPSHOT\"}},{\"rpc\":{\"type\":\"LIST_SNAPSHOTS\"}},{\"rpc\":{\"type\":\"CLONE_VOLUME\"}},{\"rpc\":{\"type\":\"EXPAND_VOLUME\"}}]}"}

6/5/2021 8:46:41 PM {"service":"democratic-csi","level":"info","message":"new request - driver: FreeNASDriver method: Probe call: {\"_events\":{},\"_eventsCount\":1,\"call\":{},\"cancelled\":false,\"metadata\":{\"_internal_repr\":{\"user-agent\":[\"grpc-node/1.24.0-pre1 grpc-c/8.0.0 (linux; chttp2; game)\"]},\"flags\":0},\"request\":{}}"}

6/5/2021 8:46:51 PM {"service":"democratic-csi","level":"info","message":"new response - driver: FreeNASDriver method: Probe response: {\"ready\":{\"value\":true}}"}

6/5/2021 8:47:41 PM {"service":"democratic-csi","level":"info","message":"new request - driver: FreeNASDriver method: Probe call: {\"_events\":{},\"_eventsCount\":1,\"call\":{},\"cancelled\":false,\"metadata\":{\"_internal_repr\":{\"user-agent\":[\"grpc-node/1.24.0-pre1 grpc-c/8.0.0 (linux; chttp2; game)\"]},\"flags\":0},\"request\":{}}"}

6/5/2021 8:48:01 PM {"service":"democratic-csi","level":"error","message":"handler error - driver: FreeNASDriver method: Probe error: Error: Timed out while waiting for handshake"}

6/5/2021 8:48:01 PM { code: 13, message: 'Error: Timed out while waiting for handshake' }

6/5/2021 8:48:41 PM {"service":"democratic-csi","level":"info","message":"new request - driver: FreeNASDriver method: Probe call: {\"_events\":{},\"_eventsCount\":1,\"call\":{},\"cancelled\":false,\"metadata\":{\"_internal_repr\":{\"user-agent\":[\"grpc-node/1.24.0-pre1 grpc-c/8.0.0 (linux; chttp2; game)\"]},\"flags\":0},\"request\":{}}"}

6/5/2021 8:48:53 PM {"service":"democratic-csi","level":"info","message":"new response - driver: FreeNASDriver method: Probe response: {\"ready\":{\"value\":true}}"}

6/5/2021 8:49:41 PM {"service":"democratic-csi","level":"info","message":"new request - driver: FreeNASDriver method: Probe call: {\"_events\":{},\"_eventsCount\":1,\"call\":{},\"cancelled\":false,\"metadata\":{\"_internal_repr\":{\"user-agent\":[\"grpc-node/1.24.0-pre1 grpc-c/8.0.0 (linux; chttp2; game)\"]},\"flags\":0},\"request\":{}}"}

6/5/2021 8:49:53 PM {"service":"democratic-csi","level":"info","message":"new response - driver: FreeNASDriver method: Probe response: {\"ready\":{\"value\":true}}"}

6/5/2021 8:50:41 PM {"service":"democratic-csi","level":"info","message":"new request - driver: FreeNASDriver method: Probe call: {\"_events\":{},\"_eventsCount\":1,\"call\":{},\"cancelled\":false,\"metadata\":{\"_internal_repr\":{\"user-agent\":[\"grpc-node/1.24.0-pre1 grpc-c/8.0.0 (linux; chttp2; game)\"]},\"flags\":0},\"request\":{}}"}

6/5/2021 8:50:43 PM running server shutdown, exit code: SIGTERM

Here are my helm values:

csiDriver:

name: "org.democratic-csi.truenas-iscsi"

driver:

# see the driver-config-secret.yaml template for syntax

existingConfigSecret: democratic-csi-driver-config-iscsi-secret

config:

driver: freenas-iscsi

storageClasses:

- name: csi-truenas-iscsi

defaultClass: false

reclaimPolicy: Delete

volumeBindingMode: Immediate

allowVolumeExpansion: true

parameters:

# for block-based storage can be ext3, ext4, xfs

fsType: xfs

mountOptions: []

# secrets:

# provisioner-secret:

# controller-publish-secret:

# node-stage-secret:

# node-publish-secret:

# controller-expand-secret:

# ## if your cluster supports snapshots you may enable below

# volumeSnapshotClasses: []

# #- name: freenas-nfs-csi

# # parameters:

# # # if true, snapshots will be created with zfs send/receive

# # # detachedSnapshots: "false"

# # secrets:

# # snapshotter-secret:

node:

rbac:

enabled: true

enabled: true

hostNetwork: true

hostIPC: true

kubeletHostPath: /var/lib/kubelet

and here is the secret:

---

apiVersion: v1

kind: Secret

type: Opaque

metadata:

name: democratic-csi-driver-config-iscsi-secret

namespace: democratic-csi

# labels:

# app.kubernetes.io/name: {{ include "democratic-csi.name" . }}

# helm.sh/chart: {{ include "democratic-csi.chart" . }}

# app.kubernetes.io/instance: {{ .Release.Name }}

# app.kubernetes.io/managed-by: {{ .Release.Service }}

stringData:

driver-config-file.yaml: |-

driver: freenas-iscsi

instance_id:

httpConnection:

protocol: http

host: '10.2.2.1'

port: 80

# use only 1 of apiKey or username/password

# if both are present, apiKey is preferred

# apiKey is only available starting in TrueNAS-12

apiKey: 'REDACTED'

# username: csi

# password: ""

allowInsecure: true

# use apiVersion 2 for TrueNAS-12 and up (will work on 11.x in some scenarios as well)

# leave unset for auto-detection

#apiVersion: 2

sshConnection:

host: '10.2.2.1'

port: 22

username: csi

# use either password or key

# password: ""

privateKey: |

-----BEGIN OPENSSH PRIVATE KEY-----

REDACTED

-----END OPENSSH PRIVATE KEY-----

zfs:

cli:

## allow non-root user to sudo

sudoEnabled: true

## leave paths unset for auto-detection

# paths:

# zfs: /usr/local/sbin/zfs

# zpool: /usr/local/sbin/zpool

# sudo: /usr/local/bin/sudo

# chroot: /usr/sbin/chroot

# can be used to set arbitrary values on the dataset/zvol

# can use handlebars templates with the parameters from the storage class/CO

datasetProperties:

"org.freenas:description": "{{ parameters.[csi.storage.k8s.io/pvc/namespace] }}/{{ parameters.[csi.storage.k8s.io/pvc/name] }}"

# total volume name (zvol/<datasetParentName>/<pvc name>) length cannot exceed 63 chars

# https://www.ixsystems.com/documentation/freenas/11.2-U5/storage.html#zfs-zvol-config-opts-tab

# standard volume naming overhead is 46 chars

# datasetParentName should therefore be 17 chars or less

datasetParentName: ssdpool/csi/ixi/v

## do NOT make datasetParentName and detachedSnapshotsDatasetParentName overlap

## they may be siblings, but neither should be nested in the other

detachedSnapshotsDatasetParentName: ssdpool/csi/ixi/s

iscsi:

targetPortal: "10.2.2.1:3260" # specific address for iscsi

targetPortals: []

# leave empty to omit usage of -I with iscsiadm

interface:

nameTemplate: "{{ parameters.[csi.storage.k8s.io/pvc/namespace] }}-{{ parameters.[csi.storage.k8s.io/pvc/name] }}"

namePrefix: csi-

# nameSuffix: "-cluster"

# add as many as needed

targetGroups:

# get the correct ID from the "portal" section in the UI

- targetGroupPortalGroup: 2

# get the correct ID from the "initiators" section in the UI

targetGroupInitiatorGroup: 3

# None, CHAP, or CHAP Mutual

targetGroupAuthType: None

# get the correct ID from the "Authorized Access" section of the UI

# only required if using Chap

targetGroupAuthGroup:

extentInsecureTpc: true

extentXenCompat: false

extentDisablePhysicalBlocksize: true

# 512, 1024, 2048, or 4096,

extentBlocksize: 4096

# "" (let FreeNAS decide, currently defaults to SSD), Unknown, SSD, 5400, 7200, 10000, 15000

extentRpm: "SSD"

# 0-100 (0 == ignore)

extentAvailThreshold: 0

Issue Analytics

- State:

- Created 2 years ago

- Comments:30 (14 by maintainers)

Top Results From Across the Web

Top Results From Across the Web

TrueNAS Scale Release Plan | Page 4

I want to replace my unraid with truenas scale so that I can mount iscsi and nfs shares to vsphere. I realize that...

Read more >SOLVED - Core to Scale Upgrade: NFS & iSCSI Fail

I like living dangerously. All VM's moved to local storage on the hosts. Backups (both) completed sucessfully. So I pulled the trigger

Read more >Spontaneous system crashes with iSCSI LUNs

I have a system that had been running stable on 9.2.1 for over a year, and then I recently upgraded to 9.3.1 and...

Read more >Crash on heavy iSCSI...assume same as post 39433

I have a FreeNAS server in production using iSCSI mounts, that's been running on FreeNAS-9.3-STABLE-201509282017. The FreeNAS server as of ...

Read more >TrueNas Core 12 Nightly Starting iscsi causes crash

I created a 4TB sparse zvol and configured iscsi. When i start the iscsi service the system crashes and reboots.

Read more > Top Related Medium Post

Top Related Medium Post

No results found

Top Related StackOverflow Question

Top Related StackOverflow Question

No results found

Troubleshoot Live Code

Troubleshoot Live Code

Lightrun enables developers to add logs, metrics and snapshots to live code - no restarts or redeploys required.

Start Free Top Related Reddit Thread

Top Related Reddit Thread

No results found

Top Related Hackernoon Post

Top Related Hackernoon Post

No results found

Top Related Tweet

Top Related Tweet

No results found

Top Related Dev.to Post

Top Related Dev.to Post

No results found

Top Related Hashnode Post

Top Related Hashnode Post

No results found

Quick update: The iscsi storageClass and deployment are working just fine. The nfs one is generally functional, but continuously has to restart the controller because it gets a timeout on the liveness probe. I’m going to keep monitoring, but probably won’t muck with the current config until I update truenas when the next version of SCALE releases (next week).

P.S. Just edited the title to be more descriptive if someone is searching through the issues.

I restarted the nfs service with

sudo systemctl restart nfs-ganeshaand things started working. I’m guessing this is some bug on the Truenas SCALE side, since running down the list of systemd services showed a bunch having errored out (likelogrotate).I should probably reboot the system, but I have to reconfigure the networking every time I do so due to another bug…