Getting bad rendered target image

Hi, I’m experimenting with your repository using T-LESS object number 1.

I’ve created my custom cfg file as described in the README and trained an AAE with `ae_train`.

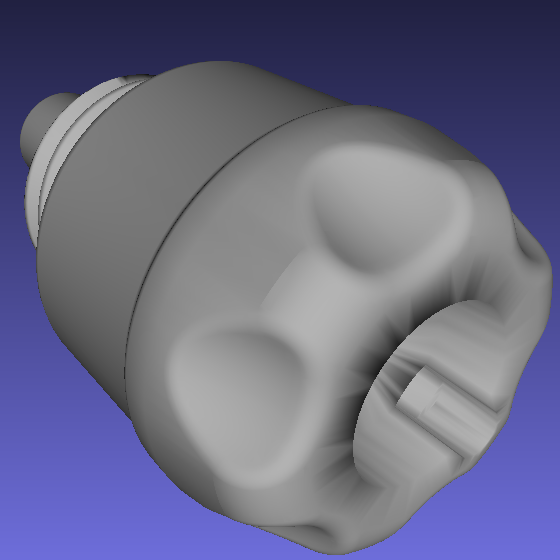

When I look at the checkpoint images that the script writes during training, I see some strange glitches in the target images:

Checkpoint images: `training_images_9999.png`, `training_images_19999.png`, `training_images_29999.png` *(images not available)*

I’m wondering whether this bad rendering is an OpenGL fault or a consequence of the changes I had to make to port the code to Python 3, although they are nothing special:

- `print` → `print()`
- `xrange` → `range`
- In `geometry.py`, line 45, changed `hashed_file_name = hashlib.md5( ''.join(obj_files) + 'load_meshes' + str(recalculate_normals) ).hexdigest() + '.npy'` to `hashed_file_name = hashlib.md5( (''.join(obj_files) + 'load_meshes' + str(recalculate_normals)).encode('utf-8') ).hexdigest() + '.npy'`
- In `geometry.py`, line 70, changed `for i in range(0, N-1, 3):` to `for i in range(0, N-2, 3):`. Maybe this line is causing the problem? I changed it because the loop accesses `vertices[i+2]`, and `N-1` was causing an index out of bounds error.
- In `meshrenderer.py`, in the function `render` (line 83), added a cast to `int()` for `H` and `W` right after the `assert` (somehow their type was float).
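The two `geometry.py` changes can be sanity-checked in isolation. The sketch below is a standalone approximation, not code from the repository (`cache_file_name` and `max_index_read` are hypothetical helpers). It shows that Python 3’s `hashlib.md5` needs bytes, and that with the original `N-1` bound the loop only reads past the end of the array when `N % 3 == 2`. An index error there therefore hints that the vertex list is not a sequence of complete triangles, and switching to `N-2` hides that symptom by silently skipping the trailing vertices.

```python
import hashlib


def cache_file_name(obj_files, recalculate_normals):
    # Python 3's hashlib.md5() only accepts bytes, so encode the joined str first
    key = ''.join(obj_files) + 'load_meshes' + str(recalculate_normals)
    return hashlib.md5(key.encode('utf-8')).hexdigest() + '.npy'


def max_index_read(n_vertices, stop_offset):
    # Highest index touched by
    #     for i in range(0, n_vertices - stop_offset, 3):
    #         ... vertices[i], vertices[i + 1], vertices[i + 2] ...
    starts = range(0, n_vertices - stop_offset, 3)
    return starts[-1] + 2 if len(starts) else None


# Compare the original bound (N - 1) with the modified one (N - 2);
# an index >= n means the loop would run past the end of the array
for n in (9, 10, 11, 12):
    print(n, max_index_read(n, 1), max_index_read(n, 2))
```

For `n = 11`, the original bound reads index 11 (out of range), while `N-2` stops at index 8 and never uses vertices 9 and 10.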

I’m currently working to figure out what is causing this problem, any idea is precious to me… thank you in advance!

EDIT

My config file is the following:

```ini
[Paths]
MODEL_PATH: /home/DATI/insulators/models_cad/obj_01.ply
BACKGROUND_IMAGES_GLOB: /home/DATI/insulators/VOCdevkit/VOC2012/JPEGImages/*.jpg

[Dataset]
MODEL: cad
H: 128
W: 128
C: 3
RADIUS: 700
RENDER_DIMS: (720, 540)
K: [1075.65, 0, 720/2, 0, 1073.90, 540/2, 0, 0, 1]
# Scale vertices to mm
VERTEX_SCALE: 1
ANTIALIASING: 1
PAD_FACTOR: 1.2
CLIP_NEAR: 10
CLIP_FAR: 10000
NOOF_TRAINING_IMGS: 20000
NOOF_BG_IMGS: 15000

[Augmentation]
REALISTIC_OCCLUSION: False
SQUARE_OCCLUSION: False
MAX_REL_OFFSET: 0.20
CODE: Sequential([
    #Sometimes(0.5, PerspectiveTransform(0.05)),
    #Sometimes(0.5, CropAndPad(percent=(-0.05, 0.1))),
    Sometimes(0.5, Affine(scale=(1.0, 1.2))),
    Sometimes(0.5, CoarseDropout( p=0.2, size_percent=0.05) ),
    Sometimes(0.5, GaussianBlur(1.2*np.random.rand())),
    Sometimes(0.5, Add((-25, 25), per_channel=0.3)),
    Sometimes(0.3, Invert(0.2, per_channel=True)),
    Sometimes(0.5, Multiply((0.6, 1.4), per_channel=0.5)),
    Sometimes(0.5, Multiply((0.6, 1.4))),
    Sometimes(0.5, ContrastNormalization((0.5, 2.2), per_channel=0.3))
    ], random_order=False)

[Embedding]
EMBED_BB: True
MIN_N_VIEWS: 2562
NUM_CYCLO: 36

[Network]
BATCH_NORMALIZATION: False
AUXILIARY_MASK: False
VARIATIONAL: 0
LOSS: L2
BOOTSTRAP_RATIO: 4
NORM_REGULARIZE: 0
LATENT_SPACE_SIZE: 128
NUM_FILTER: [128, 256, 512, 512]
STRIDES: [2, 2, 2, 2]
KERNEL_SIZE_ENCODER: 5
KERNEL_SIZE_DECODER: 5

[Training]
OPTIMIZER: Adam
NUM_ITER: 30000
BATCH_SIZE: 64
LEARNING_RATE: 2e-4
SAVE_INTERVAL: 10000

[Queue]
# OPENGL_RENDER_QUEUE_SIZE: 500
NUM_THREADS: 10
QUEUE_SIZE: 50
```
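To double-check how the `[Dataset]` camera entries come out, here is a minimal sketch that reads them with Python’s `configparser`. It assumes (not confirmed in this thread) that values such as `K` and `RENDER_DIMS` are treated as Python expressions and evaluated, which is why `720/2` is allowed; `load_camera` is a hypothetical helper. With these values the principal point falls at the center of the 720×540 render.

```python
import configparser

import numpy as np

# Abbreviated copy of the [Dataset] section posted above
CFG_TEXT = """
[Dataset]
RENDER_DIMS: (720, 540)
K: [1075.65, 0, 720/2, 0, 1073.90, 540/2, 0, 0, 1]
RADIUS: 700
"""


def eval_numeric(expr):
    # Assumption: the value is a Python expression (note the 720/2);
    # builtins are disabled, but eval should still only see trusted local files
    return eval(expr, {"__builtins__": {}}, {})


def load_camera(cfg_text):
    parser = configparser.ConfigParser()
    parser.read_string(cfg_text)
    # K is written as a flat, row-major list of 9 numbers
    K = np.array(eval_numeric(parser.get("Dataset", "K")), dtype=float).reshape(3, 3)
    width, height = eval_numeric(parser.get("Dataset", "RENDER_DIMS"))
    return K, (width, height)


K, dims = load_camera(CFG_TEXT)
print(K)
print(dims)
```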

Issue Analytics

- Created 4 years ago
- Comments: 7 (1 by maintainers)


OK, solved by running `ae_embed.py` after training. Now I’m getting correctly predicted rotations and can move on to the next step of 6D pose estimation, yay!

Hi Martin, thank you for your reply. I had to change that line in `geometry.py` because it raised an “out of bounds” error when reading the list of vertices.

I think I have fixed this by looking at the .ply file I was loading. The file lists the vertices first and then the faces, but the code in `geometry.py` reads the vertex list as if it were already grouped by face. For example, the original code assumes face-ordered vertices like this: `[vertex1_face1, vertex2_face1, vertex3_face1, vertex1_face2, vertex2_face2, vertex3_face2, …]`. But in a normal .ply file you find `[vertex1, vertex2, vertex3, …]`, followed by a description of how the faces are built, referencing the vertices by their index: `[(0, 4, 100), (0, 3, 10), …]`.

So you can imagine that reading a standard .ply file as if its vertices were face-ordered causes the kind of glitches shown in my previous post.

I have changed the way vertices are passed to `compute_normals` in `geometry.py` so that it receives a list of face-ordered vertices, duplicating vertices where necessary.
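The fix described above amounts to expanding an indexed mesh into a “triangle soup”. A minimal NumPy sketch, assuming the vertex and face arrays have already been read from the .ply file; `to_face_ordered` and `flat_normals` are hypothetical names, not the repository’s `compute_normals`, and the normals here are per-face (flat), which may differ from what the repository computes.

```python
import numpy as np


def to_face_ordered(vertices, faces):
    # vertices: (V, 3) positions from the .ply "vertex" element
    # faces:    (F, 3) vertex indices from the .ply "face" element
    # Result: (3F, 3) array [v1_f1, v2_f1, v3_f1, v1_f2, ...]; a vertex shared
    # by several faces is copied once per face
    return np.asarray(vertices, dtype=float)[np.asarray(faces)].reshape(-1, 3)


def flat_normals(face_ordered):
    # One unit normal per triangle (right-hand rule), repeated for its 3 vertices
    tris = face_ordered.reshape(-1, 3, 3)
    n = np.cross(tris[:, 1] - tris[:, 0], tris[:, 2] - tris[:, 0])
    n /= np.linalg.norm(n, axis=1, keepdims=True)
    return np.repeat(n, 3, axis=0)


# Unit square: 4 shared vertices, 2 triangles
vertices = [[0, 0, 0], [1, 0, 0], [1, 1, 0], [0, 1, 0]]
faces = [[0, 1, 2], [0, 2, 3]]
soup = to_face_ordered(vertices, faces)
print(soup.shape)          # (6, 3)
print(flat_normals(soup))  # every row is [0, 0, 1]
```

Reading the raw 4-entry vertex list of this square as if it were face-ordered would yield a single triangle (vertices 0, 1, 2) and drop the rest, which is consistent with the scrambled geometry in the checkpoint images.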

Just to be sure: are you pre-processing the .ply files with some code?

I have another issue, to be honest: I trained an AAE with correctly rendered images, but now I get the same (wrong) prediction for different test poses.

Test image 1, predicted rotation:

```
[[ 1.0000000e+00  0.0000000e+00  0.0000000e+00]
 [ 0.0000000e+00 -1.0000000e+00 -1.2246468e-16]
 [ 0.0000000e+00  1.2246468e-16 -1.0000000e+00]]
```

pred_view 1: *(image not available)*

Test image 2, predicted rotation (same as for test image 1):

```
[[ 1.0000000e+00  0.0000000e+00  0.0000000e+00]
 [ 0.0000000e+00 -1.0000000e+00 -1.2246468e-16]
 [ 0.0000000e+00  1.2246468e-16 -1.0000000e+00]]
```

pred_view 2: *(image not available)*
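The `1.2246468e-16` entries are simply `sin(π)` in floating point, so the predicted matrix is exactly a 180° rotation about the x axis. A quick NumPy check (`rot_x` and `rotation_angle_deg` are illustrative helpers):

```python
import numpy as np

# Matrix reported above for both test images
R_pred = np.array([
    [1.0, 0.0, 0.0],
    [0.0, -1.0, -1.2246468e-16],
    [0.0, 1.2246468e-16, -1.0],
])


def rot_x(theta):
    # Right-handed rotation about the x axis by theta radians
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])


def rotation_angle_deg(R):
    # Rotation angle from the trace: theta = arccos((trace(R) - 1) / 2)
    cos_theta = np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0)
    return np.degrees(np.arccos(cos_theta))


print(np.allclose(R_pred, rot_x(np.pi)))
print(rotation_angle_deg(R_pred))
```

Getting such an exactly “round” rotation for every test image may indicate that the lookup keeps returning one fixed codebook entry rather than a pose that depends on the input.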

I suspect a problem in the codebook, such as a kNN lookup failure. Do you have any idea what could cause this?
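For context on where the lookup can go wrong: in the AAE approach, the rotation is retrieved by comparing the test image’s latent code with a codebook of latent codes computed from rendered views, using cosine similarity. The sketch below is a simplified NumPy stand-in (`nearest_view` is a hypothetical name, not the repository’s API, and the codebook size is arbitrary). Under the illustrative assumption that a codebook which was never filled holds all-zero codes, it returns the same entry for every query, which would be consistent with the symptom above and with the fix of running `ae_embed.py`.

```python
import numpy as np


def nearest_view(z_test, codebook_z):
    # Cosine similarity between one test code (D,) and all codebook codes (N, D)
    z = z_test / (np.linalg.norm(z_test) + 1e-12)
    cb = codebook_z / (np.linalg.norm(codebook_z, axis=1, keepdims=True) + 1e-12)
    sims = cb @ z
    # k = 1: index of the most similar view (argmax picks the first index on ties)
    return int(np.argmax(sims))


rng = np.random.default_rng(0)
latent_dim = 128   # LATENT_SPACE_SIZE from the config
n_views = 1000     # arbitrary size for this illustration

# Populated codebook: a scaled copy of view 7's code still finds view 7
codebook = rng.normal(size=(n_views, latent_dim))
print(nearest_view(3.0 * codebook[7], codebook))

# Codebook that was never filled (all zeros): every query returns view 0
empty = np.zeros((n_views, latent_dim))
print({nearest_view(rng.normal(size=latent_dim), empty) for _ in range(5)})
```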