Device is not actually using GPU

Describe the bug

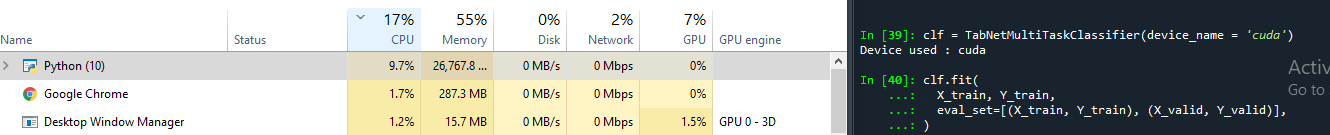

What is the current behavior? I train the model with `device_name='cuda'` (or with the default device setting), expecting it to use the GPU, but it trains on the CPU instead. It prints `Device used : cuda` but doesn't seem to use the GPU.
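A `Device used : cuda` message only reports which device was selected. One way to check whether work actually reaches the GPU is to watch its utilization and memory from outside the training process. The sketch below assumes the NVIDIA driver's `nvidia-smi` tool is installed and on `PATH`; the helper names `parse_gpu_stats` and `query_gpu_stats` are hypothetical.

```python
import subprocess


def parse_gpu_stats(csv_text: str) -> list[tuple[int, int]]:
    """Parse output of
    `nvidia-smi --query-gpu=utilization.gpu,memory.used --format=csv,noheader,nounits`
    into one (utilization %, memory used in MiB) tuple per GPU."""
    stats = []
    for line in csv_text.strip().splitlines():
        util, mem = (field.strip() for field in line.split(","))
        stats.append((int(util), int(mem)))
    return stats


def query_gpu_stats() -> list[tuple[int, int]]:
    """Run nvidia-smi once and return the parsed per-GPU stats."""
    out = subprocess.run(
        [
            "nvidia-smi",
            "--query-gpu=utilization.gpu,memory.used",
            "--format=csv,noheader,nounits",
        ],
        capture_output=True,
        text=True,
        check=True,
    ).stdout
    return parse_gpu_stats(out)
```

Calling `query_gpu_stats()` from a second Python session while `clf.fit(...)` runs should show memory in use on the GPU if the model really is there. Low utilization on its own may simply mean the batches are too small to keep the GPU busy, not that the CPU is doing the training.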

If the current behavior is a bug, please provide the steps to reproduce.

```python
from pytorch_tabnet.multitask import TabNetMultiTaskClassifier
import optuna
import pandas as pd
import numpy as np

import utils  # the reporter's own helper module, not part of pytorch_tabnet

# Fetch the latest round's data and load the training/validation splits (reporter's helpers)
utils.get_latest_round_data(tournament_only=False)
train_data = utils.load_data('training')
valid_data = utils.load_data('validation')

# Drop rows with missing values
train_data = train_data.dropna()
valid_data = valid_data.dropna()

targets, features = utils.get_targets_features(train_data)

# Feature matrices; targets are scaled by 12 and cast to integer class labels
X_train = train_data[features].values
Y_train = (train_data[targets].values * 12).astype('int')
X_valid = valid_data[features].values
Y_valid = (valid_data[targets].values * 12).astype('int')

# Explicitly request the GPU (the default device_name is 'auto')
clf = TabNetMultiTaskClassifier(device_name='cuda')
clf.fit(
    X_train, Y_train,
    eval_set=[(X_train, Y_train), (X_valid, Y_valid)],
)
```
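To confirm where the model's weights actually live, you can inspect the device of a module's parameters. This is a minimal sketch in plain PyTorch with a stand-in model; the helper `param_device` is hypothetical, and the assumption that a fitted pytorch_tabnet model exposes its underlying `torch.nn.Module` as `clf.network` should be checked against the installed version.

```python
import torch


def param_device(module: torch.nn.Module) -> str:
    """Return the device type ('cpu' or 'cuda') of the module's first parameter."""
    return next(module.parameters()).device.type


# Stand-in model; with TabNet you would pass clf.network after fit() (assumption).
device = "cuda" if torch.cuda.is_available() else "cpu"
model = torch.nn.Linear(8, 2).to(device)

print("torch.cuda.is_available():", torch.cuda.is_available())
print("parameters live on:", param_device(model))
```

If `torch.cuda.is_available()` prints `False`, the installed PyTorch build most likely lacks CUDA support or cannot see the driver, and no `device_name` setting will move training onto the GPU.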

Expected behavior

It should train on the GPU.

Screenshots

Other relevant information:

Additional context

Issue Analytics

- Created 2 years ago
- Comments: 10


Yes, like you said it was a bit faster, and I saw the GPU utilization increase to a couple percent.

Yes! It's all good now. Thank you!