Allocating a JsonParser for a byte[] looks inefficient

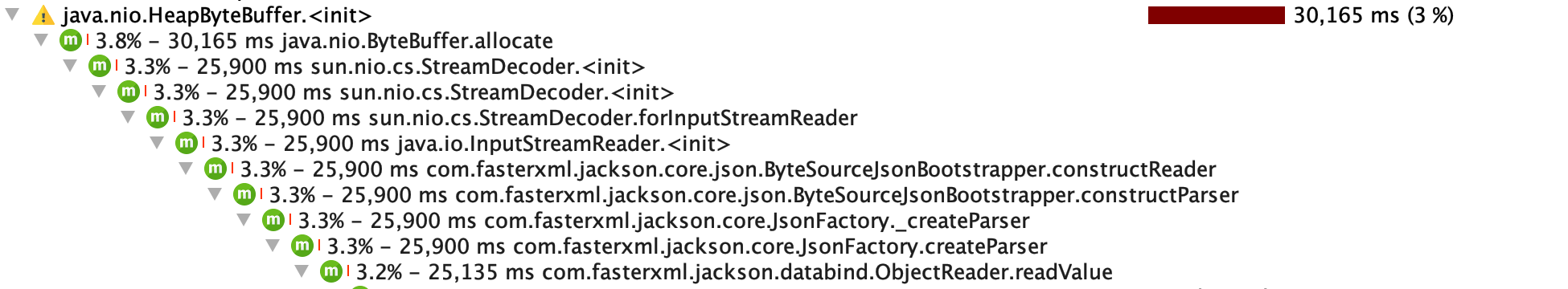

When profiling one of our Kafka consumers, I noticed that a new HeapByteBuffer is allocated for every message. This surprised me, because I expected the buffers to be reused, but it seems that the JsonFactory actually creates a new InputStreamReader for every byte array, and that InputStreamReader uses HeapByteBuffers for its internal buffering.

Is this something Jackson could handle more elegantly by reusing the buffers? There seems to be no obvious way of reusing a StreamDecoder or an InputStreamReader, but since the source is known to be a byte array, there should be a better option.
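Because the whole input is already sitting in a byte[], one JDK-only way to avoid building a fresh InputStreamReader per message is to keep a single CharsetDecoder and a single output CharBuffer per consumer thread and decode each array in one call. This is a minimal sketch, assuming UTF-8 input and single-threaded use; ReusableUtf8Decoder is a hypothetical name, and this is not how Jackson itself handles byte input (as far as I know, its byte-based parser avoids decoding to chars altogether).

```java
import java.nio.ByteBuffer;
import java.nio.CharBuffer;
import java.nio.charset.CharacterCodingException;
import java.nio.charset.CharsetDecoder;
import java.nio.charset.CoderResult;
import java.nio.charset.StandardCharsets;

/** Hypothetical helper: decodes whole UTF-8 byte arrays, reusing one decoder and one buffer. Not thread-safe. */
public final class ReusableUtf8Decoder {
    private final CharsetDecoder decoder = StandardCharsets.UTF_8.newDecoder(); // reports malformed input
    private CharBuffer chars = CharBuffer.allocate(1024);

    /** Returns a view of the decoded text; it is only valid until the next call. */
    public CharBuffer decode(byte[] bytes) throws CharacterCodingException {
        // Worst-case output size; for UTF-8 this is one char per input byte.
        int needed = (int) Math.ceil(bytes.length * (double) decoder.maxCharsPerByte());
        if (chars.capacity() < needed) {
            chars = CharBuffer.allocate(needed); // grows rarely, then gets reused
        }
        chars.clear();
        decoder.reset();
        // ByteBuffer.wrap() only creates a small view object; the bytes are not copied.
        CoderResult result = decoder.decode(ByteBuffer.wrap(bytes), chars, true);
        if (!result.isUnderflow()) {
            result.throwException(); // e.g. MalformedInputException for invalid UTF-8
        }
        result = decoder.flush(chars);
        if (!result.isUnderflow()) {
            result.throwException();
        }
        chars.flip(); // expose [0, decoded length) to the caller
        return chars;
    }

    public static void main(String[] args) throws CharacterCodingException {
        ReusableUtf8Decoder decoder = new ReusableUtf8Decoder();
        for (String key : new String[] {"{\"id\":1}", "{\"id\":2}"}) {
            CharBuffer text = decoder.decode(key.getBytes(StandardCharsets.UTF_8));
            System.out.println(text); // the same CharBuffer instance is reused for every message
        }
    }
}
```

The trade-off is that the returned CharBuffer is shared, so callers must finish with it (or copy it) before decoding the next message.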

Jackson version is 2.10.1.

(The API called in this screenshot is ObjectMapper.readValue(byte[]), but there seems to be no more efficient way to call it, since all calls end up in com.fasterxml.jackson.core.json.ByteSourceJsonBootstrapper.constructReader().)


Issue created 4 years ago; 8 comments (8 by maintainers).


Yeah, that big buffer allocation for readers/streams by the JDK has been a huge overhead for years, and it is the main reason for custom implementations of certain objects that I'd otherwise leave as-is (like the UTF-8 Reader). And I am guessing that your test case uses messages even smaller than 1 kB? (jvm-benchmark, for example, uses rather small JSON documents of about 400 bytes.)
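To illustrate the kind of custom reader mentioned above, here is a minimal Reader that decodes UTF-8 straight from the caller's byte[] into the caller's char[], so no intermediate ByteBuffer is allocated per instance. ByteArrayUtf8Reader is a hypothetical, simplified sketch, not Jackson's actual UTF-8 Reader: its validation is deliberately minimal (overlong encodings, encoded surrogates and code points above U+10FFFF are not rejected).

```java
import java.io.IOException;
import java.io.Reader;

/** Hypothetical, simplified Reader that decodes UTF-8 directly from a byte[] slice. */
public final class ByteArrayUtf8Reader extends Reader {
    private final byte[] buf;
    private final int end;
    private int pos;
    private char pendingLow; // low surrogate held back when the caller's char[] filled up; 0 if none

    public ByteArrayUtf8Reader(byte[] buf, int offset, int length) {
        this.buf = buf;
        this.pos = offset;
        this.end = offset + length;
    }

    @Override
    public int read(char[] cbuf, int off, int len) throws IOException {
        if (len == 0) {
            return 0;
        }
        if (pendingLow == 0 && pos >= end) {
            return -1; // end of input
        }
        int out = off;
        final int outEnd = off + len;
        if (pendingLow != 0) {
            cbuf[out++] = pendingLow;
            pendingLow = 0;
        }
        while (out < outEnd && pos < end) {
            int b = buf[pos++]; // sign-extended: negative means a non-ASCII byte
            if (b >= 0) {
                cbuf[out++] = (char) b; // ASCII fast path
                continue;
            }
            int c;
            int extra;
            if ((b & 0xE0) == 0xC0) {
                c = b & 0x1F; extra = 1;
            } else if ((b & 0xF0) == 0xE0) {
                c = b & 0x0F; extra = 2;
            } else if ((b & 0xF8) == 0xF0) {
                c = b & 0x07; extra = 3;
            } else {
                throw new IOException("Invalid UTF-8 lead byte at offset " + (pos - 1));
            }
            if (pos + extra > end) {
                throw new IOException("Truncated UTF-8 sequence at end of input");
            }
            for (int i = 0; i < extra; i++) {
                int cont = buf[pos++];
                if ((cont & 0xC0) != 0x80) {
                    throw new IOException("Invalid UTF-8 continuation byte at offset " + (pos - 1));
                }
                c = (c << 6) | (cont & 0x3F);
            }
            if (c > 0xFFFF) { // supplementary code point: emit a surrogate pair
                c -= 0x10000;
                cbuf[out++] = (char) (0xD800 + (c >> 10));
                char low = (char) (0xDC00 + (c & 0x3FF));
                if (out < outEnd) {
                    cbuf[out++] = low;
                } else {
                    pendingLow = low; // handed out first on the next read()
                }
            } else {
                cbuf[out++] = (char) c;
            }
        }
        return out - off;
    }

    @Override
    public void close() {
        // Nothing to release: the byte[] belongs to the caller.
    }

    /** Reads everything with a given chunk size; tiny chunks exercise the surrogate hand-off. */
    static String readAll(Reader reader, int chunkSize) throws IOException {
        StringBuilder sb = new StringBuilder();
        char[] chunk = new char[chunkSize];
        int n;
        while ((n = reader.read(chunk, 0, chunk.length)) != -1) {
            sb.append(chunk, 0, n);
        }
        return sb.toString();
    }
}
```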

Yes, they are small. With Kafka you typically use a small key and a large payload, and you look at the key to decide whether you want the message at all. The code that showed up as a hotspot was the code inspecting those JSON-serialized keys, which are about 1 kB each.

For my part, I try to write minimalist JMH benchmarks. In this case that means a very simple, small JSON document as bytes, then one ObjectMapper using a default factory and one with a feature flag disabled, to trigger the two different code paths. It really seems to be the heap ByteBuffer allocation that tips the scale. The Reader path also allocates 8x as much memory.
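A rough way to see the per-message allocation gap without JMH or Jackson is to count bytes allocated by the current thread around two loops: one that builds a new InputStreamReader per message, and one that reuses a single CharsetDecoder and CharBuffer. This is a sketch only, not the JMH benchmark described above: it relies on the HotSpot-specific com.sun.management.ThreadMXBean.getThreadAllocatedBytes, the roughly 1 kB message is an assumed stand-in for the Kafka keys, AllocationComparison is a hypothetical name, and the printed numbers depend on the JDK version, so they should not be expected to match the 8x figure measured through Jackson.

```java
import com.sun.management.ThreadMXBean;
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.Reader;
import java.lang.management.ManagementFactory;
import java.nio.ByteBuffer;
import java.nio.CharBuffer;
import java.nio.charset.CharsetDecoder;
import java.nio.charset.StandardCharsets;

public final class AllocationComparison {
    private static final ThreadMXBean THREADS = (ThreadMXBean) ManagementFactory.getThreadMXBean();

    // Bytes allocated so far by the current thread (HotSpot-specific counter).
    static long allocatedBytes() {
        return THREADS.getThreadAllocatedBytes(Thread.currentThread().getId());
    }

    // Reader path: a new InputStreamReader (with its own internal byte buffer) per message.
    static long readerPath(byte[] msg, int iterations) throws IOException {
        char[] chunk = new char[2048];
        long checksum = 0; // total chars read, returned so the work is observable
        for (int i = 0; i < iterations; i++) {
            try (Reader r = new InputStreamReader(new ByteArrayInputStream(msg), StandardCharsets.UTF_8)) {
                int n;
                while ((n = r.read(chunk)) != -1) {
                    checksum += n;
                }
            }
        }
        return checksum;
    }

    // Direct path: one CharsetDecoder and one CharBuffer reused for every message.
    // Assumes valid UTF-8; the CoderResult is ignored here for brevity.
    static long directPath(byte[] msg, int iterations) {
        CharsetDecoder decoder = StandardCharsets.UTF_8.newDecoder();
        CharBuffer out = CharBuffer.allocate(msg.length); // UTF-8 yields at most one char per byte
        long checksum = 0;
        for (int i = 0; i < iterations; i++) {
            decoder.reset();
            out.clear();
            decoder.decode(ByteBuffer.wrap(msg), out, true);
            decoder.flush(out);
            checksum += out.position();
        }
        return checksum;
    }

    // Returns {readerPathBytesPerMessage, directPathBytesPerMessage} after a warm-up round.
    static long[] measure(byte[] msg, int iterations) throws IOException {
        readerPath(msg, iterations);
        directPath(msg, iterations);
        long t0 = allocatedBytes();
        readerPath(msg, iterations);
        long t1 = allocatedBytes();
        directPath(msg, iterations);
        long t2 = allocatedBytes();
        return new long[] {(t1 - t0) / iterations, (t2 - t1) / iterations};
    }

    public static void main(String[] args) throws IOException {
        // Roughly 1 kB JSON key (assumed shape); String.repeat needs JDK 11+.
        byte[] key = ("{\"id\":42,\"type\":\"order\",\"pad\":\"" + "x".repeat(900) + "\"}")
                .getBytes(StandardCharsets.UTF_8);
        long[] perMessage = measure(key, 10_000);
        System.out.println("reader path bytes/message: " + perMessage[0]);
        System.out.println("direct path bytes/message: " + perMessage[1]);
    }
}
```

For numbers you can trust, the same two loops would belong in a JMH benchmark with its allocation profiler rather than a hand-rolled timer like this one.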