bigtable-hbase-beam causes Dataflow job worker to fail

Problem statement: with the following Maven pom.xml dependency, the Cloud Dataflow job worker fails to start.

```xml
<dependency>
  <groupId>com.google.cloud.bigtable</groupId>
  <artifactId>bigtable-hbase-beam</artifactId>
  <version>1.5.0</version>
</dependency>
```

The workaround is to remove the dependency above from pom.xml, together with the Java code that uses it. Below is the error from Dataflow’s Stackdriver logging:

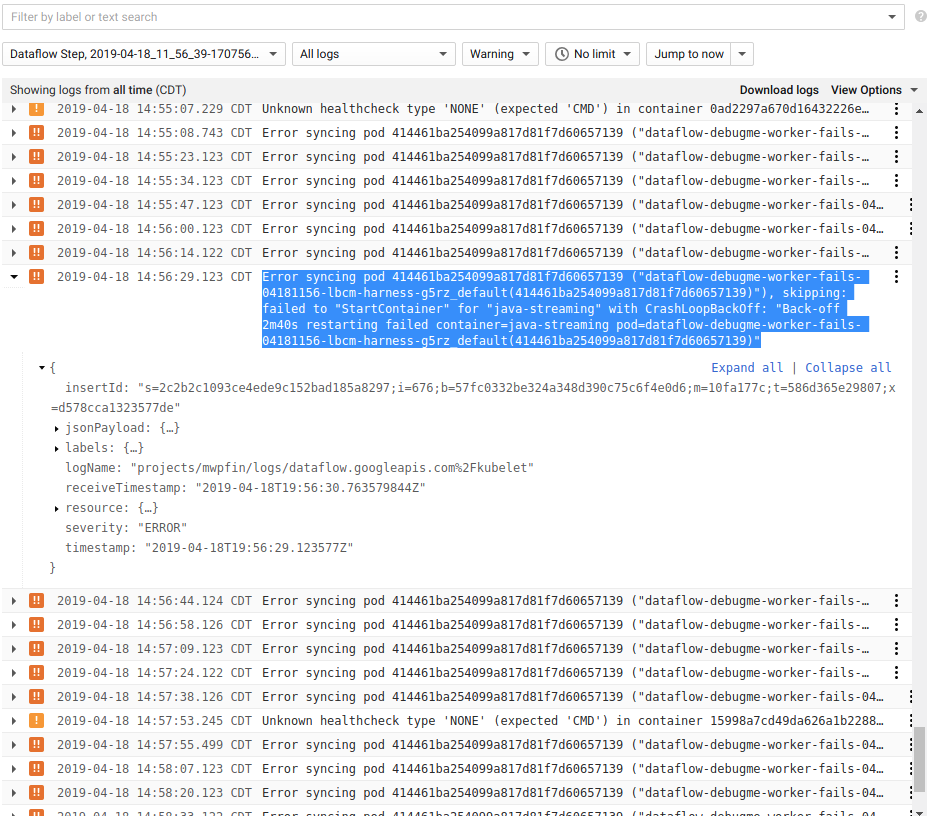

Error syncing pod 414461ba254099a817d81f7d60657139 (“dataflow-debugme-worker-fails-04181156-lbcm-harness-g5rz_default(414461ba254099a817d81f7d60657139)”), skipping: failed to “StartContainer” for “java-streaming” with CrashLoopBackOff: “Back-off 2m40s restarting failed container=java-streaming pod=dataflow-debugme-worker-fails-04181156-lbcm-harness-g5rz_default(414461ba254099a817d81f7d60657139)”

The source code is in bigtable-beam-dataflow-worker-fail.zip.

To reproduce the bug, set the following constants in com/finack/models/Constants.java to your own project ID, Pub/Sub subscription, Bigtable instance, column family, and table:

- PROJECT_ID
- PUBSUB_SUBSCRIPTION_TO_PIPELINE
- BIGTABLE_CLOUD_INSTANCE_ID
- CFAMILY (column family for the table)
- TABLE_ID
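For reference, here is a minimal sketch of what such a Constants class might look like. Only the five field names come from the issue; the placeholder values and the two helpers (subscriptionPath and validate) are hypothetical. The helper builds the full subscription path in the form Beam's PubsubIO expects (projects/<project>/subscriptions/<name>), and the check fails fast if a constant was left blank.

```java
// Hypothetical sketch of com/finack/models/Constants.java. Only the field names come
// from the issue; the placeholder values and both helper methods are assumptions.
// The real file would start with "package com.finack.models;", omitted here so the
// snippet compiles on its own.
final class Constants {
    private Constants() {}

    public static final String PROJECT_ID = "my-project";                        // your GCP project ID
    public static final String PUBSUB_SUBSCRIPTION_TO_PIPELINE = "my-subscription"; // subscription name only
    public static final String BIGTABLE_CLOUD_INSTANCE_ID = "my-instance";       // Bigtable instance ID
    public static final String CFAMILY = "cf";                                   // column family for the table
    public static final String TABLE_ID = "my-table";                            // Bigtable table ID

    /** Full subscription path: projects/{project}/subscriptions/{subscription}. */
    public static String subscriptionPath() {
        return "projects/" + PROJECT_ID + "/subscriptions/" + PUBSUB_SUBSCRIPTION_TO_PIPELINE;
    }

    /** Throws if any constant was left blank, so a misconfigured run fails before a job starts. */
    public static void validate() {
        String[][] fields = {
            {"PROJECT_ID", PROJECT_ID},
            {"PUBSUB_SUBSCRIPTION_TO_PIPELINE", PUBSUB_SUBSCRIPTION_TO_PIPELINE},
            {"BIGTABLE_CLOUD_INSTANCE_ID", BIGTABLE_CLOUD_INSTANCE_ID},
            {"CFAMILY", CFAMILY},
            {"TABLE_ID", TABLE_ID},
        };
        for (String[] field : fields) {
            if (field[1] == null || field[1].trim().isEmpty()) {
                throw new IllegalStateException(field[0] + " must be set in Constants.java");
            }
        }
    }
}
```

Calling Constants.validate() at the top of the pipeline's main method would surface a missing value locally instead of in a deployed job.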

Create the Bigtable table and the Pub/Sub subscription named by the fields above, then run the pipeline locally:

```shell
mvn exec:java -Dexec.mainClass=com.finack.app.FinackPipeline -Dexec.args="--project=mwpfin --runner=DirectRunner --mode=debug"
```

The --mode=debug flag is optional; without it, console output is less verbose.
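The issue does not show how FinackPipeline reads the custom --mode flag. In a Beam pipeline such a flag is usually declared as a getter/setter pair on an interface extending PipelineOptions, since strict argument parsing rejects undeclared options. The sketch below sidesteps Beam and uses plain string parsing; the ModeFlag class and its methods are hypothetical and only illustrate how a debug switch could be derived from the arguments that exec.args passes in.

```java
// Hypothetical helper: the issue only says --mode=debug makes console output more verbose.
// How FinackPipeline really reads the flag is not shown; this is plain string parsing.
final class ModeFlag {
    private ModeFlag() {}

    private static final String PREFIX = "--mode=";

    /** Returns the value of the last --mode=... argument, or defaultMode if none is given. */
    static String mode(String[] args, String defaultMode) {
        String mode = defaultMode;
        for (String arg : args) {
            if (arg.startsWith(PREFIX)) {
                mode = arg.substring(PREFIX.length());
            }
        }
        return mode;
    }

    /** True only when the run was started with --mode=debug. */
    static boolean isDebug(String[] args) {
        return "debug".equals(mode(args, ""));
    }
}
```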

If the local runner starts without errors, publish to the topic attached to the subscription and verify that messages reach Bigtable:

- Download the publish.py module.
- Edit the first two lines of publish.py to set your project ID and topic.
- Each run publishes one Pub/Sub message containing the current timestamp.
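As a rough Java counterpart to what each publish.py run is described as producing, the sketch below builds the full topic name and a message body carrying the current time. The JSON payload shape and the PublishSketch class are assumptions, since publish.py's actual format is not shown; actually sending the message would go through the Pub/Sub client library's Publisher, which is left out so the sketch compiles with the JDK alone.

```java
import java.time.Instant;

// Hypothetical sketch: the payload shape is an assumption, not publish.py's real format.
final class PublishSketch {
    private PublishSketch() {}

    /** Full topic resource name: projects/{project}/topics/{topic}. */
    static String topicPath(String projectId, String topicId) {
        return "projects/" + projectId + "/topics/" + topicId;
    }

    /** Payload with an ISO-8601 UTC timestamp, e.g. {"timestamp":"2018-04-18T11:56:00Z"}. */
    static String timestampPayload(Instant now) {
        return "{\"timestamp\":\"" + now + "\"}";
    }

    public static void main(String[] args) {
        // Placeholder project and topic names; replace with your own.
        System.out.println(topicPath("my-project", "my-topic"));
        System.out.println(timestampPayload(Instant.now()));
    }
}
```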

Messages should now be written to Bigtable. Stop the local runner and run the pipeline with DataflowRunner, setting --stagingLocation to a Cloud Storage path:

```shell
mvn exec:java -Dexec.mainClass=com.finack.app.FinackPipeline -Dexec.args="--project=mwpfin --stagingLocation=gs://tmp/staging/ --runner=DataflowRunner --mode=dataflow"
```
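Dataflow stages the pipeline's files in Cloud Storage, so the --stagingLocation value needs to be a gs://bucket/path URI in a bucket you can write to (the gs://tmp/staging/ above should be replaced with your own). A small pre-flight check like the hypothetical one below only validates the shape of the string; it does not confirm that the bucket exists or that you have access to it.

```java
// Hypothetical pre-flight check for --stagingLocation; checks the string shape only.
final class StagingLocation {
    private StagingLocation() {}

    private static final String PREFIX = "gs://";

    /** Returns the bucket name, or throws if the value is not a gs://bucket/... path. */
    static String bucketOf(String location) {
        if (location == null || !location.startsWith(PREFIX)) {
            throw new IllegalArgumentException("--stagingLocation must start with gs://, got: " + location);
        }
        String rest = location.substring(PREFIX.length());
        int slash = rest.indexOf('/');
        String bucket = slash < 0 ? rest : rest.substring(0, slash);
        if (bucket.isEmpty()) {
            throw new IllegalArgumentException("--stagingLocation has no bucket name: " + location);
        }
        return bucket;
    }
}
```

String parsing is used instead of java.net.URI because URI host parsing rejects underscores, which are allowed in bucket names.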

Observe the error in Stackdriver logging.

The job appears to be running but does not write to Bigtable. I confirmed that the Bigtable dependency is the cause: the same code without that dependency, writing with System.out.println instead of to Bigtable, does not produce the "Error syncing pod" errors.


I have not been able to access the Compute Engine node or the failed pod to debug further, and I can’t find documentation that shows how, so it may not be possible. Are you able to reproduce this?

Issue details: created 4 years ago; 7 comments (4 by maintainers).


Try adding this to your bigtable-hbase-beam dependency:

That worked for us, and it should work for you as well, even with the 1.5.0 release.

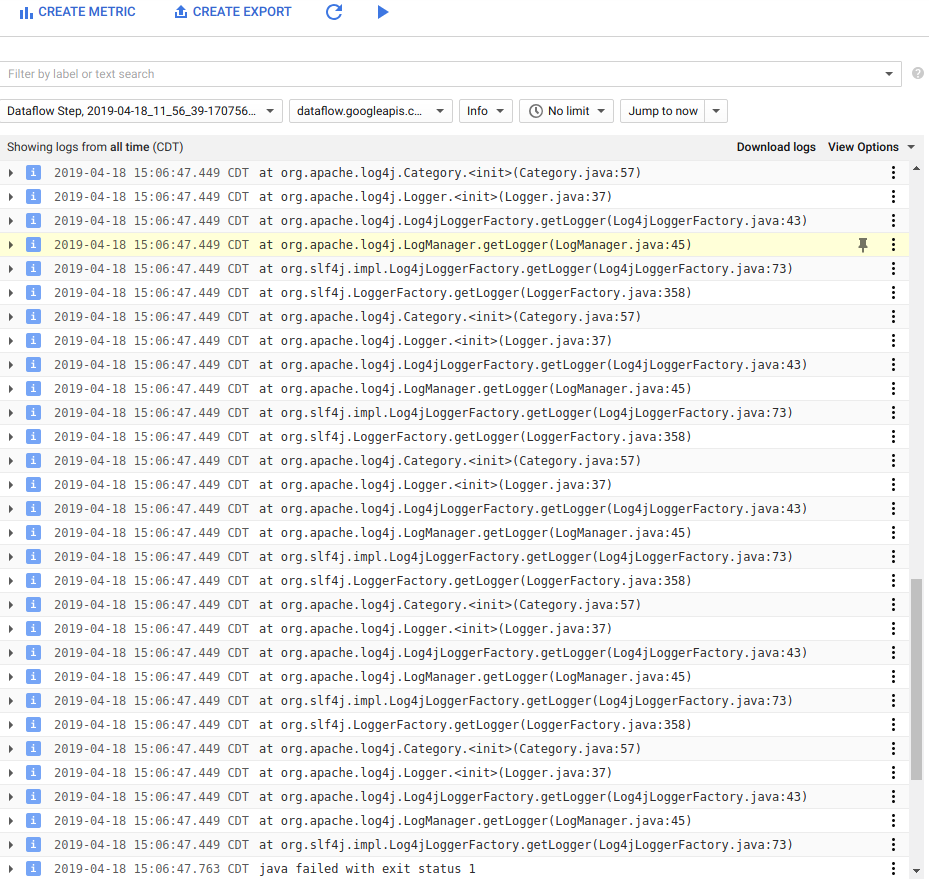

I found the fix to enable Stackdriver logging: replace

with

On behalf of Maven Wave Partners (Google Cloud North America service of 2018), we thank @sduskis.