RFC: Add support for Performance Budgets

Goal: Let’s make performance budgets front-and-center in your developer workflow

Performance budgets enable shared enthusiasm for keeping a site’s user experience within the constraints needed to keep it fast. They usher in a culture of accountability that enables stakeholders to weigh the impact of each change to a site on user-centric metrics.

If you’re using Lighthouse locally (via DevTools) or in CI, it should be clear when you’re stepping outside your team’s agreed-upon perf budgets.

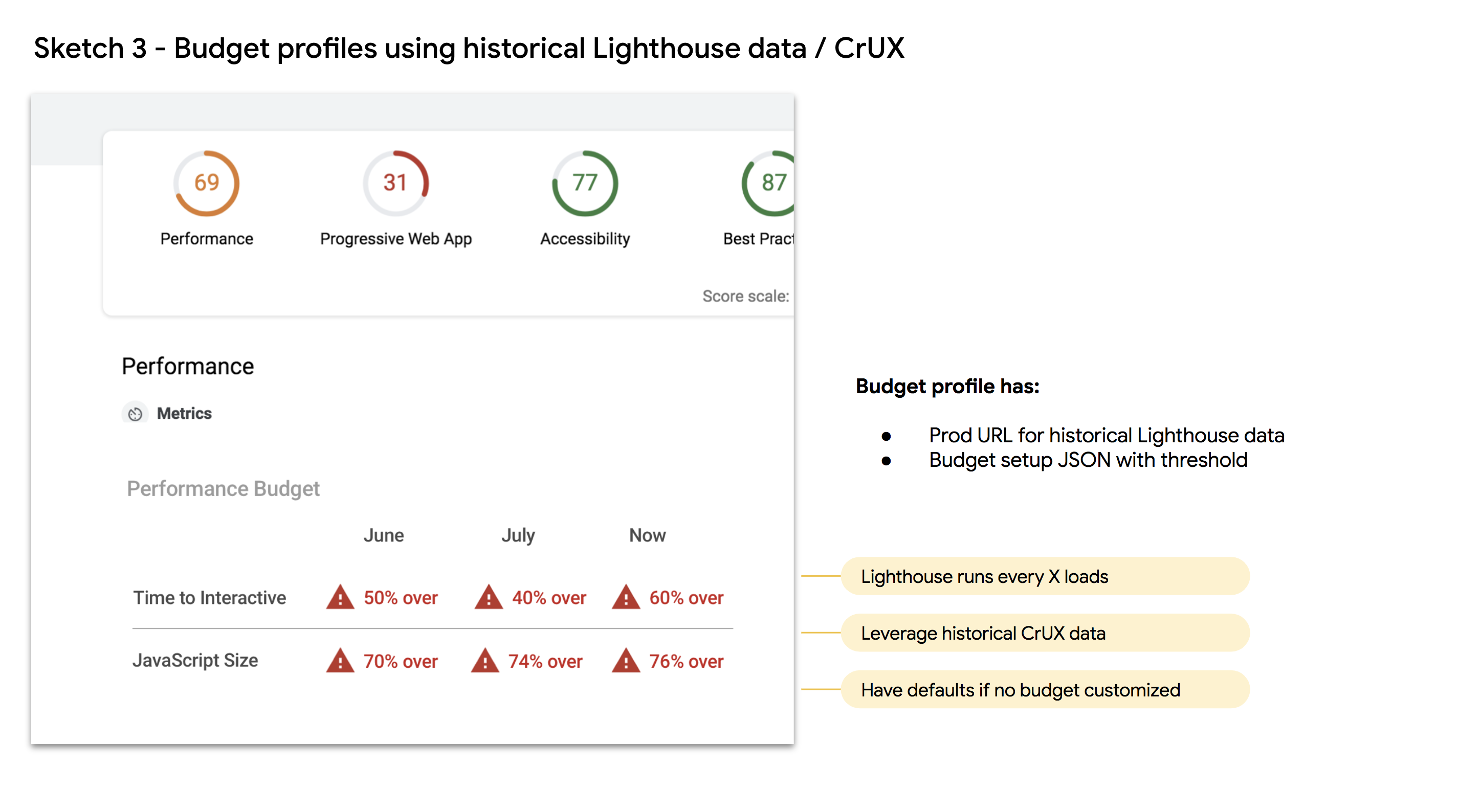

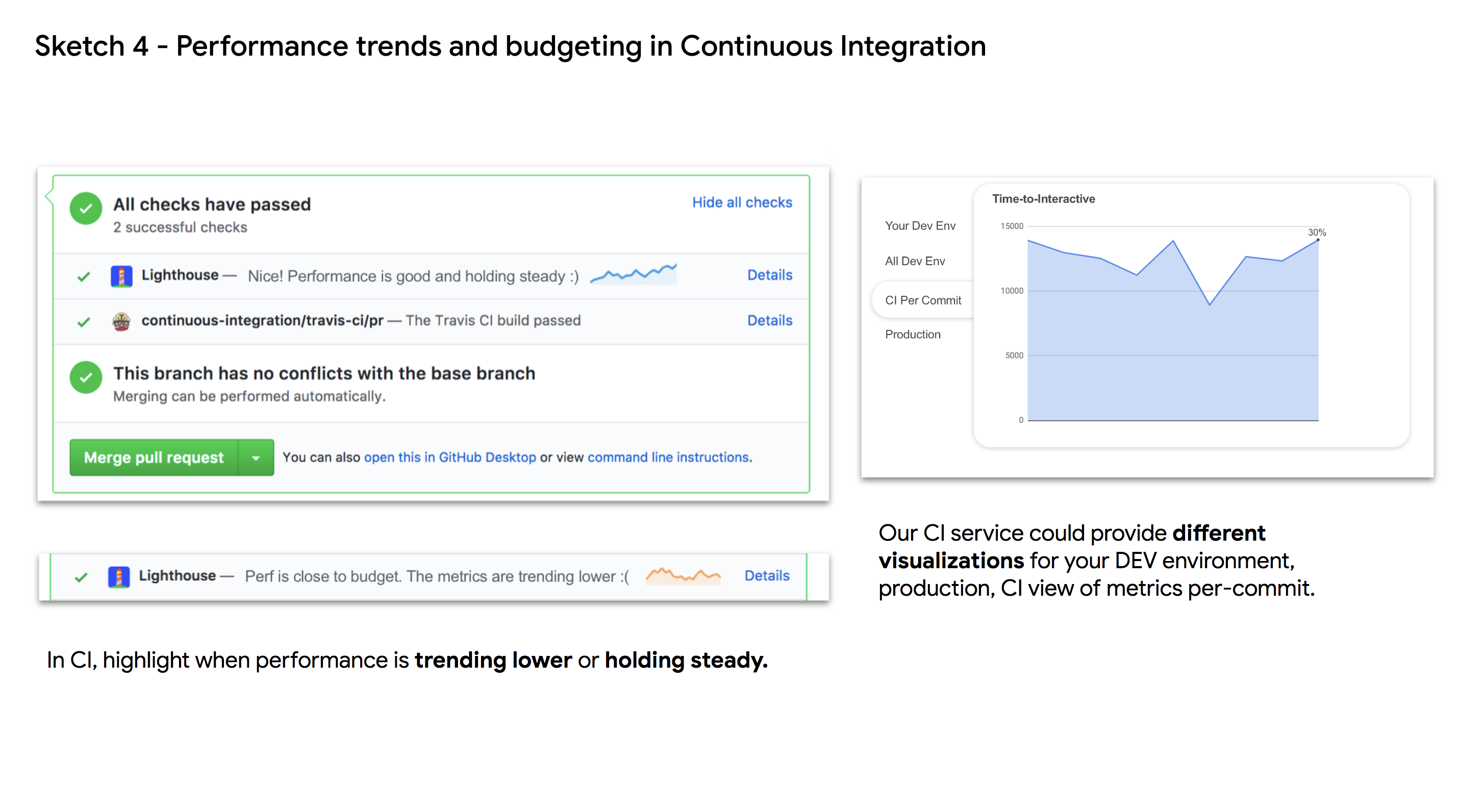

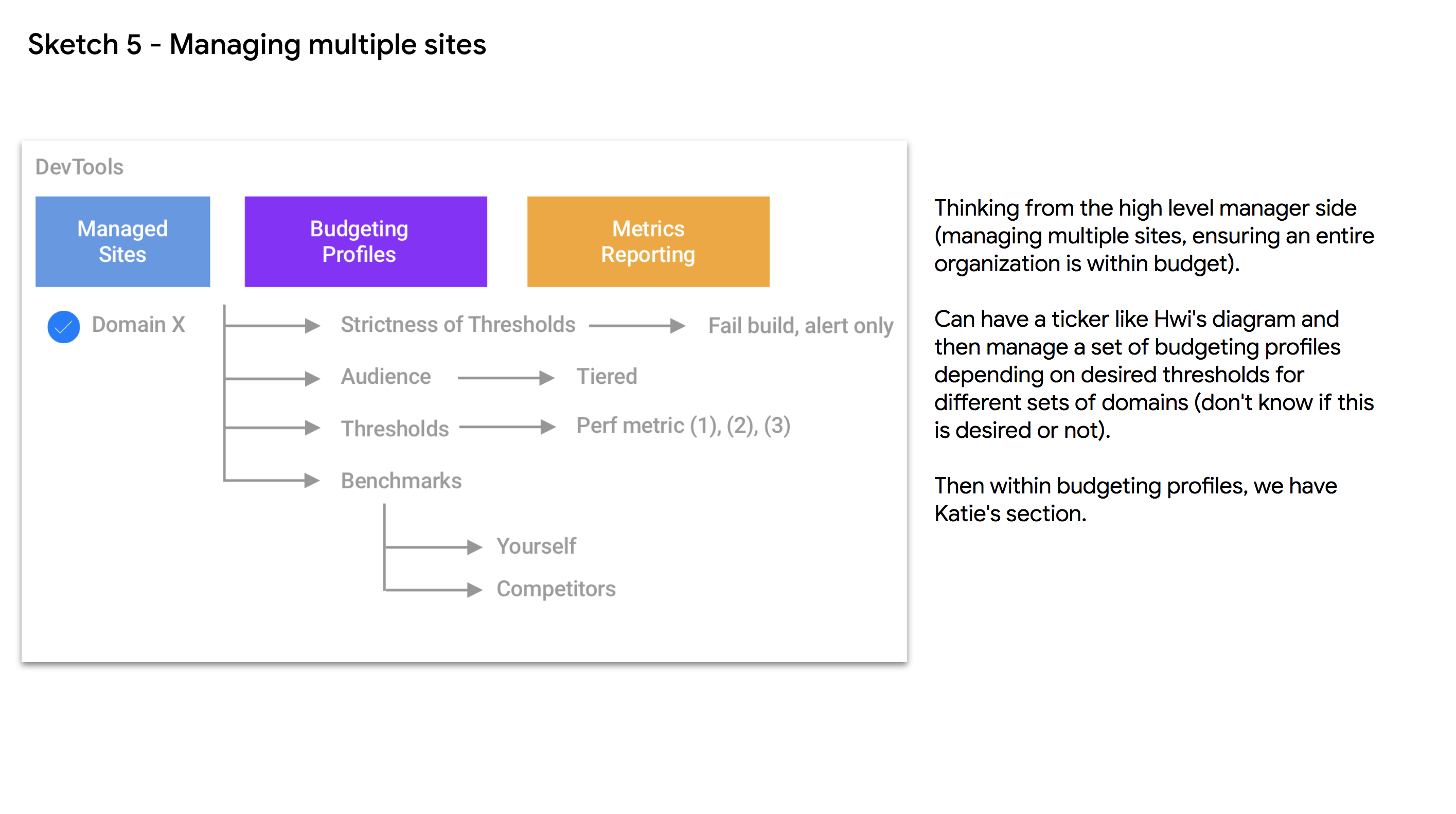

UX mocks we’re evaluating

See the complete set of UX mocks

Setting budgets

A budget can be set for one or more metrics and will tell the team that the metric can’t go above or below a certain amount.

Referencing work by Tim Kadlec, metrics for perf budgets can include:

- Milestone timings: timings based on the user experience of loading a page (e.g. Time-to-Interactive). You’ll often want to pair several milestone timings to accurately represent the complete story during page load.

- Quantity-based metrics: based on raw values (e.g. weight of JavaScript in KB/MB). These are focused on the browser experience.

- Rule-based metrics: scores generated by tools such as Lighthouse or WebPageTest. Often, a single number or series to grade your site.

Teams who incorporate budgets into their workflow will often have CI warn or error a build if a PR regresses performance.

Budgets could vary by whether you’re in production or dev, target device class (desktop/mobile/tablet) or network conditions. We should evaluate to what extent developers will need/want to differentiate between these considerations when setting a budget.

Some examples of budgets

- Our home page must load and get interactive in < 5s on slow 3G/Moto G4 (lab)

- Our product page must ship less than 150KB of JavaScript on mobile

- Our search page must include less than 2MB of images on desktop

Budgets can be more or less specific and the thresholds applied can vary based on target networks and devices.

While Lighthouse already provides some default thresholds for metrics like Time-to-Interactive or large JavaScript bundles, a budgeting feature enables teams to adapt budgets to fit their own needs.

Where should budgets be incorporated? (strawman)

Please note this is just a rough sketch for what an integration could look like

Potential touch points for rolling out a performance budgeting solution:

- Chrome DevTools: Support for reading a budget.js/json file for a project. Workspaces could detect the presence of such a file and ask you if you want it applied for the project. Highlight where useful that budgets have been crossed (e.g. Lighthouse, Network panel)

- Lighthouse: We have many options here, some of which are mocked out lower down. One is setting a budget via a config file (per above). Your Lighthouse report highlights any time metrics/resource sizes cross that budget.

- Lighthouse CI: Reads a provided budget.json/budgets.js and fails the build when the supplied budgets are crossed (e.g. TTI < 5s, total JS < 170KB). Work towards this being easy to adopt for use with Travis/GitHub projects.

- Third-party tooling?: Encourage adoption of budget.js/json in popular tools (webpack, SpeedCurve, Calibre, framework tooling, CMS)

- Potentially in RUM dashboards, although we might want to consider what this means… e.g. TTI vs FID budgets

Defining a performance budget

Ultimately, we should leave this up to teams but give them some strong defaults.

Walking back from Alex Russell’s “Can You Afford It?: Real-world performance budgets”, this may be:

- Time-To-Interactive < 5s on Slow 3G on a Moto G4 (~4-5x CPU throttling)

- JavaScript budget of < 170KB if targeting mobile.

- Budgets for other resources can be drawn from a total page weight target. If a page cannot be larger than 200KB, your budget for images, JS, CSS, etc will need to fit in.
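As a rough illustration of drawing per-resource budgets from a total page weight target, the sketch below splits a 200KB total across resource types. The `allocateBudget` helper and the percentage shares are hypothetical and chosen only for illustration; real shares would depend on the site.

```javascript
// Hypothetical helper: split a total page weight target (in KB) across
// resource types according to assumed percentage shares.
function allocateBudget(totalKB, shares) {
  const totalShare = Object.values(shares).reduce((sum, s) => sum + s, 0);
  if (totalShare !== 100) {
    throw new Error(`Shares must sum to 100, got ${totalShare}`);
  }
  const budget = {};
  for (const [type, share] of Object.entries(shares)) {
    budget[type] = (totalKB * share) / 100;
  }
  return budget;
}

// Assumed split for illustration only.
const pageBudget = allocateBudget(200, {
  javascript: 40,
  images: 35,
  css: 15,
  other: 10,
});
console.log(pageBudget);
```

Validating that the shares sum to 100 keeps the per-resource budgets from silently exceeding the overall target.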

Budget targets and the resource constraints that drop out of them will heavily depend on who your end users are. If you’re attempting to be interactive pretty quickly on a low-mid end device, you can’t be shipping 5MB of JavaScript.

budgets.js/budgets.json

If we were to opt for a budgets.js/json style configuration file, this could look something like the following (strawman):

    // Strawman: one budget entry per preset.
    // Timings are assumed to be in milliseconds and sizes in KB.
    module.exports = [
      {
        "preset": "mobile-slow-3g",
        "metrics": {
          "time-to-interactive": {
            "warn": ">5000",
            "error": ">6000"
          },
          "first-contentful-paint": {
            "warn": ">2000",
            "error": ">3000"
          }
        },
        "sizes": {
          "javascript": {
            "warn": ">170",
            "error": ">300"
          },
          "images": {
            "warn": ">500",
            "error": ">600"
          }
        }
      },
      {
        "preset": "desktop-wifi",
        "metrics": {
          "time-to-interactive": {
            "warn": ">3000",
            "error": ">4000"
          },
          "first-contentful-paint": {
            "warn": ">1000",
            "error": ">2000"
          }
        },
        "sizes": {
          "javascript": {
            "warn": ">700",
            "error": ">800"
          },
          "images": {
            "warn": ">1200",
            "error": ">1800"
          }
        }
      }
    ];

However, we should be careful not to tie ourselves too tightly to this as variance/thresholds may change how we think about configuration. Paul and Patrick have done some great research about presets and we could lean more into that as needed.
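To make the strawman more concrete, here is a minimal sketch of how a tool might evaluate one budget entry against measured values. It is not how Lighthouse works: `parseThreshold`, `checkValue`, `evaluateBudget` and the shape of the `measured` object are all hypothetical, and only the `>` and `<` prefixes are handled.

```javascript
// Parse a threshold string such as ">5000" into a comparison function.
// Only the ">" and "<" prefixes are handled here (an assumption).
function parseThreshold(expr) {
  const match = /^([<>])\s*(\d+(?:\.\d+)?)$/.exec(expr);
  if (!match) throw new Error(`Unsupported threshold: ${expr}`);
  const limit = Number(match[2]);
  return match[1] === '>' ? (v) => v > limit : (v) => v < limit;
}

// Return "error", "warn", or "pass" for one measured value.
function checkValue(value, rule) {
  if (rule.error && parseThreshold(rule.error)(value)) return 'error';
  if (rule.warn && parseThreshold(rule.warn)(value)) return 'warn';
  return 'pass';
}

// Evaluate every metric and size in one budget entry against measurements.
function evaluateBudget(budget, measured) {
  const results = {};
  for (const group of ['metrics', 'sizes']) {
    for (const [name, rule] of Object.entries(budget[group] || {})) {
      const value = (measured[group] || {})[name];
      if (value === undefined) continue; // nothing measured for this entry
      results[name] = checkValue(value, rule);
    }
  }
  return results;
}

const mobileBudget = {
  preset: 'mobile-slow-3g',
  metrics: { 'time-to-interactive': { warn: '>5000', error: '>6000' } },
  sizes: { javascript: { warn: '>170', error: '>300' } },
};
// Hypothetical measurements: TTI in ms, JavaScript size in KB.
const measured = {
  metrics: { 'time-to-interactive': 5400 },
  sizes: { javascript: 320 },
};

const results = evaluateBudget(mobileBudget, measured);
console.log(results);

// A CI wrapper could fail the build when any entry is "error".
const shouldFailBuild = Object.values(results).includes('error');
```

Checking `error` before `warn` means a value that crosses both thresholds is reported at the more severe level.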

Metrics threshold considerations

Lighthouse runs may vary, especially across different machines.

As we explore performance budgeting, we should consider what impact this variance may have on how users set the thresholds they use for budgeting. Some options that came up in conversations with projects like lighthouse-thresholds:

Option 1: Multiple LH runs (e.g. “runs”: 3 in config)

Pros

- Easy to implement.

- Easy to understand what’s occurring for consumers.

- Could calculate the Median Absolute Deviation, accounting for outliers.

Cons

- Very small (non-significant) sample size.

- More likely to be viewed as failing on network issues rather than site-specific issues (i.e. the I’ll-retry-this-build-until-it-works solution).

- Multiple runs on the same machine will still suffer from the same network problems.

- CPU and time in CI environments (fought so hard for low build times, shame to let that increase significantly)
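For Option 1, a small sketch of summarising a handful of runs with the median and the median absolute deviation could look like the following. The run values are made up, and `median` and `medianAbsoluteDeviation` are illustrative helpers rather than part of any existing tool.

```javascript
// Median of a list of numbers (does not mutate the input).
function median(values) {
  if (values.length === 0) throw new Error('median of empty list');
  const sorted = [...values].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 1
    ? sorted[mid]
    : (sorted[mid - 1] + sorted[mid]) / 2;
}

// Median of the absolute distances from the median; robust to outliers.
function medianAbsoluteDeviation(values) {
  const m = median(values);
  return median(values.map((v) => Math.abs(v - m)));
}

// Hypothetical TTI values (ms) from "runs": 3, with one slow outlier.
const ttiRuns = [4800, 5100, 7900];
const ttiMedian = median(ttiRuns);
const ttiMad = medianAbsoluteDeviation(ttiRuns);
console.log({ ttiMedian, ttiMad });
```

Comparing the median rather than the mean against the budget keeps a single slow run from failing the build on its own, though three samples remain a very small basis for any statistic.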

Option 2: Define thresholds in ranges

e.g. “first-contentful-paint”: { “threshold”: 1000, “deviation”: “15%” }

Pros

- Results could be compared with user-defined deviation allowances.

- Results could fail if a result has deviated from the budget by more than x%.

Cons

- Consumers are unlikely to want to set both an upper & lower bound (eg. why would it matter if TTI was really low?).

- A range definition could lead to a perception of unreliability.
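A minimal sketch of Option 2, assuming the `threshold`/`deviation` shape from the example above. Reflecting the first con, this version applies the allowance only as an upper bound; that choice, and the helper names `parsePercent` and `exceedsAllowance`, are assumptions for illustration.

```javascript
// Parse a percentage string such as "15%" into a fraction (0.15).
function parsePercent(text) {
  const match = /^(\d+(?:\.\d+)?)%$/.exec(text);
  if (!match) throw new Error(`Invalid percentage: ${text}`);
  return Number(match[1]) / 100;
}

// True only when the value exceeds the threshold by more than the
// allowed deviation. Values below the threshold never fail.
function exceedsAllowance(value, { threshold, deviation }) {
  const upperBound = threshold * (1 + parsePercent(deviation));
  return value > upperBound;
}

const fcpRule = { threshold: 1000, deviation: '15%' };
console.log(exceedsAllowance(1100, fcpRule)); // within the 15% allowance
console.log(exceedsAllowance(1200, fcpRule)); // beyond the 15% allowance
```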

Option 3: Generate measurement data and commit that to code

e.g. A user runs lighthouse-thresholds measure, which triggers 10 or more local LH runs and saves the results/data to a file. That file could then be committed and used as the baseline that runs in CI/PRs are compared against. If budgets are updated, so is the generated file.

Pros

- Allows for a large sample size of measurements.

- More accurate calculation of Mean Absolute Deviation.

- Opportunity to calculate and use standard deviations.

- Site is measured and budgeted against real data.

- Allows for more complicated statistical analysis like a Mean-Variance Analysis.

- This file would be a lot like test snapshot files, so devs are already used to seeing things like this.

- In the absence of this file it could just revert to doing a regular threshold check.

Cons

- Would take more time to implement.

- More complicated to communicate.

- Requires an accurate production run on a local machine (or elsewhere) - though could just be run against actual production locally.

- Another file to maintain and keep in source code along with the budget file.
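One way Option 3 could work, sketched under assumptions: the committed file holds raw samples per metric, and a CI run is flagged when it lands more than a chosen number of standard deviations above the baseline mean. The file shape, the helper names and the default of 2 standard deviations are all hypothetical.

```javascript
function mean(values) {
  return values.reduce((sum, v) => sum + v, 0) / values.length;
}

// Sample standard deviation (n - 1 in the denominator).
function standardDeviation(values) {
  const m = mean(values);
  const variance =
    values.reduce((sum, v) => sum + (v - m) ** 2, 0) / (values.length - 1);
  return Math.sqrt(variance);
}

// Flag a CI measurement that is more than `maxSigma` standard deviations
// above the baseline mean. The default of 2 is an arbitrary assumption.
function regressesAgainstBaseline(value, baselineSamples, maxSigma = 2) {
  const limit =
    mean(baselineSamples) + maxSigma * standardDeviation(baselineSamples);
  return value > limit;
}

// Hypothetical contents of a committed baseline file: 10 local TTI runs (ms).
const baseline = {
  'time-to-interactive': [
    4900, 5000, 5100, 4950, 5050, 5000, 4900, 5100, 5000, 5000,
  ],
};

const ttiSamples = baseline['time-to-interactive'];
console.log(regressesAgainstBaseline(5100, ttiSamples)); // inside normal spread
console.log(regressesAgainstBaseline(5400, ttiSamples)); // well above baseline
```

Because the limit is derived from the observed spread, noisy metrics automatically get a wider allowance than stable ones.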

Option 4: Distributed runs, e.g. LH is run on multiple machines multiple times

Pros

- Much more accurate performance representation.

- Network issues are eliminated (or at least reduced).

- Much more likely to be seen as reliable

Cons

- Difficult to implement

- Lots of http requests for a distributed solution = slow build?

- Resource intensive, possibly expensive

- CI machines have gigabit networks, performance is likely a lot better than real-world - does it matter?

Option 5: Be smarter about how the metrics are computed, e.g. run LH a small number of times but be smarter about how that data is measured against thresholds.

Pros

- Can more accurately filter outliers.

- Can more accurately create a measure of Statistical Dispersion

Cons

- More difficult to communicate to consumers.

- Still such a small sample size.

- Statistics aren’t magic.

How is this beneficial to Lighthouse?

This would allow Lighthouse to reduce the friction for developer adoption of performance budgets, helping more sites hit a decent Lighthouse performance score.

Are you willing to work on this yourself?

Yep. I’m happy to own overall technical direction for budgeting and have asked @khempenius (implementation), @developit (framework integrations) and @housseindjirdeh (advisory) to work on this too.

Existing solutions supporting performance budgeting

- SpeedCurve’s perf budgeting and alerts

- Calibre supports performance budgeting

- Performance budget builder by Brad Frost

- Lighthouse CI with target scores

- Lighthouse Thresholds - (pass or fail based on thresholds for audit categories)

- pwmetrics by Paul Irish

- Webpack performance budgets feature

- bundlesize for tracking JavaScript bundle sizes

- PerformanceBudget.io

Issue Analytics

- State:

- Created 5 years ago

- Reactions:42

- Comments:19 (11 by maintainers)

FINALLY had a look at this—super excited about it!

Echoing @housseindjirdeh, I love the idea of budgets.js/json becoming something that could be used across other tools.

I like this too. Will likely lead to more people using performance budgets (and budgets.json). It will also help provide some general awareness and a starting point for folks.

I may be overthinking it, but couldn’t threshold accomplish the same thing as warn/error? I’m picturing folks setting a budget and then LH warns if it’s between the budget and the threshold and errors if it exceeds the threshold.

This is exciting! 🎉🎉🎉

Some initial thoughts (feel free to ignore):

budgets.js/json adoption with other tools.

budgets.json file to set performance budgets! Click here to see more information.”