Insanely slow data reading from Google Drive

Describe the current behavior:

I’m reading data stored as pairs of .png/.pkl files.

The problem is that the read speed is sometimes around 300 samples per second (this is the expected rate; I almost always get it in Colab, and even on a local machine with an HDD), but sometimes it drops to 1 sample per second. That is a 300x slowdown, which is of course not expected behaviour.

For future readers: I think this is about caching. I found a little trick that prevents the slow loading. I deleted the whole unpacked dataset and then ran !unzip dataset.zip again. After that the data was cached and started to load pretty fast!
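The same workaround can be scripted from Python instead of the `!unzip` shell command. This is only a minimal sketch: the function name `reextract_dataset` and the paths in the usage line are hypothetical, and it assumes the archive should be unpacked into a single target folder.

```python
import shutil
import zipfile
from pathlib import Path


def reextract_dataset(archive_path, target_dir):
    """Delete a previously unpacked dataset and extract the archive again."""
    archive_path = Path(archive_path)
    target_dir = Path(target_dir)

    # Remove the stale unpacked copy, if there is one.
    if target_dir.exists():
        shutil.rmtree(target_dir)
    target_dir.mkdir(parents=True)

    # Extract every member of the archive into the fresh folder.
    with zipfile.ZipFile(archive_path) as archive:
        archive.extractall(target_dir)

    # Return the number of extracted files as a quick sanity check.
    return sum(1 for path in target_dir.rglob("*") if path.is_file())
```

Usage would look like `reextract_dataset("dataset.zip", "dataset")`, run once at the start of a session before the data loader is created.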

Browser: Chrome, ver. 86.0.4240.183, 64-bit

No notebook, though, because it uses a lot of on-disk dependencies, so you won’t be able to replicate the same problem with it.

But if you really want to improve this part of your service, here is how you can try to replicate it:

First of all, suppose the notebook is located at “content/drive/My Drive/some_folder_name”, and the dataset is located at “content/drive/My Drive/some_folder_name/dataset”.

Then, for all image paths and pkl paths, we read the image via cv2.imread and read the pickle via

    with open(pickle_path, 'rb') as file:
        pickle_data = pickle.load(file)
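To put a number on the slowdown, the read loop described above can be timed. The sketch below is an assumption about how that might be done, not the author's code: `read_pair` and `samples_per_second` are hypothetical helper names, and `pairs` is assumed to be an iterable of `(image_path, pickle_path)` tuples.

```python
import pickle
import time


def read_pair(image_path, pickle_path, read_image=None):
    """Read one .png/.pkl sample; read_image defaults to cv2.imread."""
    if read_image is None:
        import cv2  # imported lazily so a different reader can be passed in
        read_image = cv2.imread
    image = read_image(image_path)
    with open(pickle_path, "rb") as file:
        pickle_data = pickle.load(file)
    return image, pickle_data


def samples_per_second(pairs, read_image=None):
    """Time reading every (image_path, pickle_path) pair and return the rate."""
    pairs = list(pairs)
    start = time.perf_counter()
    for image_path, pickle_path in pairs:
        read_pair(image_path, pickle_path, read_image)
    elapsed = time.perf_counter() - start
    # Guard against a zero interval on very small inputs.
    return len(pairs) / elapsed if elapsed > 0 else float("inf")
```

Running `samples_per_second` once against the Drive folder and once against a local copy of the same files would show whether the storage location accounts for the difference between roughly 300 and 1 samples per second.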

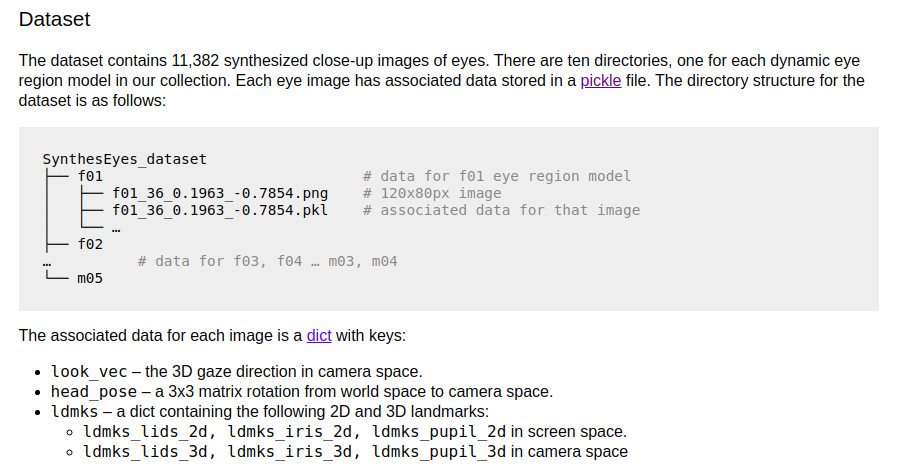

Dataset architecture:

Torch dataset class:

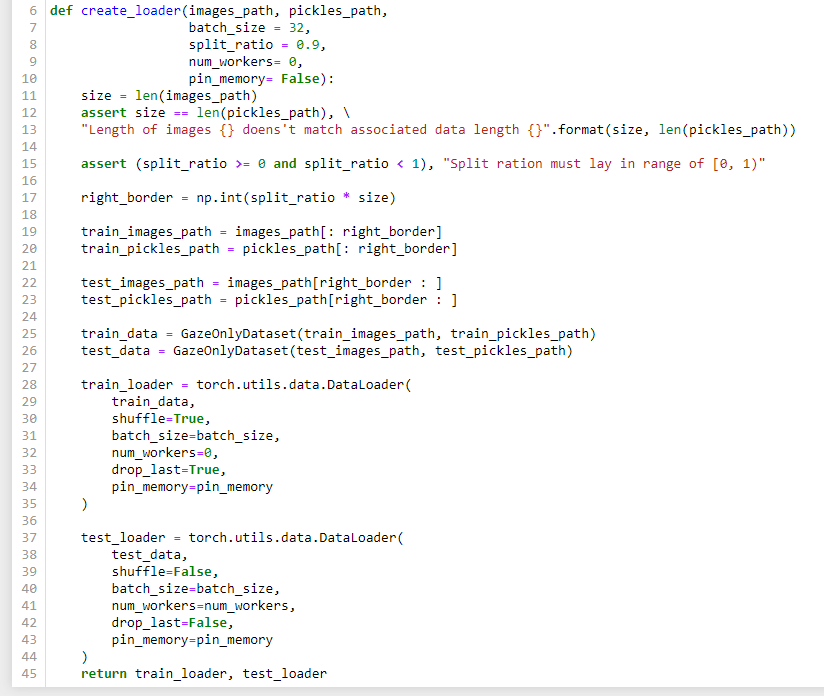

Create loader function:

Issue Analytics

- State:

- Created 3 years ago

- Comments:6

Have you tried copying the data to local storage instead of reading it from Google Drive? If not, it’s worth trying: copy your data to /tmp at the start of every session before running your models. Something like this:
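A minimal sketch of that copy step, assuming Drive is already mounted and the dataset lives in a single folder. The helper name `copy_to_local` and the default destination `/tmp/dataset` are hypothetical choices for illustration.

```python
import shutil
from pathlib import Path


def copy_to_local(drive_dir, local_dir="/tmp/dataset"):
    """Copy a dataset folder from mounted Drive to the VM's local disk."""
    drive_dir = Path(drive_dir)
    local_dir = Path(local_dir)

    # Skip the copy if it was already done earlier in this session.
    if not local_dir.exists():
        shutil.copytree(drive_dir, local_dir)
    return local_dir
```

With the paths from the issue, this might be called as `copy_to_local("/content/drive/My Drive/some_folder_name/dataset")`, and the returned path passed to the dataset class in place of the Drive path. If the dataset is also available as one archive, copying that single file and extracting it locally may be quicker than copying many small files, though that is not measured here.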

Stopped using Colab service.