Inference performance drop 22X on GPU hardware with optimum[onnxruntime-gpu] (compared with transformer)

System Info

pip list:

onnx 1.12.0

onnxruntime-gpu 1.12.1

optimum 1.3.0

...

transformers 4.21.2

docker image:

nvcr.io/nvidia/tensorrt:22.07-py3

gpu type:

Tesla V100-SXM2-16GB
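For anyone comparing environments, the package versions listed above can also be read from inside the interpreter that runs the scripts. This is a minimal sketch using only the standard library; the helper name `installed_versions` is made up for illustration and is not part of the original report.

```python
from importlib import metadata

# Distribution names taken from the pip list in this report.
PACKAGES = ["onnx", "onnxruntime-gpu", "optimum", "transformers"]


def installed_versions(names):
    """Return {distribution name: version string, or None if not installed}."""
    versions = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions(PACKAGES).items():
        print(f"{name}: {version if version is not None else 'not installed'}")
```

Running it inside the container shows whether the interpreter sees the same versions as `pip list`.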

Who can help?

Information

- The official example scripts

- My own modified scripts

Tasks

- An officially supported task in the examples folder (such as GLUE/SQuAD, …)

- My own task or dataset (give details below)

Reproduction

- create docker container

docker run --gpus all -it --name=tensorrtx -d --rm -v /home/ytseng/models:/models nvcr.io/nvidia/tensorrt:22.07-py3

- install required python package

ln -s /usr/local/bin/pip /usr/bin/pip && pip install optimum[onnxruntime-gpu] transformers

- save huggingface model and export to onnx

cmd:

python save_and_export_model.py

# Same checkpoint as in run_translation.py
model_checkpoint = "facebook/m2m100_418M"
transformer_model = "/models/optimum_m2m100/m2m100_transformer"
onnx_model = "/models/optimum_m2m100/m2m100_onnx"


def download_transformer_model():
    from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

    # Load tokenizer and PyTorch weights from the Hub
    tokenizer = AutoTokenizer.from_pretrained(model_checkpoint)
    pt_model = AutoModelForSeq2SeqLM.from_pretrained(model_checkpoint)

    # Save to disk
    tokenizer.save_pretrained(transformer_model)
    pt_model.save_pretrained(transformer_model)


def export_to_onnx_model():
    """Export the checkpoint to ONNX with Optimum."""
    from optimum.onnxruntime import ORTModelForSeq2SeqLM

    # Load a model from transformers and export it to the ONNX format
    model = ORTModelForSeq2SeqLM.from_pretrained(model_checkpoint, from_transformers=True)

    # Save the ONNX model
    model.save_pretrained(onnx_model)


if __name__ == '__main__':
    download_transformer_model()
    export_to_onnx_model()

- run translation on transformer api and optimum api

testing cmd:

python run_translation.py trans ; echo '---------------' ; python run_translation.py opt

# -*- coding: utf-8 -*-
import logging
import sys
import time

import torch
from transformers import AutoTokenizer

logging.basicConfig(level=logging.INFO)

model_checkpoint = "facebook/m2m100_418M"
transformer_model = "/models/optimum_m2m100/m2m100_transformer"
onnx_model = "/models/optimum_m2m100/m2m100_onnx"
loop = 5

# Exactly one argument is expected: the backend, "trans" or "opt"
if len(sys.argv) != 2:
    logging.error("call with python run_translation.py <backend>")
    sys.exit(1)

chinese_text = "机器学习是人工智能的一个分支。人工智能的研究历史有着一条从以“推理”为重点,到以“知识”为重点,再到以“学习”为重点的自然、清晰的脉络。显然,机器学习是实现人工智能的一个途径,即以机器学习为手段解决人工智能中的问题。机器学习在近30多年已发展为一门多领域交叉学科,涉及概率论、统计学、逼近论、凸分析(英语:Convex analysis)、计算复杂性理论等多门学科。机器学习理论主要是设计和分析一些让计算机可以自动“学习”的算法。机器学习算法是一类从数据中自动分析获得规律,并利用规律对未知数据进行预测的算法。因为学习算法中涉及了大量的统计学理论,机器学习与推断统计学联系尤为密切,也被称为统计学习理论。算法设计方面,机器学习理论关注可以实现的,行之有效的学习算法。很多推论问题属于无程序可循难度,所以部分的机器学习研究是开发容易处理的近似算法。机器学习已广泛应用于数据挖掘、计算机视觉、自然语言处理、生物特征识别、搜索引擎、医学诊断、检测信用卡欺诈、证券市场分析、DNA序列测序、语音和手写识别、战略游戏和机器人等领域"

logging.info(f"chinese_text is {chinese_text}")
logging.info(f"chinese_text length is {len(chinese_text)}")

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
logging.info(f"This test will use device: {device}")


def get_transformer_model():
    from transformers import M2M100ForConditionalGeneration, M2M100Tokenizer

    model = M2M100ForConditionalGeneration.from_pretrained(transformer_model).to(device)
    tokenizer = M2M100Tokenizer.from_pretrained(transformer_model)
    return (model, tokenizer)


def get_optimum_onnx_model():
    from optimum.onnxruntime import ORTModelForSeq2SeqLM

    model = ORTModelForSeq2SeqLM.from_pretrained(onnx_model).to(device)
    tokenizer = AutoTokenizer.from_pretrained(transformer_model)
    return (model, tokenizer)


def translate():
    # Pick the backend from the command-line argument
    if str(sys.argv[1]) == "trans":
        (model, tokenizer) = get_transformer_model()
    elif str(sys.argv[1]) == "opt":
        (model, tokenizer) = get_optimum_onnx_model()
    else:
        logging.error("backend type is illegal, should be 'opt' or 'trans'")
        sys.exit(1)

    # Time only the translation loop; model loading is excluded
    start = time.time()
    for i in range(loop):
        encoded_zh = tokenizer(chinese_text, return_tensors="pt").to(device)
        generated_tokens = model.generate(**encoded_zh, forced_bos_token_id=tokenizer.get_lang_id("en"))
        result = tokenizer.batch_decode(generated_tokens, skip_special_tokens=True)
        logging.info(f"#{i}: {result}")
    end = time.time()

    total_time = end - start
    logging.info(f"total: {total_time}")
    logging.info(f"loop: {loop}")
    logging.info(f"avg(s): {total_time / loop}")
    logging.info(f"throughput(translation/s): {loop / total_time}")


if __name__ == '__main__':
    translate()
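The script above times all five iterations together, so any one-off setup cost in the first call is averaged into the result. A possible refinement, offered only as a sketch and not as what the reporters ran, is a small helper that discards warm-up runs and optionally synchronizes the device before reading the clock. The name `benchmark` and its parameters are hypothetical; for CUDA work, `sync` could be `torch.cuda.synchronize`.

```python
import time


def benchmark(fn, loops=5, warmup=1, sync=None):
    """Time fn() over `loops` runs after `warmup` untimed runs.

    `sync`, if given, is called before each clock read so that pending
    asynchronous work (for example queued GPU kernels) is included.
    """
    for _ in range(warmup):
        fn()
    if sync is not None:
        sync()

    start = time.perf_counter()
    for _ in range(loops):
        fn()
    if sync is not None:
        sync()
    total = time.perf_counter() - start

    return {
        "total_s": total,
        "avg_s": total / loops,
        # Guard against a zero reading on very coarse clocks
        "throughput_per_s": loops / total if total > 0 else float("inf"),
    }


if __name__ == "__main__":
    # Stand-in workload; in the report this would wrap the generate() call.
    stats = benchmark(lambda: sum(range(100_000)), loops=5, warmup=1)
    print(stats)
```

A warm-up pass is unlikely to account for a gap of this size, but it removes one variable when comparing the two backends.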

Expected behavior

We expected the performance of the transformer backend and the optimum[onnxruntime-gpu] backend to be close, but optimum turned out to be about 22X slower than the transformer one. It may be related to the recently reported bug #362, which also involved a seq2seq transformer model on GPU hardware, but we're not sure.

Here's the output log from the translation run in the Reproduction section above.

root@ec44f06bb0bf:/workspace# python run_translation.py trans ; echo '---------------' ; python run_translation.py opt

INFO:root:chinese_text is ...

INFO:root:chinese_text length is 460

INFO:root:This test will use device: cuda

INFO:root:Transformer backend is enabled in this test

/usr/local/lib/python3.8/dist-packages/transformers/generation_utils.py:1202: UserWarning: Neither `max_length` nor `max_new_tokens` have been set, `max_length` will default to 200 (`self.config.max_length`). Controlling `max_length` via the config is deprecated and `max_length` will be removed from the config in v5 of Transformers -- we recommend using `max_new_tokens` to control the maximum length of the generation.

warnings.warn(

INFO:root:#0: ...

INFO:root:total: 13.273212909698486

INFO:root:loop: 5

INFO:root:avg(s): 2.6546425819396973

INFO:root:throughput(translation/s): 0.3766985457113096

---------------

INFO:root:chinese_text is ...

INFO:root:chinese_text length is 460

INFO:root:This test will use device: cuda

INFO:root:Optimum backend is enabled in this test

/usr/local/lib/python3.8/dist-packages/transformers/generation_utils.py:1202: UserWarning: Neither `max_length` nor `max_new_tokens` have been set, `max_length` will default to 200 (`self.config.max_length`). Controlling `max_length` via the config is deprecated and `max_length` will be removed from the config in v5 of Transformers -- we recommend using `max_new_tokens` to control the maximum length of the generation.

warnings.warn(

INFO:root:#0: ...

INFO:root:total: 303.64781045913696

INFO:root:loop: 5

INFO:root:avg(s): 60.72956209182739

INFO:root:throughput(translation/s): 0.016466445097824505
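The 22X figure can be checked against the two logged averages. The values below are copied from the logs above; the variable names are only for illustration.

```python
# Average seconds per translation, copied from the two log excerpts.
transformers_avg_s = 2.6546425819396973
optimum_avg_s = 60.72956209182739

slowdown = optimum_avg_s / transformers_avg_s
print(f"optimum is {slowdown:.1f}x slower per translation")

# The throughput figures in the same logs should give the same ratio.
transformers_tps = 0.3766985457113096
optimum_tps = 0.016466445097824505
print(f"throughput ratio: {transformers_tps / optimum_tps:.1f}x")
```

Both ratios come out between 22 and 23, consistent with the number in the title.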



Also, we did some Nsight GPU profiling of these runs and found some strange results. We hope they help.

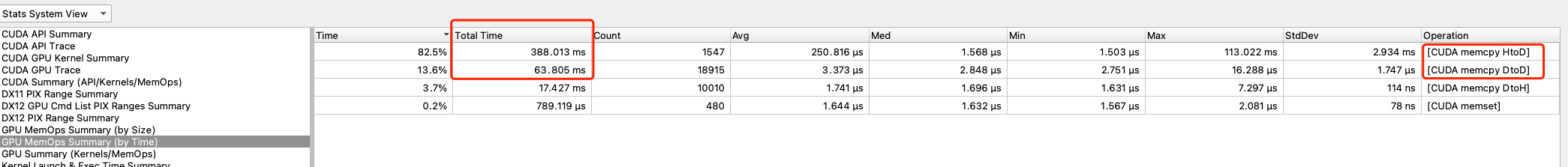

no.1: optimum spent a lot of time on data copies

- optimum GPU memops summary (time)

- transformer GPU memops summary (time)

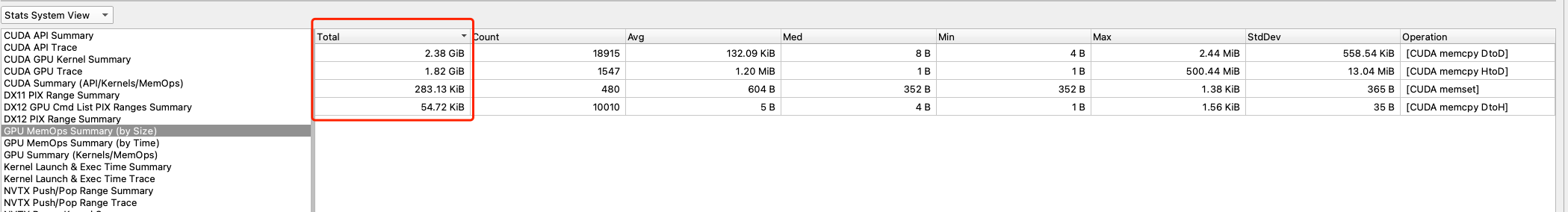

no.2: optimum copied a large amount of data

- optimum GPU memops summary (size)

- transformer GPU memops summary (size)

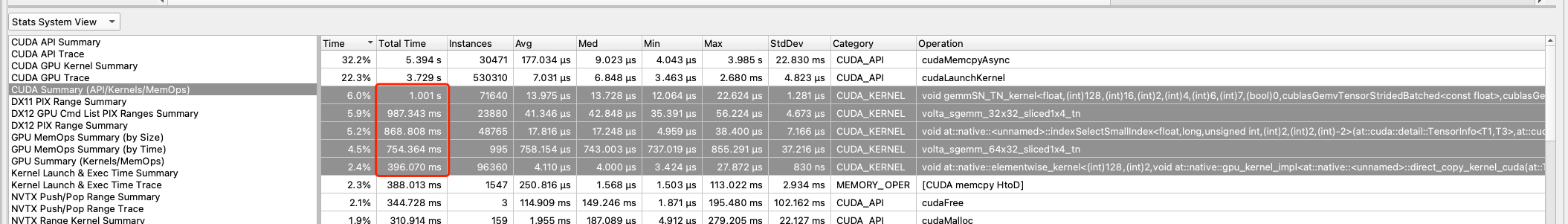

no.3: for both backends, the kernels didn't take much time (compared with the time spent on data copies between host and device)

- optimum top GPU kernels cost - CUDA summary (API/Kernels/Memops)

- transformer top GPU kernels cost - CUDA summary (API/Kernels/Memops)
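To make summaries like these easier to attribute to a specific call, the region of interest can be wrapped in an NVTX range so that it appears as a named span on the Nsight timeline. This is a sketch, not what was used for the screenshots above. The `nvtx_range` helper is hypothetical, and it falls back to a no-op when PyTorch or CUDA is unavailable.

```python
import contextlib

try:
    import torch
    _HAS_CUDA = torch.cuda.is_available()
except ImportError:  # PyTorch not installed: ranges become no-ops
    torch = None
    _HAS_CUDA = False


@contextlib.contextmanager
def nvtx_range(name):
    """Mark the enclosed block as a named NVTX range when CUDA is available."""
    if _HAS_CUDA:
        torch.cuda.nvtx.range_push(name)
    try:
        yield
    finally:
        if _HAS_CUDA:
            torch.cuda.nvtx.range_pop()


if __name__ == "__main__":
    # In run_translation.py this would wrap the model.generate(...) call.
    with nvtx_range("generate"):
        pass
```

With the `generate` call wrapped this way, memory copies that fall inside the range can be separated from those issued during model loading.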

Thanks, can reproduce and will fix the bug shortly. Our nightly workflows for GPU appear to have been broken, will try to fix as well.