TFBert activation layer will be casted into float32 under mixed precision policy

See original GitHub issueTo reproduce

import tensorflow as tf

from transformers import TFBertForQuestionAnswering

tf.keras.mixed_precision.experimental.set_policy('mixed_float16')

model = TFBertForQuestionAnswering.from_pretrained('bert-base-uncased')

User will receive warning saying

WARNING:tensorflow:Layer activation is casting an input tensor from dtype float16 to the layer's dtype of float32, which is new behavior in TensorFlow 2. The layer has dtype float32 because its dtype defaults to floatx.

If you intended to run this layer in float32, you can safely ignore this warning. If in doubt, this warning is likely only an issue if you are porting a TensorFlow 1.X model to TensorFlow 2.

To change all layers to have dtype float16 by default, call `tf.keras.backend.set_floatx('float16')`. To change just this layer, pass dtype='float16' to the layer constructor. If you are the author of this layer, you can disable autocasting by passing autocast=False to the base Layer constructor.

This log means that under mixed-precision policy, the gelu activation layer in TFBert will be executed with float32 dtype. So the input x(link) will be casted into float32 before sent into activation layer and casted back into float16 after finishing computation.

Causes

This issue is mainly due to two reasons:

-

The dict of ACT2FN is defined outside of TFBertIntermediate class. The mixed-precision policy will not be broadcasted into the activation layer defined in this way. This can be verified by adding

print(self.intermediate_act_fn._compute_dtype)below this line. float32 will be given. -

tf.math.sqrt(2.0) used in gelu will always return a float32 tensor even if it is under mixed-precision policy. So there will be dtype incompatibility error after we move the ACT2FN into TFBertIntermediate class. This can be further solved by specifying dtype of tf.math.sqrt(2.0) to be consistent with

tf.keras.mixed_precision.experimental.global_policy().compute_dtype.

Pros & Cons

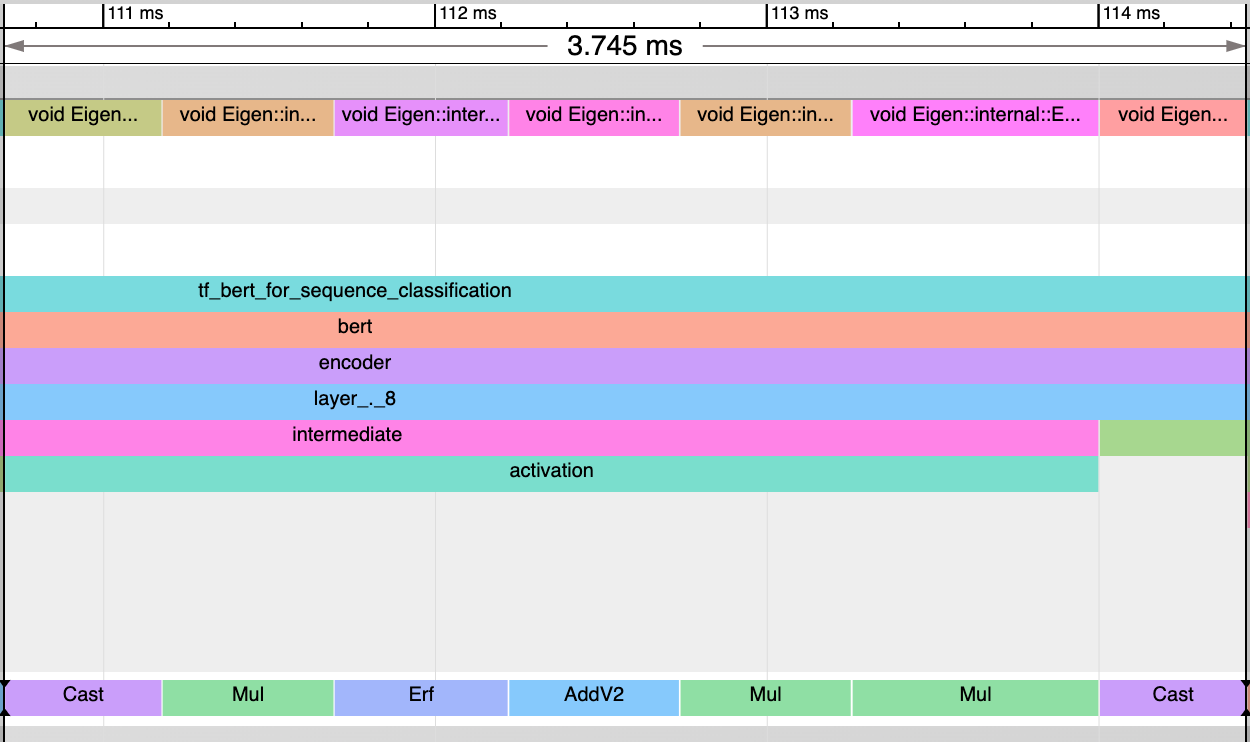

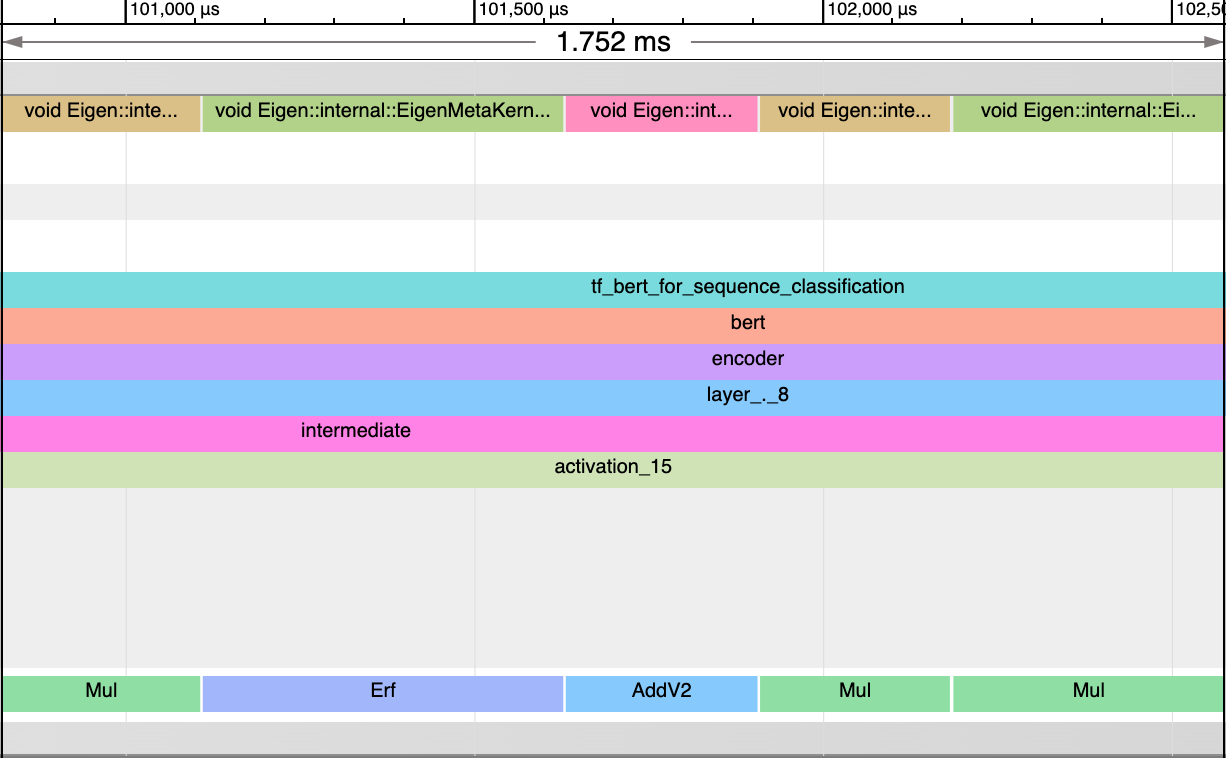

Pros: activation layer will have higher precision as it is computed with float32 dtype Cons: the two additional Cast operation will increase latency.

PR

The PR that can help getting rid of warning log and make the activation layer be executed in float16 under mixed-precision policy: https://github.com/jlei2/transformers/pull/3 This PR is not a concrete one because there are some other models importing this ACT2FN dict so it can only solve the issue existed in TFBert related model.

Benchmark Results

As Huggingface official benchmark tests don’t support mixed-precision, the comparison was made using Tensorflow Profiling Tool(https://www.tensorflow.org/tfx/serving/tensorboard).

1 GPU-V100 Model: Huggingface FP16 Bert-Base with batch_size=128 and seq_len = 128.

After applying the PR, the two Cast ops disappear and the time spent on activation layer is reduced from 3.745 ms to 1.75ms.

This issue is not like a bug or error and the pros & cons are listed above. Will you consider make changes corresponding to this? @patrickvonplaten Thanks!

Issue Analytics

- State:

- Created 3 years ago

- Reactions:1

- Comments:20 (11 by maintainers)

Top Related StackOverflow Question

Top Related StackOverflow Question

Awesome! Happy to know that the issue is fixed on your side as well!! The PR should be merged this week 😃

Thanks! I will rework this.