Optimizing scheduling of build pods

This issue is about how to optimize the scheduling of BinderHub-specific Build Pods (BPs).

Out of scope of this issue is the discussion on how to schedule the user pods, which could be done with image locality (ImageLocalityPriority configuration) in mind.

Scheduling goals

- Historic repo build node locality (Historic locality): It is believed that a build pod rebuilding a repo often works faster on a node that has previously built that repo, even if the repo has changed, for example in its README.md file. So, where possible, we want to schedule a build pod on a node where the same repo has been built before.

- Not blocking scale down in autoscaling clusters: We would also like to avoid scheduling pods on nodes that may want to scale down.

What is our desired scheduling practice?

We need to answer how we actually want the build pods to be scheduled. There is no obvious way to do it; for example, it is typically hard to optimize for both performance and autoscaling viability.

Boilerplate desired scheduling practice

I’ll now provide a boilerplate idea to start out from on how to schedule the build pods.

- We inform the scheduler whether this is an autoscaling cluster or not. If it is, we greatly reduce the score of almost empty nodes that we could scale down.

- We schedule the build pod on nodes with a history of building this repo.
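The two rules above can be sketched as a small scoring function. This is only an illustration of the idea, not how kube-scheduler computes scores: the `Node` class, the `nearly_empty_threshold` parameter, and the weights 10 and 100 are all assumptions made up for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical, simplified view of a cluster node."""
    name: str
    pod_count: int  # pods currently running on the node
    built_repos: set = field(default_factory=set)  # repos built here before


def score_node(node, repo, autoscaling, nearly_empty_threshold=2):
    """Return a score for placing a build pod for `repo` on `node`.

    The weights (10 and 100) are arbitrary illustration values.
    """
    score = 0
    # Historic locality: prefer nodes that have built this repo before.
    if repo in node.built_repos:
        score += 10
    # In an autoscaling cluster, greatly reduce the score of almost empty
    # nodes, since the autoscaler may want to remove them.
    if autoscaling and node.pod_count <= nearly_empty_threshold:
        score -= 100
    return score


def pick_node(nodes, repo, autoscaling):
    """Pick the highest-scoring node; ties go to the busier node, then name."""
    return max(
        nodes,
        key=lambda n: (score_node(n, repo, autoscaling), n.pod_count, n.name),
    )
```

With these assumed weights, an almost empty node loses to a busy node in an autoscaling cluster even if it has built the repo before, while in a fixed-size cluster the historic locality wins.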

Technical solution implementation details

We must utilize a non-default scheduler. We could use the default kube-scheduler binary and customize its behavior through configuration, or we could make our own. Making our own scheduler is certainly possible, but I think it would add too much complexity.

- We utilize a custom scheduler, but like z2jh's scheduler we deploy an official kube-scheduler binary with a customized configuration that we can reference from the build pod's specification using the `spec.schedulerName` field.

  [`spec.schedulerName`] "If specified, the pod will be dispatched by specified scheduler. If not specified, the pod will be dispatched by default scheduler." (Kubernetes PodSpec documentation)

- We customize the behavior of the kube-scheduler binary through a provided config, just like in z2jh, but we try to use node annotations somehow. For example, we could make the build pod annotate the node it runs on with the repo it attempts to build, and later the scheduler can attempt to schedule builds of this repo on that node.

- We make the BinderHub builder pod annotate the node by communicating with the k8s API. To allow this, some RBAC setup is required, for example like the RBAC of z2jh's user-scheduler. It will need a ServiceAccount, a ClusterRole, and a ClusterRoleBinding, where the ClusterRole defines that it should be allowed to read and write annotations on nodes. For an example of a pod communicating with the k8s API, we can learn relevant parts from z2jh's image-awaiter, which also communicates with the k8s API.

- We make the BinderHub image-cleaner binary also clean up the associated node annotations when it cleans nodes. This would require the same kind of RBAC permissions that the build pods need in order to annotate in the first place.
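As a rough sketch of the pieces listed above, the following builds the request bodies involved: a pod manifest that sets `spec.schedulerName`, and a merge-patch body that adds or removes a node annotation. Everything named here is an assumption for illustration: the annotation prefix `binderhub.example.org`, the `built-<hash>` key scheme, and the helper names are not existing BinderHub or z2jh conventions. Sending the patch to the node (a `PATCH` on `/api/v1/nodes/<node-name>`) would still need a client and the RBAC permissions described above.

```python
import hashlib

# Hypothetical annotation prefix; not an existing BinderHub convention.
ANNOTATION_PREFIX = "binderhub.example.org"


def repo_annotation_key(repo_url):
    """Derive an annotation key from a repo URL.

    Annotation names are limited in length and character set, so the
    URL is hashed rather than used directly.
    """
    digest = hashlib.sha256(repo_url.encode("utf-8")).hexdigest()[:16]
    return f"{ANNOTATION_PREFIX}/built-{digest}"


def node_annotation_patch(repo_url, present=True):
    """Build a merge-patch body that adds or removes the annotation.

    In a JSON merge patch, a null (None) value removes the key, which is
    what a cleanup step such as the image-cleaner could send.
    """
    value = "true" if present else None
    return {"metadata": {"annotations": {repo_annotation_key(repo_url): value}}}


def build_pod_manifest(name, image, scheduler_name):
    """Minimal pod manifest that opts in to a non-default scheduler."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "schedulerName": scheduler_name,
            "restartPolicy": "Never",
            "containers": [{"name": "builder", "image": image}],
        },
    }
```

How the scheduler would then take such annotations into account is left open here, as it is in the list above.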

Knowledge reference

kube-scheduler configuration

https://kubernetes.io/docs/concepts/scheduling/kube-scheduler/#kube-scheduler-implementation

kube-scheduler source code

https://github.com/kubernetes/kubernetes/tree/master/pkg/scheduler

Kubernetes scheduling basics

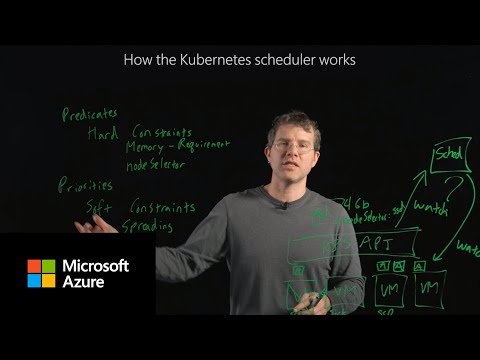

From this video, you should pick up the role of a scheduler, and that the default binary that can act as a scheduler can consider predicates (aka. filtering) and priorities (aka. scoring).

Kubernetes scheduling deep dives

Past related discussion

Comments: 5 (5 by maintainers)

I’ve started work on 1 (and a little bit of 2). PR coming soon.

It was great discussing this and seeing how we started with a fairly complicated idea like “write a custom scheduler” and now have a much simpler solution!

There is another implementation of rendezvous hashing here.
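For readers unfamiliar with the technique, here is a minimal sketch of rendezvous (highest-random-weight) hashing applied to picking a node for a repo. It is not the implementation referred to above and not BinderHub code; the function name `rendezvous_rank` and the choice of SHA-256 are assumptions for this example.

```python
import hashlib


def rendezvous_rank(node_names, repo):
    """Rank nodes for `repo` by highest-random-weight (rendezvous) hashing.

    Every (node, repo) pair gets a pseudo-random weight; the node with the
    highest weight is the preferred one, the next is the fallback, etc.
    """
    def weight(node):
        digest = hashlib.sha256(f"{node}|{repo}".encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big")

    # Sort by weight, highest first; the node name breaks (unlikely) ties.
    return sorted(node_names, key=lambda n: (weight(n), n), reverse=True)
```

The appeal for this issue is that no state has to be stored: the same repo maps to the same node as long as that node exists, and when a node disappears only the repos that preferred it move to their next choice.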

To get the possible values of the `kubernetes.io/hostname` label, it seems we can describe each of the DIND pods in the daemonset. Their `Node` field contains a value that is (on our GKE and OVH clusters) the same as the one used in the label. This means that to compute the list of possible node names, we describe the daemonset to get the `Selector` (e.g. `name=ovh-dind`), select pods with that (`kubectl get pods -l name=ovh-dind`), then inspect the `Node` field of each pod we found. This is nice because we don't need to inspect the nodes themselves, so we don't need a cluster-level role.

The following would be my suggestion for how to split this into several steps that can be tackled individually:

`at_most_every` decorator from `health.py` to throttle API calls

What do you think? And do you want to tackle one of these already?
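The node-name lookup described in this comment (select the DIND pods by label, then read which node each pod runs on) could look roughly like the sketch below. It assumes the input is the parsed JSON from `kubectl get pods -l name=ovh-dind -o json`, where the node shown by `kubectl describe` is available as `spec.nodeName`; the function name is made up for this example.

```python
def node_names_from_pod_list(pod_list):
    """Collect the distinct node names from a Kubernetes PodList dict.

    `pod_list` is assumed to be the parsed output of
    `kubectl get pods -l name=ovh-dind -o json`.
    """
    names = set()
    for pod in pod_list.get("items", []):
        node = pod.get("spec", {}).get("nodeName")
        if node:  # pods that are not scheduled yet have no nodeName
            names.add(node)
    return sorted(names)
```

Only pods in the namespace need to be listed, which matches the point above about not needing a cluster-level role.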

Maybe we can start a new issue on “Resource requests for build and DIND pods” to discuss what the options are and how to configure things.