CUDA out of memory After 74 epochs

🐛 Bug

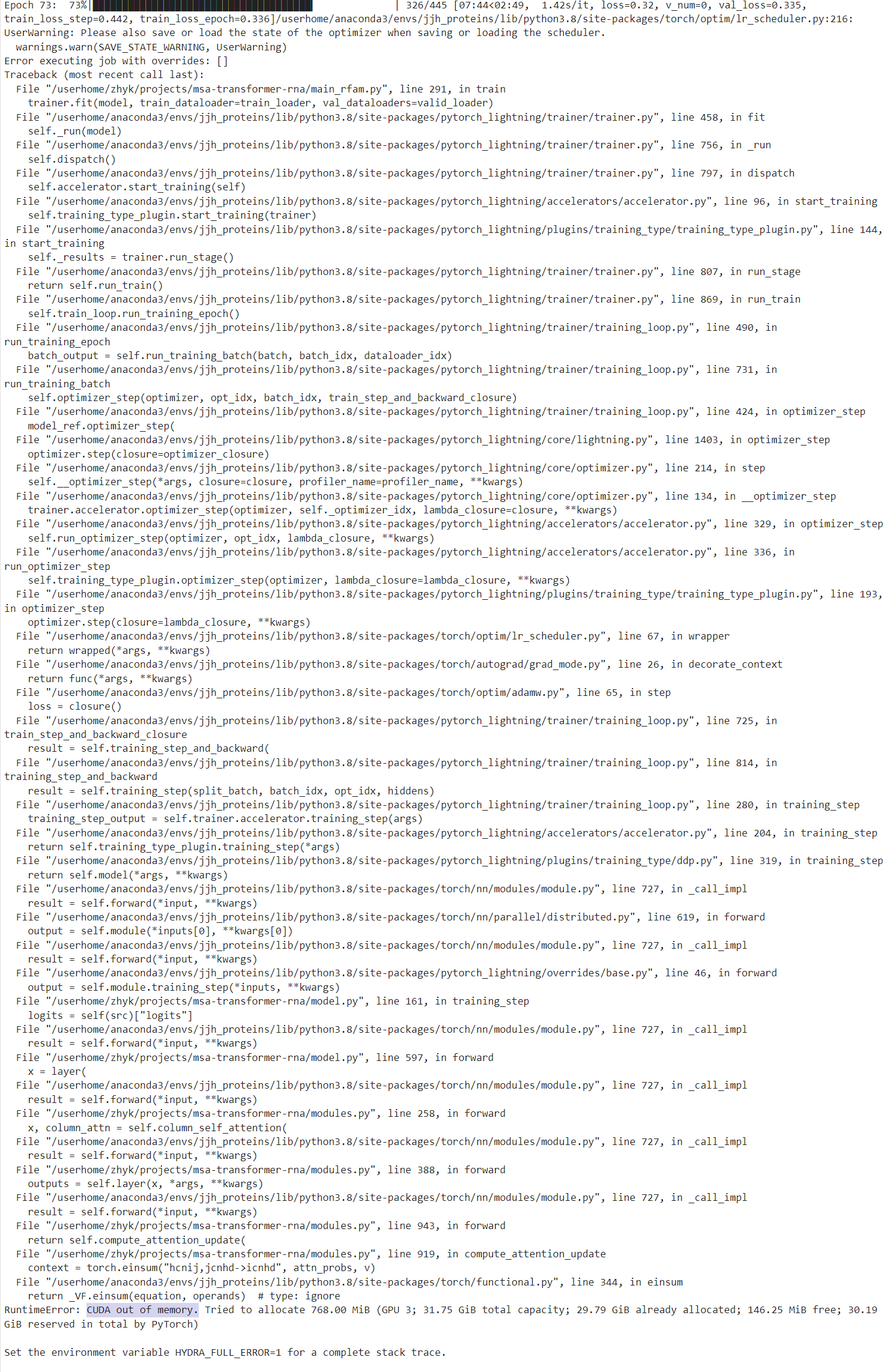

I am using PyTorch Lightning to train on 8 V100 GPUs, and I set a seed in my training script. Everything went well at the beginning, but I hit a CUDA out of memory error while running the 74th epoch. This reproduces reliably: I have run the experiment 4 times with the same result. The detailed log information is posted in the Additional context section.
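One way to check whether memory really grows from epoch to epoch is to record the CUDA allocator statistics at the end of each epoch. The following is only a minimal sketch, not code from the original report: the helper name `cuda_memory_snapshot` is hypothetical, and it assumes the standard `torch.cuda` memory queries are enough to see the trend.

```python
def cuda_memory_snapshot(device=0):
    """Return CUDA allocator statistics in MB, or None if they cannot be read.

    Hypothetical helper for illustration; not part of PyTorch or Lightning.
    """
    try:
        import torch
    except ImportError:
        # PyTorch is not installed in this environment.
        return None
    if not torch.cuda.is_available():
        # No usable GPU, so there is nothing to measure.
        return None
    mb = 1024 ** 2
    return {
        # Memory currently occupied by live tensors.
        "allocated_mb": torch.cuda.memory_allocated(device) / mb,
        # Memory held by the caching allocator (live tensors plus cache).
        "reserved_mb": torch.cuda.memory_reserved(device) / mb,
        # Highest value of allocated memory seen so far in this process.
        "peak_allocated_mb": torch.cuda.max_memory_allocated(device) / mb,
    }


if __name__ == "__main__":
    print(cuda_memory_snapshot())
```

Calling this once per epoch and comparing the values can help: if `allocated_mb` keeps rising, something is probably holding references to tensors across epochs, whereas a flat curve followed by a sudden failure points elsewhere.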

Expected behavior

It would be great if you could give me some suggestions. Thank you!

Environment

- PyTorch Lightning Version : 1.3.0

- PyTorch Version : 1.7.1

- Python version : 3.8

- OS : Linux

- CUDA/cuDNN version: 11.0

- GPU models and configuration: V100 32GB

- How you installed PyTorch (conda, pip, source): conda

Additional context

cc @justusschock @kaushikb11 @awaelchli @akihironitta @rohitgr7 @borda

Issue Analytics

- State:

- Created a year ago

- Comments:7 (3 by maintainers)


Here is the implementation of `forward`. During training, the parameters are `repr_layers=None`, `need_head_weights=False`, `return_contacts=False`.

I figured out that my problem was caused by PyTorch and its compilation. It was fixed by reinstalling PyTorch in a clean environment.
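After reinstalling, it can be worth confirming which PyTorch build the interpreter actually picks up. This is a hedged sketch rather than the steps used in this issue: the function name `describe_torch_install` is hypothetical, and it only reads attributes that PyTorch exposes about its own build.

```python
import sys


def describe_torch_install():
    """Collect basic facts about the PyTorch build visible to this interpreter.

    Hypothetical helper for illustration; not part of PyTorch or Lightning.
    """
    info = {"python": sys.version.split()[0], "torch_version": None}
    try:
        import torch
    except ImportError:
        # No PyTorch here, e.g. right after creating a fresh environment.
        return info
    info["torch_version"] = torch.__version__
    # Path of the package that was imported; reveals a stale or shadowed install.
    info["torch_path"] = torch.__file__
    # CUDA version the binary was built against (None for CPU-only builds).
    info["built_with_cuda"] = torch.version.cuda
    info["cuda_available"] = torch.cuda.is_available()
    info["cudnn_version"] = torch.backends.cudnn.version()
    return info


if __name__ == "__main__":
    for key, value in describe_torch_install().items():
        print(f"{key}: {value}")
```

Comparing this output between the old and the new environment shows whether the PyTorch version, install path, or CUDA build actually changed.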