epoch_end Logs Default to Steps Instead of Epochs #1151

🐛 Bug

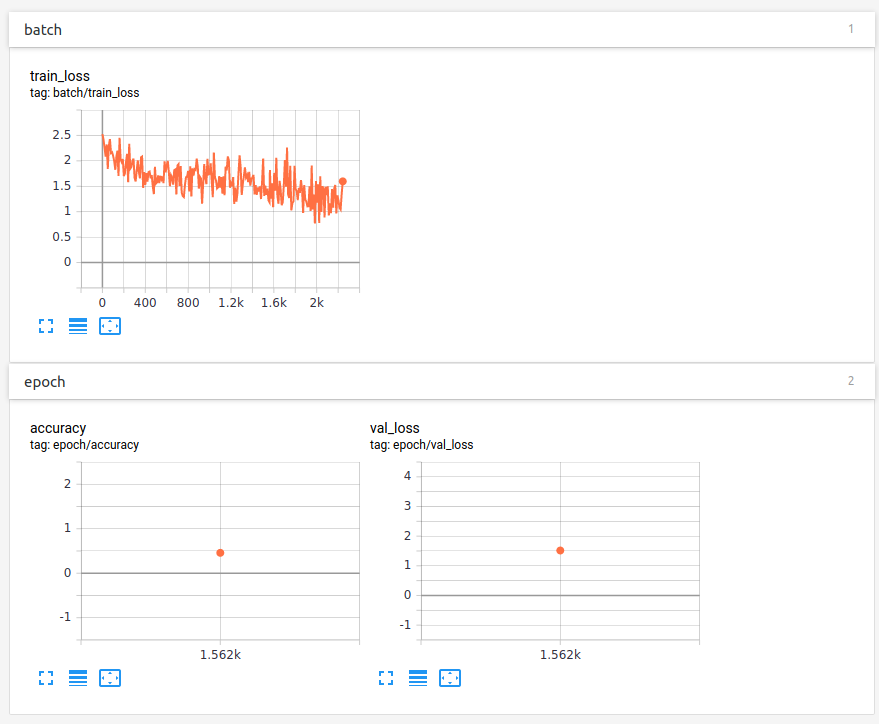

Logs generated within validation_epoch_end have their iteration set to the number of steps instead of number of epochs.

To Reproduce

Steps to reproduce the behavior:

- Create a LightningModule with the functions below.

- Train the module for 1 or more epochs

- Run Tensorboard

- Note the iteration for logs generated in validation_epoch_end

Code Sample

    def training_step(self, train_batch, batch_idx):
        x, y = train_batch
        y_hat = self.forward(x)
        loss = self.cross_entropy_loss(y_hat, y)
        accuracy = self.accuracy(y_hat, y)
        logs = {'batch/train_loss': loss}
        return {'loss': loss, 'log': logs}

    def validation_step(self, val_batch, batch_idx):
        x, y = val_batch
        y_hat = self.forward(x)
        loss = self.cross_entropy_loss(y_hat, y)
        accuracy = self.accuracy(y_hat, y)
        return {'batch/val_loss': loss, 'batch/accuracy': accuracy}

    def validation_epoch_end(self, outputs):
        avg_loss = torch.stack([x['batch/val_loss'] for x in outputs]).mean()
        avg_accuracy = torch.stack([x['batch/accuracy'] for x in outputs]).mean()
        tensorboard_logs = {'epoch/val_loss': avg_loss, 'epoch/accuracy': avg_accuracy}
        return {'avg_val_loss': avg_loss, 'accuracy': avg_accuracy, 'log': tensorboard_logs}

Version

v0.7.1

Screenshot

Expected behavior

Logs generated in *_epoch_end functions use the epoch as their iteration on the x axis.

The documentation seems unclear on the matter. The Loggers documentation is empty. The LightningModule class doesn’t describe logging in detail and the Introduction Guide has a bug in the example self.logger.summary.scalar('loss', loss) -> AttributeError: 'TensorBoardLogger' object has no attribute 'summary'.

Is there a workaround for this issue?
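One workaround reported in this thread for the v0.7.x dictionary interface is to add a 'step' key, set to self.current_epoch, to the returned 'log' dictionary. The sketch below shows only how that dictionary could be built. The helper name build_epoch_end_result is hypothetical, and it uses plain Python averaging instead of torch.stack so that it runs without PyTorch installed. Whether the 'step' key is honored depends on the Lightning version.

```python
from statistics import fmean


def build_epoch_end_result(outputs, current_epoch):
    """Average per-batch validation metrics and pin the log step to the epoch.

    Hypothetical helper: `outputs` is the list of dicts returned by
    validation_step, and `current_epoch` would be self.current_epoch.
    """
    # float() accepts plain numbers as well as zero-dimensional tensors.
    avg_loss = fmean([float(o['batch/val_loss']) for o in outputs])
    avg_accuracy = fmean([float(o['batch/accuracy']) for o in outputs])
    tensorboard_logs = {
        'epoch/val_loss': avg_loss,
        'epoch/accuracy': avg_accuracy,
        # The 'step' key is the workaround described in this thread.
        'step': current_epoch,
    }
    return {'avg_val_loss': avg_loss, 'accuracy': avg_accuracy, 'log': tensorboard_logs}


# Example with two fake validation batches, logged at epoch 2.
example_outputs = [
    {'batch/val_loss': 1.0, 'batch/accuracy': 0.5},
    {'batch/val_loss': 3.0, 'batch/accuracy': 1.0},
]
result = build_epoch_end_result(example_outputs, current_epoch=2)
```

Inside a LightningModule, validation_epoch_end could then return build_epoch_end_result(outputs, self.current_epoch).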

Issue Analytics

- Created 4 years ago

- Comments:5


Looks like the LightningModule documentation for validation_epoch_end has a note regarding this: use the step key, as in 'log': {'val_acc': val_acc_mean.item(), 'step': self.current_epoch}. This does work correctly as a workaround. Perhaps this should be the default for epoch end?

In the current version of PyTorch Lightning we are encouraged to use self.log() to log statistics, as opposed to returning a dictionary as mentioned in the previous comment. I cannot see an obvious way to control the step with the new self.log() method?
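One possible way around this, sketched here as an assumption and not as the library's recommended approach, is to bypass self.log() and write to the underlying TensorBoard writer directly. With a TensorBoardLogger, self.logger.experiment is a SummaryWriter, whose add_scalar method accepts an explicit global_step. The helper name log_scalars_at_epoch and the RecordingWriter stand-in are hypothetical; the stand-in exists only so the example runs without TensorBoard installed.

```python
def log_scalars_at_epoch(writer, metrics, epoch):
    """Write each metric with the epoch number as its global step.

    `writer` is anything with a SummaryWriter-style add_scalar method,
    for example self.logger.experiment when using TensorBoardLogger.
    """
    for tag, value in metrics.items():
        writer.add_scalar(tag, float(value), global_step=epoch)


class RecordingWriter:
    """Hypothetical stand-in for SummaryWriter that records each call."""

    def __init__(self):
        self.calls = []

    def add_scalar(self, tag, scalar_value, global_step=None):
        self.calls.append((tag, scalar_value, global_step))


# Example: log two epoch-level metrics at epoch 3.
writer = RecordingWriter()
log_scalars_at_epoch(
    writer,
    {'epoch/val_loss': 0.25, 'epoch/accuracy': 0.9},
    epoch=3,
)
```

In a LightningModule hook this would be called as log_scalars_at_epoch(self.logger.experiment, metrics, self.current_epoch). Metrics written this way go only to TensorBoard and are not visible to callbacks that rely on values passed through self.log().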