mlflow training loss not reported until end of run

I think I’m logging correctly. This is my training_step:

result = pl.TrainResult(loss)

result.log('loss/train', loss)

return result

and validation_step

result = pl.EvalResult(loss)

result.log('loss/validation', loss)

return result

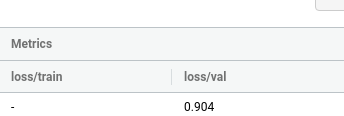

The validation loss is updated in mlflow each epoch, however the training loss isn’t displayed until training has finished. Then it’s available for every step. This may be an mlflow rather than a pytorch-lightning issue. Somewhere along the line it seems to be buffered?

Versions:

pytorch-lightning==0.9.0 mlflow==1.11.0

Edit: logging TrainResult with on_epoch=True results in the metric appearing in mlflow during training, it’s only the default train logging which gets delayed. i.e.

result.log('accuracy/train', acc, on_epoch=True)

is fine

Issue Analytics

- State:

- Created 3 years ago

- Comments:8 (8 by maintainers)

Thanks for the assistance. No, nothing unresolved.

So I think this is because of the default behaviour of TrainResult and the way row_log_interval works. It only appears if the number of batches per epoch is less than row_log_interval.

By default TrainResult logs on step and not on epoch. https://github.com/PyTorchLightning/pytorch-lightning/blob/aaf26d70c4658e961192ba4c408558f1cf39bb18/pytorch_lightning/core/step_result.py#L510-L517

When logging only per step, the logger connector only logs when the batch_idx is a multiple of row_log_interval. However, if you don’t have more than row_log_interval batches, the metrics are not logged. https://github.com/PyTorchLightning/pytorch-lightning/blob/aaf26d70c4658e961192ba4c408558f1cf39bb18/pytorch_lightning/trainer/logger_connector.py#L229-L237

@david-waterworth Do you have fewer than 50 batches per epoch in your model? Can you try setting row_log_interval to be less than the number of train batches to confirm whether the issue is caused by this?
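To make the interval check concrete, here is a rough simulation in plain Python. It is not the Lightning code itself: logged_steps is a hypothetical helper, the exact condition (batch_idx + 1) % row_log_interval == 0 is an assumption chosen to match the behaviour described above, and the default of 50 is taken from the question about 50 batches. Any flush that happens when training finishes is not modelled.

```python
from typing import List


def logged_steps(num_batches: int, row_log_interval: int = 50) -> List[int]:
    """Return the batch indices in one epoch at which a step-level metric
    would be sent to the logger, under the assumed interval check."""
    return [
        batch_idx
        for batch_idx in range(num_batches)
        # Assumed condition: log once every `row_log_interval` batches.
        if (batch_idx + 1) % row_log_interval == 0
    ]


# Fewer batches per epoch than the interval: nothing is logged during the epoch.
print(logged_steps(num_batches=30))  # []

# Interval smaller than the number of batches: metrics show up during training.
print(logged_steps(num_batches=30, row_log_interval=10))  # [9, 19, 29]

# More batches than the default interval: logged at every 50th batch.
print(logged_steps(num_batches=120))  # [49, 99]
```

If the first case matches your setup, lowering row_log_interval below the number of train batches (or logging with on_epoch=True, as in your edit) should make the training loss appear while the run is still in progress.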