Wrong steps per epoch while DDP training

🐛 Bug

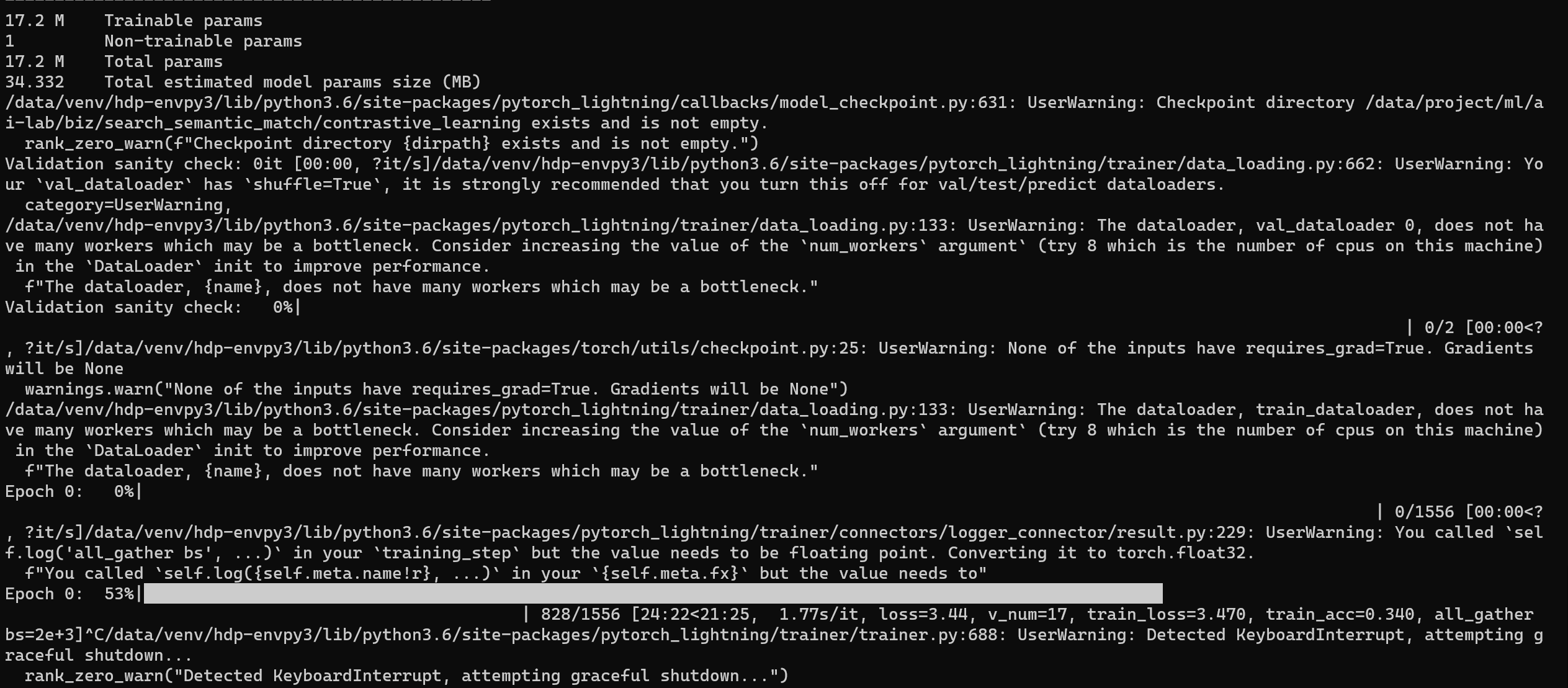

I’m using DDP to train an encoder model on 5 GPU nodes. The batch size is 400 per node, and the model gathers the embeddings from all 5 nodes into a 2000*2000 matrix, then computes the loss from this matrix.

Each node keeps 2623678 train samples and 491939 val samples, so the steps per epoch should be 2623678//400 + 491939//400 = 7788. BUT the progress bar shows 1556 steps per epoch, which seems to come from 2623678//2000 + 491939//2000.
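To double-check the two figures, here is a small sketch of the arithmetic in plain Python. The constant and function names are made up for illustration; only the sample counts, the batch size, and the node count come from the report above.

```python
# Numbers taken from the report; the names are illustrative only.
TRAIN_SAMPLES_PER_NODE = 2_623_678
VAL_SAMPLES_PER_NODE = 491_939
BATCH_SIZE_PER_NODE = 400
NUM_NODES = 5


def steps(num_samples: int, batch_size: int) -> int:
    """Number of full batches (integer division, as in the report)."""
    return num_samples // batch_size


# What the reporter expects: every node iterates over its own full dataset.
expected_steps = (
    steps(TRAIN_SAMPLES_PER_NODE, BATCH_SIZE_PER_NODE)
    + steps(VAL_SAMPLES_PER_NODE, BATCH_SIZE_PER_NODE)
)

# What the progress bar shows: as if the batch size were 400 * 5 = 2000.
global_batch = BATCH_SIZE_PER_NODE * NUM_NODES
observed_steps = (
    steps(TRAIN_SAMPLES_PER_NODE, global_batch)
    + steps(VAL_SAMPLES_PER_NODE, global_batch)
)

print(expected_steps, observed_steps)
```

The factor of roughly 5 between the two numbers matches the number of nodes, which hints that each node's data is being divided across the 5 processes a second time.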

trainer config:

trainer = pl.Trainer(accelerator='gpu', devices=1, auto_select_gpus=True, max_epochs=100,
                     callbacks=callbacks, precision=16,
                     log_every_n_steps=20, strategy="ddp_sharded", num_nodes=5)
trainer.fit(model=self.pl_model, datamodule=self.data_module)

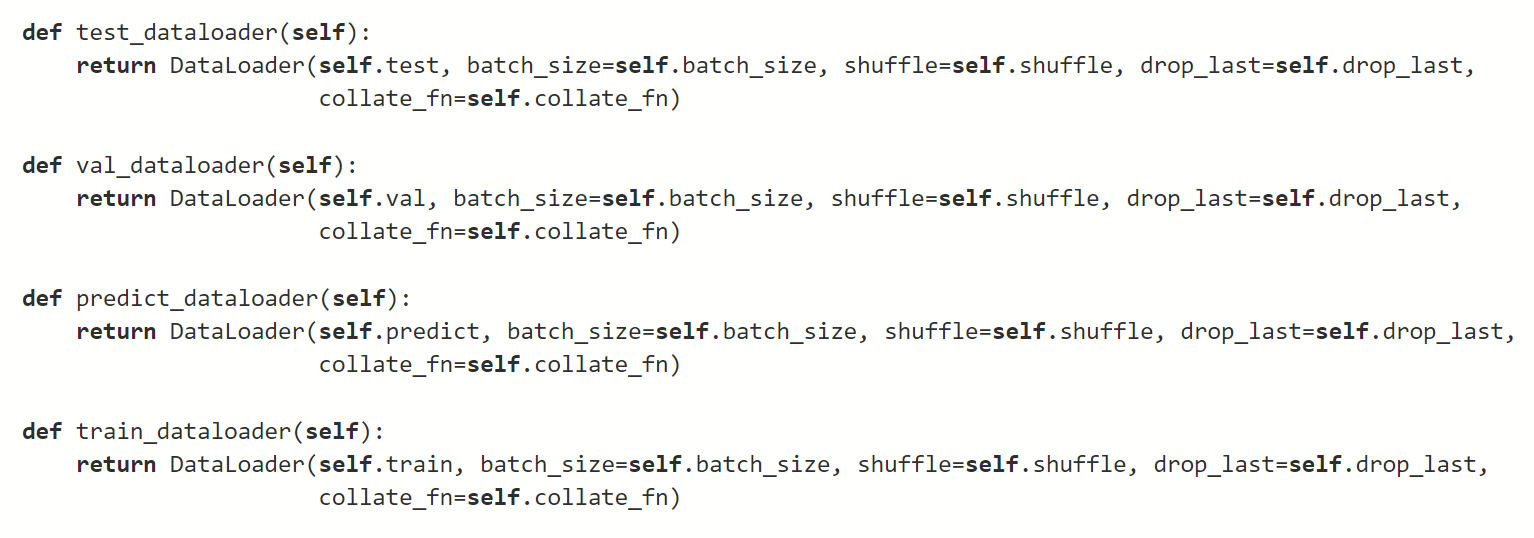

The train dataloader and val dataloader created with batch size 400:

To Reproduce

Expected behavior

Environment

- PyTorch Lightning Version : 1.5.10

- PyTorch Version : 1.9.0+cu102

- Python version : 3.6.8

- OS : Linux

- CUDA/cuDNN version: 11.0

- How you installed PyTorch (conda, pip, source): pip

Additional context

cc @justusschock @kaushikb11 @awaelchli @akihironitta @rohitgr7

Issue Analytics

- State:

- Created a year ago

- Comments:8 (4 by maintainers)

Oh, this is a very important detail!!! In this case, make sure to tell Lightning not to add a distributed sampler, as explained by @rohitgr7. You can set Trainer(replace_sampler_ddp=False). Because of the distributed sampler in PyTorch, you normally wouldn’t need to split the dataset manually, since the split is done on the fly through sampling.
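To see why the distributed sampler shrinks the step count, here is a rough pure-Python sketch of how such a sampler shards a dataset across processes. It imitates the non-shuffled, padded behavior of PyTorch's DistributedSampler but is not the library code. The helper names are hypothetical, and the floor division when counting batches is an assumption chosen because it reproduces the 1556 from the report.

```python
def shard_indices(dataset_len: int, world_size: int, rank: int) -> list:
    """Indices one process would see (no shuffling, padded by wrapping around)."""
    per_rank = -(-dataset_len // world_size)  # ceiling division
    total = per_rank * world_size
    indices = list(range(dataset_len))
    indices += indices[: total - dataset_len]  # pad so every rank gets per_rank items
    return indices[rank:total:world_size]  # strided slice: rank, rank + world_size, ...


def steps_per_rank(dataset_len: int, world_size: int, batch_size: int) -> int:
    """Full batches per process once the dataset is sharded (assumes incomplete batches are dropped)."""
    per_rank = -(-dataset_len // world_size)
    return per_rank // batch_size


# Toy example: 12 samples split over 5 processes.
print(shard_indices(12, 5, rank=0))
print(shard_indices(12, 5, rank=4))

# Numbers from the report: data already split per node, then sharded again 5 ways.
resharded_steps = steps_per_rank(2_623_678, 5, 400) + steps_per_rank(491_939, 5, 400)
print(resharded_steps)
```

Under these assumptions, each process trains on only about a fifth of the data its node holds, which is why disabling the automatically added sampler restores the expected step count when the dataset has already been split by hand.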

Awesome! Glad this was a relatively easy fix 😃 Let us know if there are further questions.