OutOfDirectMemoryError errors causing linkerd to fail

Issue Type:

- Bug report

- Feature request

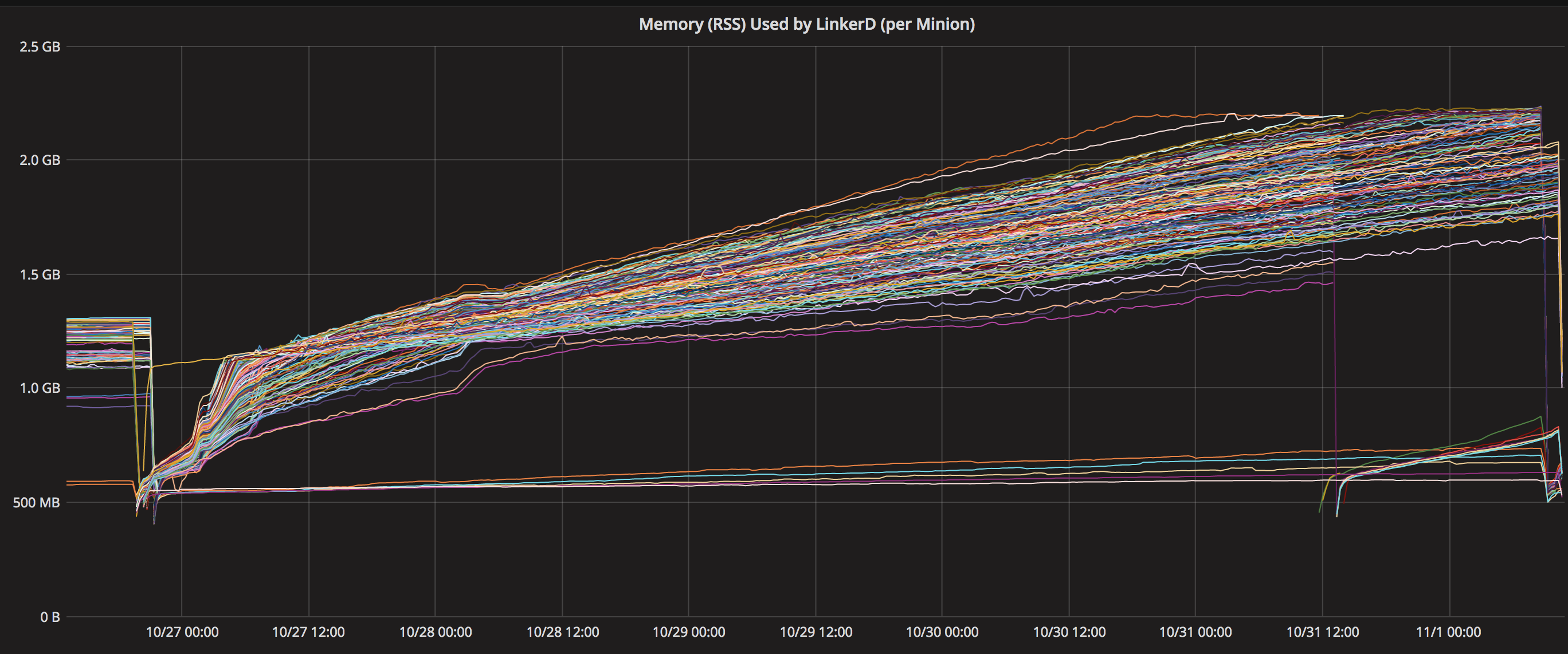

What happened: Over a period of days, linkerd's memory usage climbs until it reaches its maximum, and linkerd then starts throwing OutOfDirectMemoryError errors.

What you expected to happen: OutOfDirectMemoryError errors should not occur.

How to reproduce it (as minimally and precisely as possible):

Run linkerd 1.3.0 as a single instance per host, with namerd on separate hosts, using the environment memory overrides `JVM_HEAP_MAX=1024M` and `JVM_HEAP_MIN=1024M`. Let it run for days while monitoring process memory usage. Usage increases until errors start occurring:

Memory usage chart (new VMs running 1.3.0 since 10/27; the dips at the end are from me restarting linkerd):
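The report doesn't say which tool produced the chart. Because the growth is in direct (off-heap) memory, heap metrics alone won't show it, while the process's resident set size should. A minimal sketch, assuming a Linux host (the report uses CentOS 7.4) and that `VmRSS` from `/proc/<pid>/status` is an acceptable proxy for "process memory usage": it prints one sample per minute for a given pid. The class name `RssSampler` and the one-minute interval are hypothetical.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;

/**
 * Hypothetical helper: samples the resident set size (RSS) of a Linux
 * process by reading the VmRSS line of /proc/<pid>/status.
 */
final class RssSampler {

    /** Returns VmRSS in kB from the lines of a /proc/<pid>/status file, or -1 if absent. */
    static long parseVmRssKb(List<String> statusLines) {
        for (String line : statusLines) {
            // The line looks like "VmRSS:\t  123456 kB"
            if (line.startsWith("VmRSS:")) {
                String[] parts = line.substring("VmRSS:".length()).trim().split("\\s+");
                return Long.parseLong(parts[0]);
            }
        }
        return -1;
    }

    /** Reads /proc/<pid>/status for the given pid and returns its VmRSS in kB. */
    static long readVmRssKb(long pid) throws IOException {
        Path status = Paths.get("/proc", Long.toString(pid), "status");
        return parseVmRssKb(Files.readAllLines(status));
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        long pid = Long.parseLong(args[0]); // pid of the linkerd JVM
        long intervalMs = 60_000;           // assumed sampling interval: once a minute
        while (true) {
            System.out.println(System.currentTimeMillis() + " VmRSS_kB=" + readVmRssKb(pid));
            Thread.sleep(intervalMs);
        }
    }
}
```

Run it as `java RssSampler <linkerd-pid>` and plot the output; a steady upward trend across days, rather than a plateau, is the pattern described here.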

Stack trace:

```
linkerd[2410]: W 1101 14:10:26.714 UTC THREAD42: Unhandled exception in connection with /10.49.154.23:42176, shutting down connection
linkerd[2410]: io.netty.util.internal.OutOfDirectMemoryError: failed to allocate 1048576 byte(s) of direct memory (used: 1037041958, max: 1037959168)
linkerd[2410]: at io.netty.util.internal.PlatformDependent.incrementMemoryCounter(PlatformDependent.java:618)
linkerd[2410]: at io.netty.util.internal.PlatformDependent.allocateDirectNoCleaner(PlatformDependent.java:572)
linkerd[2410]: at io.netty.buffer.PoolArena$DirectArena.allocateDirect(PoolArena.java:764)
linkerd[2410]: at io.netty.buffer.PoolArena$DirectArena.newChunk(PoolArena.java:740)
linkerd[2410]: at io.netty.buffer.PoolArena.allocateNormal(PoolArena.java:244)
linkerd[2410]: at io.netty.buffer.PoolArena.allocate(PoolArena.java:214)
linkerd[2410]: at io.netty.buffer.PoolArena.allocate(PoolArena.java:146)
linkerd[2410]: at io.netty.buffer.PooledByteBufAllocator.newDirectBuffer(PooledByteBufAllocator.java:324)
linkerd[2410]: at io.netty.buffer.AbstractByteBufAllocator.directBuffer(AbstractByteBufAllocator.java:181)
linkerd[2410]: at io.netty.buffer.AbstractByteBufAllocator.directBuffer(AbstractByteBufAllocator.java:172)
linkerd[2410]: at io.netty.buffer.AbstractByteBufAllocator.ioBuffer(AbstractByteBufAllocator.java:133)
linkerd[2410]: at io.netty.channel.DefaultMaxMessagesRecvByteBufAllocator$MaxMessageHandle.allocate(DefaultMaxMessagesRecvByteBufAllocator.java:80)
linkerd[2410]: at io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:122)
linkerd[2410]: at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:645)
linkerd[2410]: at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:580)
linkerd[2410]: at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:497)
linkerd[2410]: at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:459)
linkerd[2410]: at io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:858)
linkerd[2410]: at java.util.concurrent.ThreadPoolExecutor.runWorker(Unknown Source)
linkerd[2410]: at java.util.concurrent.ThreadPoolExecutor$Worker.run(Unknown Source)
linkerd[2410]: at com.twitter.finagle.util.BlockingTimeTrackingThreadFactory$$anon$1.run(BlockingTimeTrackingThreadFactory.scala:23)
linkerd[2410]: at java.lang.Thread.run(Unknown Source)
```
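The numbers in the error line up with a simple counter check: the pool asked for a new 1,048,576-byte chunk, but only 1,037,959,168 - 1,037,041,958 = 917,210 bytes remained under the limit. The limit itself is 989.875 MiB, just under the 1024M heap setting; my understanding is that Netty defaults to the JVM's maximum direct memory, which HotSpot in turn sizes from the maximum heap unless `-XX:MaxDirectMemorySize` is set, but treat that as an assumption to verify. The sketch below is a simplified model of the check performed in `PlatformDependent.incrementMemoryCounter` (named in the trace), not Netty's actual code; the class `DirectMemoryBudget` is hypothetical. It also shows why leaked buffers end in this error: if allocations are never matched by releases, the counter only climbs.

```java
/**
 * Hypothetical model of a direct-memory budget: an allocation fails when the
 * bytes already in use plus the requested size would exceed the limit.
 */
final class DirectMemoryBudget {
    private final long maxBytes;
    private long usedBytes;

    DirectMemoryBudget(long maxBytes, long usedBytes) {
        this.maxBytes = maxBytes;
        this.usedBytes = usedBytes;
    }

    /** Bytes still available before the limit is reached. */
    long headroom() {
        return maxBytes - usedBytes;
    }

    /** Reserves capacity bytes, or throws without changing the counter. */
    void reserve(long capacity) {
        long newUsed = usedBytes + capacity;
        if (newUsed > maxBytes) {
            throw new IllegalStateException(String.format(
                "failed to allocate %d byte(s) of direct memory (used: %d, max: %d)",
                capacity, usedBytes, maxBytes));
        }
        usedBytes = newUsed;
    }

    /** Returns capacity bytes to the budget, as releasing a buffer would. */
    void release(long capacity) {
        usedBytes -= capacity;
    }

    public static void main(String[] args) {
        // Figures copied from the OutOfDirectMemoryError line in the trace.
        DirectMemoryBudget budget = new DirectMemoryBudget(1_037_959_168L, 1_037_041_958L);
        System.out.println("headroom: " + budget.headroom() + " bytes");
        System.out.println("limit: " + budget.maxBytes / (1024.0 * 1024.0) + " MiB");
        try {
            budget.reserve(1_048_576L); // a 1 MiB chunk does not fit
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
    }
}
```

With a leak, the fix is to release the buffers; raising the limit would only move the point at which the same error appears.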

Anything else we need to know?:

Environment:

- linkerd/namerd version, config files: 1.3.0 linkerd and namerd. linkerd config:

```yaml
admin:
  port: 9990
  ip: 0.0.0.0
routers:
- protocol: http
  label: microservices_prod
  dstPrefix: /http
  interpreter:
    kind: io.l5d.mesh
    dst: /$/inet/cwl-mesos-masters.service.consul/4182
    experimental: true
    root: /microservices-prod
  identifier:
  - kind: io.l5d.path
    segments: 3
  - kind: io.l5d.path
    segments: 2
  - kind: io.l5d.path
    segments: 1
  servers:
  - port: 4170
    ip: 0.0.0.0
  client:
    kind: io.l5d.global
    loadBalancer:
      kind: ewma
    failureAccrual:
      kind: io.l5d.consecutiveFailures
      failures: 5
    requeueBudget:
      minRetriesPerSec: 10
      percentCanRetry: 0.2
      ttlSecs: 10
  service:
    kind: io.l5d.global
    totalTimeoutMs: 10000
    responseClassifier:
      kind: io.l5d.http.retryableRead5XX
    retries:
      budget:
        minRetriesPerSec: 10
        percentCanRetry: 0.2
        ttlSecs: 10
- protocol: http
  label: proxy_by_host
  dstPrefix: /http
  interpreter:
    kind: io.l5d.mesh
    dst: /$/inet/cwl-mesos-masters.service.consul/4182
    experimental: true
    root: /default
  identifier:
  - kind: io.l5d.header.token
    header: Host
  servers:
  - port: 4141
    ip: 0.0.0.0
  - port: 80
    ip: 0.0.0.0
- protocol: http
  label: proxy_by_path3
  dstPrefix: /http
  interpreter:
    kind: io.l5d.mesh
    dst: /$/inet/cwl-mesos-masters.service.consul/4182
    experimental: true
    root: /default
  identifier:
  - kind: io.l5d.path
    segments: 3
  servers:
  - port: 4153
    ip: 0.0.0.0
- protocol: http
  label: proxy_by_path2
  dstPrefix: /http
  interpreter:
    kind: io.l5d.mesh
    dst: /$/inet/cwl-mesos-masters.service.consul/4182
    experimental: true
    root: /default
  identifier:
  - kind: io.l5d.path
    segments: 2
  servers:
  - port: 4152
    ip: 0.0.0.0
- protocol: http
  label: proxy_by_path1
  dstPrefix: /http
  interpreter:
    kind: io.l5d.mesh
    dst: /$/inet/cwl-mesos-masters.service.consul/4182
    experimental: true
    root: /default
  identifier:
  - kind: io.l5d.path
    segments: 1
  servers:
  - port: 4151
    ip: 0.0.0.0
telemetry:
- kind: io.l5d.influxdb
```

- Platform, version, and config files (Kubernetes, DC/OS, etc.): CentOS Linux release 7.4.1708 (Core)

- Cloud provider or hardware configuration: Google Cloud
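The `microservices_prod` router lists three `io.l5d.path` identifiers (3, 2, then 1 segments). A hypothetical model of how that chain turns a request path into a name under `dstPrefix: /http`, assuming, per my reading of the linkerd 1.x docs, that identifiers in a list are tried in order, that a path identifier fails when the path has fewer segments than configured, and that the query string is ignored. The class and method names are illustrative only, and options such as `consume` are not modeled.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Optional;

/** Hypothetical model of a chain of path identifiers (segments 3, 2, 1). */
final class PathIdentifierChain {

    /** Splits a request path into non-empty segments, dropping any query string. */
    static List<String> segments(String requestPath) {
        int q = requestPath.indexOf('?');
        String path = q >= 0 ? requestPath.substring(0, q) : requestPath;
        List<String> out = new ArrayList<>();
        for (String s : path.split("/")) {
            if (!s.isEmpty()) out.add(s);
        }
        return out;
    }

    /** One path identifier: succeeds only if the path has at least n segments. */
    static Optional<String> identify(String dstPrefix, int n, String requestPath) {
        List<String> segs = segments(requestPath);
        if (segs.size() < n) return Optional.empty();
        return Optional.of(dstPrefix + "/" + String.join("/", segs.subList(0, n)));
    }

    /** Tries each segment count in order and returns the first name produced. */
    static Optional<String> identifyChain(String dstPrefix, List<Integer> counts, String requestPath) {
        for (int n : counts) {
            Optional<String> name = identify(dstPrefix, n, requestPath);
            if (name.isPresent()) return name;
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        List<Integer> chain = Arrays.asList(3, 2, 1);
        System.out.println(identifyChain("/http", chain, "/users/v1/list/42"));
        System.out.println(identifyChain("/http", chain, "/users/v1"));
        System.out.println(identifyChain("/http", chain, "/"));
    }
}
```

Under these assumptions, `/users/v1/list/42` becomes `/http/users/v1/list`, `/users/v1` falls back to `/http/users/v1`, and `/` matches nothing.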


@DukeyToo We have a fix up at https://github.com/linkerd/linkerd/pull/1711. Is it possible to test this branch against your use case?

Quick update for folks watching this issue: we have reproduced a leak and are actively working on a fix. To confirm that the leak you are seeing is the same one we have identified, look at your `open_streams` metrics. If they grow over time, that is the leak.
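Because `open_streams` also rises and falls with traffic, comparing two readings can mislead; fitting a least-squares line to periodic samples and checking the slope is a steadier test of "grows over time". A minimal sketch, assuming you already collect timestamped `open_streams` samples (for example by scraping the admin interface on port 9990; the exact metric key per router isn't given in this thread). `GaugeTrend`, the `minSlope` threshold, and the sample values in `main` are hypothetical.

```java
/**
 * Hypothetical helper: decides whether a gauge such as open_streams is
 * trending upward by fitting a least-squares line to (time, value) samples.
 */
final class GaugeTrend {

    /** Least-squares slope of values against times (value units per time unit). */
    static double slope(double[] times, double[] values) {
        if (times.length != values.length || times.length < 2) {
            throw new IllegalArgumentException("need at least two paired samples");
        }
        int n = times.length;
        double meanT = 0, meanV = 0;
        for (int i = 0; i < n; i++) {
            meanT += times[i];
            meanV += values[i];
        }
        meanT /= n;
        meanV /= n;
        double cov = 0, varT = 0;
        for (int i = 0; i < n; i++) {
            double dt = times[i] - meanT;
            cov += dt * (values[i] - meanV);
            varT += dt * dt;
        }
        if (varT == 0) throw new IllegalArgumentException("sample times must differ");
        return cov / varT;
    }

    /** True if the gauge grows faster than minSlope per time unit. */
    static boolean isGrowing(double[] times, double[] values, double minSlope) {
        return slope(times, values) > minSlope;
    }

    public static void main(String[] args) {
        // Hypothetical hourly samples of open_streams from one linkerd instance.
        double[] hours = {0, 1, 2, 3, 4, 5};
        double[] openStreams = {120, 135, 149, 166, 180, 197};
        System.out.printf("slope = %.2f streams/hour, growing = %b%n",
            slope(hours, openStreams), isGrowing(hours, openStreams, 1.0));
    }
}
```

Pick `minSlope` and the sampling window to cover at least a full daily traffic cycle, so that ordinary peaks are not mistaken for a leak.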