[Feature] test.todo or test.manual

- testcase – a set of actions to perform to verify some functionality
- test – an automated testcase

In our delivery process, all our testcases are stored as code:

- `test` – an automated testcase
- `test.skip` – a broken or outdated testcase (not a test!) that must not be checked at all
- `test.todo` – a testcase that is valid and must be checked before release, but is not yet implemented as an autotest

Let's say we have two testcases:

`test('testcase one')`

`test.todo('testcase two')`

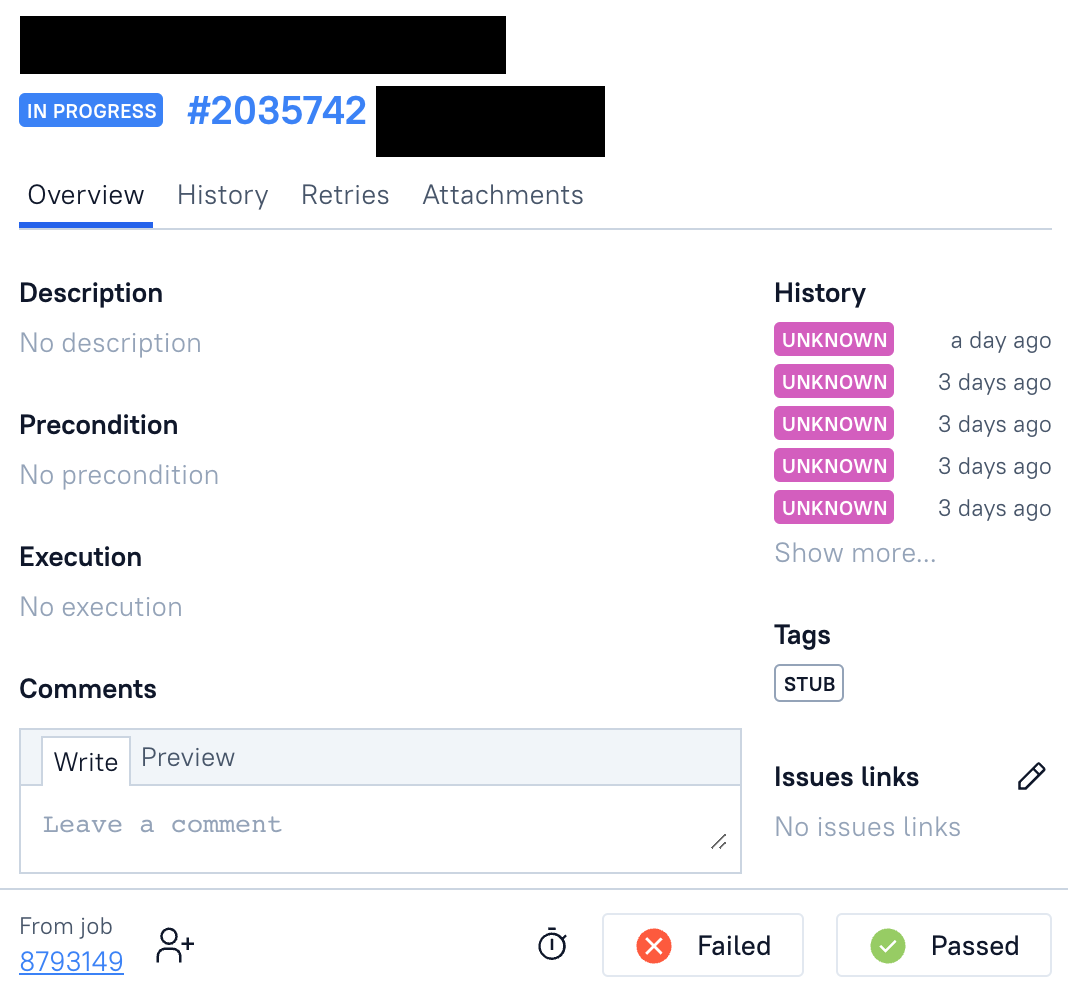

the second one will be uploaded to Allure EE as inprogress testcase, which must be checked. QA guy could mark it as Passed or Failed manually:

So our QA process looks like this:

- Run all tests (done automatically by CI on every pull request) → generate a report → upload the report to Allure as a test launch

- Inspect the test launch, which contains three groups of testcases: 1) passed, 2) failed, 3) not implemented yet

- Manually run all failed and not-yet-implemented testcases (within the same test launch!)

- Close the test launch

- Merge the pull request if the launch is successful → run CD and make a release
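The triage step above boils down to a simple rule: everything that did not pass automatically goes to QA, and the launch succeeds only if QA passes all of it. A minimal sketch of that rule, assuming a hypothetical `TestcaseResult` shape and hypothetical `manualQueue` / `launchSucceeded` helpers (none of these names come from Allure or any test runner):

```typescript
// Hypothetical model of the launch triage; names are illustrative only,
// not part of Allure EE or any test runner API.
type AutoStatus = "passed" | "failed" | "todo";
type ManualVerdict = "passed" | "failed";

interface TestcaseResult {
  title: string;
  status: AutoStatus; // outcome recorded by CI ("todo" = no autotest yet)
}

// Testcases QA must run by hand within the same launch:
// everything that failed automatically or is not implemented yet.
function manualQueue(results: TestcaseResult[]): string[] {
  return results.filter((r) => r.status !== "passed").map((r) => r.title);
}

// The launch counts as successful (so the PR may be merged) only when
// every testcase in the manual queue has been marked Passed by QA.
function launchSucceeded(
  results: TestcaseResult[],
  verdicts: Map<string, ManualVerdict>,
): boolean {
  return manualQueue(results).every((title) => verdicts.get(title) === "passed");
}

// Usage with the two testcases from the example above.
const launch: TestcaseResult[] = [
  { title: "testcase one", status: "passed" },
  { title: "testcase two", status: "todo" },
];
const queue = manualQueue(launch); // ["testcase two"]
const ok = launchSucceeded(
  launch,
  new Map<string, ManualVerdict>([["testcase two", "passed"]]),
); // true
```

A testcase that has no manual verdict yet counts as not passed, so a launch cannot be closed as successful while any manual check is still outstanding.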

What do you think about using autotests as the source of truth for all testcases, including those that cannot be automated (e.g. hover states or some rare browser)?

What do you think about a `test.todo` or `test.manual` annotation for such cases?

Jest and CodeceptJS already have this. However, Jest's `test.todo` does not accept a callback, which is a problem because we cannot set `allure.description` anywhere except inside the callback. `allure.description` matters to QA because it holds the body of the testcase (a human-readable set of actions to perform).
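To make the request concrete, here is a sketch of what a callback-accepting manual declaration could record. It is not the API of Jest, CodeceptJS, Playwright or allure-js; `manual`, `ManualContext` and `registry` are hypothetical names, and the callback is assumed to run only to collect metadata such as the description, never to perform checks:

```typescript
// Hypothetical sketch of a callback-accepting manual testcase declaration.
// `manual`, `ManualContext` and `registry` are illustrative names only.
interface ManualContext {
  description(text: string): void;
}

interface ManualTestcase {
  title: string;
  status: "todo"; // reported as not implemented; QA checks it by hand
  description?: string;
}

const registry: ManualTestcase[] = [];

function manual(title: string, body?: (ctx: ManualContext) => void): void {
  const entry: ManualTestcase = { title, status: "todo" };
  // The callback runs only to collect metadata such as the human-readable
  // steps; no automated checks happen here.
  body?.({
    description: (text) => {
      entry.description = text;
    },
  });
  registry.push(entry);
}

manual("testcase two", (t) => {
  t.description("1. Open the page\n2. Hover the button\n3. Check the hover state");
});
```

The point of the callback is that the testcase body (the steps QA will follow) lives next to the declaration, which is exactly what a callback-less `test.todo(title)` cannot express.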



I would suggest introducing a convention for annotating those tests using `fixme`, with something like a tag, an annotation, or an attachment.

Closing as per the above; please feel free to open a new issue if this does not cover your use case.