MiniProfiler silently fails when MemoryCache is used with a SizeLimit

In a new .NET Core 3.1 web project, I had the following code in my dependency injection setup:

```csharp
services.AddMemoryCache(options =>
{
    options.SizeLimit = 1024;
});

services.AddMiniProfiler(options =>
{
    // Path to use for profiler URLs, default is /mini-profiler-resources
    options.RouteBasePath = "/mini-profiler";
});
```

The rest of the setup was as described in the documentation.

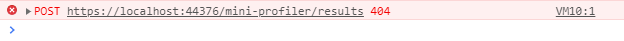

This causes all calls to miniprofiler to fail:

I traced the issue back to MemoryCacheStorage#158, which always fails to store the current MiniProfiler instance. Any subsequent calls that need to fetch it from the storage fail.

Unfortunately, the exception was swallowed somewhere and I couldn’t see its details, but I can make an educated guess: cache entries must have a size set when `MemoryCache` is configured with a size limit.
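The guess matches how `Microsoft.Extensions.Caching.Memory` behaves: once `SizeLimit` is set, committing an entry without a `Size` throws an `InvalidOperationException`. A minimal console sketch to check this in isolation, assuming a project that references the `Microsoft.Extensions.Caching.Memory` package (the `SizeLimitDemo` class, the `"profiler"` key, and the payload are hypothetical):

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;

public static class SizeLimitDemo
{
    // True if storing an entry WITHOUT a Size throws while SizeLimit is set.
    public static bool UnsizedEntryThrows()
    {
        using var cache = new MemoryCache(new MemoryCacheOptions { SizeLimit = 1024 });
        try
        {
            cache.Set("profiler", "payload"); // no Size on this entry
            return false;
        }
        catch (InvalidOperationException)
        {
            return true;
        }
    }

    // True if the same entry is stored once a Size is supplied.
    public static bool SizedEntryIsStored()
    {
        using var cache = new MemoryCache(new MemoryCacheOptions { SizeLimit = 1024 });
        cache.Set("profiler", "payload", new MemoryCacheEntryOptions { Size = 1 });
        return cache.TryGetValue("profiler", out _);
    }

    public static void Main()
    {
        Console.WriteLine($"Unsized entry throws: {UnsizedEntryThrows()}");
        Console.WriteLine($"Sized entry stored: {SizedEntryIsStored()}");
    }
}
```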

Removing the `SizeLimit` option on my `MemoryCache` resolved the problem. I have not yet found a workaround that lets me keep the `SizeLimit`.
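One possible workaround, not suggested in the thread and untested here: give MiniProfiler its own unbounded `MemoryCache`, so it never writes unsized entries into the size-limited one. This sketch assumes MiniProfiler.AspNetCore exposes a `MemoryCacheStorage(IMemoryCache, TimeSpan)` constructor and an `options.Storage` property; verify both against the version you use. The `ProfilerSetup` class and the 30-minute duration are arbitrary choices for illustration.

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;
using Microsoft.Extensions.DependencyInjection;
using StackExchange.Profiling.Storage;

public static class ProfilerSetup
{
    public static void ConfigureServices(IServiceCollection services)
    {
        // The application-wide cache keeps its size limit.
        services.AddMemoryCache(options =>
        {
            options.SizeLimit = 1024;
        });

        services.AddMiniProfiler(options =>
        {
            options.RouteBasePath = "/mini-profiler";

            // Assumption: a dedicated cache with no SizeLimit, used only for
            // profiler results, so the shared size-limited cache is never touched.
            options.Storage = new MemoryCacheStorage(
                new MemoryCache(new MemoryCacheOptions()),
                TimeSpan.FromMinutes(30));
        });
    }
}
```

The trade-off is that profiler results no longer count toward the shared limit at all, so their memory is bounded only by the cache duration.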

Most likely, this issue is very much related: #433

Is this a known limitation, or am I doing something wrong? For now, I’ll disable the size limit.

Thanks!

Issue Analytics

- Created 3 years ago
- Comments: 9 (5 by maintainers)


No blame assumed at all, just always thinking out loud in case it spurs more ideas! I agree that at least mentioning this in the docs is a good idea: 9512d29588ebc7f1c6a61c38540ddeff452f23ad adds a note and a link to this issue in the config section (the best place I can think of), but if you’ve got other ideas for improvements there, I’m all ears!

It’s really any consumer of `MemoryCache`, so I’m not sure what we could do here. Ultimately, I don’t really agree with how `MemoryCache` exposed this… it should be a `LimitedMemoryCache` or something. The way it’s done, an object must specify a size, in an overall attempt to limit memory. But in an OO language you don’t know how big your object is. We could loop through and make a “best guess”, I guess (eating some CPU to do that), basically taking the size of a timing, custom timing, commands, all strings, etc. down the ref chain. And we’d have to maintain that. While .NET has the ability to do this by crawling the pointer tree (see ClrMd as an example), it’s not done because it’s expensive.
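To make the cost of such a “best guess” concrete, here is a rough sketch of an estimator that walks a profiler tree and charges each node, command, and string an approximate size. `TimingNode`, `SizeEstimator`, and the 24-byte per-object overhead are hypothetical simplifications, not MiniProfiler’s real `Timing`/`CustomTiming` types or the exact CLR object layout.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical, simplified stand-in for a profiler result tree.
public sealed class TimingNode
{
    public string Name { get; }
    public List<string> Commands { get; } = new List<string>();
    public List<TimingNode> Children { get; } = new List<TimingNode>();

    public TimingNode(string name) => Name = name;
}

public static class SizeEstimator
{
    // Assumed flat per-object overhead for this sketch (not an exact CLR figure).
    private const long ObjectOverhead = 24;

    // Strings are UTF-16: 2 bytes per char plus the assumed object overhead.
    private static long StringSize(string s) =>
        s == null ? 0 : ObjectOverhead + 2L * s.Length;

    // Walks the tree with an explicit stack and sums the approximate sizes.
    public static long Estimate(TimingNode root)
    {
        long total = 0;
        var pending = new Stack<TimingNode>();
        pending.Push(root);
        while (pending.Count > 0)
        {
            var node = pending.Pop();
            total += ObjectOverhead + StringSize(node.Name);
            foreach (var command in node.Commands)
                total += StringSize(command);
            foreach (var child in node.Children)
                pending.Push(child);
        }
        return total;
    }
}

public static class Program
{
    public static void Main()
    {
        var root = new TimingNode("GET /home");
        root.Commands.Add("SELECT * FROM Users");
        root.Children.Add(new TimingNode("Render view"));
        Console.WriteLine($"Estimated size: {SizeEstimator.Estimate(root)} bytes");
    }
}
```

Even this toy version ignores list and dictionary overhead, and every field added to the real types would need a matching line here, which is the maintenance burden described above.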

The memory size limit on `MemoryCache` is fundamentally flawed in that it’s a cap based on a size given by the inserter, but that size is a) whatever they say it is, and b) not guaranteed to change when the object does. For example, I can add an object, null out its ref tree, and the cache remains “full” because of a number we gave it. It’s a good thought, but I’ve never seen the limit used in practice beyond a fairly simple application (without many pieces using it).
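A small sketch of the “full because of a number we gave it” effect, assuming the `Microsoft.Extensions.Caching.Memory` package (`DeclaredSizeDemo` and the keys are hypothetical). The first entry is declared as 1024 units, its actual data is then cleared, and a 1-unit insert is still rejected because the accounting only sees declared sizes. The rejected insert also triggers a background compaction, which may evict `big` afterwards.

```csharp
using System;
using System.Collections.Generic;
using Microsoft.Extensions.Caching.Memory;

public static class DeclaredSizeDemo
{
    // True if a tiny entry is rejected even though the first entry's
    // real payload has been emptied.
    public static bool SecondInsertRejected()
    {
        using var cache = new MemoryCache(new MemoryCacheOptions { SizeLimit = 1024 });

        // Store roughly 1 MB of data and declare it as 1024 units, filling the limit.
        var payload = new List<byte[]> { new byte[1_000_000] };
        cache.Set("big", payload, new MemoryCacheEntryOptions { Size = 1024 });

        // Drop the actual data; the cache still counts the declared 1024 units.
        payload.Clear();

        // 1024 + 1 exceeds SizeLimit, so this entry is not added.
        cache.Set("small", "x", new MemoryCacheEntryOptions { Size = 1 });
        return !cache.TryGetValue("small", out _);
    }

    public static void Main()
    {
        Console.WriteLine($"Second insert rejected: {SecondInsertRejected()}");
    }
}
```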