[BUG] Using MlflowClient.get_latest_versions with an older server instance causes 404

Willingness to contribute

The MLflow Community encourages bug fix contributions. Would you or another member of your organization be willing to contribute a fix for this bug to the MLflow code base?

- Yes. I can contribute a fix for this bug independently.

- Yes. I would be willing to contribute a fix for this bug with guidance from the MLflow community.

- No. I cannot contribute a bug fix at this time.

System information

- Have I written custom code (as opposed to using a stock example script provided in MLflow): No

- OS Platform and Distribution (e.g., Linux Ubuntu 16.04): SUSE Linux Enterprise Server 12 SP2

- MLflow installed from (source or binary): Binary

- MLflow version (run mlflow --version): 1.22 for the client, 1.11.0 for the server

- Python version: 3.6.12

- npm version, if running the dev UI:

- Exact command to reproduce:

MlflowClient().get_latest_versions("SOME_REGISTERED_MODEL")

Describe the problem

We have MLflow 1.11.0 installed on a server and version 1.22 on the client. When the client is connected to the server and calls get_latest_versions, the following message is shown:

MlflowException: API request to endpoint /api/2.0/mlflow/registered-models/get-latest-versions failed with error code 404 != 200. Response body: '<!DOCTYPE HTML PUBLIC "-//W3C//DTD HTML 3.2 Final//EN">

<title>404 Not Found</title>

<h1>Not Found</h1>

<p>The requested URL was not found on the server. If you entered the URL manually please check your spelling and try again.</p>

The expected result would have been the latest version information of the model.

Code to reproduce issue

Prerequisites:

- Run mlflow version 1.11.0 on a server in a conda environment

- Connect a client to the mlflow server using mlflow.set_tracking_uri()

- Create an experiment on the server using MlflowClient.create_experiment

Now run:

import mlflow

from mlflow.tracking import MlflowClient

mlflow.set_tracking_uri("TRACKING_URI")

client = MlflowClient()

client.get_latest_versions("EXPERIMENT_NAME")

Other info / logs

This problem seems to have been introduced with #4999. In that PR, support for POST calls on get-latest-versions was added.

This by itself is not a problem: if the POST call is not available on the server, the code from that PR catches the ENDPOINT_NOT_FOUND exception and falls back to a GET call.

The problem arises, however, because the client calls /mlflow/registered-models/get-latest-versions instead of the usual /preview/mlflow/registered-models/get-latest-versions. As a result, the old server does not raise an ENDPOINT_NOT_FOUND exception but returns a plain 404 Not Found. That error is not caught, so the program never tries the GET call and crashes instead.

It seems to me that the omission of preview in the URL is the root cause of the bug (see: https://github.com/stevenchen-db/mlflow/blob/9bbbb0c28d285476e0f3e2a81ecfbf577d1b03ca/mlflow/protos/model_registry.proto#L133), but I’m not sure if that is on purpose or not. If the omission of preview is on purpose, some other way of exception handling could be implemented to avoid getting 404-errors when working with older servers that do not support POST-calls.

Since the reason behind the missing preview part is not entirely clear to me, I did not want to provide a bug fix immediately. If the maintainers could provide some guidance on which approach to fix this bug would be best for this project, I’d be happy to help implement the change.
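To make the failure mode described above concrete, here is a minimal, self-contained simulation. It is not MLflow's actual code: `old_server`, `call`, `strict_fallback`, `tolerant_fallback` and `RestError` are hypothetical names, and the `/api/2.0` prefix on the preview route is an assumption based on the error message. The strict variant only falls back on a JSON `ENDPOINT_NOT_FOUND` error, as the report describes; the tolerant variant is one possible alternative that also falls back on a bare 404 or 405.

```python
import json

# POST route taken from the error message; GET route assumed to share the /api/2.0 prefix.
ENDPOINTS = [
    ("/api/2.0/mlflow/registered-models/get-latest-versions", "POST"),
    ("/api/2.0/preview/mlflow/registered-models/get-latest-versions", "GET"),
]


class RestError(Exception):
    """Hypothetical error type carrying the HTTP status and optional JSON error code."""

    def __init__(self, status_code, error_code=None):
        super().__init__(f"request failed with error code {status_code} != 200")
        self.status_code = status_code
        self.error_code = error_code


def old_server(path, method):
    """Stand-in for an old server that only knows the GET /preview route."""
    if (path, method) == ENDPOINTS[1]:
        return 200, json.dumps({"model_versions": [{"name": "m", "version": "3"}]})
    # Unknown routes get a plain HTML 404 from the web framework, not a JSON error.
    return 404, "<title>404 Not Found</title>"


def call(server, path, method):
    status, body = server(path, method)
    if status == 200:
        return json.loads(body)
    try:
        error_code = json.loads(body).get("error_code")
    except ValueError:
        error_code = None  # HTML body: there is no error code to inspect
    raise RestError(status, error_code)


def strict_fallback(server):
    """Fall back to the next endpoint only on a JSON ENDPOINT_NOT_FOUND error."""
    for i, (path, method) in enumerate(ENDPOINTS):
        try:
            return call(server, path, method)
        except RestError as e:
            is_last = i == len(ENDPOINTS) - 1
            if e.error_code != "ENDPOINT_NOT_FOUND" or is_last:
                raise


def tolerant_fallback(server):
    """Also fall back when the server answers with a bare 404 or 405."""
    for i, (path, method) in enumerate(ENDPOINTS):
        try:
            return call(server, path, method)
        except RestError as e:
            is_last = i == len(ENDPOINTS) - 1
            retryable = e.error_code == "ENDPOINT_NOT_FOUND" or e.status_code in (404, 405)
            if not retryable or is_last:
                raise
```

Against `old_server`, `strict_fallback` re-raises the 404 from the POST attempt, while `tolerant_fallback` moves on to the GET route and returns the model versions. A real client would still need to tell a missing route apart from a missing model, so treating every 404 as retryable is a simplification.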

What component(s), interfaces, languages, and integrations does this bug affect?

Components

- area/artifacts: Artifact stores and artifact logging

- area/build: Build and test infrastructure for MLflow

- area/docs: MLflow documentation pages

- area/examples: Example code

- area/model-registry: Model Registry service, APIs, and the fluent client calls for Model Registry

- area/models: MLmodel format, model serialization/deserialization, flavors

- area/projects: MLproject format, project running backends

- area/scoring: MLflow Model server, model deployment tools, Spark UDFs

- area/server-infra: MLflow Tracking server backend

- area/tracking: Tracking Service, tracking client APIs, autologging

Interface

- area/uiux: Front-end, user experience, plotting, JavaScript, JavaScript dev server

- area/docker: Docker use across MLflow’s components, such as MLflow Projects and MLflow Models

- area/sqlalchemy: Use of SQLAlchemy in the Tracking Service or Model Registry

- area/windows: Windows support

Language

- language/r: R APIs and clients

- language/java: Java APIs and clients

- language/new: Proposals for new client languages

Integrations

- integrations/azure: Azure and Azure ML integrations

- integrations/sagemaker: SageMaker integrations

- integrations/databricks: Databricks integrations

Issue Analytics

- State:

- Created 2 years ago

- Comments: 8 (1 by maintainers)

Top Related StackOverflow Question


Reporting the same issue with 1.23.1. To be more precise, it yields a 405 HTTP response code: Method Not Allowed.

A solution to the issue is that both the python and mlflow versions of client and server need to be the same.

It would be good for the future for a mismatch in versions to be allowed; this would facilitate MLflow interaction across different projects. Otherwise it is quite troublesome to maintain all projects with different developers at the same time.

Needless to say, the versioning issue also affects cloudpickle, so either way, in the current state of things, the version overlap appears to be required.

I came across a similar issue for the `` endpoint.

MLflow server version: 1.21.0 (docs: https://www.mlflow.org/docs/1.21.0/rest-api.html#get-latest-modelversions)

MLflow client version: 1.24.0 (docs: https://www.mlflow.org/docs/1.24.0/rest-api.html#get-latest-modelversions)

The obvious first: the request method changed from

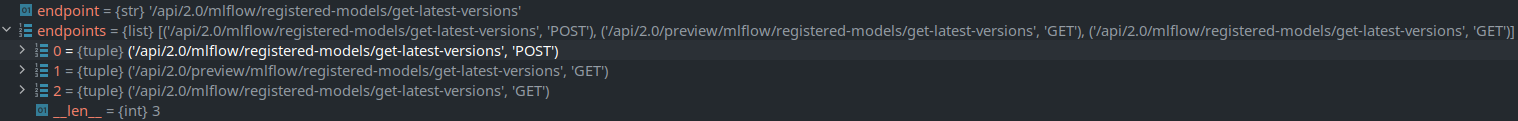

GETtoPOST. Apparently, the MLflow team tries to accommodate for these exact changes here: https://github.com/mlflow/mlflow/blob/e78d6e90b0011b4ad33aa9cda84e8e0c7d202349/mlflow/utils/rest_utils.py#L265-L270 which does not work because if the first method (POST) fails, it will break out of the loop and never try to do theGETmethod later.While debugging, I don’t get past the first entry and the exception is reraised:

A current workaround is to down-/upgrade either client or server until the REST API matches. To the MLflow team (not meant to be offensive):