InstancedMesh uses an incorrect normal matrix

As discussed here.

The vertex normal should be transformed by the normal matrix computed from the instanceMatrix.

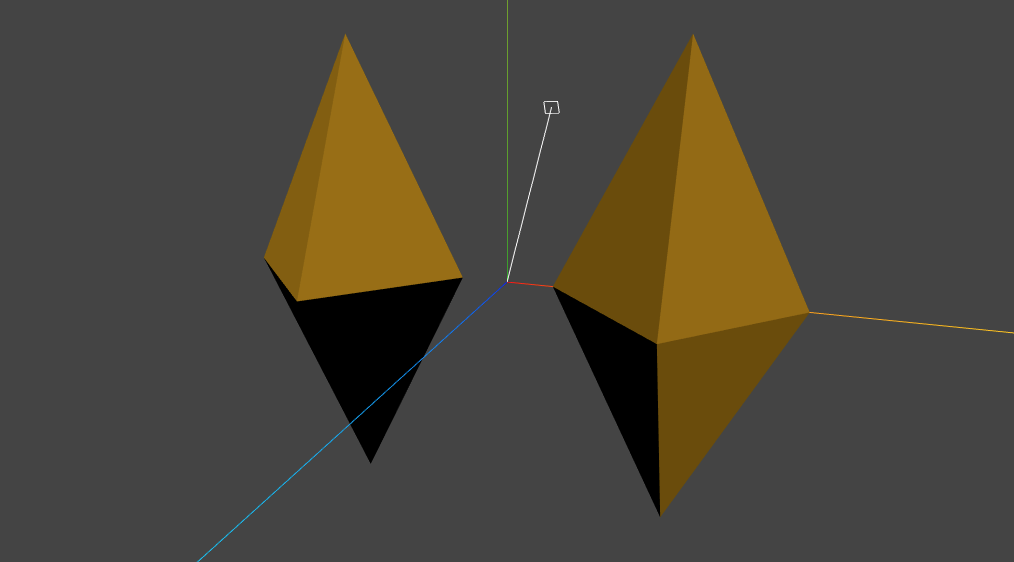

In this image, the mesh on the left is an InstancedMesh with a single instance, and the mesh on the right is a non-instanced Mesh. They should have identical shading.
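The sketch below uses pure Python to show why transforming a normal by the same matrix as the positions goes wrong. It assumes a single instance whose 3x3 transform is a non-uniform scale of (2, 1, 1). The helper names and numbers are illustrative, not three.js code.

The naively transformed normal is no longer perpendicular to the transformed tangent. The inverse-transpose of the matrix (the normal matrix) keeps it perpendicular:

```python
# Illustrative only: 3x3 matrices are lists of rows, vectors are lists.

def mat_vec(m, v):
    """Multiply a 3x3 matrix by a column vector."""
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def transpose(m):
    return [[m[j][i] for j in range(3)] for i in range(3)]

def inverse(m):
    """3x3 inverse via the adjugate; assumes m is invertible."""
    a, b, c = m[0]
    d, e, f = m[1]
    g, h, i = m[2]
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    adj = [[e * i - f * h, c * h - b * i, b * f - c * e],
           [f * g - d * i, a * i - c * g, c * d - a * f],
           [d * h - e * g, b * g - a * h, a * e - b * d]]
    return [[x / det for x in row] for row in adj]

# Hypothetical instance transform with non-uniform scale (2, 1, 1).
instance = [[2.0, 0.0, 0.0],
            [0.0, 1.0, 0.0],
            [0.0, 0.0, 1.0]]

tangent = [1.0, -1.0, 0.0]  # lies in the surface
normal = [1.0, 1.0, 0.0]    # perpendicular to the tangent in object space

world_tangent = mat_vec(instance, tangent)       # tangents use the matrix itself
naive_normal = mat_vec(instance, normal)         # wrong: same matrix as positions
normal_matrix = transpose(inverse(instance))     # inverse-transpose of the 3x3 part
correct_normal = mat_vec(normal_matrix, normal)

print(dot(world_tangent, naive_normal))    # 3.0: no longer perpendicular
print(dot(world_tangent, correct_normal))  # 0.0: still perpendicular
```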

Three.js version

- [x] r113 dev
- [x] r112

Issue analytics: created 4 years ago; 6 comments (4 by maintainers).

FWIW, the normal matrix isn’t the only way to transform normals: assuming the object matrix consists of rotation and (non-uniform) scale, there’s an alternative formulation.

I’m going to use column vectors below, so with a decomposed matrix the vertex transform is

$$v' = T\,R\,S\,v$$

The canonical formulation for the normal matrix suggests using

$$NM = \left((RS)^{T}\right)^{-1} = \left(S^{T}R^{T}\right)^{-1} = \left(R^{T}\right)^{-1}\left(S^{T}\right)^{-1},$$

where $R$ is a rotation matrix and $S$ is a diagonal matrix with the scale values for each axis. Since $\left(R^{T}\right)^{-1} = R$ and $S^{T} = S$, the above is equal to

$$NM = R\,S^{-1} = R\,S\,S^{-1}S^{-1} = (RS)\,S^{-2}.$$

Thus it’s sufficient to pre-transform the object-space normal using $S^{-2}$. Since $S$ is diagonal, if you know the scale values this just involves dividing the normal by $\text{scale}^2$ per axis. You can recover the scale values from the combined $RS$ matrix by measuring the lengths of its basis vectors.
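The identity can be checked numerically with a pure-Python sketch. It assumes an arbitrary rotation about the z axis and illustrative scale values, and all names are hypothetical.

The sketch recovers the scales from the column lengths of R*S, then compares the shortcut against the canonical inverse-transpose:

```python
import math

def mat_mul(a, b):
    """Product of two 3x3 matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def mat_vec(m, v):
    """3x3 matrix times a column vector."""
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]

def transpose(m):
    return [[m[j][i] for j in range(3)] for i in range(3)]

def inverse(m):
    """3x3 inverse via the adjugate; assumes m is invertible."""
    a, b, c = m[0]
    d, e, f = m[1]
    g, h, i = m[2]
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    adj = [[e * i - f * h, c * h - b * i, b * f - c * e],
           [f * g - d * i, a * i - c * g, c * d - a * f],
           [d * h - e * g, b * g - a * h, a * e - b * d]]
    return [[x / det for x in row] for row in adj]

# Illustrative R (rotation about z) and S (non-uniform scale).
theta = 0.7
cos_t, sin_t = math.cos(theta), math.sin(theta)
R = [[cos_t, -sin_t, 0.0], [sin_t, cos_t, 0.0], [0.0, 0.0, 1.0]]
scale = [2.0, 0.5, 3.0]
S = [[scale[0], 0.0, 0.0], [0.0, scale[1], 0.0], [0.0, 0.0, scale[2]]]
RS = mat_mul(R, S)

n = [0.3, -0.8, 0.5]  # object-space normal

# Canonical route: NM = inverse(transpose(R*S)).
via_normal_matrix = mat_vec(inverse(transpose(RS)), n)

# Shortcut: recover each scale as the length of the matching column of R*S,
# divide the normal by scale^2 per axis, then apply R*S itself.
recovered = [math.sqrt(sum(RS[r][j] ** 2 for r in range(3))) for j in range(3)]
pre_scaled = [n[j] / recovered[j] ** 2 for j in range(3)]
via_shortcut = mat_vec(RS, pre_scaled)

print(via_normal_matrix)
print(via_shortcut)  # matches the line above up to rounding
```

Only scale² enters the correction. A negative (mirroring) scale therefore gives the same result as its absolute value, which is why column lengths are enough.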

This shader code illustrates the construction:

The cost of this correction is three dot products (the squared lengths of the basis vectors give scale² directly) and a vector division, which seems reasonable. If both the instance matrix and the object matrix can carry non-uniform scale, then I think you will need to run this code twice.

Something like this could be used as a generic normal transform function (assuming a normalization step is run afterwards):
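A minimal Python sketch of such a function follows. It assumes the 3x3 matrix decomposes as R*S, and `transform_normal` and `normalize` are hypothetical names. It illustrates the three dot products and the vector division on the CPU rather than reproducing shader code:

```python
import math

def transform_normal(m, n):
    """Hypothetical helper: transform normal n by a 3x3 matrix m (list of rows)
    that is assumed to decompose as R*S. The result is not normalized."""
    # Columns of m are the transformed basis vectors; each has length |scale|.
    cols = [[m[r][j] for r in range(3)] for j in range(3)]
    # Three dot products give scale^2 directly (no square roots needed).
    scale_sq = [sum(x * x for x in col) for col in cols]
    # One vector division applies inverse(S)^2 to the object-space normal.
    pre = [n[j] / scale_sq[j] for j in range(3)]
    # Finally transform by m itself, as for positions.
    return [sum(m[i][k] * pre[k] for k in range(3)) for i in range(3)]

def normalize(v):
    """The normalization step the correction relies on afterwards."""
    length = math.sqrt(sum(x * x for x in v))
    return [x / length for x in v]

# Usage: instance with non-uniform scale (2, 1, 1) and a 45-degree normal.
scale_only = [[2.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
n_world = normalize(transform_normal(scale_only, [1.0, 1.0, 0.0]))
print(n_world)
```

For the (2, 1, 1) scale, the unnormalized result is (0.5, 1, 0). That is the same vector the inverse-transpose produces for this matrix.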

Edit: the above assumes that the transform matrix can be decomposed into R*S. That isn’t true of an arbitrary sequence of rotation and scale transforms, but is probably true for the instance matrix. So I’m assuming this can be combined with using normalMatrix to handle the general scene-graph transform. It might be useful not as a replacement for the existing normalMatrix, but purely as a way to correct the instanceMatrix transformation in the shader.
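The sketch below illustrates the caveat in the edit. It assumes a hypothetical transform built as scale (2, 1, 1) applied after a 45° rotation about z.

That product introduces shear, so its basis vectors are no longer orthogonal and it cannot be written as R*S. The shortcut then yields a normal that is not perpendicular to the transformed surface, while the inverse-transpose still is:

```python
import math

def mat_mul(a, b):
    """Product of two 3x3 matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def mat_vec(m, v):
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def transpose(m):
    return [[m[j][i] for j in range(3)] for i in range(3)]

def inverse(m):
    """3x3 inverse via the adjugate; assumes m is invertible."""
    a, b, c = m[0]
    d, e, f = m[1]
    g, h, i = m[2]
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    adj = [[e * i - f * h, c * h - b * i, b * f - c * e],
           [f * g - d * i, a * i - c * g, c * d - a * f],
           [d * h - e * g, b * g - a * h, a * e - b * d]]
    return [[x / det for x in row] for row in adj]

cos45 = sin45 = math.sqrt(0.5)
R = [[cos45, -sin45, 0.0], [sin45, cos45, 0.0], [0.0, 0.0, 1.0]]
S1 = [[2.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
M = mat_mul(S1, R)  # scale applied after rotation: introduces shear

cols = [[M[r][j] for r in range(3)] for j in range(3)]
print("col0 . col1 =", dot(cols[0], cols[1]))  # nonzero, so M is not R*S

n = [1.0, 0.0, 0.0]        # object-space normal
tangent = [0.0, 1.0, 0.0]  # perpendicular object-space tangent
world_tangent = mat_vec(M, tangent)

# The canonical normal matrix works for any invertible M.
correct = mat_vec(transpose(inverse(M)), n)

# R*S shortcut: divide by squared column lengths, then apply M.
scale_sq = [dot(col, col) for col in cols]
shortcut = mat_vec(M, [n[j] / scale_sq[j] for j in range(3)])

print(dot(world_tangent, correct))   # ~0: perpendicular
print(dot(world_tangent, shortcut))  # about -0.6: not perpendicular
```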

For the current InstancedMesh API, I think the fix proposed in this thread is reasonable.