FileNotFoundError when training SCNet on my own instance segmentation dataset: [Errno 2] No such file or directory: 'data/coco/stuffthingmaps/train2017/JPEGImages/DJI_0252.png'

First, training ran correctly with Mask R-CNN on the same dataset. In the SCNet config, however, the train pipeline's Collect step has an extra key, 'gt_semantic_seg', and the train dataset specifies seg_prefix='data/coco/stuffthingmaps/train2017/'. My instance segmentation dataset does not have stuffthingmaps.
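A minimal sketch of one possible workaround for the problem described above, written as overrides that could be merged into a config inheriting from the SCNet base file. It assumes that `SCNetRoIHead` accepts `semantic_head=None` and `semantic_roi_extractor=None` and then skips the semantic branch, and that `seg_prefix=None` is accepted by the dataset. I have not verified either assumption against every MMDetection 2.x release, so treat it as a starting point, not a confirmed fix.

```python
# Hypothetical overrides: train SCNet without semantic segmentation maps.
img_norm_cfg = dict(
    mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=True)

train_pipeline = [
    dict(type='LoadImageFromFile'),
    # with_seg=False: do not try to read a stuffthingmaps PNG for each image.
    dict(type='LoadAnnotations', with_bbox=True, with_mask=True,
         with_seg=False),
    dict(type='Resize', img_scale=(1333, 800), keep_ratio=True),
    dict(type='RandomFlip', flip_ratio=0.5),
    dict(type='Normalize', **img_norm_cfg),
    dict(type='Pad', size_divisor=32),
    # SegRescale is dropped because there is no gt_semantic_seg to rescale.
    dict(type='DefaultFormatBundle'),
    # 'gt_semantic_seg' is removed from the collected keys.
    dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels', 'gt_masks']),
]

# Assumption: setting these to None disables the semantic branch of the RoI head.
model = dict(
    roi_head=dict(
        semantic_roi_extractor=None,
        semantic_head=None))

# Assumption: seg_prefix=None overrides the inherited stuffthingmaps path.
data = dict(train=dict(pipeline=train_pipeline, seg_prefix=None))
```

The alternative would be to generate semantic maps for the custom dataset and point `seg_prefix` at them, which keeps the semantic head but requires extra annotation work.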

My config is as follows.

# This new config file inherits from an original config file; only the necessary modifications need to be highlighted

_base_ = '../configs/scnet/scnet_x101_64x4d_fpn_20e_coco.py'

_delete_=True

# We need to modify the number of classes in the heads to match the dataset's annotations

model = dict(

roi_head=dict(

# bbox_head=[dict(num_classes=1)], # For networks other than Mask R-CNN, bbox_head and mask_head are both lists; in Mask R-CNN they are dicts

bbox_head=[

dict(

type='SCNetBBoxHead',

num_shared_fcs=2,

in_channels=256,

fc_out_channels=1024,

roi_feat_size=7,

num_classes=1,

bbox_coder=dict(

type='DeltaXYWHBBoxCoder',

target_means=[0., 0., 0., 0.],

target_stds=[0.1, 0.1, 0.2, 0.2]),

reg_class_agnostic=True,

loss_cls=dict(

type='CrossEntropyLoss',

use_sigmoid=False,

loss_weight=1.0),

loss_bbox=dict(type='SmoothL1Loss', beta=1.0,

loss_weight=1.0)),

dict(

type='SCNetBBoxHead',

num_shared_fcs=2,

in_channels=256,

fc_out_channels=1024,

roi_feat_size=7,

num_classes=1,

bbox_coder=dict(

type='DeltaXYWHBBoxCoder',

target_means=[0., 0., 0., 0.],

target_stds=[0.05, 0.05, 0.1, 0.1]),

reg_class_agnostic=True,

loss_cls=dict(

type='CrossEntropyLoss',

use_sigmoid=False,

loss_weight=1.0),

loss_bbox=dict(type='SmoothL1Loss', beta=1.0,

loss_weight=1.0)),

dict(

type='SCNetBBoxHead',

num_shared_fcs=2,

in_channels=256,

fc_out_channels=1024,

roi_feat_size=7,

num_classes=1,

bbox_coder=dict(

type='DeltaXYWHBBoxCoder',

target_means=[0., 0., 0., 0.],

target_stds=[0.033, 0.033, 0.067, 0.067]),

reg_class_agnostic=True,

loss_cls=dict(

type='CrossEntropyLoss',

use_sigmoid=False,

loss_weight=1.0),

loss_bbox=dict(type='SmoothL1Loss', beta=1.0, loss_weight=1.0))

],

mask_head=dict(num_classes=1),

glbctx_head=dict(num_classes=1)))

runner = dict(type='EpochBasedRunner', max_epochs=20)

# Modify the dataset-related settings

dataset_type = 'CocoDataset'

classes = ('building',)

data = dict(

train=dict(

img_prefix='first_test_dataset/train',

classes=classes,

ann_file='first_test_dataset/train/annotations.json'),

val=dict(

img_prefix='first_test_dataset/val',

classes=classes,

ann_file='first_test_dataset/val/annotations.json'),

test=dict(

img_prefix='first_test_dataset/val',

classes=classes,

ann_file='first_test_dataset/val/annotations.json'))

# We can use a pretrained Mask R-CNN to obtain better performance

load_from = 'checkpoints/scnet/scnet_x101_64x4d_fpn_20e_coco-fb09dec9.pth'

The error output is as follows.

/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/distributed/launch.py:186: FutureWarning: The module torch.distributed.launch is deprecated

and will be removed in future. Use torchrun.

Note that --use_env is set by default in torchrun.

If your script expects `--local_rank` argument to be set, please

change it to read from `os.environ['LOCAL_RANK']` instead. See

https://pytorch.org/docs/stable/distributed.html#launch-utility for

further instructions

FutureWarning,

WARNING:torch.distributed.run:

*****************************************

Setting OMP_NUM_THREADS environment variable for each process to be 1 in default, to avoid your system being overloaded, please further tune the variable for optimal performance in your application as needed.

*****************************************

/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/utils/setup_env.py:43: UserWarning: Setting MKL_NUM_THREADS environment variable for each process to be 1 in default, to avoid your system being overloaded, please further tune the variable for optimal performance in your application as needed.

f'Setting MKL_NUM_THREADS environment variable for each process '

/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/utils/setup_env.py:43: UserWarning: Setting MKL_NUM_THREADS environment variable for each process to be 1 in default, to avoid your system being overloaded, please further tune the variable for optimal performance in your application as needed.

f'Setting MKL_NUM_THREADS environment variable for each process '

/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/utils/setup_env.py:43: UserWarning: Setting MKL_NUM_THREADS environment variable for each process to be 1 in default, to avoid your system being overloaded, please further tune the variable for optimal performance in your application as needed.

f'Setting MKL_NUM_THREADS environment variable for each process '

/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/utils/setup_env.py:43: UserWarning: Setting MKL_NUM_THREADS environment variable for each process to be 1 in default, to avoid your system being overloaded, please further tune the variable for optimal performance in your application as needed.

f'Setting MKL_NUM_THREADS environment variable for each process '

fatal: not a git repository (or any parent up to mount point /)

Stopping at filesystem boundary (GIT_DISCOVERY_ACROSS_FILESYSTEM not set).

fatal: not a git repository (or any parent up to mount point /)

Stopping at filesystem boundary (GIT_DISCOVERY_ACROSS_FILESYSTEM not set).

2022-04-09 20:10:50,153 - mmdet - INFO - Environment info:

------------------------------------------------------------

sys.platform: linux

Python: 3.7.13 (default, Mar 29 2022, 02:18:16) [GCC 7.5.0]

CUDA available: True

GPU 0,1,2,3: NVIDIA GeForce RTX 2080 Ti

CUDA_HOME: /usr/local/cuda-10.1

NVCC: Cuda compilation tools, release 10.1, V10.1.105

GCC: gcc (Ubuntu 8.4.0-1ubuntu1~18.04) 8.4.0

PyTorch: 1.11.0

PyTorch compiling details: PyTorch built with:

- GCC 7.3

- C++ Version: 201402

- Intel(R) oneAPI Math Kernel Library Version 2021.4-Product Build 20210904 for Intel(R) 64 architecture applications

- Intel(R) MKL-DNN v2.5.2 (Git Hash a9302535553c73243c632ad3c4c80beec3d19a1e)

- OpenMP 201511 (a.k.a. OpenMP 4.5)

- LAPACK is enabled (usually provided by MKL)

- NNPACK is enabled

- CPU capability usage: AVX2

- CUDA Runtime 11.3

- NVCC architecture flags: -gencode;arch=compute_37,code=sm_37;-gencode;arch=compute_50,code=sm_50;-gencode;arch=compute_60,code=sm_60;-gencode;arch=compute_61,code=sm_61;-gencode;arch=compute_70,code=sm_70;-gencode;arch=compute_75,code=sm_75;-gencode;arch=compute_80,code=sm_80;-gencode;arch=compute_86,code=sm_86;-gencode;arch=compute_37,code=compute_37

- CuDNN 8.2

- Magma 2.5.2

- Build settings: BLAS_INFO=mkl, BUILD_TYPE=Release, CUDA_VERSION=11.3, CUDNN_VERSION=8.2.0, CXX_COMPILER=/opt/rh/devtoolset-7/root/usr/bin/c++, CXX_FLAGS= -Wno-deprecated -fvisibility-inlines-hidden -DUSE_PTHREADPOOL -fopenmp -DNDEBUG -DUSE_KINETO -DUSE_FBGEMM -DUSE_QNNPACK -DUSE_PYTORCH_QNNPACK -DUSE_XNNPACK -DSYMBOLICATE_MOBILE_DEBUG_HANDLE -DEDGE_PROFILER_USE_KINETO -O2 -fPIC -Wno-narrowing -Wall -Wextra -Werror=return-type -Wno-missing-field-initializers -Wno-type-limits -Wno-array-bounds -Wno-unknown-pragmas -Wno-sign-compare -Wno-unused-parameter -Wno-unused-function -Wno-unused-result -Wno-unused-local-typedefs -Wno-strict-overflow -Wno-strict-aliasing -Wno-error=deprecated-declarations -Wno-stringop-overflow -Wno-psabi -Wno-error=pedantic -Wno-error=redundant-decls -Wno-error=old-style-cast -fdiagnostics-color=always -faligned-new -Wno-unused-but-set-variable -Wno-maybe-uninitialized -fno-math-errno -fno-trapping-math -Werror=format -Wno-stringop-overflow, LAPACK_INFO=mkl, PERF_WITH_AVX=1, PERF_WITH_AVX2=1, PERF_WITH_AVX512=1, TORCH_VERSION=1.11.0, USE_CUDA=ON, USE_CUDNN=ON, USE_EXCEPTION_PTR=1, USE_GFLAGS=OFF, USE_GLOG=OFF, USE_MKL=ON, USE_MKLDNN=OFF, USE_MPI=OFF, USE_NCCL=ON, USE_NNPACK=ON, USE_OPENMP=ON, USE_ROCM=OFF,

TorchVision: 0.12.0

OpenCV: 4.5.5

MMCV: 1.4.8

MMCV Compiler: GCC 7.3

MMCV CUDA Compiler: 11.3

MMDetection: 2.23.0+

------------------------------------------------------------

fatal: not a git repository (or any parent up to mount point /)

Stopping at filesystem boundary (GIT_DISCOVERY_ACROSS_FILESYSTEM not set).

fatal: not a git repository (or any parent up to mount point /)

Stopping at filesystem boundary (GIT_DISCOVERY_ACROSS_FILESYSTEM not set).

2022-04-09 20:10:50,544 - mmdet - INFO - Distributed training: True

2022-04-09 20:10:50,916 - mmdet - INFO - Config:

dataset_type = 'CocoDataset'

data_root = 'data/coco/'

img_norm_cfg = dict(

mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=True)

train_pipeline = [

dict(type='LoadImageFromFile'),

dict(

type='LoadAnnotations', with_bbox=True, with_mask=True, with_seg=True),

dict(type='Resize', img_scale=(1333, 800), keep_ratio=True),

dict(type='RandomFlip', flip_ratio=0.5),

dict(

type='Normalize',

mean=[123.675, 116.28, 103.53],

std=[58.395, 57.12, 57.375],

to_rgb=True),

dict(type='Pad', size_divisor=32),

dict(type='SegRescale', scale_factor=0.125),

dict(type='DefaultFormatBundle'),

dict(

type='Collect',

keys=['img', 'gt_bboxes', 'gt_labels', 'gt_masks', 'gt_semantic_seg'])

]

test_pipeline = [

dict(type='LoadImageFromFile'),

dict(

type='MultiScaleFlipAug',

img_scale=(1333, 800),

flip=False,

transforms=[

dict(type='Resize', keep_ratio=True),

dict(type='RandomFlip', flip_ratio=0.5),

dict(

type='Normalize',

mean=[123.675, 116.28, 103.53],

std=[58.395, 57.12, 57.375],

to_rgb=True),

dict(type='Pad', size_divisor=32),

dict(type='ImageToTensor', keys=['img']),

dict(type='Collect', keys=['img'])

])

]

data = dict(

samples_per_gpu=2,

workers_per_gpu=2,

train=dict(

type='CocoDataset',

ann_file='first_test_dataset/train/annotations.json',

img_prefix='first_test_dataset/train',

pipeline=[

dict(type='LoadImageFromFile'),

dict(

type='LoadAnnotations',

with_bbox=True,

with_mask=True,

with_seg=True),

dict(type='Resize', img_scale=(1333, 800), keep_ratio=True),

dict(type='RandomFlip', flip_ratio=0.5),

dict(

type='Normalize',

mean=[123.675, 116.28, 103.53],

std=[58.395, 57.12, 57.375],

to_rgb=True),

dict(type='Pad', size_divisor=32),

dict(type='SegRescale', scale_factor=0.125),

dict(type='DefaultFormatBundle'),

dict(

type='Collect',

keys=[

'img', 'gt_bboxes', 'gt_labels', 'gt_masks',

'gt_semantic_seg'

])

],

seg_prefix='data/coco/stuffthingmaps/train2017/',

classes=('building', )),

val=dict(

type='CocoDataset',

ann_file='first_test_dataset/val/annotations.json',

img_prefix='first_test_dataset/val',

pipeline=[

dict(type='LoadImageFromFile'),

dict(

type='MultiScaleFlipAug',

img_scale=(1333, 800),

flip=False,

transforms=[

dict(type='Resize', keep_ratio=True),

dict(type='RandomFlip', flip_ratio=0.5),

dict(

type='Normalize',

mean=[123.675, 116.28, 103.53],

std=[58.395, 57.12, 57.375],

to_rgb=True),

dict(type='Pad', size_divisor=32),

dict(type='ImageToTensor', keys=['img']),

dict(type='Collect', keys=['img'])

])

],

classes=('building', )),

test=dict(

type='CocoDataset',

ann_file='first_test_dataset/val/annotations.json',

img_prefix='first_test_dataset/val',

pipeline=[

dict(type='LoadImageFromFile'),

dict(

type='MultiScaleFlipAug',

img_scale=(1333, 800),

flip=False,

transforms=[

dict(type='Resize', keep_ratio=True),

dict(type='RandomFlip', flip_ratio=0.5),

dict(

type='Normalize',

mean=[123.675, 116.28, 103.53],

std=[58.395, 57.12, 57.375],

to_rgb=True),

dict(type='Pad', size_divisor=32),

dict(type='ImageToTensor', keys=['img']),

dict(type='Collect', keys=['img'])

])

],

classes=('building', )))

evaluation = dict(metric=['bbox', 'segm'])

optimizer = dict(type='SGD', lr=0.02, momentum=0.9, weight_decay=0.0001)

optimizer_config = dict(grad_clip=None)

lr_config = dict(

policy='step',

warmup='linear',

warmup_iters=500,

warmup_ratio=0.001,

step=[16, 19])

runner = dict(type='EpochBasedRunner', max_epochs=20)

checkpoint_config = dict(interval=1)

log_config = dict(interval=50, hooks=[dict(type='TextLoggerHook')])

custom_hooks = [dict(type='NumClassCheckHook')]

dist_params = dict(backend='nccl')

log_level = 'INFO'

load_from = 'checkpoints/scnet/scnet_x101_64x4d_fpn_20e_coco-fb09dec9.pth'

resume_from = None

workflow = [('train', 1)]

opencv_num_threads = 0

mp_start_method = 'fork'

model = dict(

type='SCNet',

backbone=dict(

type='ResNeXt',

depth=101,

num_stages=4,

out_indices=(0, 1, 2, 3),

frozen_stages=1,

norm_cfg=dict(type='BN', requires_grad=True),

norm_eval=True,

style='pytorch',

init_cfg=dict(

type='Pretrained', checkpoint='open-mmlab://resnext101_64x4d'),

groups=64,

base_width=4),

neck=dict(

type='FPN',

in_channels=[256, 512, 1024, 2048],

out_channels=256,

num_outs=5),

rpn_head=dict(

type='RPNHead',

in_channels=256,

feat_channels=256,

anchor_generator=dict(

type='AnchorGenerator',

scales=[8],

ratios=[0.5, 1.0, 2.0],

strides=[4, 8, 16, 32, 64]),

bbox_coder=dict(

type='DeltaXYWHBBoxCoder',

target_means=[0.0, 0.0, 0.0, 0.0],

target_stds=[1.0, 1.0, 1.0, 1.0]),

loss_cls=dict(

type='CrossEntropyLoss', use_sigmoid=True, loss_weight=1.0),

loss_bbox=dict(

type='SmoothL1Loss', beta=0.1111111111111111, loss_weight=1.0)),

roi_head=dict(

type='SCNetRoIHead',

num_stages=3,

stage_loss_weights=[1, 0.5, 0.25],

bbox_roi_extractor=dict(

type='SingleRoIExtractor',

roi_layer=dict(type='RoIAlign', output_size=7, sampling_ratio=0),

out_channels=256,

featmap_strides=[4, 8, 16, 32]),

bbox_head=[

dict(

type='SCNetBBoxHead',

num_shared_fcs=2,

in_channels=256,

fc_out_channels=1024,

roi_feat_size=7,

num_classes=1,

bbox_coder=dict(

type='DeltaXYWHBBoxCoder',

target_means=[0.0, 0.0, 0.0, 0.0],

target_stds=[0.1, 0.1, 0.2, 0.2]),

reg_class_agnostic=True,

loss_cls=dict(

type='CrossEntropyLoss',

use_sigmoid=False,

loss_weight=1.0),

loss_bbox=dict(type='SmoothL1Loss', beta=1.0,

loss_weight=1.0)),

dict(

type='SCNetBBoxHead',

num_shared_fcs=2,

in_channels=256,

fc_out_channels=1024,

roi_feat_size=7,

num_classes=1,

bbox_coder=dict(

type='DeltaXYWHBBoxCoder',

target_means=[0.0, 0.0, 0.0, 0.0],

target_stds=[0.05, 0.05, 0.1, 0.1]),

reg_class_agnostic=True,

loss_cls=dict(

type='CrossEntropyLoss',

use_sigmoid=False,

loss_weight=1.0),

loss_bbox=dict(type='SmoothL1Loss', beta=1.0,

loss_weight=1.0)),

dict(

type='SCNetBBoxHead',

num_shared_fcs=2,

in_channels=256,

fc_out_channels=1024,

roi_feat_size=7,

num_classes=1,

bbox_coder=dict(

type='DeltaXYWHBBoxCoder',

target_means=[0.0, 0.0, 0.0, 0.0],

target_stds=[0.033, 0.033, 0.067, 0.067]),

reg_class_agnostic=True,

loss_cls=dict(

type='CrossEntropyLoss',

use_sigmoid=False,

loss_weight=1.0),

loss_bbox=dict(type='SmoothL1Loss', beta=1.0, loss_weight=1.0))

],

mask_roi_extractor=dict(

type='SingleRoIExtractor',

roi_layer=dict(type='RoIAlign', output_size=14, sampling_ratio=0),

out_channels=256,

featmap_strides=[4, 8, 16, 32]),

mask_head=dict(

type='SCNetMaskHead',

num_convs=12,

in_channels=256,

conv_out_channels=256,

num_classes=1,

conv_to_res=True,

loss_mask=dict(

type='CrossEntropyLoss', use_mask=True, loss_weight=1.0)),

semantic_roi_extractor=dict(

type='SingleRoIExtractor',

roi_layer=dict(type='RoIAlign', output_size=14, sampling_ratio=0),

out_channels=256,

featmap_strides=[8]),

semantic_head=dict(

type='SCNetSemanticHead',

num_ins=5,

fusion_level=1,

num_convs=4,

in_channels=256,

conv_out_channels=256,

num_classes=183,

loss_seg=dict(

type='CrossEntropyLoss', ignore_index=255, loss_weight=0.2),

conv_to_res=True),

glbctx_head=dict(

type='GlobalContextHead',

num_convs=4,

in_channels=256,

conv_out_channels=256,

num_classes=1,

loss_weight=3.0,

conv_to_res=True),

feat_relay_head=dict(

type='FeatureRelayHead',

in_channels=1024,

out_conv_channels=256,

roi_feat_size=7,

scale_factor=2)),

train_cfg=dict(

rpn=dict(

assigner=dict(

type='MaxIoUAssigner',

pos_iou_thr=0.7,

neg_iou_thr=0.3,

min_pos_iou=0.3,

ignore_iof_thr=-1),

sampler=dict(

type='RandomSampler',

num=256,

pos_fraction=0.5,

neg_pos_ub=-1,

add_gt_as_proposals=False),

allowed_border=0,

pos_weight=-1,

debug=False),

rpn_proposal=dict(

nms_pre=2000,

max_per_img=2000,

nms=dict(type='nms', iou_threshold=0.7),

min_bbox_size=0),

rcnn=[

dict(

assigner=dict(

type='MaxIoUAssigner',

pos_iou_thr=0.5,

neg_iou_thr=0.5,

min_pos_iou=0.5,

ignore_iof_thr=-1),

sampler=dict(

type='RandomSampler',

num=512,

pos_fraction=0.25,

neg_pos_ub=-1,

add_gt_as_proposals=True),

mask_size=28,

pos_weight=-1,

debug=False),

dict(

assigner=dict(

type='MaxIoUAssigner',

pos_iou_thr=0.6,

neg_iou_thr=0.6,

min_pos_iou=0.6,

ignore_iof_thr=-1),

sampler=dict(

type='RandomSampler',

num=512,

pos_fraction=0.25,

neg_pos_ub=-1,

add_gt_as_proposals=True),

mask_size=28,

pos_weight=-1,

debug=False),

dict(

assigner=dict(

type='MaxIoUAssigner',

pos_iou_thr=0.7,

neg_iou_thr=0.7,

min_pos_iou=0.7,

ignore_iof_thr=-1),

sampler=dict(

type='RandomSampler',

num=512,

pos_fraction=0.25,

neg_pos_ub=-1,

add_gt_as_proposals=True),

mask_size=28,

pos_weight=-1,

debug=False)

]),

test_cfg=dict(

rpn=dict(

nms_pre=1000,

max_per_img=1000,

nms=dict(type='nms', iou_threshold=0.7),

min_bbox_size=0),

rcnn=dict(

score_thr=0.001,

nms=dict(type='nms', iou_threshold=0.5),

max_per_img=100,

mask_thr_binary=0.5)))

_delete_ = True

classes = ('building', )

work_dir = './work_dirs/scnet_x101_64x4d_fpn_20e_coco_building'

auto_resume = False

gpu_ids = range(0, 4)

2022-04-09 20:10:50,916 - mmdet - INFO - Set random seed to 0, deterministic: False

/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/models/losses/cross_entropy_loss.py:240: UserWarning: Default ``avg_non_ignore`` is False, if you would like to ignore the certain label and average loss over non-ignore labels, which is the same with PyTorch official cross_entropy, set ``avg_non_ignore=True``.

'Default ``avg_non_ignore`` is False, if you would like to '

/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/models/losses/cross_entropy_loss.py:240: UserWarning: Default ``avg_non_ignore`` is False, if you would like to ignore the certain label and average loss over non-ignore labels, which is the same with PyTorch official cross_entropy, set ``avg_non_ignore=True``.

'Default ``avg_non_ignore`` is False, if you would like to '

/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/models/losses/cross_entropy_loss.py:240: UserWarning: Default ``avg_non_ignore`` is False, if you would like to ignore the certain label and average loss over non-ignore labels, which is the same with PyTorch official cross_entropy, set ``avg_non_ignore=True``.

'Default ``avg_non_ignore`` is False, if you would like to '

/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/models/losses/cross_entropy_loss.py:240: UserWarning: Default ``avg_non_ignore`` is False, if you would like to ignore the certain label and average loss over non-ignore labels, which is the same with PyTorch official cross_entropy, set ``avg_non_ignore=True``.

'Default ``avg_non_ignore`` is False, if you would like to '

2022-04-09 20:10:52,330 - mmdet - INFO - initialize ResNeXt with init_cfg {'type': 'Pretrained', 'checkpoint': 'open-mmlab://resnext101_64x4d'}

2022-04-09 20:10:52,330 - mmcv - INFO - load model from: open-mmlab://resnext101_64x4d

2022-04-09 20:10:52,330 - mmcv - INFO - load checkpoint from openmmlab path: open-mmlab://resnext101_64x4d

2022-04-09 20:10:57,298 - mmdet - INFO - initialize FPN with init_cfg {'type': 'Xavier', 'layer': 'Conv2d', 'distribution': 'uniform'}

2022-04-09 20:10:57,320 - mmdet - INFO - initialize RPNHead with init_cfg {'type': 'Normal', 'layer': 'Conv2d', 'std': 0.01}

2022-04-09 20:10:57,324 - mmdet - INFO - initialize SCNetBBoxHead with init_cfg [{'type': 'Normal', 'std': 0.01, 'override': {'name': 'fc_cls'}}, {'type': 'Normal', 'std': 0.001, 'override': {'name': 'fc_reg'}}, {'type': 'Xavier', 'distribution': 'uniform', 'override': [{'name': 'shared_fcs'}, {'name': 'cls_fcs'}, {'name': 'reg_fcs'}]}]

2022-04-09 20:10:57,417 - mmdet - INFO - initialize SCNetBBoxHead with init_cfg [{'type': 'Normal', 'std': 0.01, 'override': {'name': 'fc_cls'}}, {'type': 'Normal', 'std': 0.001, 'override': {'name': 'fc_reg'}}, {'type': 'Xavier', 'distribution': 'uniform', 'override': [{'name': 'shared_fcs'}, {'name': 'cls_fcs'}, {'name': 'reg_fcs'}]}]

2022-04-09 20:10:57,508 - mmdet - INFO - initialize SCNetBBoxHead with init_cfg [{'type': 'Normal', 'std': 0.01, 'override': {'name': 'fc_cls'}}, {'type': 'Normal', 'std': 0.001, 'override': {'name': 'fc_reg'}}, {'type': 'Xavier', 'distribution': 'uniform', 'override': [{'name': 'shared_fcs'}, {'name': 'cls_fcs'}, {'name': 'reg_fcs'}]}]

2022-04-09 20:10:57,626 - mmdet - INFO - initialize SCNetSemanticHead with init_cfg {'type': 'Kaiming', 'override': {'name': 'conv_logits'}}

2022-04-09 20:10:57,633 - mmdet - INFO - initialize FeatureRelayHead with init_cfg {'type': 'Kaiming', 'layer': 'Linear'}

2022-04-09 20:10:57,707 - mmdet - INFO - initialize GlobalContextHead with init_cfg {'type': 'Normal', 'std': 0.01, 'override': {'name': 'fc'}}

loading annotations into memory...

Done (t=0.02s)

creating index...

index created!

fatal: not a git repository (or any parent up to mount point /)

Stopping at filesystem boundary (GIT_DISCOVERY_ACROSS_FILESYSTEM not set).

loading annotations into memory...

Done (t=0.01s)

creating index...

index created!

loading annotations into memory...

loading annotations into memory...

Done (t=0.01s)

creating index...

index created!

Done (t=0.01s)

creating index...

index created!

fatal: not a git repository (or any parent up to mount point /)

Stopping at filesystem boundary (GIT_DISCOVERY_ACROSS_FILESYSTEM not set).

fatal: not a git repository (or any parent up to mount point /)

Stopping at filesystem boundary (GIT_DISCOVERY_ACROSS_FILESYSTEM not set).

fatal: not a git repository (or any parent up to mount point /)

Stopping at filesystem boundary (GIT_DISCOVERY_ACROSS_FILESYSTEM not set).

loading annotations into memory...

Done (t=0.00s)

creating index...

index created!

loading annotations into memory...

loading annotations into memory...

Done (t=0.00s)

creating index...

index created!

loading annotations into memory...

Done (t=0.00s)

creating index...

index created!

Done (t=0.00s)

creating index...

index created!

2022-04-09 20:10:58,448 - mmdet - INFO - load checkpoint from local path: checkpoints/scnet/scnet_x101_64x4d_fpn_20e_coco-fb09dec9.pth

2022-04-09 20:11:00,847 - mmdet - WARNING - The model and loaded state dict do not match exactly

size mismatch for roi_head.bbox_head.0.fc_cls.weight: copying a param with shape torch.Size([81, 1024]) from checkpoint, the shape in current model is torch.Size([2, 1024]).

size mismatch for roi_head.bbox_head.0.fc_cls.bias: copying a param with shape torch.Size([81]) from checkpoint, the shape in current model is torch.Size([2]).

size mismatch for roi_head.bbox_head.1.fc_cls.weight: copying a param with shape torch.Size([81, 1024]) from checkpoint, the shape in current model is torch.Size([2, 1024]).

size mismatch for roi_head.bbox_head.1.fc_cls.bias: copying a param with shape torch.Size([81]) from checkpoint, the shape in current model is torch.Size([2]).

size mismatch for roi_head.bbox_head.2.fc_cls.weight: copying a param with shape torch.Size([81, 1024]) from checkpoint, the shape in current model is torch.Size([2, 1024]).

size mismatch for roi_head.bbox_head.2.fc_cls.bias: copying a param with shape torch.Size([81]) from checkpoint, the shape in current model is torch.Size([2]).

size mismatch for roi_head.mask_head.conv_logits.weight: copying a param with shape torch.Size([80, 256, 1, 1]) from checkpoint, the shape in current model is torch.Size([1, 256, 1, 1]).

size mismatch for roi_head.mask_head.conv_logits.bias: copying a param with shape torch.Size([80]) from checkpoint, the shape in current model is torch.Size([1]).

size mismatch for roi_head.glbctx_head.fc.weight: copying a param with shape torch.Size([80, 256]) from checkpoint, the shape in current model is torch.Size([1, 256]).

size mismatch for roi_head.glbctx_head.fc.bias: copying a param with shape torch.Size([80]) from checkpoint, the shape in current model is torch.Size([1]).

2022-04-09 20:11:00,864 - mmdet - INFO - Start running, host: qinhaobo@zkti, work_dir: /home/qinhaobo/proxyrecon/mmdetection-master/demo/work_dirs/scnet_x101_64x4d_fpn_20e_coco_building

2022-04-09 20:11:00,864 - mmdet - INFO - Hooks will be executed in the following order:

before_run:

(VERY_HIGH ) StepLrUpdaterHook

(NORMAL ) CheckpointHook

(LOW ) DistEvalHook

(VERY_LOW ) TextLoggerHook

--------------------

before_train_epoch:

(VERY_HIGH ) StepLrUpdaterHook

(NORMAL ) NumClassCheckHook

(NORMAL ) DistSamplerSeedHook

(LOW ) IterTimerHook

(LOW ) DistEvalHook

(VERY_LOW ) TextLoggerHook

--------------------

before_train_iter:

(VERY_HIGH ) StepLrUpdaterHook

(LOW ) IterTimerHook

(LOW ) DistEvalHook

--------------------

after_train_iter:

(ABOVE_NORMAL) OptimizerHook

(NORMAL ) CheckpointHook

(LOW ) IterTimerHook

(LOW ) DistEvalHook

(VERY_LOW ) TextLoggerHook

--------------------

after_train_epoch:

(NORMAL ) CheckpointHook

(LOW ) DistEvalHook

(VERY_LOW ) TextLoggerHook

--------------------

before_val_epoch:

(NORMAL ) NumClassCheckHook

(NORMAL ) DistSamplerSeedHook

(LOW ) IterTimerHook

(VERY_LOW ) TextLoggerHook

--------------------

before_val_iter:

(LOW ) IterTimerHook

--------------------

after_val_iter:

(LOW ) IterTimerHook

--------------------

after_val_epoch:

(VERY_LOW ) TextLoggerHook

--------------------

after_run:

(VERY_LOW ) TextLoggerHook

--------------------

2022-04-09 20:11:00,864 - mmdet - INFO - workflow: [('train', 1)], max: 20 epochs

2022-04-09 20:11:00,864 - mmdet - INFO - Checkpoints will be saved to /home/qinhaobo/proxyrecon/mmdetection-master/demo/work_dirs/scnet_x101_64x4d_fpn_20e_coco_building by HardDiskBackend.

Traceback (most recent call last):

File "../tools/train.py", line 220, in <module>

main()

File "../tools/train.py", line 216, in main

meta=meta)

File "/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/apis/train.py", line 208, in train_detector

runner.run(data_loaders, cfg.workflow)

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/mmcv/runner/epoch_based_runner.py", line 127, in run

epoch_runner(data_loaders[i], **kwargs)

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/mmcv/runner/epoch_based_runner.py", line 47, in train

for i, data_batch in enumerate(self.data_loader):

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/utils/data/dataloader.py", line 530, in __next__

data = self._next_data()

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/utils/data/dataloader.py", line 1224, in _next_data

return self._process_data(data)

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/utils/data/dataloader.py", line 1250, in _process_data

data.reraise()

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/_utils.py", line 457, in reraise

raise exception

FileNotFoundError: Caught FileNotFoundError in DataLoader worker process 0.

Original Traceback (most recent call last):

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/utils/data/_utils/worker.py", line 287, in _worker_loop

data = fetcher.fetch(index)

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/utils/data/_utils/fetch.py", line 49, in fetch

data = [self.dataset[idx] for idx in possibly_batched_index]

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/utils/data/_utils/fetch.py", line 49, in <listcomp>

data = [self.dataset[idx] for idx in possibly_batched_index]

File "/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/datasets/custom.py", line 218, in __getitem__

data = self.prepare_train_img(idx)

File "/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/datasets/custom.py", line 241, in prepare_train_img

return self.pipeline(results)

File "/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/datasets/pipelines/compose.py", line 41, in __call__

data = t(data)

File "/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/datasets/pipelines/loading.py", line 401, in __call__

results = self._load_semantic_seg(results)

File "/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/datasets/pipelines/loading.py", line 375, in _load_semantic_seg

img_bytes = self.file_client.get(filename)

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/mmcv/fileio/file_client.py", line 993, in get

return self.client.get(filepath)

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/mmcv/fileio/file_client.py", line 518, in get

with open(filepath, 'rb') as f:

FileNotFoundError: [Errno 2] No such file or directory: 'data/coco/stuffthingmaps/train2017/JPEGImages/DJI_0252.png'
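The path in the error above can be reproduced with a short sketch. It assumes, as I understand the MMDetection 2.x COCO loader, that the segmentation map name is derived from the image file name by replacing 'jpg' with 'png' and is then joined onto `seg_prefix`. The helper name `expected_seg_map_path` and the image file name 'JPEGImages/DJI_0252.jpg' are hypothetical, inferred from the error message only.

```python
import os.path as osp


def expected_seg_map_path(seg_prefix: str, img_filename: str) -> str:
    """Return the semantic map path the loader would look for.

    Assumption: mirrors the 'jpg' -> 'png' substitution followed by a join
    with seg_prefix; this is a sketch, not the library's own function.
    """
    seg_map = img_filename.replace('jpg', 'png')
    return osp.join(seg_prefix, seg_map)


# seg_prefix is the value inherited from the SCNet base config.
path = expected_seg_map_path(
    'data/coco/stuffthingmaps/train2017/', 'JPEGImages/DJI_0252.jpg')
print(path)
print('exists:', osp.exists(path))
```

Running this for every image in the annotation file would show whether any semantic maps exist under the inherited `seg_prefix` before training starts.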

Traceback (most recent call last):

File "../tools/train.py", line 220, in <module>

main()

File "../tools/train.py", line 216, in main

Traceback (most recent call last):

meta=meta)

File "../tools/train.py", line 220, in <module>

File "/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/apis/train.py", line 208, in train_detector

runner.run(data_loaders, cfg.workflow)

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/mmcv/runner/epoch_based_runner.py", line 127, in run

main()

File "../tools/train.py", line 216, in main

meta=meta)

File "/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/apis/train.py", line 208, in train_detector

epoch_runner(data_loaders[i], **kwargs)

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/mmcv/runner/epoch_based_runner.py", line 47, in train

runner.run(data_loaders, cfg.workflow)

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/mmcv/runner/epoch_based_runner.py", line 127, in run

for i, data_batch in enumerate(self.data_loader):

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/utils/data/dataloader.py", line 530, in __next__

epoch_runner(data_loaders[i], **kwargs)

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/mmcv/runner/epoch_based_runner.py", line 47, in train

for i, data_batch in enumerate(self.data_loader):

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/utils/data/dataloader.py", line 530, in __next__

data = self._next_data()

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/utils/data/dataloader.py", line 1224, in _next_data

data = self._next_data()

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/utils/data/dataloader.py", line 1224, in _next_data

return self._process_data(data)

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/utils/data/dataloader.py", line 1250, in _process_data

data.reraise()

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/_utils.py", line 457, in reraise

return self._process_data(data)

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/utils/data/dataloader.py", line 1250, in _process_data

raise exception

FileNotFoundError: Caught FileNotFoundError in DataLoader worker process 0.

Original Traceback (most recent call last):

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/utils/data/_utils/worker.py", line 287, in _worker_loop

data = fetcher.fetch(index)

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/utils/data/_utils/fetch.py", line 49, in fetch

data = [self.dataset[idx] for idx in possibly_batched_index]

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/utils/data/_utils/fetch.py", line 49, in <listcomp>

data = [self.dataset[idx] for idx in possibly_batched_index]

File "/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/datasets/custom.py", line 218, in __getitem__

data = self.prepare_train_img(idx)

File "/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/datasets/custom.py", line 241, in prepare_train_img

return self.pipeline(results)

File "/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/datasets/pipelines/compose.py", line 41, in __call__

data = t(data)

File "/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/datasets/pipelines/loading.py", line 401, in __call__

results = self._load_semantic_seg(results)

File "/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/datasets/pipelines/loading.py", line 375, in _load_semantic_seg

img_bytes = self.file_client.get(filename)

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/mmcv/fileio/file_client.py", line 993, in get

return self.client.get(filepath)

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/mmcv/fileio/file_client.py", line 518, in get

with open(filepath, 'rb') as f:

FileNotFoundError: [Errno 2] No such file or directory: 'data/coco/stuffthingmaps/train2017/JPEGImages/DJI_0728.png'

data.reraise()

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/_utils.py", line 457, in reraise

raise exception

FileNotFoundError: Caught FileNotFoundError in DataLoader worker process 0.

Original Traceback (most recent call last):

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/utils/data/_utils/worker.py", line 287, in _worker_loop

data = fetcher.fetch(index)

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/utils/data/_utils/fetch.py", line 49, in fetch

data = [self.dataset[idx] for idx in possibly_batched_index]

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/utils/data/_utils/fetch.py", line 49, in <listcomp>

data = [self.dataset[idx] for idx in possibly_batched_index]

File "/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/datasets/custom.py", line 218, in __getitem__

data = self.prepare_train_img(idx)

File "/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/datasets/custom.py", line 241, in prepare_train_img

return self.pipeline(results)

File "/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/datasets/pipelines/compose.py", line 41, in __call__

data = t(data)

File "/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/datasets/pipelines/loading.py", line 401, in __call__

results = self._load_semantic_seg(results)

File "/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/datasets/pipelines/loading.py", line 375, in _load_semantic_seg

img_bytes = self.file_client.get(filename)

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/mmcv/fileio/file_client.py", line 993, in get

return self.client.get(filepath)

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/mmcv/fileio/file_client.py", line 518, in get

with open(filepath, 'rb') as f:

FileNotFoundError: [Errno 2] No such file or directory: 'data/coco/stuffthingmaps/train2017/JPEGImages/DJI_0584.png'

Traceback (most recent call last):

File "../tools/train.py", line 220, in <module>

main()

File "../tools/train.py", line 216, in main

meta=meta)

File "/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/apis/train.py", line 208, in train_detector

runner.run(data_loaders, cfg.workflow)

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/mmcv/runner/epoch_based_runner.py", line 127, in run

epoch_runner(data_loaders[i], **kwargs)

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/mmcv/runner/epoch_based_runner.py", line 47, in train

for i, data_batch in enumerate(self.data_loader):

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/utils/data/dataloader.py", line 530, in __next__

data = self._next_data()

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/utils/data/dataloader.py", line 1224, in _next_data

return self._process_data(data)

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/utils/data/dataloader.py", line 1250, in _process_data

data.reraise()

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/_utils.py", line 457, in reraise

raise exception

FileNotFoundError: Caught FileNotFoundError in DataLoader worker process 0.

Original Traceback (most recent call last):

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/utils/data/_utils/worker.py", line 287, in _worker_loop

data = fetcher.fetch(index)

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/utils/data/_utils/fetch.py", line 49, in fetch

data = [self.dataset[idx] for idx in possibly_batched_index]

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/utils/data/_utils/fetch.py", line 49, in <listcomp>

data = [self.dataset[idx] for idx in possibly_batched_index]

File "/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/datasets/custom.py", line 218, in __getitem__

data = self.prepare_train_img(idx)

File "/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/datasets/custom.py", line 241, in prepare_train_img

return self.pipeline(results)

File "/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/datasets/pipelines/compose.py", line 41, in __call__

data = t(data)

File "/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/datasets/pipelines/loading.py", line 401, in __call__

results = self._load_semantic_seg(results)

File "/home/qinhaobo/proxyrecon/mmdetection-master/mmdet/datasets/pipelines/loading.py", line 375, in _load_semantic_seg

img_bytes = self.file_client.get(filename)

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/mmcv/fileio/file_client.py", line 993, in get

return self.client.get(filepath)

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/mmcv/fileio/file_client.py", line 518, in get

with open(filepath, 'rb') as f:

FileNotFoundError: [Errno 2] No such file or directory: 'data/coco/stuffthingmaps/train2017/JPEGImages/13x00029.png'

ERROR:torch.distributed.elastic.multiprocessing.api:failed (exitcode: 1) local_rank: 0 (pid: 661922) of binary: /home/qinhaobo/anaconda3/envs/open-mmlab/bin/python

Traceback (most recent call last):

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/runpy.py", line 193, in _run_module_as_main

"__main__", mod_spec)

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/runpy.py", line 85, in _run_code

exec(code, run_globals)

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/distributed/launch.py", line 193, in <module>

main()

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/distributed/launch.py", line 189, in main

launch(args)

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/distributed/launch.py", line 174, in launch

run(args)

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/distributed/run.py", line 718, in run

)(*cmd_args)

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/distributed/launcher/api.py", line 131, in __call__

return launch_agent(self._config, self._entrypoint, list(args))

File "/home/qinhaobo/anaconda3/envs/open-mmlab/lib/python3.7/site-packages/torch/distributed/launcher/api.py", line 247, in launch_agent

failures=result.failures,

torch.distributed.elastic.multiprocessing.errors.ChildFailedError:

============================================================

../tools/train.py FAILED

------------------------------------------------------------

Failures:

[1]:

time : 2022-04-09_20:11:09

host : zkti

rank : 1 (local_rank: 1)

exitcode : 1 (pid: 661923)

error_file: <N/A>

traceback : To enable traceback see: https://pytorch.org/docs/stable/elastic/errors.html

[2]:

time : 2022-04-09_20:11:09

host : zkti

rank : 2 (local_rank: 2)

exitcode : 1 (pid: 661924)

error_file: <N/A>

traceback : To enable traceback see: https://pytorch.org/docs/stable/elastic/errors.html

[3]:

time : 2022-04-09_20:11:09

host : zkti

rank : 3 (local_rank: 3)

exitcode : 1 (pid: 661925)

error_file: <N/A>

traceback : To enable traceback see: https://pytorch.org/docs/stable/elastic/errors.html

------------------------------------------------------------

Root Cause (first observed failure):

[0]:

time : 2022-04-09_20:11:09

host : zkti

rank : 0 (local_rank: 0)

exitcode : 1 (pid: 661922)

error_file: <N/A>

traceback : To enable traceback see: https://pytorch.org/docs/stable/elastic/errors.html

============================================================

Issue Analytics

- State:

- Created a year ago

- Comments:9


https://github.com/open-mmlab/mmdetection/issues/7683#issuecomment-1094065710

You should rewrite the config as shown in the link above; it provides the correct config, which can be used directly.

Thanks for the response @wjw6692353.

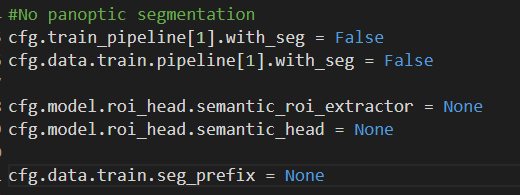

Yes, I set both 'with_seg' = False. I also set semantic_roi_extractor=None and semantic_head=None. However, I don't know how to delete the 'seg_prefix' keyword, so at the moment I set 'seg_prefix' = None. My code to do this is shown:

However: 'gt_semantic_seg' is still in keys… and I get the following error:

Thanks again! Much appreciated
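
For anyone landing here with the same symptom, below is a minimal sketch of the semantic-related part of an override config. It is not the config from the linked comment. It assumes MMDetection 2.x, where the SCNet base config loads semantic maps in its train pipeline, and it assumes the img_norm_cfg values match the base config (please verify against your checkout). One likely reason 'gt_semantic_seg' stays in the keys is that redefining train_pipeline alone does not change data.train.pipeline, so the new list has to be passed into data.train again.

```python
# Sketch of an override config for SCNet on an instance-only dataset
# (no stuffthingmaps). Only the semantic-related overrides are shown;
# num_classes, dataset paths, and classes still have to be set as in
# the original config.
_base_ = '../configs/scnet/scnet_x101_64x4d_fpn_20e_coco.py'

model = dict(
    roi_head=dict(
        # Disable the semantic branch so the model no longer expects
        # gt_semantic_seg during training.
        semantic_roi_extractor=None,
        semantic_head=None))

# Assumption: the usual ImageNet normalization of the COCO configs.
# Check that it matches the values in your base config.
img_norm_cfg = dict(
    mean=[123.675, 116.28, 103.53],
    std=[58.395, 57.12, 57.375],
    to_rgb=True)

# Train pipeline without semantic loading: with_seg=False, no SegRescale
# step, and no 'gt_semantic_seg' in the Collect keys.
train_pipeline = [
    dict(type='LoadImageFromFile'),
    dict(type='LoadAnnotations', with_bbox=True, with_mask=True,
         with_seg=False),
    dict(type='Resize', img_scale=(1333, 800), keep_ratio=True),
    dict(type='RandomFlip', flip_ratio=0.5),
    dict(type='Normalize', **img_norm_cfg),
    dict(type='Pad', size_divisor=32),
    dict(type='DefaultFormatBundle'),
    dict(type='Collect',
         keys=['img', 'gt_bboxes', 'gt_labels', 'gt_masks']),
]

data = dict(
    train=dict(
        # Overrides the stuffthingmaps prefix inherited from the base.
        seg_prefix=None,
        # The pipeline must be passed in again; defining train_pipeline
        # above does not update data.train.pipeline by itself.
        pipeline=train_pipeline))
```

To check what the merged config actually contains, you can print it with tools/misc/print_config.py from the MMDetection repository and look at data.train.pipeline.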