Transfer Learning

Code version (Git Hash) and PyTorch version

PyTorch: 1.0.1

Dataset used

Self-generated from YouTube, similar to Kinetics-skeleton

Expected behavior

Being able to load pretrained weights from your kinetics-st_gcn.pt, freeze them, and only train new layers

Actual behavior

Able to load pretrained weights, but not able to freeze them.

Steps to reproduce the behavior

Add the flag --weights with the path to the aforementioned pretrained PyTorch model

Other comments

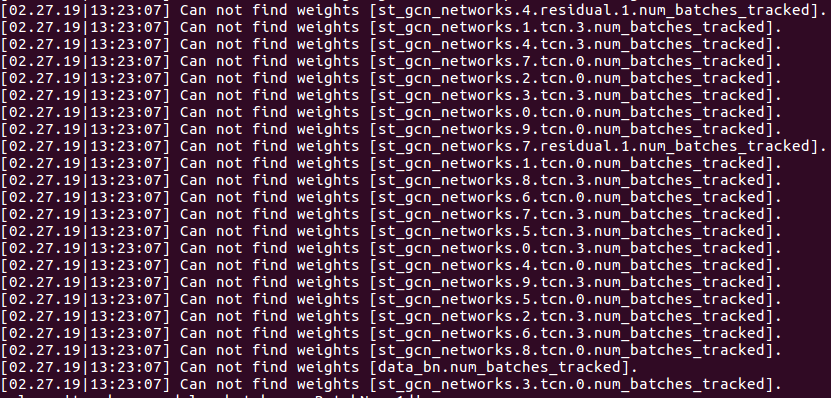

I am currently able to load the weights from your pretrained model. During this operation I am presented with this output:

I interpret these as the layers in the new model that are not present in the old model and therefore do not get their weights from it.

What I am wondering is how I would go about freezing all layers except for those in the output above, i.e. how can I freeze all pretrained layers and only train the new layers?

I was thinking I could do it using torch’s .apply(), but I am unable to find a correspondence between the names in the output above and the names used in your weights_init(m), i.e. a way to map st_gcn_networks.4.residual.1.num_batches_tracked to Conv1d, Conv2d, etc.
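For reference, the dotted names in such output are state_dict keys: everything before the last dot is the path of the owning submodule, and the last component is a parameter or buffer name (num_batches_tracked is a buffer registered by BatchNorm layers). The following is only a sketch of that mapping on a small stand-in model; the model and the helper module_for_key are hypothetical and are not part of the st-gcn code.

```python
import torch.nn as nn

# Hypothetical stand-in with nested blocks; this is NOT the ST-GCN model.
model = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=1),
    nn.Sequential(nn.Conv2d(8, 8, kernel_size=1), nn.BatchNorm2d(8)),
)


def module_for_key(model, key):
    """Return the submodule that owns a state_dict key.

    The key is split at its last dot: the left part is the module path,
    the right part is the parameter or buffer name.
    """
    module_path, _, _attr = key.rpartition(".")
    # named_modules() yields (path, module) pairs; the root has path "".
    return dict(model.named_modules())[module_path]


# Print the class name of the layer behind every state_dict key.
for key in model.state_dict():
    print(key, "->", type(module_for_key(model, key)).__name__)
```

With this lookup, a key ending in num_batches_tracked resolves to a BatchNorm layer, which is the same kind of class name that a weights_init(m)-style function would inspect.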

Crossing my fingers that you are able to assist me 😃

Issue Analytics

- Created 5 years ago

- Comments:13

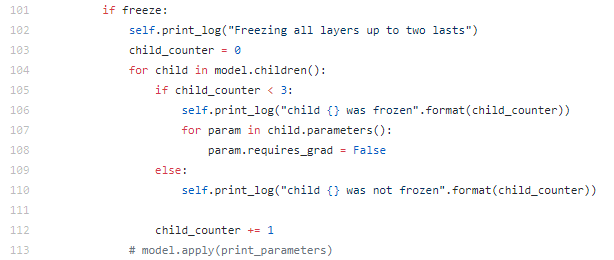

@645704621, yes. You can check out my forked repo: https://github.com/Sindreedelange/st-gcn/blob/master/torchlight/torchlight/io.py, lines 101-114. Disclaimer: it needs refactoring, as it is only a proof of concept.

@Sindreedelange

When you load the network, you have the model creation code, where you can find the parameters. You can also traverse the network layers recursively with module.children() and find the layer you want.
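A minimal sketch of that traversal, assuming a toy model whose pretrained part is called backbone and whose new layer is called head. Net, backbone, head, and freeze are hypothetical names chosen for illustration and do not come from st-gcn.

```python
import torch
import torch.nn as nn


class Net(nn.Module):
    """Hypothetical model: a pretrained backbone plus a newly added head."""

    def __init__(self, num_classes):
        super().__init__()
        self.backbone = nn.Sequential(nn.Conv2d(3, 8, kernel_size=1), nn.BatchNorm2d(8))
        self.head = nn.Linear(8, num_classes)

    def forward(self, x):
        features = self.backbone(x).mean(dim=(2, 3))  # global average pooling
        return self.head(features)


def freeze(module):
    """Recursively disable gradients for a module and all of its children."""
    for param in module.parameters(recurse=False):
        param.requires_grad = False
    for child in module.children():
        freeze(child)


model = Net(num_classes=5)
freeze(model.backbone)  # only the new head stays trainable

# Hand only the still-trainable parameters to the optimizer.
trainable = [p for p in model.parameters() if p.requires_grad]
optimizer = torch.optim.SGD(trainable, lr=0.01)

trainable_names = [n for n, p in model.named_parameters() if p.requires_grad]
print(trainable_names)
```

Note that setting requires_grad to False does not stop BatchNorm layers from updating their running statistics in training mode; calling .eval() on the frozen submodules is one way to keep those fixed as well.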