to_parquet() method fails even though column names are all strings

Problem description

While attempting to serialize a pandas data frame with the to_parquet() method, I got an error message stating that the column names were not strings, even though they seem to be.

Code Example

I have a pandas data frame with the following columns:

In [1]: region_measurements.columns
Out[1]: Index([u'measurement_id', u'aoi_id',
u'created_ts', u'hash',
u'algorithm_instance_id', u'state',
u'updated_ts', u'aoi_version_id',
u'ingestion_ts', u'imaging_ts',
u'scene_id', u'score_map',
u'confidence_score', u'fill_pct',
u'local_ts', u'is_upper_bound',
u'aoi_cloud_cover', u'valid_pixel_frac'],
dtype='object')

Seemingly, all of the column names are strings. The cells of the data frame contain mixed information, with a JSON blob in some of them. (I’ve pasted a row of the frame below.)

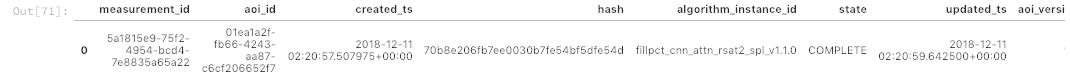

| Column | Value |
|---|---|
| measurement_id | 5a1815e9-75f2-4954-bcd4-7e8835a65a22 |
| aoi_id | 01ea1a2f-fb66-4243-aa87-c6cf206652f7 |
| created_ts | 2018-12-11 02:20:57.507975+00:00 |
| hash | 70b8e206fb7ee0030b7fe54bf5dfe54d |
| algorithm_instance_id | fillpct_cnn_attn_rsat2_spl_v1.1.0 |
| state | COMPLETE |
| updated_ts | 2018-12-11 02:20:59.642500+00:00 |
| aoi_version_id | 12512 |
| ingestion_ts | 2018-11-03 21:37:10.210798+00:00 |
| imaging_ts | 2018-11-03 00:36:18.575107+00:00 |
| scene_id | RS2_OK103191_PK897635_DK833095_SLA27_20181103_... |
| score_map | None |
| confidence_score | 1.0 |
| fill_pct | 17.161395 |
| local_ts | 2018-11-02 19:36:18 |
| is_upper_bound | False |
| aoi_cloud_cover | 0.000048 |
| valid_pixel_frac | NaN |

When I attempt to serialize this data frame, I get the following error:

In [2]: region_measurements.to_parquet('tmp.par')
---------------------------------------------------------------------------
ValueError Traceback (most recent call last)
<ipython-input-69-082c78243d16> in <module>()
----> 1 region_measurements.to_parquet('tmp.par')

/usr/local/lib/python2.7/site-packages/pandas/core/frame.pyc in to_parquet(self, fname, engine, compression, **kwargs)
1647 from pandas.io.parquet import to_parquet
1648 to_parquet(self, fname, engine,
-> 1649 compression=compression, **kwargs)
1650
1651 @Substitution(header='Write out the column names. If a list of strings '

/usr/local/lib/python2.7/site-packages/pandas/io/parquet.pyc in to_parquet(df, path, engine, compression, **kwargs)
225 """
226 impl = get_engine(engine)
--> 227 return impl.write(df, path, compression=compression, **kwargs)
228
229

/usr/local/lib/python2.7/site-packages/pandas/io/parquet.pyc in write(self, df, path, compression, coerce_timestamps, **kwargs)
105 def write(self, df, path, compression='snappy',
106 coerce_timestamps='ms', **kwargs):
--> 107 self.validate_dataframe(df)
108 if self._pyarrow_lt_070:
109 self._validate_write_lt_070(df)

/usr/local/lib/python2.7/site-packages/pandas/io/parquet.pyc in validate_dataframe(df)
53 # must have value column names (strings only)
54 if df.columns.inferred_type not in {'string', 'unicode'}:
---> 55 raise ValueError("parquet must have string column names")
56
57 # index level names must be strings

ValueError: parquet must have string column names
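The traceback shows that the error comes from a single check on df.columns.inferred_type, so a repr that looks all-string is not enough: one non-string label is sufficient to fail it. The sketch below mirrors that check and lists any offending labels. The frame and its integer label are hypothetical, and the code is written for Python 3 (on the reporter's Python 2.7, isinstance(label, str) would also flag unicode labels).

```python
import pandas as pd


def has_string_column_names(df):
    # Same test as line 54 of pandas/io/parquet.py in the traceback (pandas 0.22).
    return df.columns.inferred_type in {"string", "unicode"}


def non_string_labels(df):
    # Every column label that is not a str instance, paired with its type name.
    return [(label, type(label).__name__) for label in df.columns
            if not isinstance(label, str)]


# Hypothetical frame: two string labels plus one integer label.
df = pd.DataFrame([[1, 2, 3]], columns=["measurement_id", "aoi_id", 0])
print(df.columns.inferred_type)
print(has_string_column_names(df))
print(non_string_labels(df))
```

Running non_string_labels on the real frame would show whether any label is not actually a str.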

As far as I can tell, the column names are all strings. I also tried calling reset_index() and saving the resulting data frame, but I got the same error.
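That reset_index() did not help is consistent with what it does: it moves the index into a new leading column but leaves the existing column labels, and their types, untouched. A minimal sketch with a hypothetical frame:

```python
import pandas as pd

# Hypothetical frame with one non-string column label and a named index.
df = pd.DataFrame({"aoi_id": [10, 11], 0: [1.0, 2.0]},
                  index=pd.Index(["a", "b"], name="measurement_id"))

reset = df.reset_index()
# The index becomes a new "measurement_id" column; the integer label 0 survives.
print(list(reset.columns))
print(reset.columns.inferred_type)
```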

Expected Output

It's possible that the frame cannot be serialized to Parquet for some other reason, but in that case the error message seems misleading. Or perhaps there is a trick that I’m missing.

I’d be grateful for any help in resolving this!

Output of pd.show_versions()

pandas: 0.22.0
pytest: 3.3.1
pip: 18.1
setuptools: 39.2.0
Cython: 0.25.2
numpy: 1.14.2
scipy: 0.19.0
pyarrow: 0.9.0
xarray: None
IPython: 5.7.0
sphinx: None
patsy: 0.4.1
dateutil: 2.7.5
pytz: 2017.3
blosc: None
bottleneck: None
tables: 3.4.4
numexpr: 2.6.8
feather: None
matplotlib: 2.0.0
openpyxl: None
xlrd: 0.9.4
xlwt: None
xlsxwriter: 0.7.6
lxml: None
bs4: 4.6.3
html5lib: 0.9999999
sqlalchemy: 1.0.5
pymysql: None
psycopg2: 2.7 (dt dec pq3 ext lo64)
jinja2: 2.10
s3fs: 0.2.0
fastparquet: 0.1.5
pandas_gbq: None
pandas_datareader: None

</details>

Issue Analytics


- Created 5 years ago

- Reactions:2

- Comments:6 (2 by maintainers)

Top Related StackOverflow Question


You can try forcing the column names to be strings; this works for me: df.columns = df.columns.astype(str) (see https://github.com/dask/fastparquet/issues/41).
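A minimal sketch of that workaround, assuming a hypothetical frame with mixed string and integer labels. The write step needs pyarrow or fastparquet installed, so it is guarded and only reports when no engine is available.

```python
import os
import tempfile

import pandas as pd

# Hypothetical frame with a mix of string and integer column labels.
df = pd.DataFrame([[1, 2]], columns=["aoi_id", 0])

# The workaround from the comment: cast every column label to str.
df.columns = df.columns.astype(str)
print(list(df.columns))
print(df.columns.inferred_type)

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "tmp.parquet")
    try:
        df.to_parquet(path)  # requires pyarrow or fastparquet
        print("wrote", os.path.getsize(path), "bytes")
    except ImportError:
        print("no Parquet engine installed")
```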

What would be the downside of embedding df.columns = df.columns.astype(str) into the .to_parquet method?
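One possible concern with doing this silently (an observation, not something stated in the thread) is that astype(str) can map distinct labels to the same string: 1 and "1" both become "1". The sketch below wraps the workaround in hypothetical helpers, with_str_columns and to_parquet_with_str_columns (neither is part of pandas), that relabel a shallow copy and refuse to proceed if the cast creates duplicates.

```python
import pandas as pd


def with_str_columns(df):
    """Hypothetical helper: return a frame whose column labels are all str.

    A shallow copy is relabelled, so the caller's frame keeps its labels.
    """
    out = df.copy(deep=False)
    out.columns = out.columns.astype(str)
    # astype(str) can merge distinct labels (1 and "1" both become "1"),
    # which would leave duplicate column names.
    if out.columns.has_duplicates:
        raise ValueError("casting column names to str produced duplicates")
    return out


def to_parquet_with_str_columns(df, path, **kwargs):
    # Hypothetical wrapper: cast labels first, then defer to DataFrame.to_parquet.
    with_str_columns(df).to_parquet(path, **kwargs)


df = pd.DataFrame([[1, 2]], columns=["aoi_id", 0])
print(list(with_str_columns(df).columns))
print(list(df.columns))  # the original labels are unchanged
```

Working on a copy avoids the surprise of a write call mutating the caller's frame, which an in-place df.columns assignment inside to_parquet would do.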