Extreme Memory Usage with LoadListDataAsStreamAsync

Category

- Bug

Describe the bug

When we call list.LoadListDataAsStreamAsync on a list with a very large number of items, our Kubernetes (K8S) pod runs out of memory.

Steps to reproduce

- Generate a lot of files.

```powershell
for ($i = 1; $i -lt 2500; $i++) {
    $fname = "File_" + $i + ".tst"
    fsutil file createnew $fname (Get-Random -Minimum 25 -Maximum 10000)
}
```

- Upload these files to a document library.

- Run this code:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Linq.Expressions;
using System.Threading.Tasks;
using PnP.Core.Model.SharePoint;
using PnP.Core.QueryModel;
using PnP.Core.Services;

public class ListProcessor
{
    // Injected through dependency injection
    private readonly IPnPContextFactory _pnpContextFactory;

    public ListProcessor(IPnPContextFactory pnpContextFactory)
    {
        _pnpContextFactory = pnpContextFactory;
    }

    private static readonly PnPContextOptions ContextOptions = new PnPContextOptions()
    {
        AdditionalSitePropertiesOnCreate = new List<Expression<Func<ISite, object>>>()
        {
            s => s.Url
        },
        AdditionalWebPropertiesOnCreate = new List<Expression<Func<IWeb, object>>>()
        {
            w => w.ServerRelativeUrl,
            w => w.Title,
            w => w.AppInstanceId
        }
    };

    // Page size used in the CAML RowLimit
    private readonly int _queryRowLimit = 1000;

    public async Task ProcessWebWithCamlQuery()
    {
        var myUri = new Uri("https://mysharepoint.sharepoint.com/sites/site-test");

        using (var context = await _pnpContextFactory.CreateAsync(myUri, ContextOptions))
        {
            var lists = GetListsToProcess(context.Web);
            var viewXml = GetCamlQuery();

            foreach (var list in lists)
            {
                var paging = true;
                string nextPage = null;

                // Keep requesting pages until SharePoint no longer returns a NextHref
                while (paging)
                {
                    var output = await list.LoadListDataAsStreamAsync(new RenderListDataOptions()
                    {
                        ViewXml = viewXml,
                        RenderOptions = RenderListDataOptionsFlags.ListData,
                        Paging = nextPage
                    }).ConfigureAwait(false);

                    if (output.ContainsKey("NextHref") && output["NextHref"] != null)
                    {
                        // NextHref is a query string; drop its leading '?' before passing it back as Paging
                        nextPage = output["NextHref"].ToString()?[1..];
                    }
                    else
                    {
                        paging = false;
                    }
                }

                // Every page loaded above was added to list.Items, so all items are in memory at this point
                var listItems = list.Items.AsRequested();
                Console.WriteLine(GC.GetTotalMemory(false));
                Console.WriteLine("Process the listItems here");
            }
        }

        Console.WriteLine("Done processing list");
        Console.WriteLine(GC.GetTotalMemory(false));
    }

    private string GetCamlQuery()
    {
        return $@"<View Scope='RecursiveAll'>
    <RowLimit Paged='TRUE'>{_queryRowLimit}</RowLimit>
    <Query>
        <Where>
            <Or>
                <BeginsWith>
                    <FieldRef Name='ContentTypeId' />
                    <Value Type='ContentTypeId'>0x0101</Value>
                </BeginsWith>
                <BeginsWith>
                    <FieldRef Name='ContentTypeId' />
                    <Value Type='ContentTypeId'>0x0120</Value>
                </BeginsWith>
            </Or>
        </Where>
    </Query>
</View>";
    }

    // Visible, crawlable document libraries, excluding app packages and form templates
    private static readonly Expression<Func<IList, bool>> ListPredicate = l =>
        l.NoCrawl == false &&
        l.Hidden == false &&
        l.IsApplicationList == false &&
        l.TemplateType == ListTemplateType.DocumentLibrary &&
        l.ListItemEntityTypeFullName != "SP.Data.AppPackagesListItem" &&
        l.ListItemEntityTypeFullName != "SP.Data.FormServerTemplatesItem";

    private static readonly Expression<Func<IList, object>>[] ListProperties =
    {
        l => l.Title,
        l => l.RootFolder.QueryProperties(f => f.ServerRelativeUrl),
        l => l.ContentTypes.QueryProperties(
            ct => ct.Name,
            ct => ct.Fields.QueryProperties(
                f => f.InternalName,
                f => f.Title,
                f => f.Hidden,
                f => f.FieldTypeKind))
    };

    private static IEnumerable<IList> GetListsToProcess(IWeb web)
    {
        // The Id (whatever is the Key) is populated automatically; no need to explicitly add it here
        return web.Lists.Where(ListPredicate).QueryProperties(ListProperties);
    }
}
```

Expected behavior

I realize that we’re loading a lot of data into memory. However, I haven’t had a chance to dig into ListItem to find out what holds on to so much data that memory keeps climbing sharply with every page of 1,000 items.

Ideally, memory usage would not skyrocket like this. Even if we copy each page out and clear the collection (`myListOfListItems.AddRange(list.Items.AsRequested()); list.Items.Clear();`), `myListOfListItems` still holds on to a lot of memory.
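To illustrate the difference between accumulating every page and handling each page as it arrives, here is a minimal, self-contained C# sketch. It does not call PnP Core. `FetchPageAsync` is a hypothetical stand-in that simulates a paged endpoint, returning a paging token until all items have been served. `ItemPage`, `StreamPagesAsync`, and `ProcessAllAsync` are illustrative names only. The consumer only references the current page, so earlier pages become eligible for garbage collection.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

// Hypothetical stand-in for one page of results: the items plus the token for the next page (null = no more pages).
public sealed record ItemPage(IReadOnlyList<string> Items, string NextPageToken);

public static class PagedProcessing
{
    // Hypothetical paged source (NOT a PnP Core API): serves `pageSize` items per call
    // until `totalItems` have been returned, then signals the end with a null token.
    public static Task<ItemPage> FetchPageAsync(string pagingToken, int totalItems, int pageSize)
    {
        int start = pagingToken == null ? 0 : int.Parse(pagingToken);
        int count = Math.Min(pageSize, totalItems - start);
        List<string> items = Enumerable.Range(start + 1, count)
            .Select(i => $"File_{i}.tst")
            .ToList();
        int next = start + count;
        string nextToken = next < totalItems ? next.ToString() : null;
        return Task.FromResult(new ItemPage(items, nextToken));
    }

    // Yields one page at a time; the caller never holds more than the current page.
    public static async IAsyncEnumerable<IReadOnlyList<string>> StreamPagesAsync(int totalItems, int pageSize)
    {
        string token = null;
        do
        {
            ItemPage page = await FetchPageAsync(token, totalItems, pageSize);
            yield return page.Items;
            token = page.NextPageToken;
        }
        while (token != null);
    }

    // Processes each page as it arrives instead of collecting every item first.
    public static async Task<(int Pages, int Items)> ProcessAllAsync(int totalItems, int pageSize)
    {
        int pages = 0;
        int items = 0;
        await foreach (IReadOnlyList<string> page in StreamPagesAsync(totalItems, pageSize))
        {
            pages++;
            items += page.Count; // real per-item work would go here
        }
        return (pages, items);
    }
}
```

With the 2,499 files the PowerShell script above creates and a page size of 1,000, `ProcessAllAsync(2499, 1000)` visits 3 pages. In the real code, the equivalent would be processing the items right after each `LoadListDataAsStreamAsync` call rather than after the paging loop. This sketch does not show whether clearing `list.Items` releases PnP Core's internal memory; that question is what this issue is about.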

Environment details (development & target environment)

- SDK version: 1.3.0

- OS: Windows 10

- SDK used in: ASP.Net Web app

- Framework: .NET 5

- Tooling: Visual Studio 2019


Additional context

Some customers most. definitely. exceed the list view threshold (LVT, 5,000 items). To try to make the rest of our code “efficient,” we were loading all the list items first and then processing them in parallel later.
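A lower-memory variant of this plan is to run the parallel work on each page as soon as it is loaded, so only one page is held at a time. Below is a minimal C# sketch of bounded parallelism over a single page using `SemaphoreSlim`. `BoundedPageProcessor`, `ProcessPageAsync`, and the `work` delegate are hypothetical names, and the sketch does not touch PnP Core. It sticks to APIs available on .NET 5, since `Parallel.ForEachAsync` only arrived in .NET 6.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

public static class BoundedPageProcessor
{
    // Runs `work` for every item of a single page with at most `maxParallel`
    // calls in flight, and returns the highest concurrency that was observed.
    public static async Task<int> ProcessPageAsync<T>(IReadOnlyList<T> page, int maxParallel, Func<T, Task> work)
    {
        using var gate = new SemaphoreSlim(maxParallel, maxParallel);
        object sync = new object();
        int inFlight = 0;
        int peak = 0;

        IEnumerable<Task> tasks = page.Select(async item =>
        {
            await gate.WaitAsync();
            try
            {
                // Track concurrency only after a slot has been acquired
                lock (sync)
                {
                    inFlight++;
                    if (inFlight > peak) peak = inFlight;
                }
                await work(item);
            }
            finally
            {
                // Decrement before releasing so the count never exceeds maxParallel
                lock (sync) { inFlight--; }
                gate.Release();
            }
        });

        await Task.WhenAll(tasks.ToList());
        return peak;
    }
}
```

Calling `ProcessPageAsync` once per page inside the paging loop keeps the degree of parallelism fixed (for example 8) regardless of how many pages the list has. Memory then scales with one page instead of the whole list.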

We’ve tried creating a new PnPContext for every 1,000 items, but you and I both know that’s expensive to keep doing: we don’t want to make the same Site/Web/List API calls over and over.

I was hoping that bringing this up might make a lightbulb go off and you’d immediately know why this happens and how to fix it.

Thanks, Bert, for everything.

Issue Analytics


- Created 2 years ago

- Comments: 8 (8 by maintainers)


@DaleyKD: I managed to lower the memory needs further, but more importantly I also worked on performance. In my tests, reading 2,500 items is now roughly twice as fast as before (the test ran offline, so network responsiveness did not affect the results).

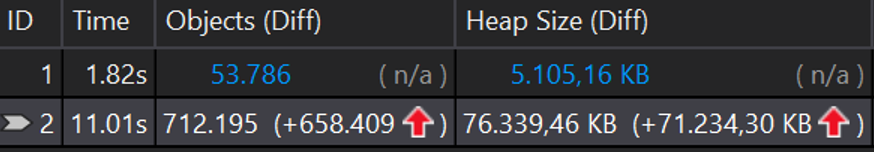

Below is the heap size for reading 2,500 items into memory, plus overhead from the dotnet test framework; a console app test should consume less memory. Since I’ve only changed PnP.Core, the 10 MB reduction in memory use comes from the optimizations I made:

Given the many performance updates over the last few days, and since the nightly builds are now strong-named release builds just like the official ones, I strongly recommend trying the next nightly (1.3.61 or higher) to see if that helps.

@DaleyKD: memory consumption is indeed quite high. I implemented a test case and got the result below. I’ll dig in further tomorrow: