issues when upserting several thousand records

Bug description

I have around 3500 records to insert into a MySQL database. I want to check that they aren't there already and insert only the new ones. For this I am using Prisma's upsert method:

    await Promise.all(
      currencies.map(async ({ name, symbol, id: nomicsId }) => {
        try {
          // await prisma.$connect()
          await prisma.currency.upsert({
            where: { nomicsId },
            update: { name, symbol },
            create: { nomicsId, name, symbol },
          })
          // await prisma.$disconnect()
        } catch (err) {
          console.error('err adding', err)
        }
      }),
    )

    await prisma.$disconnect()

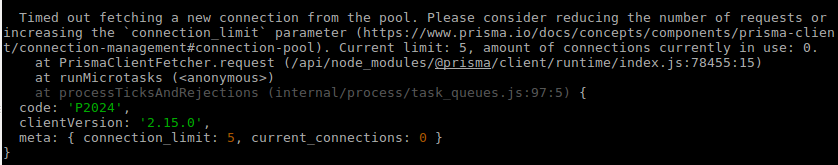

I thought by blocking via async/await would ensure I wasn’t straining the database unduly. However I get a stream of these errors:

How to reproduce

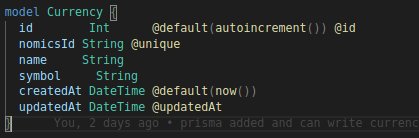

In the above snippet, my model is quite simple:

Expected behavior

I want all records that aren't already in the database to be inserted. I expected Prisma to execute one query at a time, so that I wouldn't have connection issues, as only one connection would be in use.
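For context on that expectation: `Promise.all(currencies.map(async ...))` starts every callback immediately, and the `await` inside each callback only suspends that one callback, so all of the upserts are requested at roughly the same time. Below is a minimal sketch of one way to limit how many run at once. `runInBatches` is a hypothetical helper written for illustration, not part of Prisma, and the batch size in the usage comment is an arbitrary guess rather than a recommended value.

```typescript
// Hypothetical helper (not a Prisma API): runs `worker` over `items` in
// chunks, so that at most `batchSize` calls are in flight at any time.
async function runInBatches<T, R>(
  items: readonly T[],
  batchSize: number,
  worker: (item: T) => Promise<R>,
): Promise<R[]> {
  if (!Number.isInteger(batchSize) || batchSize < 1) {
    throw new RangeError('batchSize must be a positive integer')
  }
  const results: R[] = []
  for (let start = 0; start < items.length; start += batchSize) {
    const chunk = items.slice(start, start + batchSize)
    // Wait for the whole chunk to settle before starting the next one.
    const chunkResults = await Promise.all(chunk.map((item) => worker(item)))
    results.push(...chunkResults)
  }
  return results
}

// Possible usage with the upsert from the report (assumes the same
// `prisma` client and `currencies` array as in the snippet above):
//
// await runInBatches(currencies, 10, ({ name, symbol, id: nomicsId }) =>
//   prisma.currency.upsert({
//     where: { nomicsId },
//     update: { name, symbol },
//     create: { nomicsId, name, symbol },
//   }),
// )
```

With a batch size of 1 this degenerates to strictly sequential execution, which is the behavior the report expected. Larger batches trade database load for throughput, and the right value probably depends on the connection pool size.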

Prisma information

As above.

Environment & setup

- OS: Linux (Ubuntu), but running Prisma within a Docker container (mhart/alpine-node)

- Database: MySQL (via dockerhub mysql:5.7)

- Node.js version: 14.4

- Prisma version: 2.15

Issue Analytics

- State:

- Created 3 years ago

- Comments:6 (3 by maintainers)

@samthomson I am having the exact same issue. Really disappointed that Prisma hasn’t fixed this.

FYI: when processing hundreds or thousands of records I have been using p-map as well, but also p-retry and delay:

https://github.com/sindresorhus/p-retry

and

https://github.com/sindresorhus/delay

so that each Prisma create (or typically upsert) is wrapped in a retry with a slight delay on an error – like too many connections or timeouts.

Note that this pattern behaved better after https://github.com/prisma/prisma/issues/4906 was fixed in 2.15.
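A rough sketch of the retry-with-delay pattern described in this comment is shown below. To keep it self-contained it does not use the p-retry or delay packages; `sleep` and `withRetry` are hand-rolled, hypothetical stand-ins, and the default retry count and delay are assumptions rather than values from the thread.

```typescript
// Stand-in for the `delay` package: resolves after `ms` milliseconds.
const sleep = (ms: number): Promise<void> =>
  new Promise((resolve) => setTimeout(resolve, ms))

// Hypothetical helper in the spirit of p-retry: calls `task`, and on
// failure waits `delayMs` before trying again, up to `retries` extra times.
async function withRetry<T>(
  task: () => Promise<T>,
  retries = 3,
  delayMs = 200,
): Promise<T> {
  for (let attempt = 0; ; attempt++) {
    try {
      return await task()
    } catch (err) {
      // Give up and rethrow once the retry budget is spent.
      if (attempt >= retries) throw err
      await sleep(delayMs)
    }
  }
}

// Possible usage (assumes a `prisma` client and a `currency` model as in
// the original report):
//
// await withRetry(() =>
//   prisma.currency.upsert({
//     where: { nomicsId },
//     update: { name, symbol },
//     create: { nomicsId, name, symbol },
//   }),
// )
```

This sketch retries on any error. In practice it may be worth retrying only on transient failures such as connection-limit or timeout errors, and rethrowing everything else immediately.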