DynUnet get_feature_maps() issue when using distributed training

Describe the bug

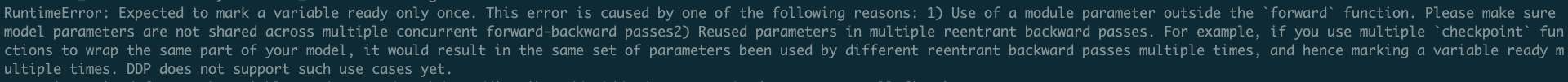

If we wrap the network in DistributedDataParallel, calling the `get_feature_maps` function raises the following error:

It is common for a network's forward function to return multiple variables, not only in this case but also in others, such as computing a triplet loss in metric-learning tasks. I therefore think we should reconsider what the forward function of DynUnet returns.

Let me think about it and submit a PR, then we can discuss it. @Nic-Ma @wyli @rijobro
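As a rough illustration of that idea, here is a minimal sketch of a network whose forward function returns both the final output and an intermediate feature map. `TinyNet` and its layer sizes are hypothetical and are not DynUnet's actual code. Since a wrapper such as DistributedDataParallel invokes the wrapped module's forward, whatever forward returns reaches the caller without needing a separate accessor method on the wrapper.

```python
import torch
from torch import nn


class TinyNet(nn.Module):
    """Hypothetical two-layer network used only for illustration (not DynUnet)."""

    def __init__(self) -> None:
        super().__init__()
        self.encoder = nn.Linear(8, 4)
        self.head = nn.Linear(4, 2)

    def forward(self, x: torch.Tensor):
        feat = torch.relu(self.encoder(x))  # intermediate feature map
        out = self.head(feat)               # final prediction
        # Return both values from forward instead of exposing the
        # feature map through an extra method on the module.
        return out, feat


net = TinyNet()
out, feat = net(torch.randn(3, 8))
print(out.shape, feat.shape)
```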

Issue Analytics

- Created 3 years ago

- Comments:10 (10 by maintainers)


Hi @rijobro, thanks for the advice, but returning this kind of dict doesn't fix the TorchScript issue that @Nic-Ma mentioned. I suggest we still return a list and add the corresponding docstrings. To help users work with this network, I will also add or update tutorials. Let me submit a PR first for you to review.

Hi @rijobro ,

I think most of the previous problems are due to the `list` output during validation or inference. So I suggest returning a list of data during training, and returning only the output instead of `[out]` during validation or inference.

Thanks.
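The suggestion above could look roughly like the following sketch. It is an assumption-laden toy, not the code from the eventual PR: `ToyDeepSupervisionNet`, its layers, and the single auxiliary head are all hypothetical. The only PyTorch behaviour it relies on is the standard `self.training` flag that `model.train()` and `model.eval()` toggle.

```python
import torch
from torch import nn


class ToyDeepSupervisionNet(nn.Module):
    """Hypothetical stand-in for a network with one auxiliary output head."""

    def __init__(self) -> None:
        super().__init__()
        self.body = nn.Linear(8, 4)
        self.head = nn.Linear(4, 2)      # main output
        self.aux_head = nn.Linear(4, 2)  # extra output used only for the training loss

    def forward(self, x: torch.Tensor):
        feat = torch.relu(self.body(x))
        out = self.head(feat)
        if self.training:
            # Training: return a list so the loss can use every output.
            return [out, self.aux_head(feat)]
        # Validation / inference: return the tensor itself, not [out].
        return out


model = ToyDeepSupervisionNet()
x = torch.randn(3, 8)

model.train()
train_out = model(x)      # list of two tensors

model.eval()
with torch.no_grad():
    eval_out = model(x)   # single tensor
```

With this shape, validation and inference code can treat the result as a plain tensor, while the training loop iterates over the list to combine the losses.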