Relationship between LayerIntegratedGradients & configure_interpretable_embedding_layer

Hi, I am working with the tutorial here. I took the example

`question, text = "What is important to us?", "It is important to us to include, empower and support humans of all kinds."`

and tried to see the effect of the word "What" on the start-position prediction using

`attributions_start_sum[1]`

which is

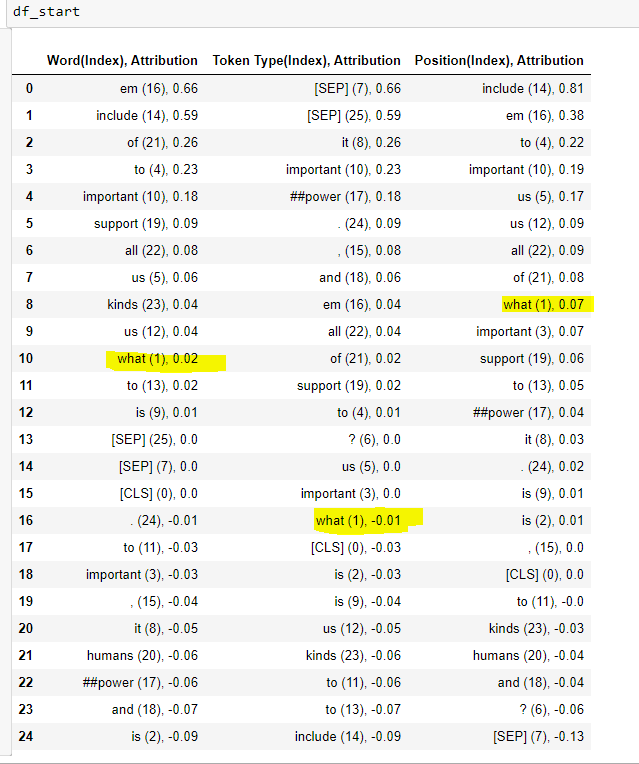

`tensor(0.3861, device='cuda:0', dtype=torch.float64, grad_fn=<SelectBackward>)`

Then I ran the code that computes the attributions for the word, token_type and position embeddings, and the attributions look like this

for "What":

`0.02 + (-0.01) + 0.07 = 0.08 != 0.3861`

Is this expected? Could you please help me understand this?
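A minimal, self-contained sketch of why these numbers need not add up. Integrated gradients attributions from a single run sum to F(input) - F(baseline) (up to discretization error) at whichever layer they are taken, but how that total splits across tokens depends on the layer, because the path is a straight line through the chosen layer's activations and LayerNorm is nonlinear. If, as in the tutorial, `LayerIntegratedGradients` wraps `model.bert.embeddings`, the token scores come from that module's output, i.e. after the word, position and token-type embeddings have been summed and passed through LayerNorm and Dropout, whereas the per-embedding scores are taken before that. If each set of scores is also L2-normalized on its own, the magnitudes are not even on a common scale. The two-token model below is hypothetical: the readout weights, embeddings and baselines are made up, the LayerNorm has no learnable scale or shift, Dropout is omitted (as in `eval()` mode), and gradients are finite differences rather than autograd.

```python
import math

# Hypothetical readout weights for two tokens (4 dims each); a tanh over their
# dot product stands in for everything downstream of the embedding block.
W = [[0.5, -1.0, 2.0, 0.3], [-0.7, 0.4, 0.1, 1.2]]


def layer_norm(v, eps=1e-5):
    # LayerNorm without learnable scale/shift.
    mean = sum(v) / len(v)
    var = sum((x - mean) ** 2 for x in v) / len(v)
    return [(x - mean) / math.sqrt(var + eps) for x in v]


def after_ln(h):
    # h: 8 floats = two post-LayerNorm token vectors; tanh couples the tokens.
    return math.tanh(sum(w * x for w, x in zip(W[0] + W[1], h)))


def before_ln(e):
    # e: 8 floats = two summed (word + position + token-type) embeddings.
    return after_ln(layer_norm(e[:4]) + layer_norm(e[4:]))


def integrated_gradients(f, x, baseline, steps=1000, fd_step=1e-6):
    """Midpoint Riemann-sum IG with central finite-difference gradients."""
    grads = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        for i in range(len(x)):
            up, down = list(point), list(point)
            up[i] += fd_step
            down[i] -= fd_step
            grads[i] += (f(up) - f(down)) / (2 * fd_step)
    return [(xi - b) * g / steps for xi, b, g in zip(x, baseline, grads)]


# Hypothetical summed embeddings for two tokens, and their baselines.
e = [0.85, 0.15, 0.4, 0.4, 0.3, -0.2, 0.6, 0.1]
e0 = [0.2, 0.3, 0.0, 0.2, 0.1, 0.0, 0.2, -0.1]
hx = layer_norm(e[:4]) + layer_norm(e[4:])  # list concatenation: 8 floats
h0 = layer_norm(e0[:4]) + layer_norm(e0[4:])

pre = integrated_gradients(before_ln, e, e0)   # straight path in LayerNorm-input space
post = integrated_gradients(after_ln, hx, h0)  # straight path in LayerNorm-output space
delta = before_ln(e) - before_ln(e0)

for name, attr in (("LayerNorm input ", pre), ("LayerNorm output", post)):
    print(name, "per token:", round(sum(attr[:4]), 4), round(sum(attr[4:]), 4),
          "total:", round(sum(attr), 4))
print("F(x) - F(x0):", round(delta, 4))
```

Both totals should agree with F(x) - F(x0) up to discretization error; the per-token splits come from two different paths and need not coincide.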



@LopezGG, I'd also look into the LayerNorm and Dropout that are applied after those embeddings are summed: https://github.com/huggingface/transformers/blob/master/src/transformers/modeling_bert.py#L213

If you attribute to the inputs of the `LayerNorm`, you might get the result that you're expecting. Let me know if you get a chance to try that.

Not looking into this right now. Thank you, Narine.
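Narine's suggestion can be illustrated with a toy model. Because BERT's embedding block adds the word, position and token-type embeddings before LayerNorm, the gradient with respect to each of the three equals the gradient with respect to their sum. Attributing to all three jointly along one straight path (which is what integrated gradients does when the three embeddings are passed together as a tuple of inputs) therefore splits the attribution at the LayerNorm input exactly, dimension by dimension, so the three per-embedding totals add up to it. This is a sketch under stated assumptions: the single-token model, readout weights, embeddings and baselines are hypothetical, Dropout is omitted, and gradients are finite differences.

```python
import math

READOUT = [0.5, -1.0, 2.0, 0.3]  # hypothetical weights of a toy "start logit"


def layer_norm(v, eps=1e-5):
    # LayerNorm without learnable scale/shift.
    mean = sum(v) / len(v)
    var = sum((x - mean) ** 2 for x in v) / len(v)
    return [(x - mean) / math.sqrt(var + eps) for x in v]


def head(e):
    # Everything after the summed embedding: LayerNorm, then a toy readout.
    return math.tanh(sum(w * x for w, x in zip(READOUT, layer_norm(e))))


def add3(a, b, c):
    # Element-wise sum, as in BERT's embedding block.
    return [x + y + z for x, y, z in zip(a, b, c)]


def integrated_gradients(f, x, baseline, steps=1000, fd_step=1e-6):
    """Midpoint Riemann-sum IG with central finite-difference gradients."""
    grads = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        for i in range(len(x)):
            up, down = list(point), list(point)
            up[i] += fd_step
            down[i] -= fd_step
            grads[i] += (f(up) - f(down)) / (2 * fd_step)
    return [(xi - b) * g / steps for xi, b, g in zip(x, baseline, grads)]


# Hypothetical 4-dim embeddings of one token, and their baselines.
word, pos, tok = [0.8, -0.2, 0.5, 0.1], [0.1, 0.3, -0.1, 0.2], [-0.05, 0.05, 0.0, 0.1]
word0, pos0, tok0 = [0.2, 0.1, 0.0, -0.1], [0.0, 0.2, 0.0, 0.3], [0.0, 0.0, 0.0, 0.0]

# (1) Attribute to the LayerNorm input, i.e. the summed embedding.
e, e0 = add3(word, pos, tok), add3(word0, pos0, tok0)
attr_ln_input = integrated_gradients(head, e, e0)

# (2) Attribute to the three embeddings jointly along one straight path.
#     `word + pos + tok` is list concatenation: a single 12-dim input.
joint = integrated_gradients(
    lambda z: head(add3(z[0:4], z[4:8], z[8:12])), word + pos + tok, word0 + pos0 + tok0
)
a_word, a_pos, a_tok = sum(joint[0:4]), sum(joint[4:8]), sum(joint[8:12])

print("word, position, token-type:", round(a_word, 4), round(a_pos, 4), round(a_tok, 4))
print("their sum:", round(a_word + a_pos + a_tok, 4))
print("LayerNorm-input total:", round(sum(attr_ln_input), 4))
```

In Captum, the analogous measurement would presumably be `LayerIntegratedGradients` attached to the embedding block's `LayerNorm` module (in the Hugging Face implementation, `model.bert.embeddings.LayerNorm`) with `attribute_to_layer_input=True`; treat that mapping as an assumption to check against your Captum version.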