Negative loss

What might I be doing wrong?

Train on 6 samples, validate on 2 samples

Epoch 1/10

6/6 [==============================] - 11s 2s/step - loss: 23.4232 - iou_score: 0.8338 - val_loss: 270.5751 - val_iou_score: 0.2407

Epoch 2/10

6/6 [==============================] - 0s 52ms/step - loss: -10.5406 - iou_score: 1.1558 - val_loss: 15.3342 - val_iou_score: 1.3462

Epoch 3/10

6/6 [==============================] - 0s 51ms/step - loss: -41.8985 - iou_score: 1.6151 - val_loss: -61.7260 - val_iou_score: 2.2258

Epoch 4/10

6/6 [==============================] - 0s 54ms/step - loss: -75.2752 - iou_score: 2.2215 - val_loss: -55.5733 - val_iou_score: 2.1647

Epoch 5/10

6/6 [==============================] - 0s 53ms/step - loss: -106.0778 - iou_score: 3.0294 - val_loss: -94.5141 - val_iou_score: 3.3411

Epoch 6/10

6/6 [==============================] - 0s 52ms/step - loss: -144.1045 - iou_score: 3.9788 - val_loss: -78.0447 - val_iou_score: 2.5654

Epoch 7/10

6/6 [==============================] - 0s 51ms/step - loss: -172.3163 - iou_score: 4.8828 - val_loss: -97.2337 - val_iou_score: 2.8799

Epoch 8/10

6/6 [==============================] - 0s 52ms/step - loss: -194.8336 - iou_score: 6.2343 - val_loss: -152.3081 - val_iou_score: 4.4320

Epoch 9/10

6/6 [==============================] - 0s 51ms/step - loss: -212.0709 - iou_score: 7.1287 - val_loss: -206.3270 - val_iou_score: 5.0225

Epoch 10/10

6/6 [==============================] - 0s 54ms/step - loss: -227.2620 - iou_score: 7.6453 - val_loss: -256.2039 - val_iou_score: 5.6111

Segmentation for a single class.

Dataset:

X:

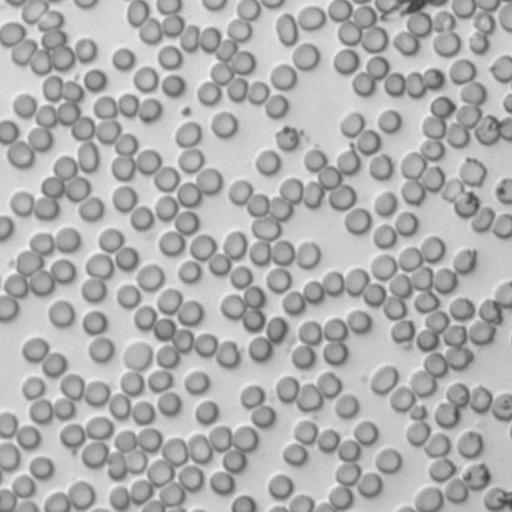

Y:

Code: unet_train.txt

Issue Analytics

- State:

- Created 4 years ago

- Comments: 6 (2 by maintainers)

Usually the network output is passed through a softmax or sigmoid activation, so it lies in the range 0…1. Your target masks should therefore be in the same range. This does depend on the loss function, though, since mask normalisation can also be done there.
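To illustrate why an `iou_score` above 1 and a negative loss point to unscaled targets, here is a minimal NumPy sketch. It assumes a soft IoU of the form intersection / union computed directly on the raw values. The helper `soft_iou` and the toy arrays are hypothetical, and the actual loss used in `unet_train.txt` is not shown in this thread, so treat this as an illustration rather than a reproduction.

```python
import numpy as np

def soft_iou(y_true, y_pred, eps=1e-7):
    """Soft IoU on raw values (hypothetical helper, not a library function)."""
    y_true = y_true.astype(np.float64)
    y_pred = y_pred.astype(np.float64)
    intersection = np.sum(y_true * y_pred)
    union = np.sum(y_true) + np.sum(y_pred) - intersection
    return (intersection + eps) / (union + eps)

# Sigmoid-like prediction in [0, 1] for a 4x4 image.
y_pred = np.zeros((4, 4))
y_pred[1:3, 1:3] = 0.9

# The same ground-truth mask stored two ways.
mask_01 = np.zeros((4, 4), dtype=np.uint8)
mask_01[1:3, 1:3] = 1
mask_255 = mask_01 * 255  # values {0, 255}, as loaded from a typical PNG

iou_01 = soft_iou(mask_01, y_pred)    # stays within [0, 1]
iou_255 = soft_iou(mask_255, y_pred)  # exceeds 1 because the target is unscaled

print("IoU with {0, 1} mask:  ", iou_01)
print("IoU with {0, 255} mask:", iou_255)
print("1 - IoU with {0, 255} mask:", 1.0 - iou_255)  # negative
```

With the {0, 255} mask the intersection term is inflated by a factor of 255 while the prediction stays at most 1, so the ratio is no longer bounded by 1 and any loss of the form `1 - IoU` goes negative.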

Hi @fuzzyBatman

Make sure your masks are binary images with values {0, 1} (not {0, 255}).
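A minimal sketch of that check and conversion, assuming the masks are loaded as NumPy arrays and are strictly two-valued (background 0, foreground either 1 or 255). The helper name `to_binary_mask` is hypothetical.

```python
import numpy as np

def to_binary_mask(mask):
    """Map a two-valued mask ({0, 255} or {0, 1}) to float32 {0, 1}.

    Hypothetical helper: any non-zero pixel is treated as foreground.
    """
    mask = np.asarray(mask)
    return (mask > 0).astype(np.float32)

# Toy mask as it might come out of an 8-bit image file.
raw_mask = np.array([[0, 255, 255],
                     [0,   0, 255]], dtype=np.uint8)

print("before:", np.unique(raw_mask))   # values present in the raw mask
y = to_binary_mask(raw_mask)
print("after: ", np.unique(y))          # should contain only 0 and 1

# Sanity check worth running on the real Y array before training.
assert set(np.unique(y).tolist()) <= {0.0, 1.0}
```

Because the comparison is `mask > 0`, the conversion is safe to apply to masks that are already {0, 1}. For anti-aliased masks with intermediate grey values, a different threshold may be more appropriate.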