[Bug] Can't get Ray cluster fully utilized

Search before asking

- I searched the issues and found no similar issues.

(I created this issue based on a chat with Sang Cho)

Ray Component

Ray Clusters

What happened + What you expected to happen

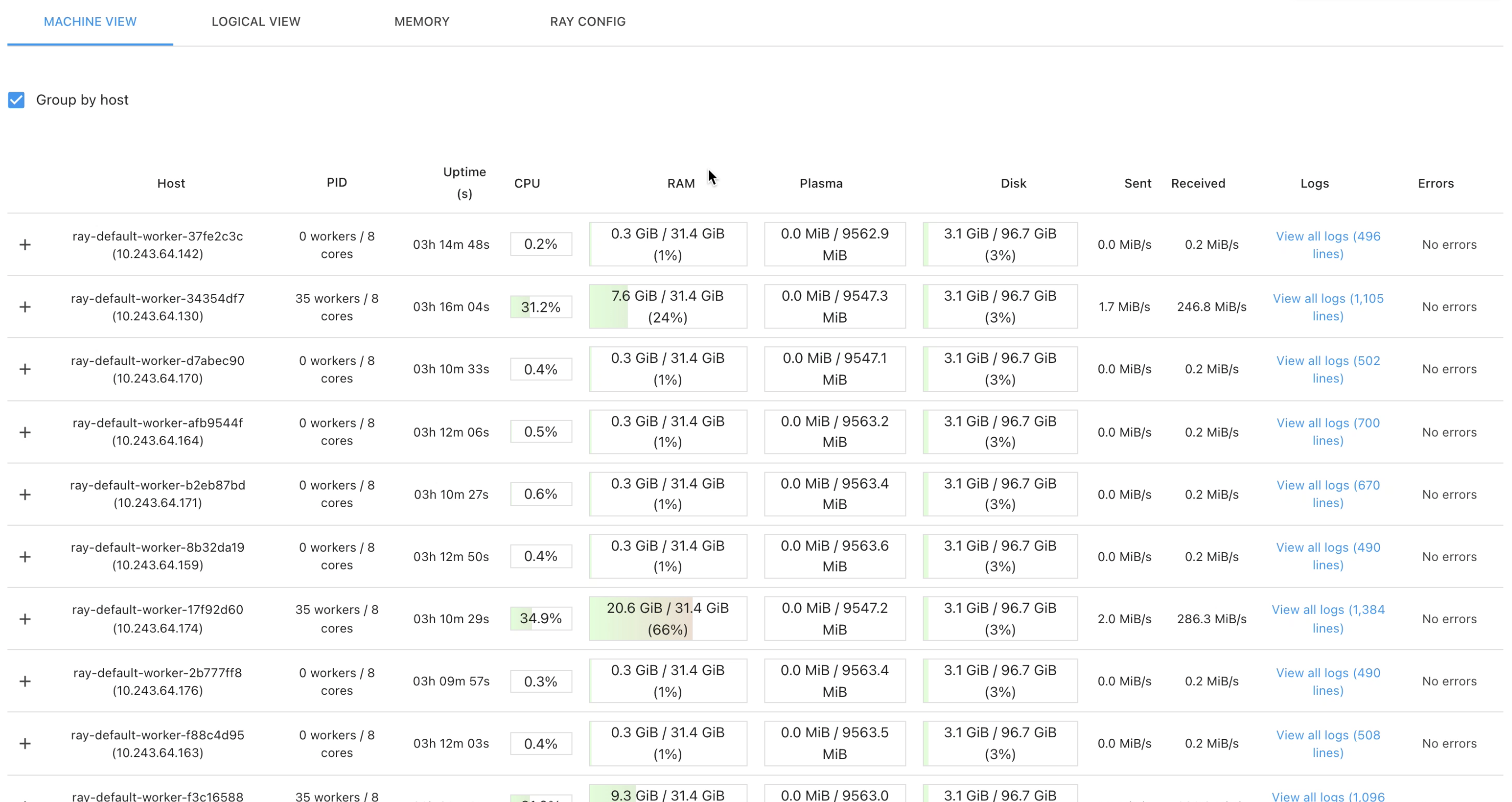

I created a cluster with 120 nodes, each with 8 vCPUs and 32 GB of memory. I triggered 10k task executions and used the Ray decorator to assign 0.15 vCPU to each task execution. While that should be enough work to fully utilize the cluster, many nodes are only lightly utilized or not utilized at all (according to the Ray dashboard).
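To make the "enough work" claim concrete, here is a rough back-of-the-envelope check. It assumes CPU is the only limiting resource and that every node advertises all 8 vCPUs to Ray; the helper name `task_slots` is made up for this illustration.

```python
import math


def task_slots(nodes, cpus_per_node, cpus_per_task):
    """Return (slots per node, slots in the whole cluster) for a fractional-CPU task."""
    # A task needs its full CPU share on one node, so round down per node.
    per_node = math.floor(cpus_per_node / cpus_per_task)
    return per_node, per_node * nodes


per_node, total = task_slots(nodes=120, cpus_per_node=8, cpus_per_task=0.15)
print(per_node, total)  # 53 6360
```

Under these assumptions the cluster can run roughly 6,360 tasks at once, so 10k queued tasks should be enough to occupy every node if they are handed out quickly enough.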

Is this familiar behavior? Have you seen this in other scenarios of an equivalent size?

Versions / Dependencies

1.9

Reproduction script

I just call the task 10k times; it usually finishes within a small, single-digit number of seconds. I also tried setting the `RAY_max_pending_lease_requests_per_scheduling_category` environment variable to the number of nodes, but didn’t see a major effect.
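The issue does not include the actual script, so the following is only a minimal sketch of what such a reproduction might look like. The task body, the names `short_task` and `run_repro`, and the use of `ray.init(address="auto")` to attach to an already running cluster are assumptions, not the reporter's code.

```python
def short_task(i):
    # Placeholder body; the real task from the issue is not shown.
    return i


def run_repro(num_tasks=10_000):
    # Imported here so the placeholder above can be inspected without Ray installed.
    import ray

    ray.init(address="auto")  # assumes a cluster is already running

    # Same effect as decorating the function with @ray.remote(num_cpus=0.15).
    remote_task = ray.remote(num_cpus=0.15)(short_task)

    # All 10k tasks are submitted from the single driver process.
    refs = [remote_task.remote(i) for i in range(num_tasks)]
    return ray.get(refs)


if __name__ == "__main__":
    results = run_repro()
    print(len(results))
```

With a sketch like this, every task is submitted from one driver process on one node, which is the situation the report describes.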

Anything else

No response

Are you willing to submit a PR?

- Yes I am willing to submit a PR!

Issue Analytics

- State:

- Created 2 years ago

- Comments: 6 (4 by maintainers)

We should tackle this in Ray 2.1.

The fundamental reason is that Ray's single-node scheduler becomes a bottleneck for embarrassingly parallel workloads like this one. A simple workaround for now is to submit the tasks through actors placed on different nodes.
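One possible way to apply that workaround is sketched below. It is an illustration under stated assumptions, not the maintainers' implementation: the names `Submitter`, `split_round_robin`, and `run_via_actors` are hypothetical, the task body is a placeholder, and pinning one actor per node relies on the `node:<ip>` resource that Ray registers for each node.

```python
def split_round_robin(items, n_parts):
    """Deal items into n_parts lists so each submitter gets a similar share."""
    return [items[i::n_parts] for i in range(n_parts)]


def short_task(i):
    # Placeholder body; the real task from the issue is not shown.
    return i


def run_via_actors(num_tasks=10_000):
    # Imported here so the helpers above can be used without Ray installed.
    import ray

    ray.init(address="auto")  # assumes a cluster is already running
    remote_task = ray.remote(num_cpus=0.15)(short_task)

    @ray.remote(num_cpus=0)  # the submitter itself should not reserve a CPU
    class Submitter:
        def run_batch(self, batch):
            # Tasks are submitted from this actor's node rather than the driver's.
            return ray.get([remote_task.remote(i) for i in batch])

    # One submitter per live node, pinned through the per-node resource.
    node_ips = [n["NodeManagerAddress"] for n in ray.nodes() if n["Alive"]]
    submitters = [
        Submitter.options(resources={f"node:{ip}": 0.01}).remote()
        for ip in node_ips
    ]

    batches = split_round_robin(list(range(num_tasks)), len(submitters))
    nested = ray.get(
        [s.run_batch.remote(b) for s, b in zip(submitters, batches)]
    )
    return [result for batch in nested for result in batch]


if __name__ == "__main__":
    print(len(run_via_actors()))
```

The idea is that each actor submits only its own share of the tasks, so the submission load is spread across many nodes instead of passing through a single one. Whether this fully resolves the utilization gap on a 120-node cluster would need to be measured.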