[serve] Actor RAM size increases when sending large data

What is the problem?

When sending video data (binary data and NumPy arrays) to a Ray Serve endpoint, the RAM usage of that worker increases on each call.

At the same time, the object store is not used:

Setup:

- Ray: 1.0.1

- OS: Ubuntu 18.04

- Python: 3.7.5

Reproduction (REQUIRED)

I was able to reproduce it with this script:

    import time

    import requests
    from ray import serve

    client = serve.start()


    def echo(flask_request):
        # The backend ignores the uploaded file; it only reads the "name" query parameter.
        return "hello " + flask_request.args.get("name", "serve!")


    client.create_backend("hello", echo)
    client.create_endpoint("hello", backend="hello", route="/hello")

    url = "http://localhost:8000/hello"
    payload = {}
    headers = {"apikey": "asdfasfwerqexcz"}

    while True:
        # Open the video in a context manager so the file handle is closed after each request.
        with open("./my_video-53.webm", "rb") as video:
            files = [("test", ("my_video-53.webm", video, "video/webm"))]
            response = requests.request("GET", url, headers=headers, data=payload, files=files)
        time.sleep(1)

The videos I used were between 1 MB and 4 MB; with smaller files the increase is less noticeable.
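To check the size dependence without the original video, a synthetic file of a chosen size can stand in for `my_video-53.webm`. This is a minimal sketch that is not part of the original report: it assumes only the payload size matters (not its content), and `make_payload_file` is a hypothetical helper name.

```python
import os
import tempfile
from typing import Optional


def make_payload_file(size_mb: float, directory: Optional[str] = None) -> str:
    """Hypothetical helper: write `size_mb` MiB of random bytes to a temp file and return its path."""
    size_bytes = int(size_mb * 1024 * 1024)
    # mkstemp creates the file and returns an open OS-level descriptor plus the path.
    fd, path = tempfile.mkstemp(suffix=".bin", dir=directory)
    with os.fdopen(fd, "wb") as f:
        # Random bytes are a stand-in for the video; the caller is responsible for deleting the file.
        f.write(os.urandom(size_bytes))
    return path
```

Opening the returned path in the request loop instead of `./my_video-53.webm`, once with `size_mb=1` and once with `size_mb=4`, would show whether the worker's memory growth scales with the request size.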

- [x] I have verified my script runs in a clean environment and reproduces the issue.

- [ ] I have verified the issue also occurs with the latest wheels. I am not able to check this because the console is broken (https://github.com/ray-project/ray/issues/11932) and does not display anything.



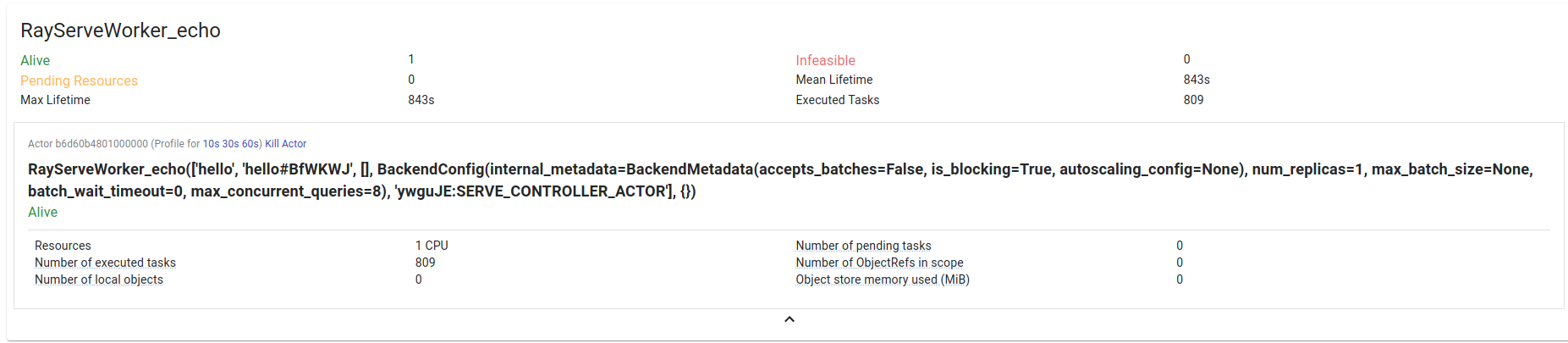

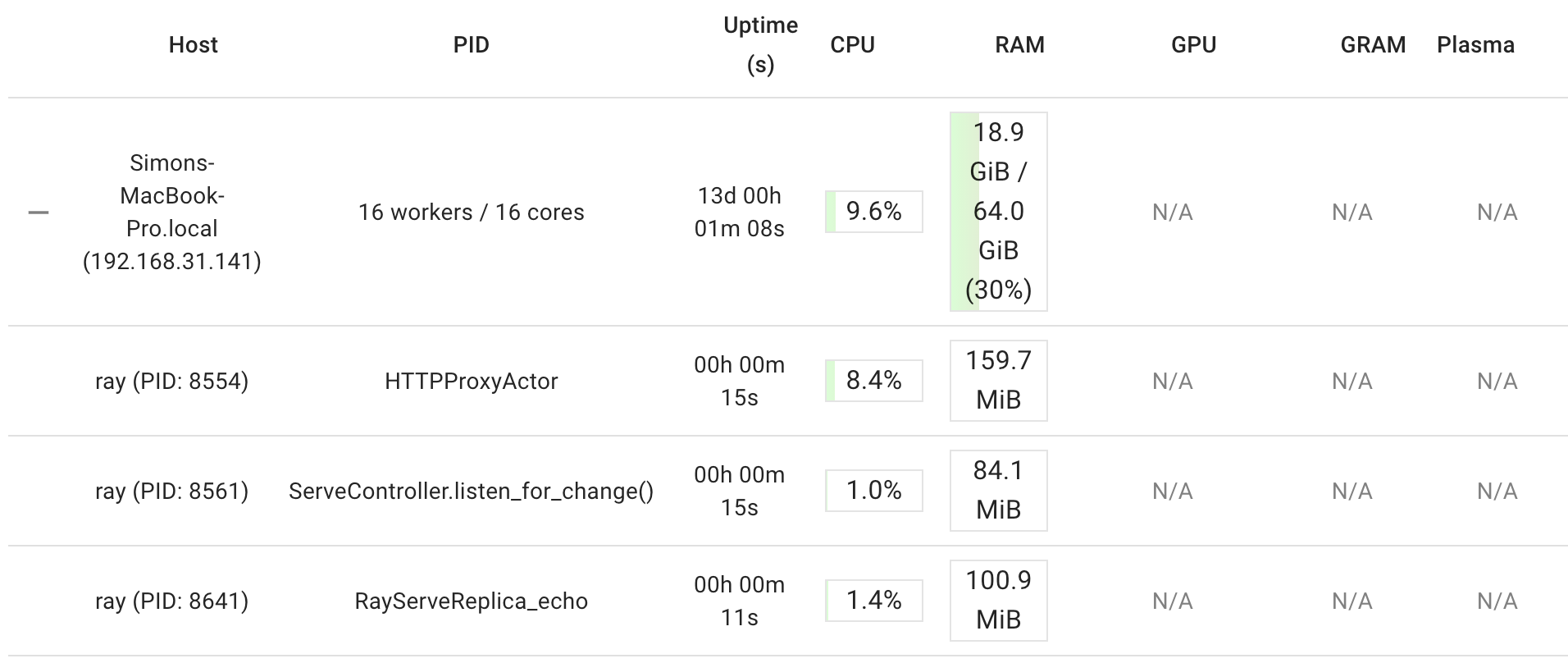

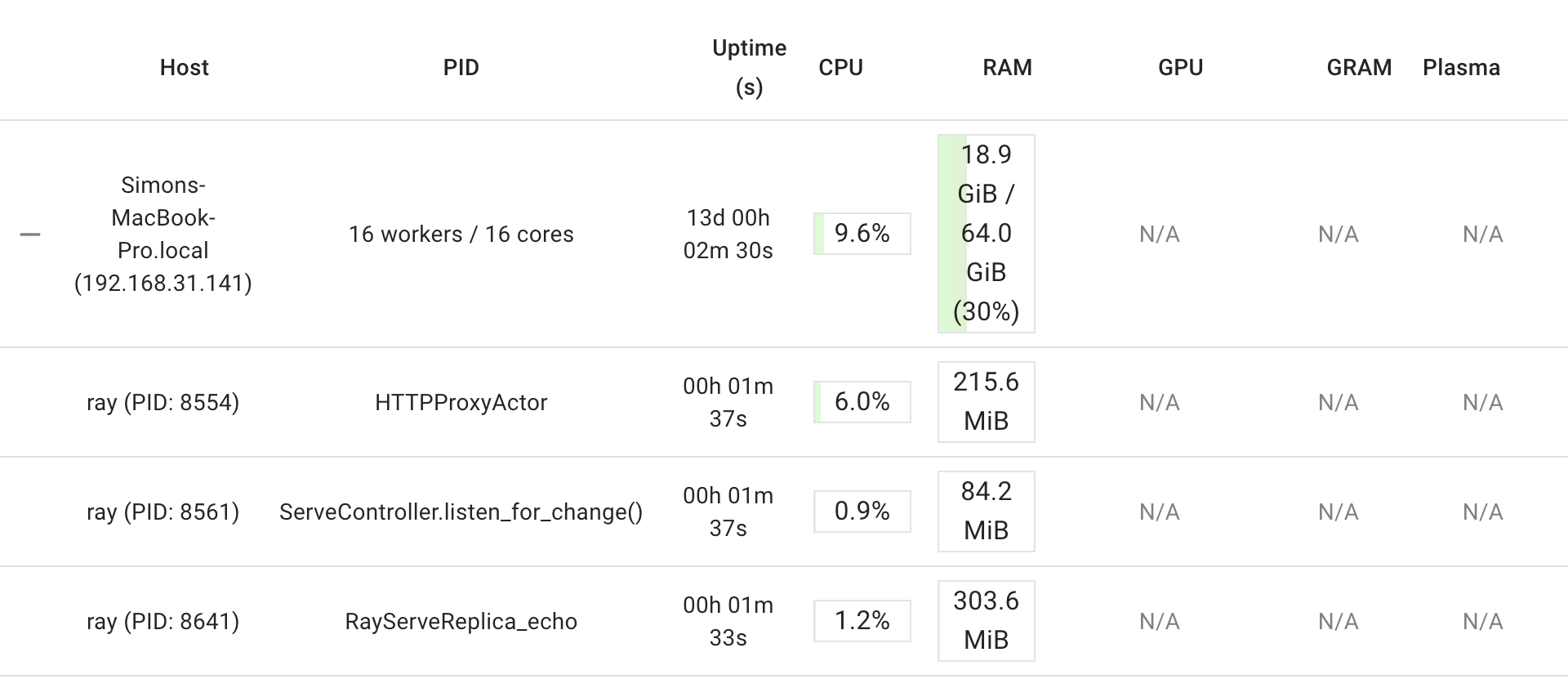

Hi @TanjaBayer, thanks for this report! I was able to reproduce it on the latest master with the following script (I swapped the video file for a NumPy array).

At start:

After a minute:

(cc @edoakes)
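To put numbers on the "at start" versus "after a minute" comparison, the replica's resident memory can be sampled at fixed intervals while the request loop runs. This is a minimal sketch, not part of the original thread: it assumes Linux (it reads `/proc/<pid>/status`, available on the reporter's Ubuntu 18.04) and that you already know the PID of the Serve backend replica; `read_rss_kib` and `sample_rss` are hypothetical helper names.

```python
import os
import time
from typing import List, Optional


def read_rss_kib(pid: Optional[int] = None) -> int:
    """Hypothetical helper: return the resident set size (VmRSS) of a process in KiB (Linux only)."""
    pid = os.getpid() if pid is None else pid
    with open(f"/proc/{pid}/status") as status:
        for line in status:
            if line.startswith("VmRSS:"):
                # The line looks like "VmRSS:\t  123456 kB"; the second field is the value in kB.
                return int(line.split()[1])
    raise RuntimeError(f"no VmRSS entry for pid {pid}")


def sample_rss(pid: Optional[int] = None, samples: int = 60, interval_s: float = 1.0) -> List[int]:
    """Hypothetical helper: read the RSS `samples` times, sleeping `interval_s` seconds between reads."""
    readings = []
    for i in range(samples):
        readings.append(read_rss_kib(pid))
        if i < samples - 1:
            time.sleep(interval_s)
    return readings
```

For example, calling `sample_rss(pid=...)` with the replica's PID (found with `ps` or `top`) during the reproduction loop and comparing the first and last readings gives the growth over one minute with the default arguments.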

@edoakes Not yet, but there is an open discussion about it in Slack.