localCachedMap pubsub/updates broken after elasticache cluster maintenance or failover test

Expected behavior

Updates to RMap data that include a local cache should propagate to all nodes, including their local caches, as the cluster topology changes. When AWS elasticache maintenance or a failover occurs and nodes switch between master and slave, RLocalCachedMap pubsub subscriptions should be re-established, and any updates to local cache values should continue to be captured and propagated across all redisson instances.

AWS elasticache cluster uptime is maintained throughout all maintenance operations, so redisson should follow topology changes and node type changes (master/slave), and re-establish pubsub connections for RLocalCachedMaps so that data stays synchronized with the local caches.

Actual behavior

RLocalCachedMap local cache values are no longer updated on remote redisson clients once cluster maintenance is performed. Subsequent changes to an RLocalCachedMap remain stale in the local cache of redisson nodes that did not originate the new data, even when the changes are pushed after the cluster topology has fully recovered. This leaves different nodes holding different values for the same keys in their local caches.

Steps to reproduce or test case

- Create elasticache redis cluster with cluster mode enabled, multiAZ enabled

- Create and connect 2 instances of Redisson, and create common RLocalCachedMap

- Trigger aws elasticache maintenance/failover test:

aws elasticache test-failover --replication-group-id dev-generic-failtest --node-group-id 0001

Redis version 6.2.6, 6.0.5

Redisson version 3.17.7

Redisson configuration

- Using a TLS/SSL connection via “rediss://” connection string to the AWS elasticache configuration endpoint

- We are also using a nameMapper: `

Config config = new Config();
ClusterServersConfig clusterServers = config.useClusterServers()
        .setRetryInterval(3000)
        .setTimeout(30000)
        .setReadMode(ReadMode.MASTER_SLAVE)
        .setNameMapper(new NameMapper() {
            @Override
            public String map(String name) {
                return KEY_PREFIX_COLON + name;
            }

            @Override
            public String unmap(String name) {
                return name.replace(KEY_PREFIX_COLON, "");
            }
        });

`

- Default values for everything else.
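Because the nameMapper rewrites every object name, it may help to check its round trip in isolation when debugging. The following is a minimal, self-contained sketch that mirrors the `map`/`unmap` logic from the configuration above without depending on Redisson. The value `"myapp:"` for `KEY_PREFIX_COLON` and the method `unmapPrefixOnly` are assumptions made for illustration; the real prefix is not shown in this report, and nothing here is claimed to be the cause of the issue.

```java
public class NameMapperSketch {
    // Hypothetical prefix; the real value of KEY_PREFIX_COLON is not shown in the report.
    static final String KEY_PREFIX_COLON = "myapp:";

    // Same logic as map() in the configuration above.
    static String map(String name) {
        return KEY_PREFIX_COLON + name;
    }

    // Same logic as unmap() above: String.replace removes every occurrence of the prefix.
    static String unmapAsWritten(String name) {
        return name.replace(KEY_PREFIX_COLON, "");
    }

    // Illustrative alternative (not from the report) that strips only a leading prefix.
    static String unmapPrefixOnly(String name) {
        return name.startsWith(KEY_PREFIX_COLON)
                ? name.substring(KEY_PREFIX_COLON.length())
                : name;
    }

    public static void main(String[] args) {
        String plain = "cachedmap";
        String tricky = "report:myapp:daily"; // name that happens to contain the prefix text

        System.out.println(unmapAsWritten(map(plain)));   // round trip preserved
        System.out.println(unmapAsWritten(map(tricky)));  // inner "myapp:" is also removed
        System.out.println(unmapPrefixOnly(map(tricky))); // round trip preserved
    }
}
```

For a name such as `cachedmap`, which does not contain the prefix text, both variants return the original name, so the difference only matters for names that embed the prefix.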

I created a simple test application to confirm and reproduce this issue. I can publish the entire repo if desired; here is the pertinent code: `

MainApp() {
    LOG.info("Starting up test...");
    int count = -1;

    // Local cached map using the UPDATE sync strategy
    RLocalCachedMap<Object, Object> testMap = redissonConnection.getRedisson()
            .getLocalCachedMap("cachedmap", LocalCachedMapOptions.defaults().syncStrategy(LocalCachedMapOptions.SyncStrategy.UPDATE));
    testMap.preloadCache();

    // Each instance writes two keys prefixed with its own short id
    String shortId = redissonConnection.getRedisson().getId().substring(0, 7);
    testMap.put(shortId + "_increasing", ++count);
    testMap.put(shortId + "_timestamp", System.currentTimeMillis());

    Map<Object, Object> localCachedMap = testMap.getCachedMap();
    LOG.info("starting main loop in " + this.getClass().getName());

    // Clear the map when the JVM shuts down
    Thread printingHook = new Thread(testMap::clear);
    Runtime.getRuntime().addShutdownHook(printingHook);

    while (true) {
        try {
            Thread.sleep(5000);
            // Print the local cache view, then the map as read through testMap
            LOG.info("mynode: {}, current time: {} local cache:", shortId, System.currentTimeMillis());
            localCachedMap.forEach((key, value) -> LOG.info("{}: {}", key, value));
            LOG.info("mynode: {}, current time: {} real cache:", shortId, System.currentTimeMillis());
            testMap.forEach((key, value) -> LOG.info("{}: {}", key, value));
            // Update this instance's own keys
            testMap.put(shortId + "_increasing", ++count);
            testMap.put(shortId + "_timestamp", System.currentTimeMillis());
        } catch (Exception ex) {
            ex.printStackTrace();
        }
    }
}

`

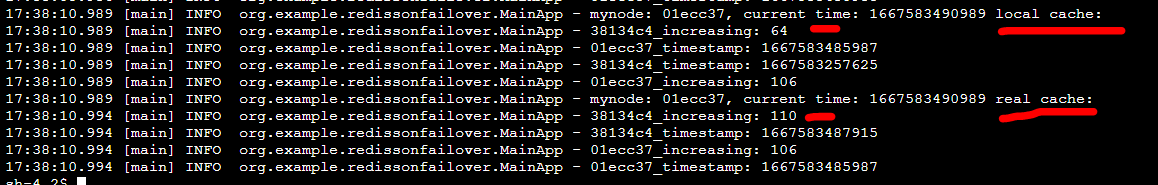

With this test, I run 2 copies of this same application. In the logs you can see that after the test-failover occurs, each redisson instance stops receiving new local cache values for data that originated on the other node.

I have enabled TRACE logging for org.redisson and have included the logs generated for both of these instances (same code running in 2 different JVMs). app02.log app01.log

Here is a pertinent section of app02.log:

Notice how the local cache for the data originating at the remote node is old and stale. The local cache stays correct on both nodes before the failover test, and stops being updated during it.
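The comparison made by eye in the logs could also be automated. Below is a minimal sketch, using only plain `java.util` maps, that reports keys whose locally cached value differs from the value read from Redis. The class and method names are hypothetical. In the test application, the first argument would be the `getCachedMap()` view and the second a copy of the entries collected during the `testMap.forEach` pass. A mismatch can be transient while an update is in flight, so a key is only suspicious if it stays in the result across several iterations.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Objects;

public class StaleCacheCheck {
    /**
     * Returns every key whose local value differs from the remote value,
     * mapped to a "local -> remote" description. Keys missing locally
     * are reported with a local value of null.
     */
    static Map<Object, String> staleEntries(Map<Object, Object> local,
                                            Map<Object, Object> remote) {
        Map<Object, String> stale = new LinkedHashMap<>();
        for (Map.Entry<Object, Object> entry : remote.entrySet()) {
            Object localValue = local.get(entry.getKey());
            if (!Objects.equals(localValue, entry.getValue())) {
                stale.put(entry.getKey(), localValue + " -> " + entry.getValue());
            }
        }
        return stale;
    }

    public static void main(String[] args) {
        // Made-up sample data: node "aaaaaaa" holds an old counter for node "bbbbbbb"
        Map<Object, Object> local = new LinkedHashMap<>();
        local.put("aaaaaaa_increasing", 12);
        local.put("bbbbbbb_increasing", 7);

        Map<Object, Object> remote = new LinkedHashMap<>();
        remote.put("aaaaaaa_increasing", 12);
        remote.put("bbbbbbb_increasing", 12);

        staleEntries(local, remote)
                .forEach((key, diff) -> System.out.println("stale " + key + ": " + diff));
    }
}
```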


Please try attached version.

redisson-3.18.1-SNAPSHOT.jar.zip

@servionsolutions

Thank you for the analysis.

Can you ask the AWS team to comment on this case?