User guide formulas for macro average recall and precision seem wrong

To clarify, the calculation provided by recall_score(y_true, y_pred, average='macro') is correct, but the formula in the User Guide seems to be wrong. Here's an example showing that recall_score and precision_score calculate the correct numbers:

In [2]: y_true = [0, 1, 2, 0, 1, 2]

In [3]: y_pred = [0, 2, 1, 0, 0, 1]

In [4]: confusion_matrix(y_true, y_pred)

Out[4]:

array([[2, 0, 0],
       [1, 0, 1],
       [0, 2, 0]])

In [5]: recall_score(y_true, y_pred, average='macro')

Out[5]: 0.3333333333333333

In [6]: precision_score(y_true, y_pred, average='macro')

Out[6]: 0.2222222222222222

In [14]: np.mean(np.diagonal(C) / np.sum(C, axis=1))

Out[14]: 0.3333333333333333

In [15]: np.mean(np.diagonal(C) / np.sum(C, axis=0))

Out[15]: 0.2222222222222222

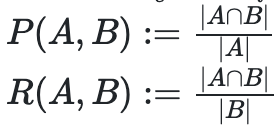

However, this is what the User Guide gives for the precision and recall formulas:

So, the User Guide says that recall for each class l is computed by dividing by the number of samples with predicted label l, but it should divide by the number of samples with true label l. The reverse holds for the precision definition: it should divide by the number of samples with predicted label l.
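As a rough check of that claim, the following sketch recomputes both macro averages from the example data using only the standard library. It assumes the scikit-learn confusion matrix convention (rows are true labels, columns are predicted labels). The helper `safe_div` and the choice to score an empty denominator as 0.0 are assumptions made for this illustration, not part of the original report.

```python
from collections import Counter

y_true = [0, 1, 2, 0, 1, 2]
y_pred = [0, 2, 1, 0, 0, 1]
labels = sorted(set(y_true) | set(y_pred))

# C[i][j] = number of samples with true label i and predicted label j
pairs = Counter(zip(y_true, y_pred))
C = [[pairs[(t, p)] for p in labels] for t in labels]


def safe_div(num, den):
    """Hypothetical helper: treat an empty denominator as a score of 0.0."""
    return num / den if den else 0.0


n = len(labels)

# Recall for class l: correct predictions / samples whose TRUE label is l (row sum)
recalls = [safe_div(C[l][l], sum(C[l])) for l in range(n)]

# Precision for class l: correct predictions / samples PREDICTED as l (column sum)
precisions = [safe_div(C[l][l], sum(row[l] for row in C)) for l in range(n)]

macro_recall = sum(recalls) / n
macro_precision = sum(precisions) / n

print(macro_recall)
print(macro_precision)
```

The two printed values agree with the 0.333... and 0.222... reported above by recall_score and precision_score, which is consistent with recall using row sums (true labels) and precision using column sums (predicted labels).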

Issue Analytics

- State:

- Created 3 years ago

- Reactions:2

- Comments:12 (8 by maintainers)


I think the confusing notation should be fixed, and where possible we should make the functions take arguments true then predicted.

I believe strongly that precision and recall should be defined in terms of set notation. It makes it much easier to reason about variants of the metric (e.g. micro averages in multiclass classification where some class is considered the majority class to be ignored; or the usages of precision and recall in information retrieval and information extraction, where you can't presume to be able to count the total number of items being considered for prediction). It also makes the equivalence between F1 and the Dice coefficient more apparent.
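One way to picture the set-based view is the sketch below, which is only an illustration and not scikit-learn's implementation. For a class l, it takes T as the set of sample indices whose true label is l and P as the set of indices predicted as l. The function names and the convention of returning 0.0 for empty sets are assumptions made here.

```python
def precision(true_set, pred_set):
    # |T ∩ P| / |P|: fraction of predicted items that are correct
    return len(true_set & pred_set) / len(pred_set) if pred_set else 0.0


def recall(true_set, pred_set):
    # |T ∩ P| / |T|: fraction of true items that were found
    return len(true_set & pred_set) / len(true_set) if true_set else 0.0


def f1(true_set, pred_set):
    # Harmonic mean of precision and recall
    p, r = precision(true_set, pred_set), recall(true_set, pred_set)
    return 2 * p * r / (p + r) if p + r else 0.0


def dice(a, b):
    # Dice coefficient: 2|A ∩ B| / (|A| + |B|)
    return 2 * len(a & b) / (len(a) + len(b)) if (a or b) else 0.0


y_true = [0, 1, 2, 0, 1, 2]
y_pred = [0, 2, 1, 0, 0, 1]

# Index sets for class 0
T = {i for i, y in enumerate(y_true) if y == 0}
P = {i for i, y in enumerate(y_pred) if y == 0}

print(precision(T, P), recall(T, P))
print(f1(T, P), dice(T, P))
```

Written this way, neither function needs the total number of items under consideration, and F1 and the Dice coefficient come out equal (up to floating-point rounding) for the same pair of sets.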

Hi, guys! I have a paper starting next Tuesday and am busy preparing for it this weekend. I will be back next week after my papers end. Hope you don't mind, @CameronBieganek 😄