WIP: Addition of MLE for stats.invgauss/wald

Part of issue #11782.

I have been investigating the maximum likelihood estimators given in Chapter 25, Inverse Gaussian (Wald) Distribution, but the MLE results don’t seem to come close to the parameters fitted by either the `wald` or the `invgauss` distribution in SciPy.

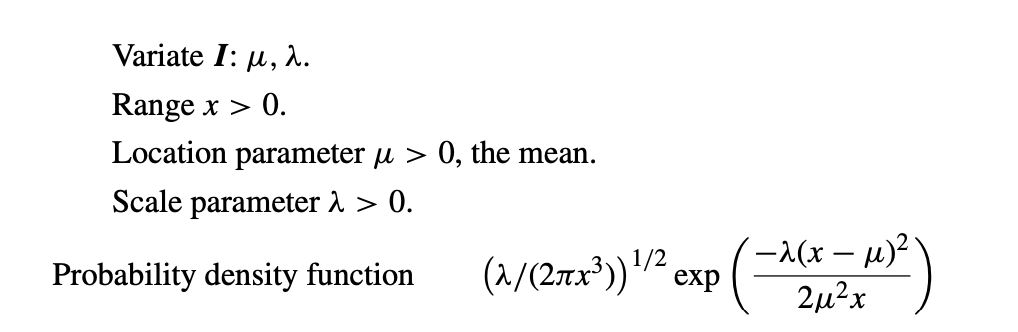

Here is how the PDF is described in the textbook we have been using, on page 120:

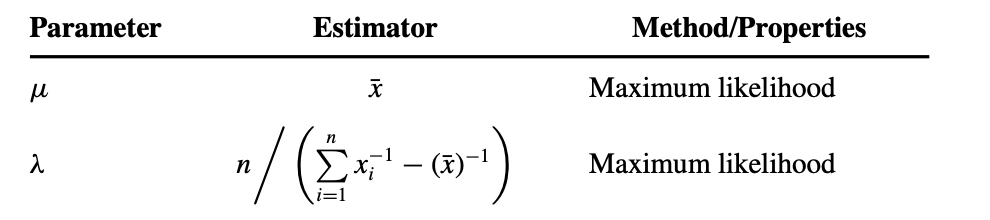

And here are the equations for MLE.
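For reference, here is the standard form, assuming the book uses the common parametrization with mean μ and a second parameter λ (the "scale" estimated by the code further down). The density and the closed-form MLEs for a sample $x_1, \dots, x_n$ are:

$$
f(x;\mu,\lambda) = \sqrt{\frac{\lambda}{2\pi x^{3}}}\,\exp\!\left(-\frac{\lambda\,(x-\mu)^{2}}{2\mu^{2}x}\right), \qquad x > 0
$$

$$
\hat{\mu} = \bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i, \qquad
\hat{\lambda} = \frac{n}{\sum_{i=1}^{n}\left(x_i^{-1} - \hat{\mu}^{-1}\right)}
$$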

It may be another issue with terminology: the textbook uses mu to represent the location, but in `stats.invgauss` mu is implemented as a shape parameter. Also, the mean of a random sample only appears to match the mu used to generate it when `loc` and `scale` are at their defaults, `loc=0` and `scale=1`.

For example,

```python
from scipy.stats import invgauss

data = invgauss.rvs(mu=3.25, size=10000)
print(data.mean())
```

=> `3.223328...` This is just one example, but in general the sample mean appears to match `mu`.

With other location and scale values it doesn’t match.

```python
from scipy.stats import invgauss

data = invgauss.rvs(mu=3.25, scale=2, size=10000)
print(data.mean())
```

=> `6.62447...` Again just one example, but in general the sample mean does not match `mu`.
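This discrepancy is what SciPy's parametrization predicts: `invgauss(mu, loc, scale)` has mean `loc + mu * scale`, so with `mu=3.25, scale=2` the sample mean should land near 6.5 rather than 3.25. Below is a small check with a seeded generator. The seed and sample size are arbitrary choices.

```python
import numpy as np
from scipy.stats import invgauss

# Seed and sample size are arbitrary; a larger sample tightens the estimate.
rng = np.random.default_rng(12345)
mu, scale = 3.25, 2.0

data = invgauss.rvs(mu, scale=scale, size=100_000, random_state=rng)

# SciPy's invgauss(mu, loc, scale) has mean loc + mu * scale (loc = 0 here).
expected = invgauss.mean(mu, scale=scale)
print(expected)      # mu * scale
print(data.mean())   # close to mu * scale, not to mu itself
```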

The code for the scale is

```python
import numpy as np
scale = len(data) / np.sum(data**(-1) - mu**(-1))  # mu: estimate of the book's location parameter
```

I played around with both of these equations quite a bit, trying to make them match (or beat) the default `fit` method. Apart from the relationship described above, I’m not sure these estimators will work for these distributions. It may be possible to use the sample mean to determine the shape parameter of `invgauss` if the user has already fixed `floc=0` and `fscale=1`, but that seems like a very niche use case.
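The niche case mentioned above has `loc` fixed at 0 and the scale fixed at 1. There, the MLE of SciPy's `mu` reduces to the sample mean divided by the fixed scale, because the book's μ-hat is the sample mean for any fixed λ. Below is a minimal check of that claim. It compares log-likelihoods directly rather than calling `fit`. `fscale` is only a local variable named after `fit`'s keyword.

```python
import numpy as np
from scipy.stats import invgauss

rng = np.random.default_rng(2)
fscale = 1.0  # scale held fixed, as a user would do with fit(..., fscale=1)
data = invgauss.rvs(3.25, scale=fscale, size=10_000, random_state=rng)

# With loc = 0 and the scale fixed, the book's mean estimate is still the
# sample mean, so SciPy's shape estimate is mean(data) / fscale.
mu_hat = data.mean() / fscale


def loglik(mu):
    """Total log-likelihood of the sample for SciPy shape mu (loc=0, fixed scale)."""
    return invgauss.logpdf(data, mu, loc=0, scale=fscale).sum()


# Moving mu away from mu_hat in either direction should lower the log-likelihood.
print(mu_hat)
print(loglik(mu_hat) - loglik(0.99 * mu_hat), loglik(mu_hat) - loglik(1.01 * mu_hat))
```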

What are your thoughts?

- Created 3 years ago

- Comments: 6 (6 by maintainers)


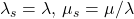

I think if you go a bit further, you’ll find that with `loc=0` they are equivalent. SciPy’s shape `mu` equals the book’s mu divided by the book’s scale, and SciPy’s `scale` equals the book’s scale. So if the user passes in `floc=0`, you could use the equations from the book to get the book’s version of the parameters, then use the relationships above to get SciPy’s parameters.

Update: Yes:

gives
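Below is a minimal sketch of the conversion described above, assuming `loc` is fixed at 0. The helper name `invgauss_mle_floc0` is hypothetical, not a SciPy function. It computes the book's estimates and maps them to SciPy's `(mu, loc, scale)`. It then checks the result against `invgauss.fit(data, floc=0)` by comparing log-likelihoods, since the closed form is the exact maximizer.

```python
import numpy as np
from scipy.stats import invgauss


def invgauss_mle_floc0(data):
    """Closed-form MLE for scipy.stats.invgauss with loc fixed at 0.

    Hypothetical helper (not part of SciPy). Computes the book's estimates
    (mean mu_book and scale lam), then converts them to SciPy's parameters:
    scale = lam and mu = mu_book / lam.
    """
    data = np.asarray(data, dtype=float)
    if np.any(data <= 0):
        raise ValueError("with loc=0, all data must be positive")
    mu_book = data.mean()
    lam = len(data) / np.sum(1.0 / data - 1.0 / mu_book)
    return mu_book / lam, 0.0, lam  # (mu, loc, scale) in SciPy's convention


rng = np.random.default_rng(0)
data = invgauss.rvs(3.25, scale=2.0, size=10_000, random_state=rng)

mu_hat, loc_hat, scale_hat = invgauss_mle_floc0(data)
mu_fit, loc_fit, scale_fit = invgauss.fit(data, floc=0)

# The closed form is the exact maximizer, so its log-likelihood should be at
# least as large as whatever fit() returns.
ll_closed = invgauss.logpdf(data, mu_hat, loc=loc_hat, scale=scale_hat).sum()
ll_fit = invgauss.logpdf(data, mu_fit, loc=loc_fit, scale=scale_fit).sum()
print(mu_hat, scale_hat)
print(mu_fit, scale_fit)
print(ll_closed - ll_fit)
```

Depending on the SciPy version, `fit` may itself use a closed form when `floc` is given. In that case the two results should agree up to rounding.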

If the user doesn’t pass in `floc=0`, then you could at least use the analytical solution above (assuming `floc=0`) as a guess for the superclass `fit` method. You might try following @WarrenWeckesser’s argument here about `weibull_min` to see if it applies to this distribution.

@mdhaber @WarrenWeckesser Thanks for the pointers! I really appreciate it. I’ll create a PR for this soon.
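The maintainers' other suggestion was to use the `floc=0` closed form only as a starting guess when `loc` is left free. That could be sketched as follows. The sketch assumes `rv_continuous.fit` accepts starting values for the shape positionally and for `loc` and `scale` as keywords. The sample parameters and seed are arbitrary. It does not show that the guess speeds up the fit. It only checks that the optimizer does not end up worse than its starting point.

```python
import numpy as np
from scipy.stats import invgauss

rng = np.random.default_rng(1)
# Sample with a nonzero loc, so assuming loc = 0 is only a rough guess.
data = invgauss.rvs(0.5, loc=1.0, scale=3.0, size=5_000, random_state=rng)

# Closed-form estimates that pretend loc = 0 (starting point only).
mean = data.mean()
lam = len(data) / np.sum(1.0 / data - 1.0 / mean)
mu0, loc0, scale0 = mean / lam, 0.0, lam

# Pass them as starting values; loc and scale are still free to move.
mu_fit, loc_fit, scale_fit = invgauss.fit(data, mu0, loc=loc0, scale=scale0)


def loglik(mu, loc, scale):
    """Total log-likelihood of the sample under invgauss(mu, loc, scale)."""
    return invgauss.logpdf(data, mu, loc=loc, scale=scale).sum()


print((mu0, loc0, scale0), loglik(mu0, loc0, scale0))
print((mu_fit, loc_fit, scale_fit), loglik(mu_fit, loc_fit, scale_fit))
```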