OOM errors in streaming pipeline

Scio version: 0.4.7 / 0.5.0-beta1

The following Scio pipeline throws out-of-memory (OOM) errors at a traffic volume of about 65K messages per second. Increasing the VM size or count only delays the OOM errors.

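    c.customInput("Read From Pubsub", PubsubIO
        .readMessages()
        .withIdAttribute("id")
        .withTimestampAttribute("ts")
        .fromTopic("topic"))
      // 2-second fixed windows; event time comes from the "ts" attribute
      .withFixedWindows(Duration.standardSeconds(2))
      // drop elements whose name is missing (or whose getter throws)
      .filter(e => Try(e.getName != null).getOrElse(false))
      .map { e =>
        val name = e.getName.toString
        (name, 1L)
      }
      // count occurrences of each name within each window
      .sumByKey
      // pair each (name, count) with the window it belongs to
      .withWindow[IntervalWindow]
      .map(transformNameStreamsWithWindow)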

    // Builds the row key "<name>:<window end millis>" and a single SetCell
    // mutation holding the count as an 8-byte long.
    def transformNameStreamsWithWindow(nameStreams: ((String, Long), IntervalWindow))
        : (ByteString, Iterable[Mutation]) = {
      val ((name, streams), window) = nameStreams
      val windowMillis = window.end().getMillis
      val key = s"$name:$windowMillis"
      val mutation = Mutations.newSetCell(
        familyName = FAMILY_NAME,
        columnQualifier = COLUMN_QUALIFIER,
        value = ByteString.copyFrom(Longs.toByteArray(streams))
      )
      (ByteString.copyFromUtf8(key), Iterable(mutation))
    }
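For readers less familiar with Scio, the per-window counting the pipeline performs (drop null names, pair each name with 1L, sum per key inside each 2-second fixed window) can be sketched in plain Java with only the standard library. This is a simplified in-memory model, not Beam or Scio code: `Event`, `WindowedNameCount` and their methods are hypothetical names, windows are assumed to be aligned to the epoch with zero offset (the `FixedWindows` default), and there is no watermark or late-data handling. At about 65K messages per second, each 2-second window sees roughly 130K elements before aggregation.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical stand-in for one decoded Pub/Sub message: a name (may be null)
// and the event time from the "ts" attribute, in epoch millis.
final class Event {
    final String name;
    final long tsMillis;

    Event(String name, long tsMillis) {
        this.name = name;
        this.tsMillis = tsMillis;
    }
}

final class WindowedNameCount {
    // Same size as Duration.standardSeconds(2).
    static final long WINDOW_MILLIS = 2_000L;

    // Start of the epoch-aligned fixed window that contains tsMillis.
    static long windowStart(long tsMillis) {
        return tsMillis - Math.floorMod(tsMillis, WINDOW_MILLIS);
    }

    // Mirrors filter -> map(name, 1L) -> sumByKey, grouped by window start.
    static Map<Long, Map<String, Long>> countPerWindow(List<Event> events) {
        Map<Long, Map<String, Long>> result = new TreeMap<>();
        for (Event e : events) {
            if (e.name == null) {
                continue; // equivalent of the null-name filter
            }
            long start = windowStart(e.tsMillis);
            result.computeIfAbsent(start, k -> new TreeMap<>())
                  .merge(e.name, 1L, Long::sum);
        }
        return result;
    }
}
```

In the real pipeline the per-key sums are computed by Beam's combiner machinery across workers; the sketch only shows which elements end up in which (window, name) bucket.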

The equivalent Java (Apache Beam) pipeline works fine:

    final PubsubIO.Read<PubsubMessage> pubsubRead = PubsubIO
        .readMessages()
        .withIdAttribute("id")
        .withTimestampAttribute("ts")
        .fromTopic("topic");

    pipeline.apply("Read from", pubsubRead)
        .apply("Window Fixed",
            Window.into(FixedWindows.of(Duration.standardSeconds(2))))
        .apply("Names", ParDo.of(new GetNames()))
        .apply(Sum.<String>longsPerKey())
        .apply(ParDo.of(new CreateMutation()));

    private static class GetNames extends DoFn<EndSong, KV<String, Long>> {
      private static final long serialVersionUID = 1;

      @ProcessElement
      public void processElement(ProcessContext c) {
        final EndSong e = c.element();
        // emit (name, 1L) only for elements that carry a name
        if (e.getName() != null) {
          final KV<String, Long> kv = KV.of(e.getName().toString(), 1L);
          c.output(kv);
        }
      }
    }

    private static class CreateMutation
        extends DoFn<KV<String, Long>, KV<ByteString, Iterable<Mutation>>> {
      private static final long serialVersionUID = 1;

      @ProcessElement
      public void process(ProcessContext c, BoundedWindow window) {
        final long millis = window.maxTimestamp().getMillis();
        final String key = c.element().getKey() + ":" + millis;
        final Long value = c.element().getValue();
        final Mutation mutation = Mutations.newSetCell(
            FAMILY_NAME,
            COLUMN_QUALIFIER,
            ByteString.copyFrom(Longs.toByteArray(value)));
        c.output(KV.<ByteString, Iterable<Mutation>>of(
            ByteString.copyFromUtf8(key),
            ImmutableList.<Mutation>of(mutation)));
      }
    }
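One difference between the two versions is easy to miss: the Scala helper builds the row key from `window.end()`, while the Java `CreateMutation` uses `window.maxTimestamp()`. For a Beam `IntervalWindow`, `maxTimestamp()` is `end()` minus 1 ms, so the two pipelines write the same count under row keys that differ by one millisecond. The sketch below models both key forms and the 8-byte big-endian cell value using only the standard library; `RowKeys` and its methods are hypothetical names, and `ByteBuffer` stands in for Guava's `Longs.toByteArray`, which produces the same big-endian bytes.

```java
import java.nio.ByteBuffer;

final class RowKeys {
    // Key form used by the Java DoFn: "<name>:<maxTimestamp millis>",
    // where maxTimestamp() == end() - 1 ms for an interval window.
    static String keyFromMaxTimestamp(String name, long windowEndMillis) {
        return name + ":" + (windowEndMillis - 1);
    }

    // Key form used by the Scala helper: "<name>:<end millis>".
    static String keyFromEnd(String name, long windowEndMillis) {
        return name + ":" + windowEndMillis;
    }

    // 8-byte big-endian encoding of the count (ByteBuffer defaults to big-endian).
    static byte[] encodeCount(long count) {
        return ByteBuffer.allocate(Long.BYTES).putLong(count).array();
    }
}
```

For a window ending at 2000 ms, the Scala version writes `a:2000` and the Java version writes `a:1999`; this does not explain the OOM, but it matters when comparing the two pipelines' output tables.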

To get a heap dump, run the pipeline on Dataflow with the --dumpHeapOnOOM flag. It may take a few runs before a heap dump is written.

The heap dump location is reported in the worker logs:

    jsonPayload: {
      job: "2018-02-20_15_01_56-4938495852979082999"
      logger: "com.google.cloud.dataflow.worker.StreamingDataflowWorker"
      message: "Execution of work for S0 for key cf5e9028c0d6445eb96d6c86bd3f71f6 failed with out-of-memory. Will not retry locally. Heap dump written to '/var/log/dataflow/heap_dump.hprof'."
      stage: "S0"
      thread: "316"
      work: "cf5e9028c0d6445eb96d6c86bd3f71f6-6846990601398222167"
      worker: "newrealease-test-02201501-d21b-harness-fbw5"
    }
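When several workers fail, the heap dump path can be pulled out of each log message instead of being read by eye. A minimal sketch, assuming the message wording shown in the log entry above ("Heap dump written to '<path>'"); other worker versions may phrase it differently, and `HeapDumpPath` is a hypothetical helper.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class HeapDumpPath {
    // Captures the quoted path after "Heap dump written to".
    private static final Pattern PATH =
        Pattern.compile("Heap dump written to '([^']+)'");

    // Returns the dump path if the message mentions one.
    static Optional<String> find(String logMessage) {
        Matcher m = PATH.matcher(logMessage);
        return m.find() ? Optional.of(m.group(1)) : Optional.empty();
    }
}
```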

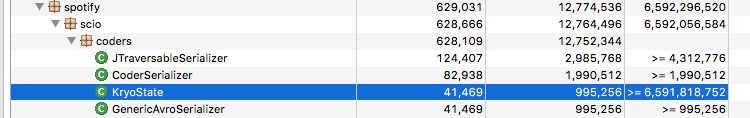

The heap dump seems to show a large number of KryoState objects.

The issue was created 6 years ago and has 15 comments (9 by maintainers).


We’re using Beam pipelines to handle our streaming cases at the moment. I can bump the version, try a Scio pipeline and let you know what happens.

Closing this now since it was fixed in #1143.