Sampling with tfq.layers.Sample() produces the same outputs

Python 3.7, TensorFlow 2.3.1, TensorFlow Quantum 0.4.0

Hi,

I ran into something strange while trying to compute error bars on the estimators of some observables.

It seems that the cirq.Simulator() object handles random numbers differently from the default tfq.layers.Sample() backend.

Expected behavior

Calling tfq.layers.Sample() twice on the same circuit within a script should give me different bitstrings.

Running the same script, which samples from a circuit, multiple times should give different bitstrings on each run.

Actual behavior

Executing the script multiple times, at different times, gave exactly the same set of bitstrings. Also, reinitializing the sampling layer within a script can give the same set of bitstrings, depending on which backend is used.

Here is a minimal working example illustrating the problem:

```python
import tensorflow_quantum as tfq
import tensorflow as tf
import cirq
import numpy as np

CASE = 'tfq'


def sample_generator(circuits_tf, repetitions, nqubits):
    # take tfq backend or cirq backend
    if CASE == 'tfq':
        sample_layer = tfq.layers.Sample()
    elif CASE == 'cirq':
        sample_layer = tfq.layers.Sample(cirq.Simulator())
    # create generator from sample_layer outputs, repeat `repetitions` times.
    data_x = tf.reshape(sample_layer(circuits_tf, repetitions=repetitions).to_tensor(), (-1, nqubits))
    data_x = tf.unstack(data_x)
    for d in data_x:
        yield d


def main():
    # tf.random.set_seed('supcom')
    nqubits = 4
    nsamples = 100
    batch_size = 10
    # create a simple circuit
    qubits = cirq.GridQubit.rect(1, 4)
    circuit = cirq.Circuit()
    circuit.append(cirq.H.on_each(qubits))
    # convert to tfq tensor
    circuits_tf = tfq.convert_to_tensor([circuit])
    # create data generator
    sample_gen = tf.data.Dataset.from_generator(sample_generator, args=(circuits_tf, nsamples, nqubits),
                                                output_types=tf.int32)
    sample_gen = sample_gen.batch(batch_size)
    sample_gen = sample_gen.prefetch(3 * batch_size)
    # get samples and return them
    samples = []
    for batch in sample_gen:
        samples.append(batch.numpy())
    return samples


if __name__ == '__main__':
    samples_1 = main()
    samples_2 = main()
    for s1, s2 in zip(samples_1, samples_2):
        # print(s1, s2)
        print(np.allclose(s1, s2))
```

I consider the following cases:

1.) CASE=='tfq'

Calling main() twice gives a different set of 100 bitstrings for samples_1 and samples_2 (only False is printed in the final line).

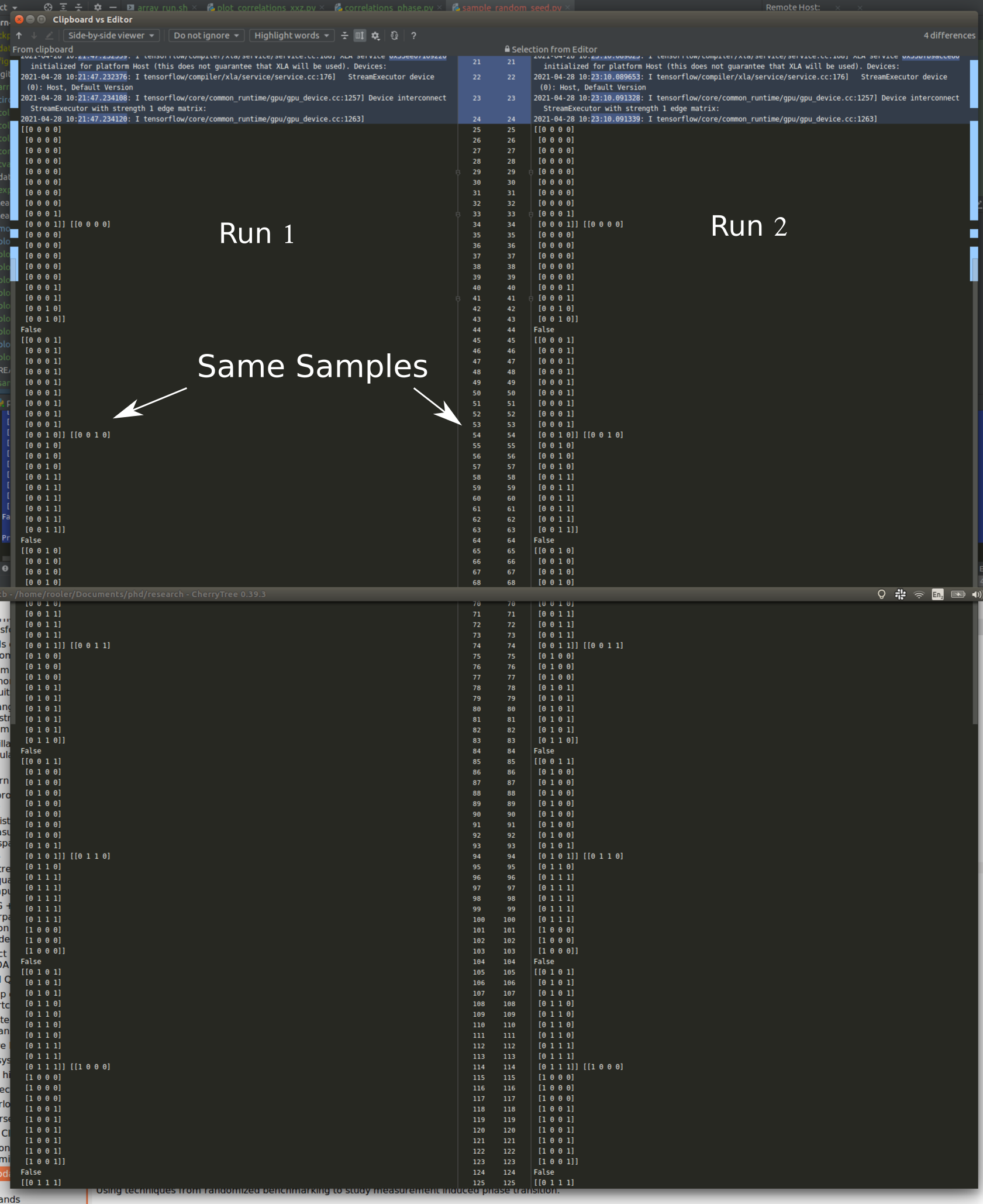

2.) CASE=='tfq', two separate script executions.

Again, different bitstrings for samples_1 and samples_2, but the two scripts give the same output:

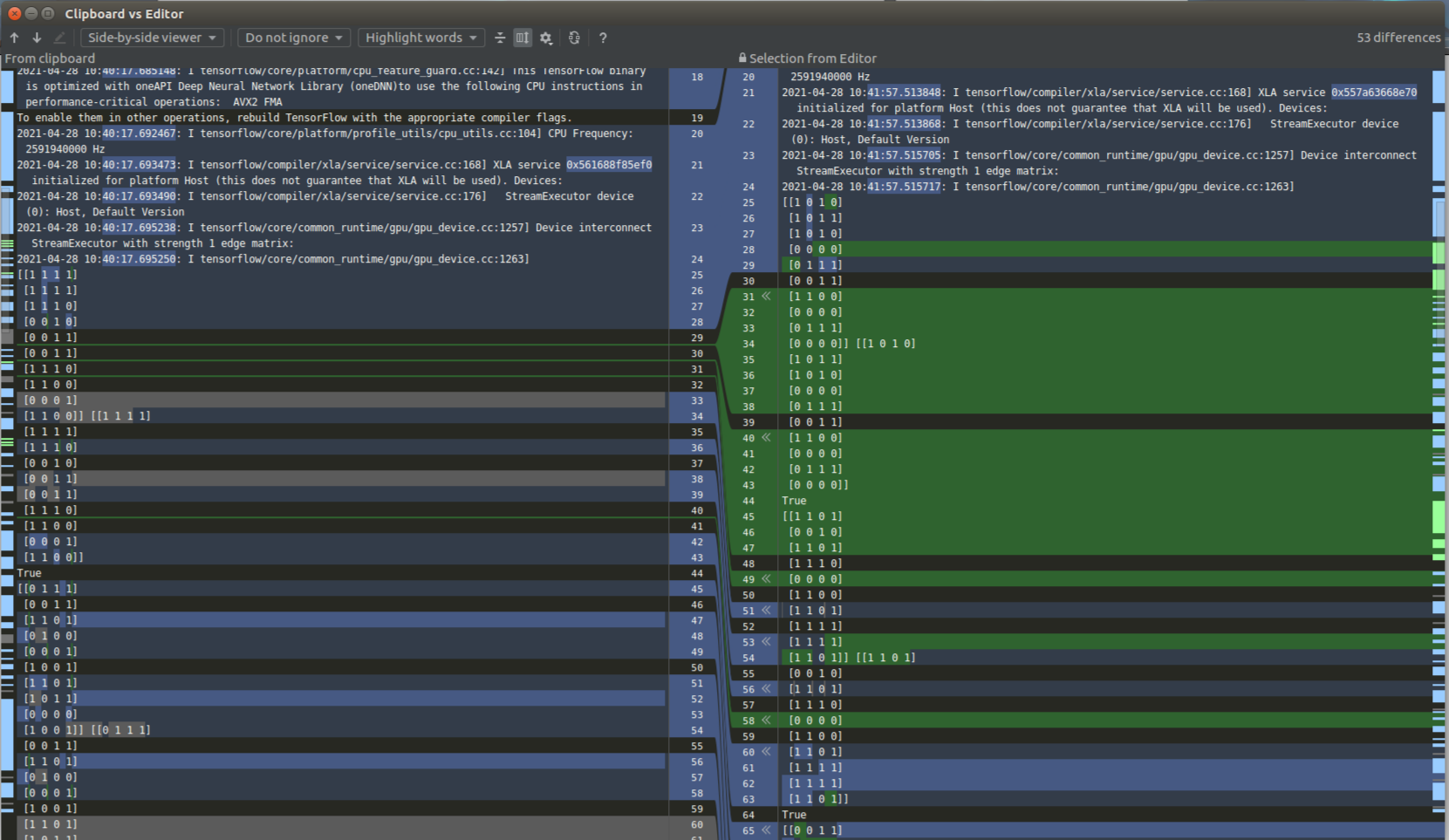

3.) CASE=='cirq'

Calling main() twice gives exactly the same 100 bitstrings for samples_1 and samples_2 (only True is printed in the final line).

4.) CASE=='cirq', two separate script executions.

Again, the bitstrings for samples_1 and samples_2 are equal, but now the two scripts give different outputs.

(All of this is unaffected by changing the random seed in Tensorflow between scripts.)

From this I deduce that in TFQ, initializing the sampling layer seeds a global random number generator once, with a fixed seed that does not depend on the time at which the script is executed, and this generator is reused for all subsequent calls. This would explain 1.) and 2.). With the Cirq backend, on the other hand, the random number generator is reinitialized whenever the sampling layer is initialized, but its seed depends on the time at which the script is executed, which would explain 3.) and 4.).
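The two behaviors deduced above can be modeled with plain NumPy. This is a hypothetical sketch of the hypothesis, not the actual TFQ or Cirq implementation: the class names FixedSeedGlobalSampler and ClockSeededPerLayerSampler, the constant seed 0, and the clock-based per-process seed are all assumptions made for illustration. Each run_script call stands in for one execution of the script above, in which main() is called twice against the same process state.

```python
import time

import numpy as np

# Hypothetical model of the deduced behavior; NOT the actual TFQ or Cirq code.


class FixedSeedGlobalSampler:
    """Models CASE == 'tfq': one generator per process, seeded with a constant."""

    def __init__(self, fixed_seed=0):
        # Created once per process and shared by every sampling layer.
        self.rng = np.random.RandomState(fixed_seed)

    def sample(self, repetitions, nqubits):
        # Each call advances the shared generator, so successive calls differ.
        return self.rng.randint(0, 2, size=(repetitions, nqubits))


class ClockSeededPerLayerSampler:
    """Models CASE == 'cirq': seed fixed per process, fresh generator per layer."""

    def __init__(self, process_seed=None):
        # Assumption: the seed is taken from the clock when the script starts.
        if process_seed is None:
            process_seed = time.time_ns() % 2**32
        self.process_seed = process_seed

    def sample(self, repetitions, nqubits):
        # Every new layer re-seeds from the same process seed, so calls repeat.
        rng = np.random.RandomState(self.process_seed)
        return rng.randint(0, 2, size=(repetitions, nqubits))


def run_script(sampler, repetitions=100, nqubits=4):
    """Stands in for one script execution: main() is called twice."""
    samples_1 = sampler.sample(repetitions, nqubits)
    samples_2 = sampler.sample(repetitions, nqubits)
    return samples_1, samples_2


if __name__ == '__main__':
    # Cases 1.) and 2.): differ within a script, repeat across scripts.
    a1, a2 = run_script(FixedSeedGlobalSampler())
    b1, b2 = run_script(FixedSeedGlobalSampler())
    print(np.array_equal(a1, a2), np.array_equal(a1, b1))  # False True
    # Cases 3.) and 4.): repeat within a script, differ across scripts
    # (two different process seeds stand in for two launch times).
    c1, c2 = run_script(ClockSeededPerLayerSampler(process_seed=1))
    d1, d2 = run_script(ClockSeededPerLayerSampler(process_seed=2))
    print(np.array_equal(c1, c2), np.array_equal(c1, d1))  # True False
```

The model only reproduces the pattern of True/False results in cases 1.) to 4.); it says nothing about where the generators actually live inside TFQ or Cirq.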

I fixed my problems by passing a time-dependent seed to the cirq.Simulator() object.

Is this expected behavior, given the way I coded this up? Is there a better way to sample bitstrings from the circuit that ensures I get different bitstrings every time I call the sampling layer?

Best, Roeland


Using tfq-nightly-0.5.0.dev20210504, I get the same behavior as in my previous comment. But this is what we expect, right?

1.) Within the same script, calling the sampler twice gives two chains of different samples.

2.) Executing the same script twice gives different samples.

So we never end up with the same bitstrings.

Yes, it is what we expect; I just wanted to make sure the last changes hadn't re-introduced any bad behavior. I think this is safe to close now.