[Pusher][AI Platform Training & Prediction] Unexpected model deploy status

See original GitHub issueWhile going though your tutorial link, I have encountered following error in Kubeflow logs console:

Traceback (most recent call last):

File "/tfx-src/tfx/orchestration/kubeflow/container_entrypoint.py", line 371, in <module>

main()

File "/tfx-src/tfx/orchestration/kubeflow/container_entrypoint.py", line 364, in main

execution_info = launcher.launch()

File "/tfx-src/tfx/orchestration/launcher/base_component_launcher.py", line 205, in launch

execution_decision.exec_properties)

File "/tfx-src/tfx/orchestration/launcher/in_process_component_launcher.py", line 67, in _run_executor

executor.Do(input_dict, output_dict, exec_properties)

File "/tfx-src/tfx/extensions/google_cloud_ai_platform/pusher/executor.py", line 91, in Do

executor_class_path,

File "/tfx-src/tfx/extensions/google_cloud_ai_platform/runner.py", line 253, in deploy_model_for_aip_prediction

name='{}/versions/{}'.format(model_name, deploy_status['response']

KeyError: 'response'

AI Platform model version shows error (without any further explanation):

Create Version failed. Bad model detected with error: "Failed to load model: a bytes-like object is required, not 'str' (Error code: 0)"

I have no further possibility to debug this with above messages. Is your tutorial incomplete?

Issue Analytics

- State:

- Created 4 years ago

- Comments:10 (5 by maintainers)

Top Results From Across the Web

Top Results From Across the Web

Deploying models | AI Platform Prediction | Google Cloud

This page explains how to deploy your model to AI Platform Prediction to get predictions. In order to deploy your trained model on...

Read more >GCP AI platform Custom prediction routine fails to load model ...

I'm currently trying to deploy a model to GCP AI platform using ... to load model: Unexpected error when loading the model: 92...

Read more >Retraining Model During Deployment: Continuous Training ...

There are several ways to productionalize your machine learning models, such as model-as-service, model-as-dependency, and batch predictions (precompute serving) ...

Read more >3 facts about time series forecasting that surprise experienced ...

For most ML models, you train a model, test it, retrain it if necessary until you've gotten satisfactory results, and then evaluate it...

Read more >Deployment prediction and training data export for custom ...

Select the deployment's model, current or previous, to export prediction data for. Range (UTC), Select the start and end dates of the period...

Read more > Top Related Medium Post

Top Related Medium Post

No results found

Top Related StackOverflow Question

Top Related StackOverflow Question

No results found

Troubleshoot Live Code

Troubleshoot Live Code

Lightrun enables developers to add logs, metrics and snapshots to live code - no restarts or redeploys required.

Start Free Top Related Reddit Thread

Top Related Reddit Thread

No results found

Top Related Hackernoon Post

Top Related Hackernoon Post

No results found

Top Related Tweet

Top Related Tweet

No results found

Top Related Dev.to Post

Top Related Dev.to Post

No results found

Top Related Hashnode Post

Top Related Hashnode Post

No results found

It turns out that even if we used TF 1.15 while running TFX, the base container image which is used for training had TF2. And the model had some TF2 ops. Full error messages:

So there are two possible work-arounds.

Using TF1 for training

We uses a custom container image for training. This is the image we specify in

build_target_imageflag and contains all model codes in it. This image is built automatically when we create or update the pipeline.Dockerfilefor this image is generated in the working directory when we first create the pipeline. And you can safely modify this Dockerfile.tfxCLI doesn’t touch the file if it already exists.Open

Dockerfileand addRUN pip install tensorflow==1.15like below:And update the pipeline and run again. If you are using a pipeline which already ran some training, Trainer will try to use cached result rather than do the training again. So it might be need to set

enable_cacheofPipelineobject toFalsein pipeline.py:173.Debugging a model deployment

FYI, you can debug your deploy using Stream logging in Prediction service. It is not supported in TFX yet, and you should use

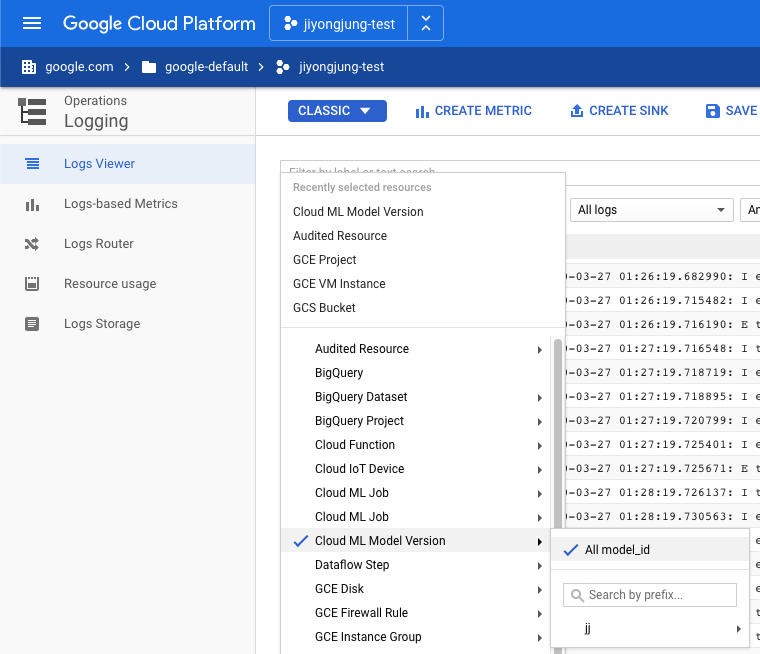

gcloud(or REST API) in your local terminal environment to deploy your model. For example,See also documentation in Google Cloud. Then you can see detailed log in Google Cloud Console

This problem will be fixed when we can use 2.1 CAIP Prediction runtime in next version of TFX.

Thank you for raising this issue.

Python: 3.7.6 TF: 2.1.0 TFX: 0.21.0 KFP: 0.2.5 Region: us-central1