[BUG] PyTorch causes Live to lose color

Hi. I noticed that importing PyTorch while using Live causes everything to turn black and white.

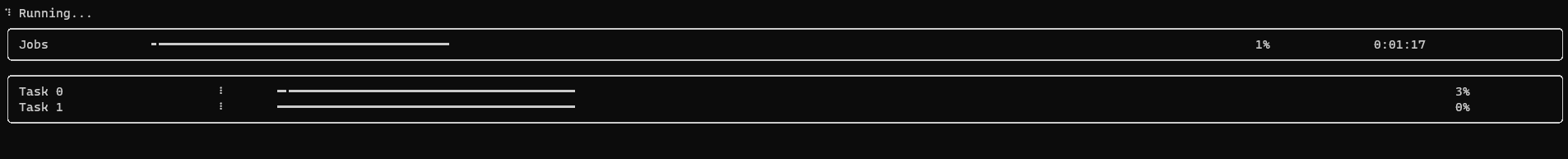

The image below shows the output with torch imported.

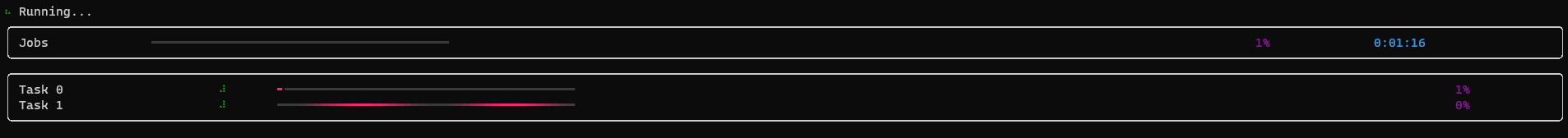

And this is the expected output.

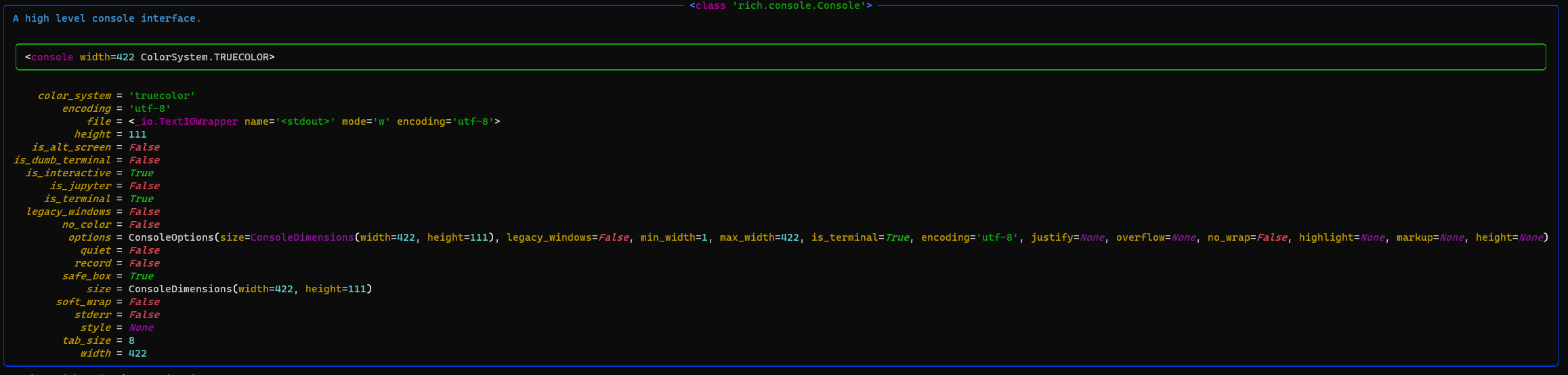

python -m rich.diagnose output:

python -m rich._windows output:

platform="Windows"

WindowsConsoleFeatures(vt=True, truecolor=True)

pip freeze output:

rich==10.1.0

How should I fix this?

I see what has happened. Torch has initialized colorama for some reason, which wraps stdout. Colorama isn’t needed in Windows Terminal.

Try adding the following directly after the import torch:

Thanks! It works now. Man, you're good.
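The fix described in the reply can be sketched as follows. This is a minimal sketch, assuming the intent was to undo colorama's wrapping of stdout by calling `colorama.deinit()`, which restores the streams that `colorama.init()` replaced. The helper name `undo_colorama_wrapping` is hypothetical and not part of Rich, PyTorch, or colorama.

```python
import sys


def undo_colorama_wrapping() -> bool:
    """Restore the original stdout/stderr if colorama is available.

    Returns True if colorama was importable and deinit() was called,
    False if colorama is not installed (so nothing could have wrapped
    the streams through it).
    """
    try:
        import colorama
    except ImportError:
        return False
    # deinit() puts back the streams saved by colorama.init();
    # it is a no-op if init() was never called.
    colorama.deinit()
    return True


# In the real script this call would go directly after `import torch`
# and before any Rich Live display is created.
undo_colorama_wrapping()
```

Calling the helper before constructing the Live display matters because Rich would otherwise write to whatever `sys.stdout` is at that point, which may still be the wrapped stream.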