fast-stable-diffusion is not utilizing xformers

We have found that fast-stable-diffusion is not utilizing xformers and is in fact slower than the default Colab for Automatic1111’s webui.

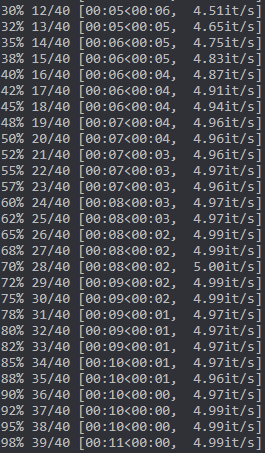

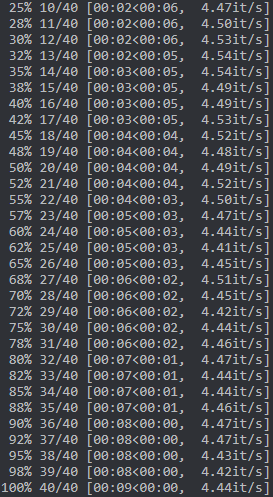

Base webui runs at around 5 it/s on a T4 w/o xformers (likely due to newer versions of dependencies)

Base fast-stable-diffusion runs around 0.5it/s slower than default on a T4

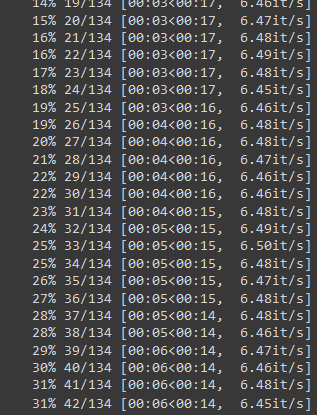

Fixed xformers implementation runs at 6.5 it/s on a T4

This is a simple fix: update the xformers wheel to 0.0.14 and add the --xformers launch option.

You can test this out using our fork’s T4 wheel if you’d like. Keep in mind that this only fixes the issue for T4s.

The actual wheel is xformers-0.0.14.dev0-cp37-cp37m-linux_x86_64.whl which we built ourselves on a T4.
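Before adding --xformers, it can help to confirm which xformers version is actually installed in the runtime, since 0.0.13 is reported in this thread to error during inference. The following is a minimal sketch, not part of fast-stable-diffusion or the webui: the helper names and the `MIN_VERSION` threshold are assumptions for illustration, and the comparison deliberately ignores suffixes such as `.dev0`.

```python
import re
from importlib import metadata

# Assumed threshold, taken from the 0.0.14 wheel mentioned in this issue.
MIN_VERSION = (0, 0, 14)


def parse_version(version):
    """Return the leading numeric parts, e.g. '0.0.14.dev0' -> (0, 0, 14).

    Any suffix such as '.dev0' is ignored, so a dev build of 0.0.14
    is treated the same as 0.0.14 itself.
    """
    match = re.match(r"(\d+)\.(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    return tuple(int(part) for part in match.groups())


def xformers_usable(version):
    """True if the given version string is at least MIN_VERSION."""
    return parse_version(version) >= MIN_VERSION


def installed_xformers_version():
    """Return the installed xformers version string, or None if absent."""
    try:
        return metadata.version("xformers")
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    found = installed_xformers_version()
    if found is None:
        print("xformers is not installed")
    elif xformers_usable(found):
        print(f"xformers {found} found; --xformers should be safe to try")
    else:
        print(f"xformers {found} is older than 0.0.14; update the wheel first")
```

Running this in a Colab cell after installing the wheel only reports the installed version. It does not verify that the wheel was built for the GPU in use.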

Issue Analytics

- State:

- Created 10 months ago

- Reactions:2

- Comments:5 (3 by maintainers)

Do note: xformers 0.0.13 does not work with the --xformers flag; it will error upon attempting the forward/step function during inference.

been having the same issue and i re-installed xformers the other day. cant run T4 without cuda errors and premium only gives me 2.5-2.7s/it. put command line args at --force-enable-xformers --no-half-vae and also --xformers just to be sure. but im using ‘export’ command and not ‘set’ after uncommenting the commands. surprised everything didnt melt down. i have no idea what im doing, just over here typing shit hoping i dont randomly explode