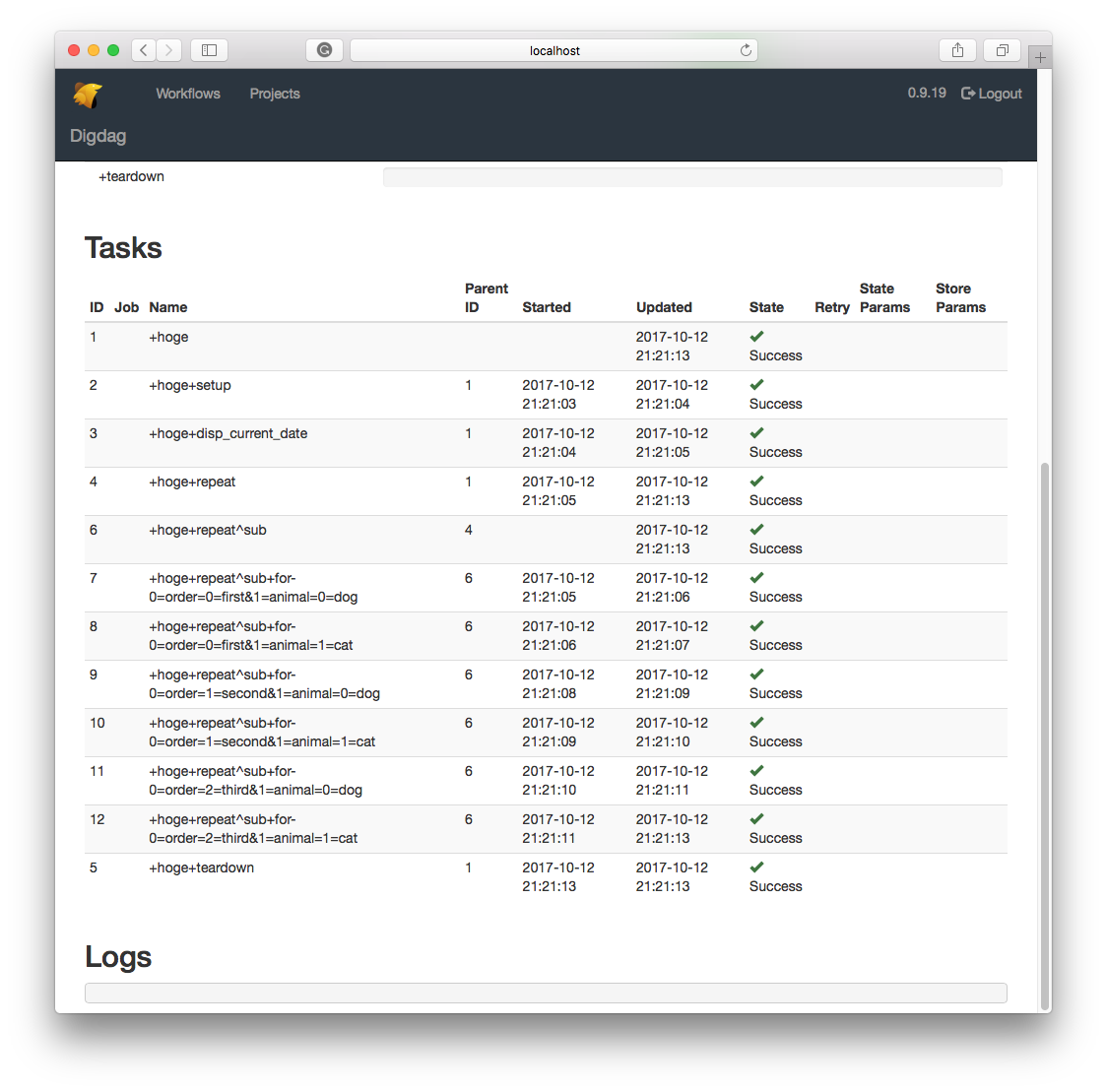

digdag-ui can't display task logs when the task-log store is S3.

Digdag 0.9.19


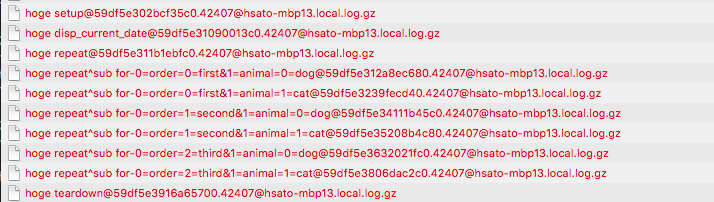

digdag-ui tries to fetch `%20hoge%20disp_current_date%4059df5e31090013c0.42407%40hsato-mbp13.local.log.gz`

(The decoded string is `hoge disp_current_date@59df5e31090013c0.42407@hsato-mbp13.local.log.gz`, with a leading space.)

This file name is wrong: every space needs to be replaced with `+`.
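One plausible mechanism, which the thread does not confirm, is that the key gets decoded somewhere as `application/x-www-form-urlencoded` data (where `+` means space) and is then percent-encoded again for the request path. The Java sketch below reproduces the exact URL from the report under that assumption. `PlusSignRoundTrip`, `formDecode`, and `pathEncode` are hypothetical names, and the stored file name is assumed to start with `+`, as the reporter describes. It needs Java 10+ for the `Charset` overloads.

```java
import java.net.URLDecoder;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

public class PlusSignRoundTrip {
    // Form-style (application/x-www-form-urlencoded) decoding: '+' turns into ' '.
    static String formDecode(String s) {
        return URLDecoder.decode(s, StandardCharsets.UTF_8);
    }

    // Approximate percent-encoding for a URL path segment. URLEncoder writes
    // spaces as '+', so swap those for "%20"; a literal '+' in the input has
    // already been encoded as "%2B" and is unaffected by the swap.
    static String pathEncode(String s) {
        return URLEncoder.encode(s, StandardCharsets.UTF_8).replace("+", "%20");
    }

    public static void main(String[] args) {
        // Log file name as stored, per the report (task names start with '+').
        String stored = "+hoge+disp_current_date@59df5e31090013c0.42407@hsato-mbp13.local.log.gz";

        // Assumed faulty path: decode as form data, then re-encode for the URL.
        System.out.println(pathEncode(formDecode(stored)));
        // prints %20hoge%20disp_current_date%4059df5e31090013c0.42407%40hsato-mbp13.local.log.gz

        // Encoding the stored name directly keeps each '+' as "%2B".
        System.out.println(pathEncode(stored));
        // prints %2Bhoge%2Bdisp_current_date%4059df5e31090013c0.42407%40hsato-mbp13.local.log.gz
    }
}
```

If this is what happens, the loss occurs at the decode step: once `+` has become a space, no later encoding can tell it apart from a real space.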

I’m not sure how to fix this properly yet, but the following ad-hoc patch fixes the issue.

```diff
diff --git a/digdag-storage-s3/src/main/java/io/digdag/storage/s3/S3Storage.java b/digdag-storage-s3/src/main/java/io/digdag/storage/s3/S3Storage.java
index 16255a0c7..e0d0842e7 100644
--- a/digdag-storage-s3/src/main/java/io/digdag/storage/s3/S3Storage.java
+++ b/digdag-storage-s3/src/main/java/io/digdag/storage/s3/S3Storage.java
@@ -195,12 +195,19 @@ public class S3Storage
     }
     @Override
-    public Optional<DirectDownloadHandle> getDirectDownloadHandle(String key)
+    public Optional<DirectDownloadHandle> getDirectDownloadHandle(String key2)
     {
+        String key;
+        key = key2.replaceAll(" ","+");
         GeneratePresignedUrlRequest req = new GeneratePresignedUrlRequest(bucket, key);
         req.setExpiration(Date.from(Instant.now().plusSeconds(10*60)));
         String url = client.generatePresignedUrl(req).toString();
         return Optional.of(DirectDownloadHandle.of(url));
     }
```
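The workaround in the patch can be isolated as a small helper. This is a hypothetical sketch: `KeyWorkaround` and `restorePlusSigns` are illustrative names, not Digdag code. It uses `String.replace(char, char)`, which swaps characters literally. `replaceAll`, used in the patch, treats its first argument as a regular expression. That is harmless for `" "`, but it is easy to trip over with other characters.

```java
public class KeyWorkaround {
    // Hypothetical helper equivalent to the ad-hoc patch: turn every space in the
    // requested key back into '+' before the key is presigned.
    static String restorePlusSigns(String key) {
        // replace(char, char) swaps characters literally; no regex involved.
        return key.replace(' ', '+');
    }

    public static void main(String[] args) {
        String requested = " hoge disp_current_date@59df5e31090013c0.42407@hsato-mbp13.local.log.gz";
        System.out.println(restorePlusSigns(requested));
        // prints +hoge+disp_current_date@59df5e31090013c0.42407@hsato-mbp13.local.log.gz
    }
}
```

The helper rewrites every space, so a key that legitimately contains a space would also be changed. It masks the decoding problem rather than fixing it.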

Relevant server configuration:

```
log-server.type: s3
log-server.s3.endpoint: http://xxx.xxx.xxx.xxx:9000
log-server.s3.bucket: log
log-server.s3.credentials.access-key-id: XXXXXXXXXXXXXXXXXXXX
log-server.s3.credentials.secret-access-key: YYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYYY
```

Issue created 6 years ago; 8 comments (6 by maintainers).


Hello, @David-Development

IIUC, digdag-ui requests the log with a `+` sign, not whitespace, when the task log server uses S3. However, it seems that digdag-ui requests the log with whitespace when the task log server uses MinIO. We need to look into this more deeply. I’ll try to find someone to investigate this issue.

@hiroyuki-sato thank you for the quick response!

The plan sounds good, but I still have a couple of questions. Task names are shown with a leading `+` sign, e.g. `+myworkflow+mytask...`, and the logs are stored on the S3 storage in the same format. Why is the UI requesting the logs with whitespace instead of the `+` sign? Wouldn't the same happen with AWS storage? (I don’t have an AWS account either, so I can’t verify this right now.) Or does AWS convert whitespace into `+` signs, which would explain why it works there but not on MinIO? I suspect some magic is going on in the AWS S3 storage backend, as described here and here.